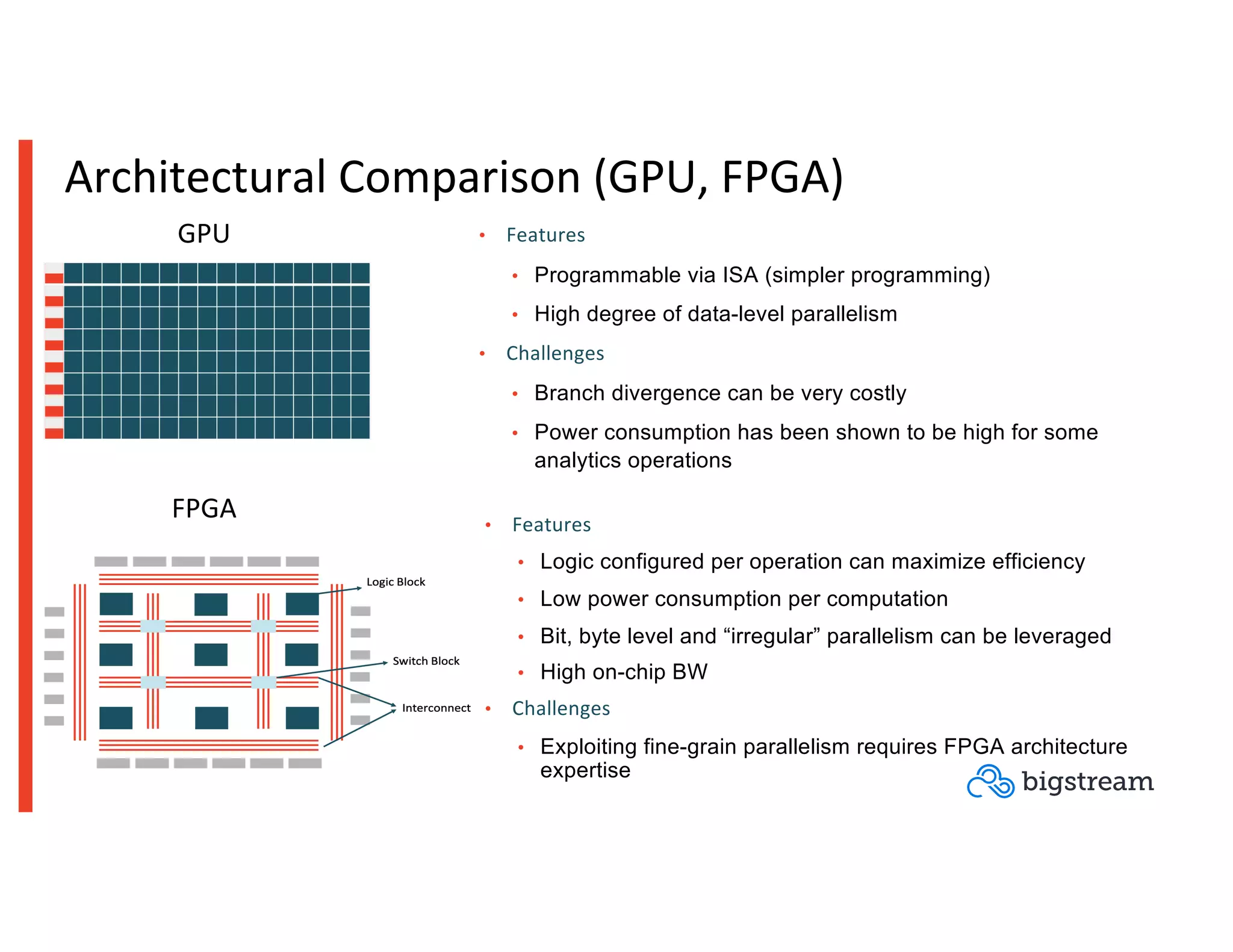

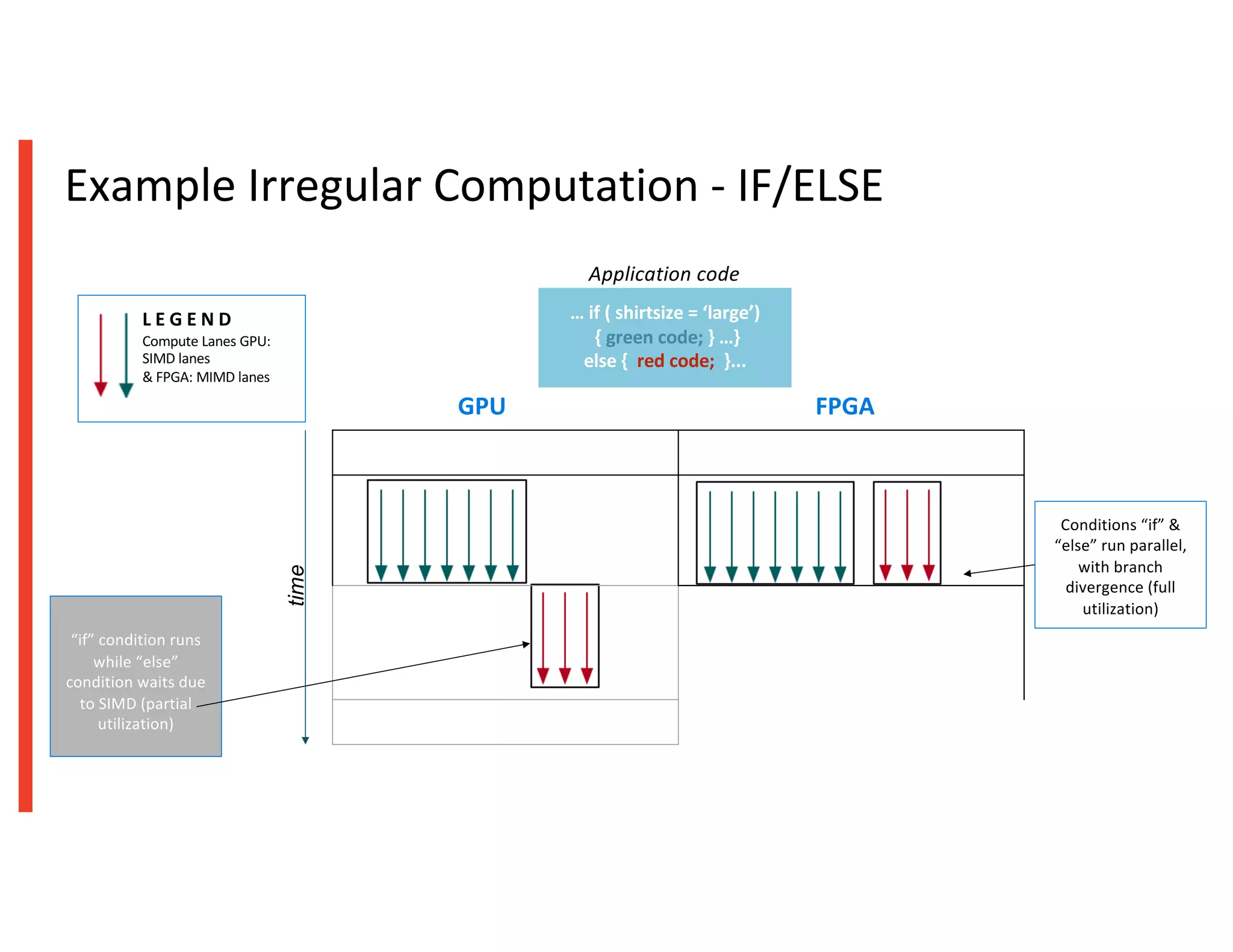

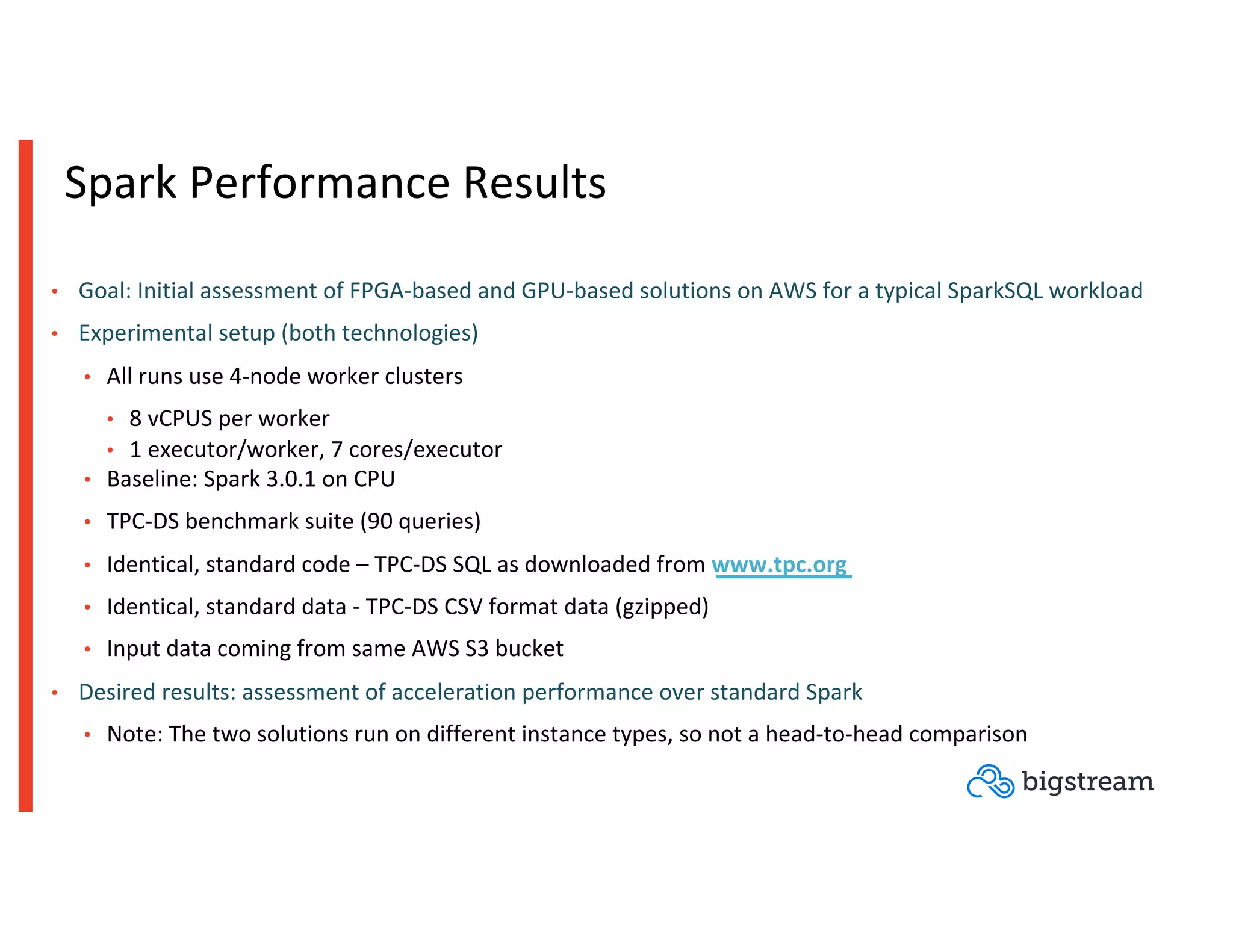

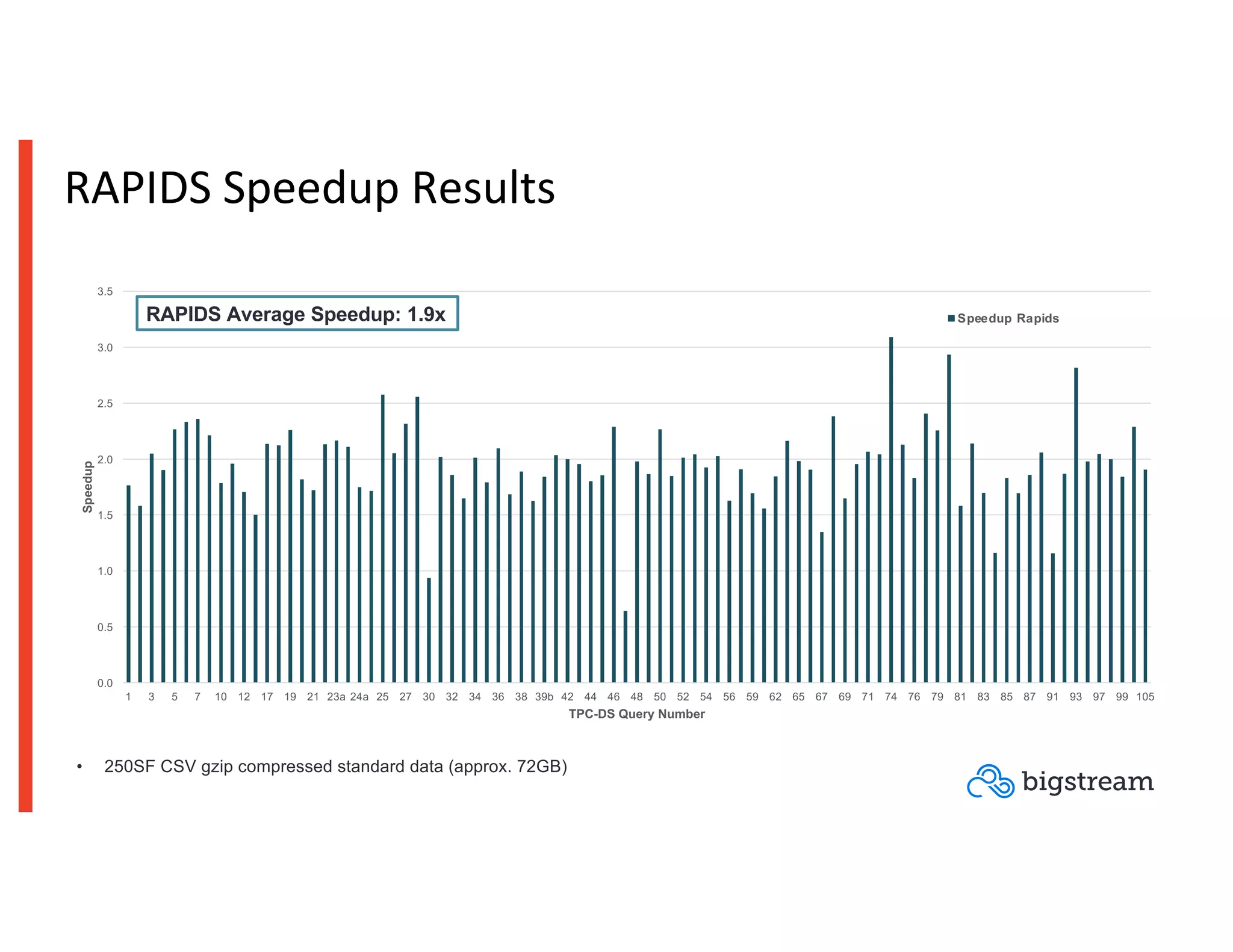

The document compares GPU-based and FPGA-based acceleration for Apache Spark, starting from the limitations of traditional CPU clusters in meeting big data demands. It surveys several solutions, including software acceleration with Bigstream, which is reported to deliver significantly higher average speedups than Spark 3.0, and describes how both GPU and FPGA technologies perform on analytics workloads. The findings suggest that FPGA acceleration can significantly improve Spark performance without requiring code changes, making it a viable option for handling growing data analytics needs.