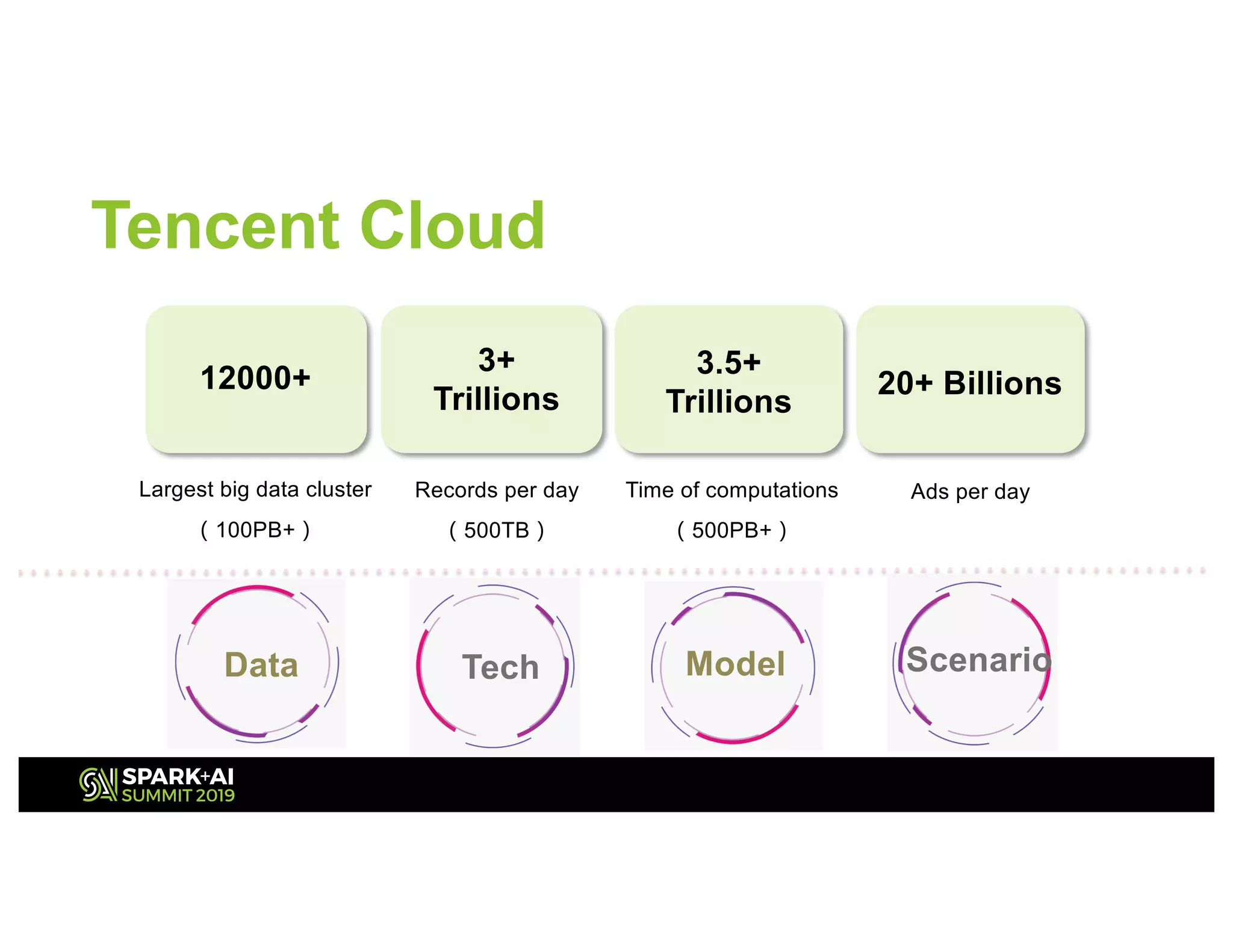

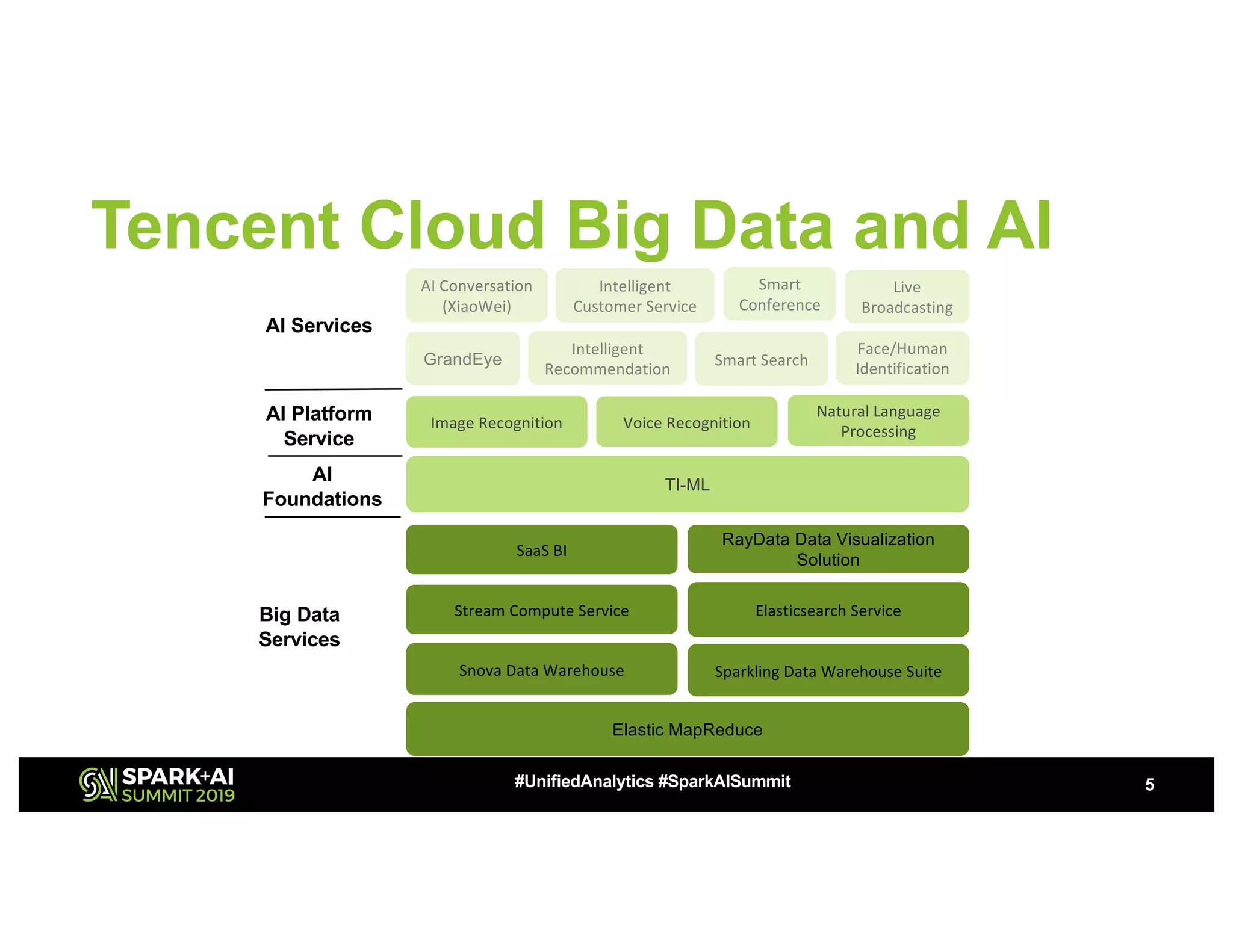

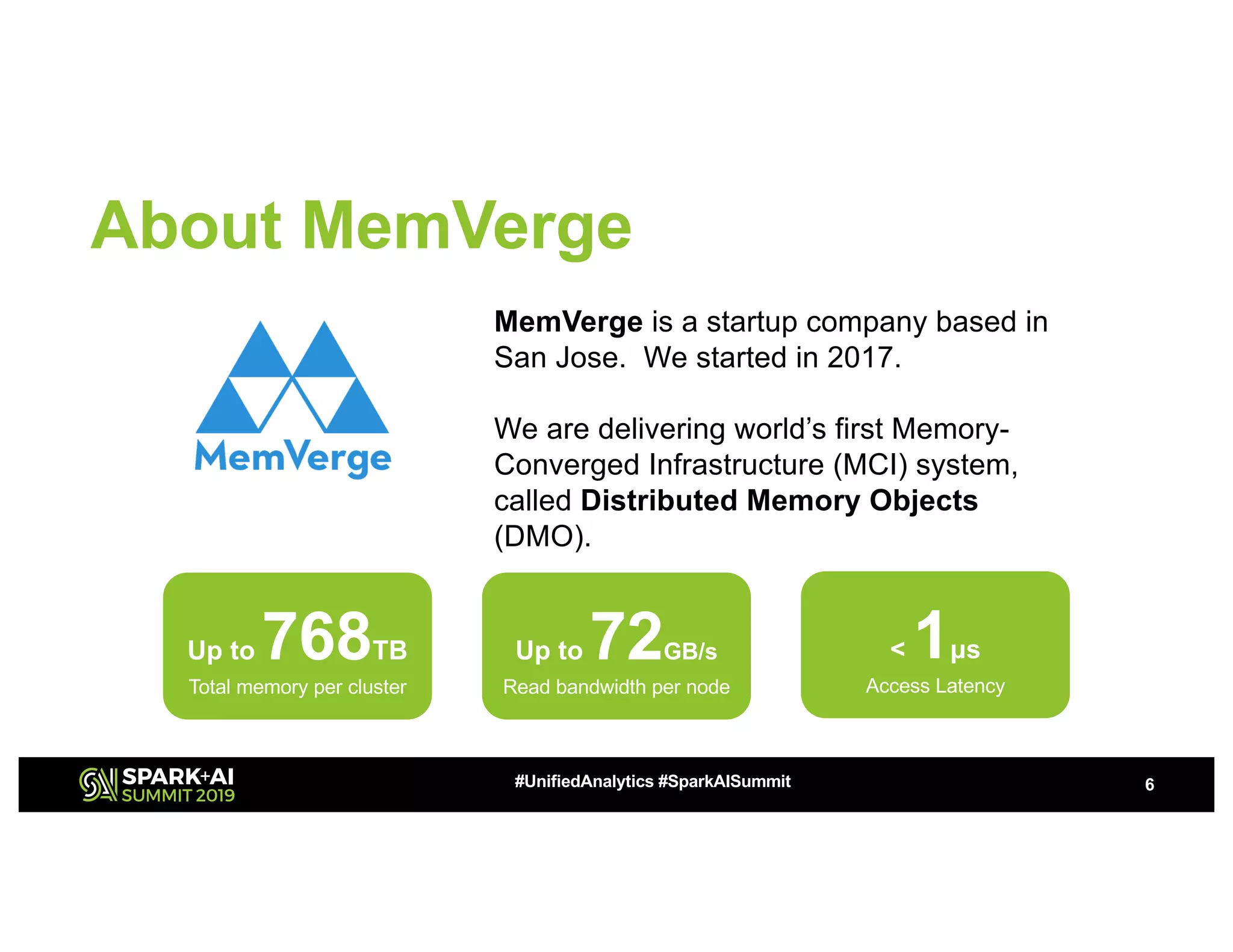

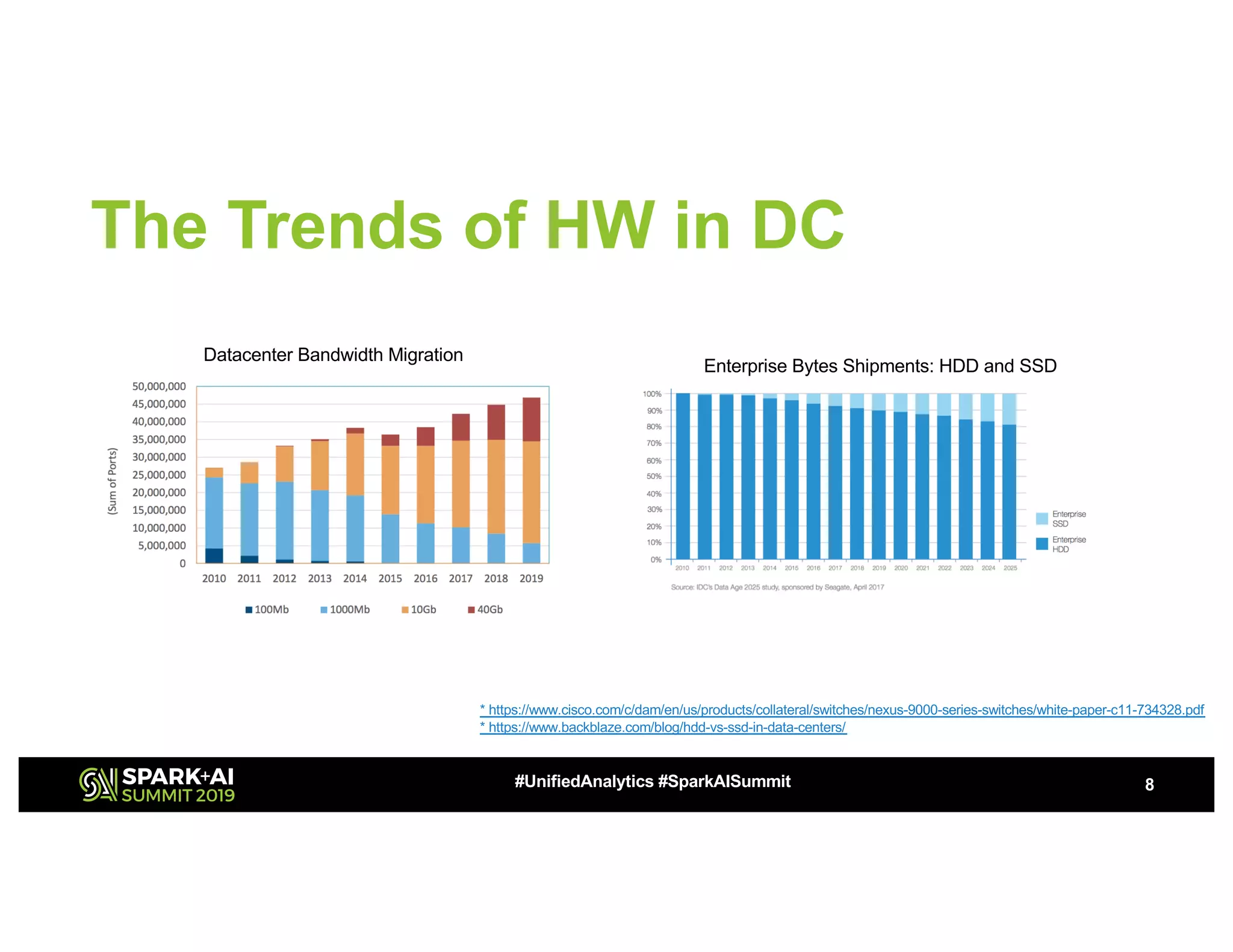

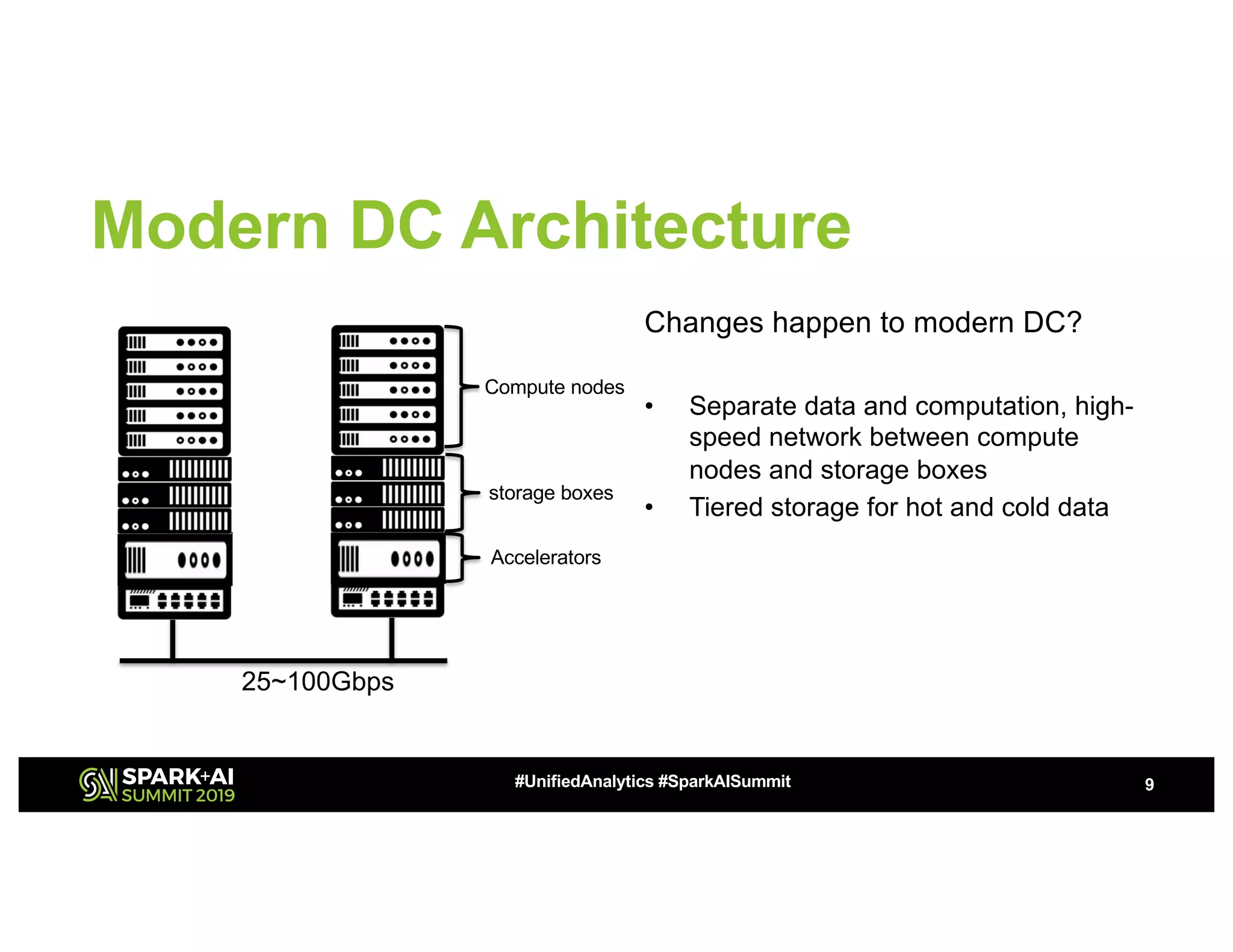

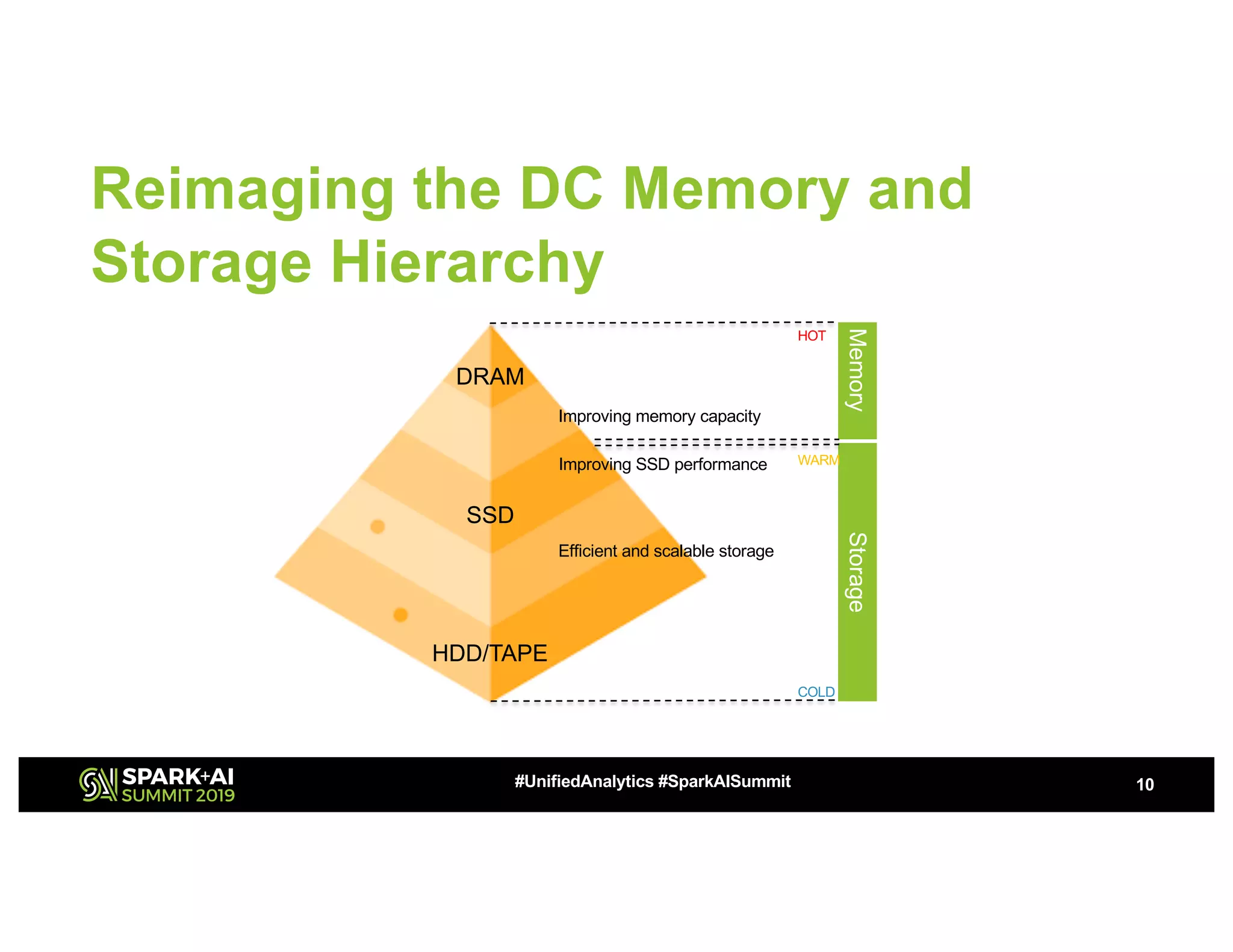

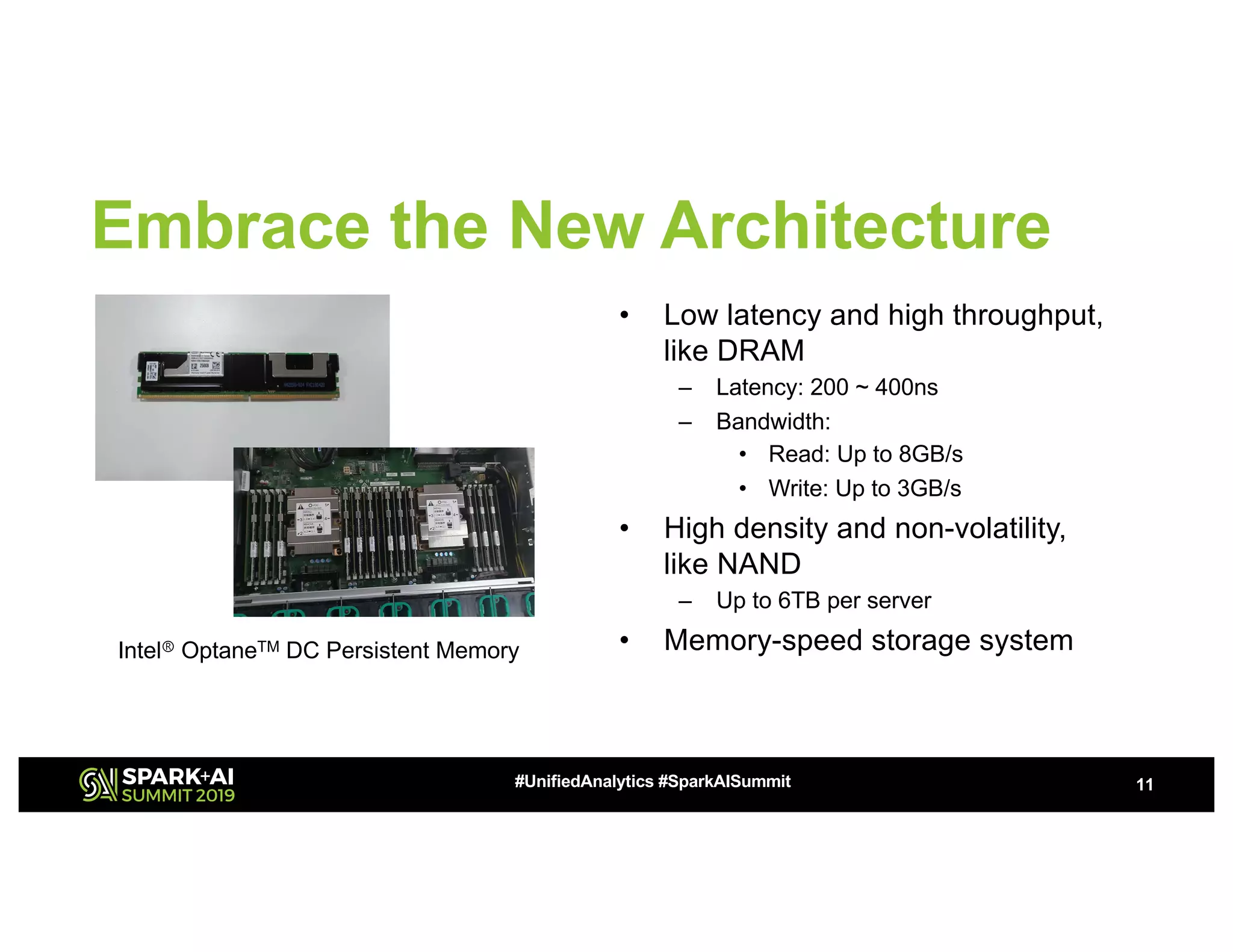

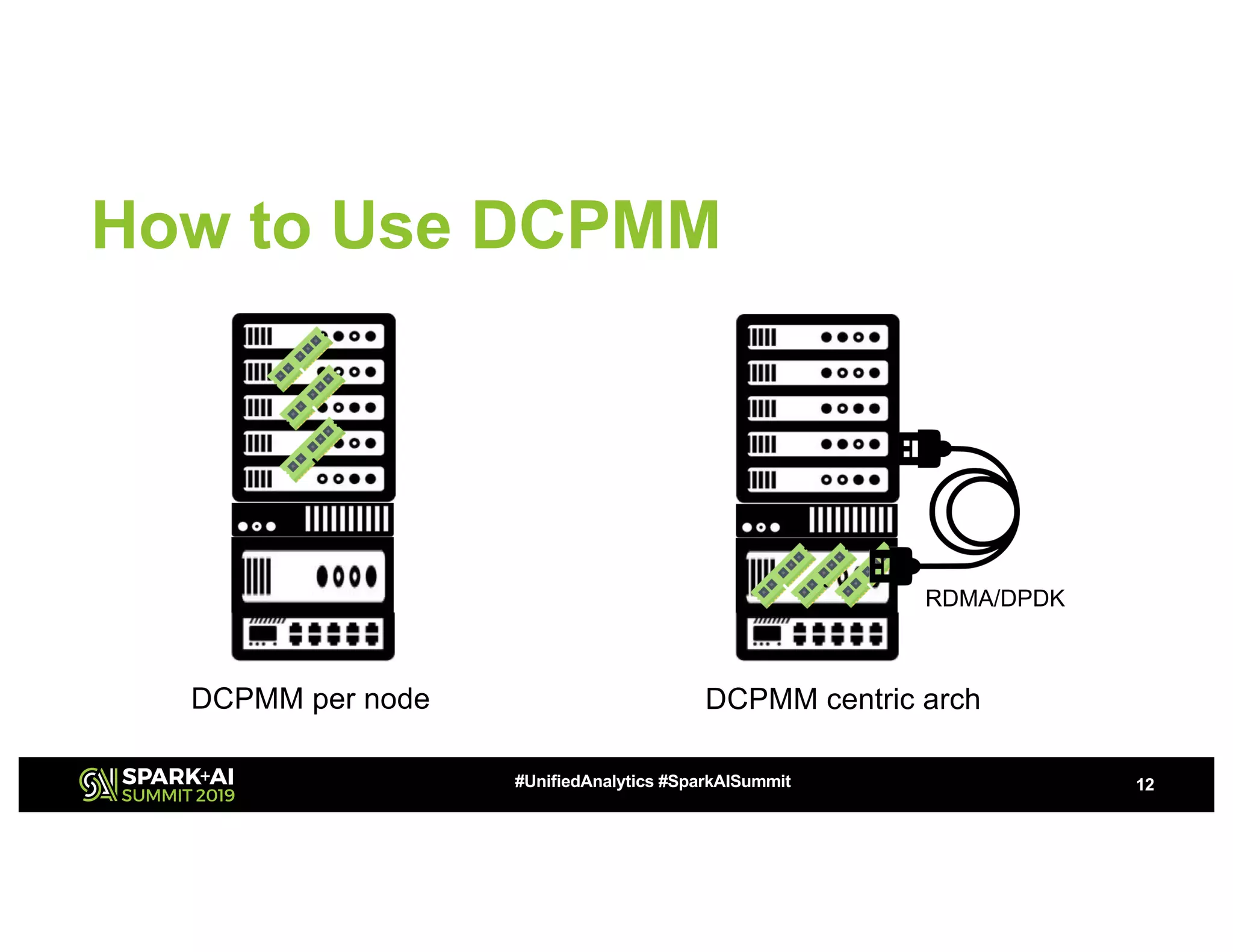

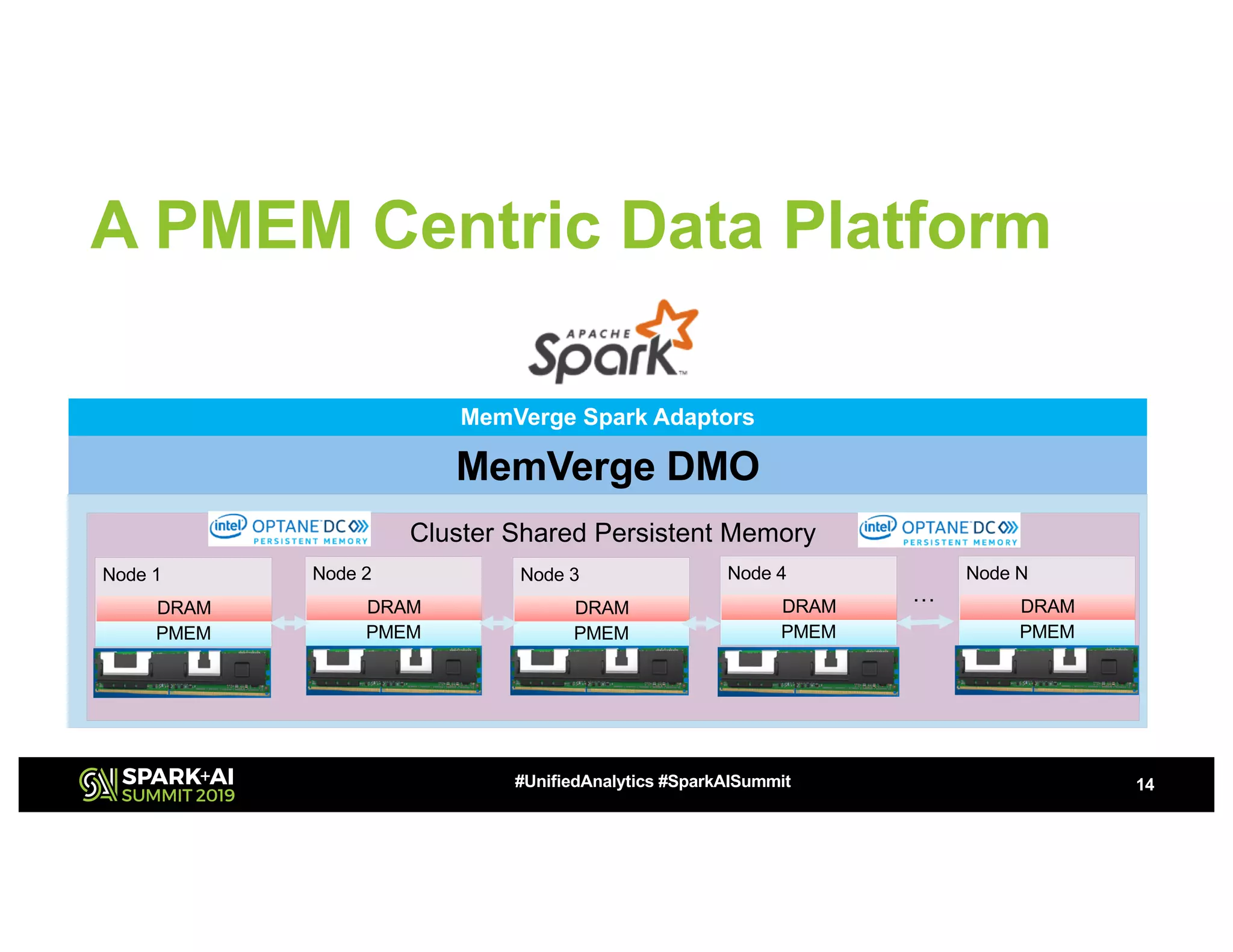

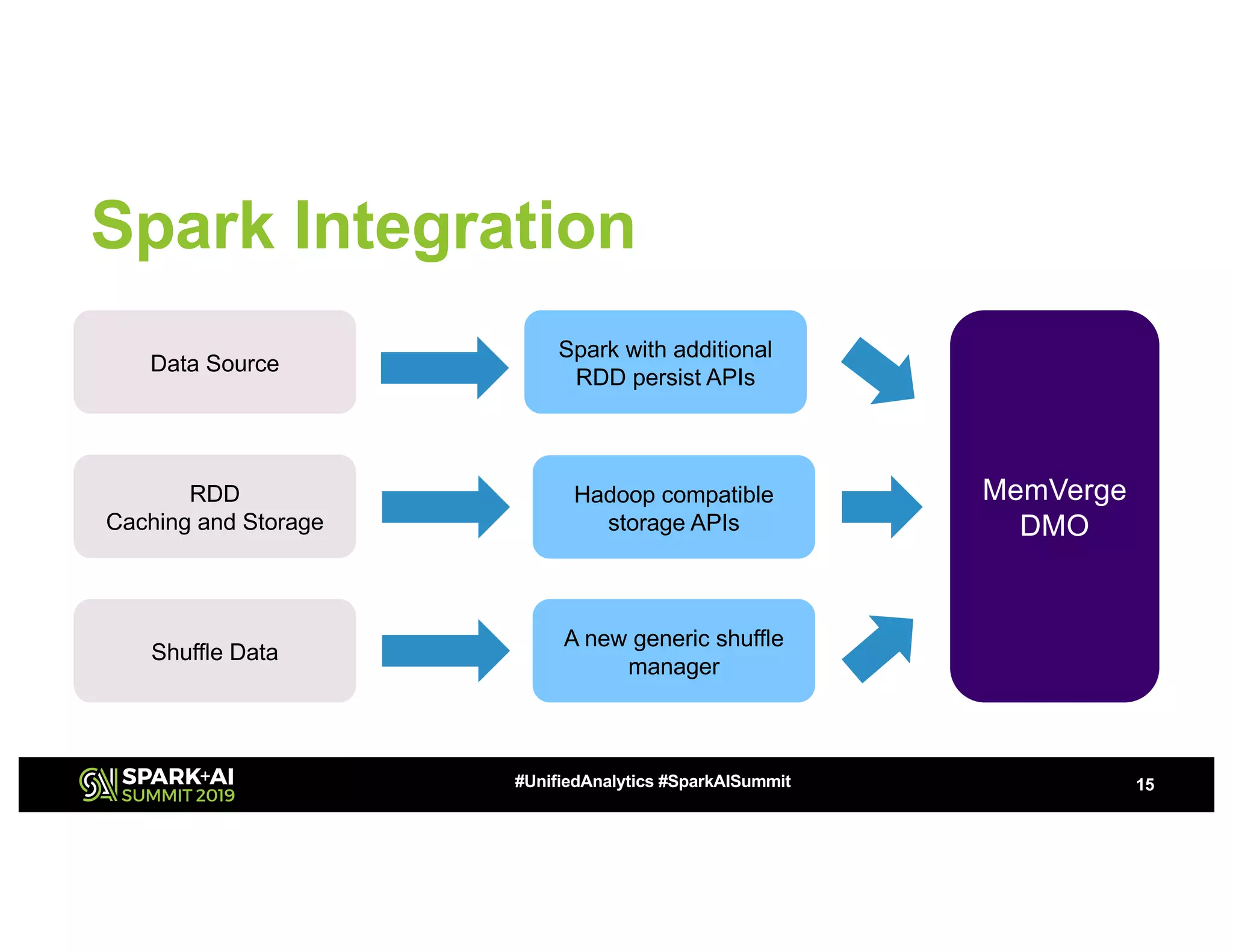

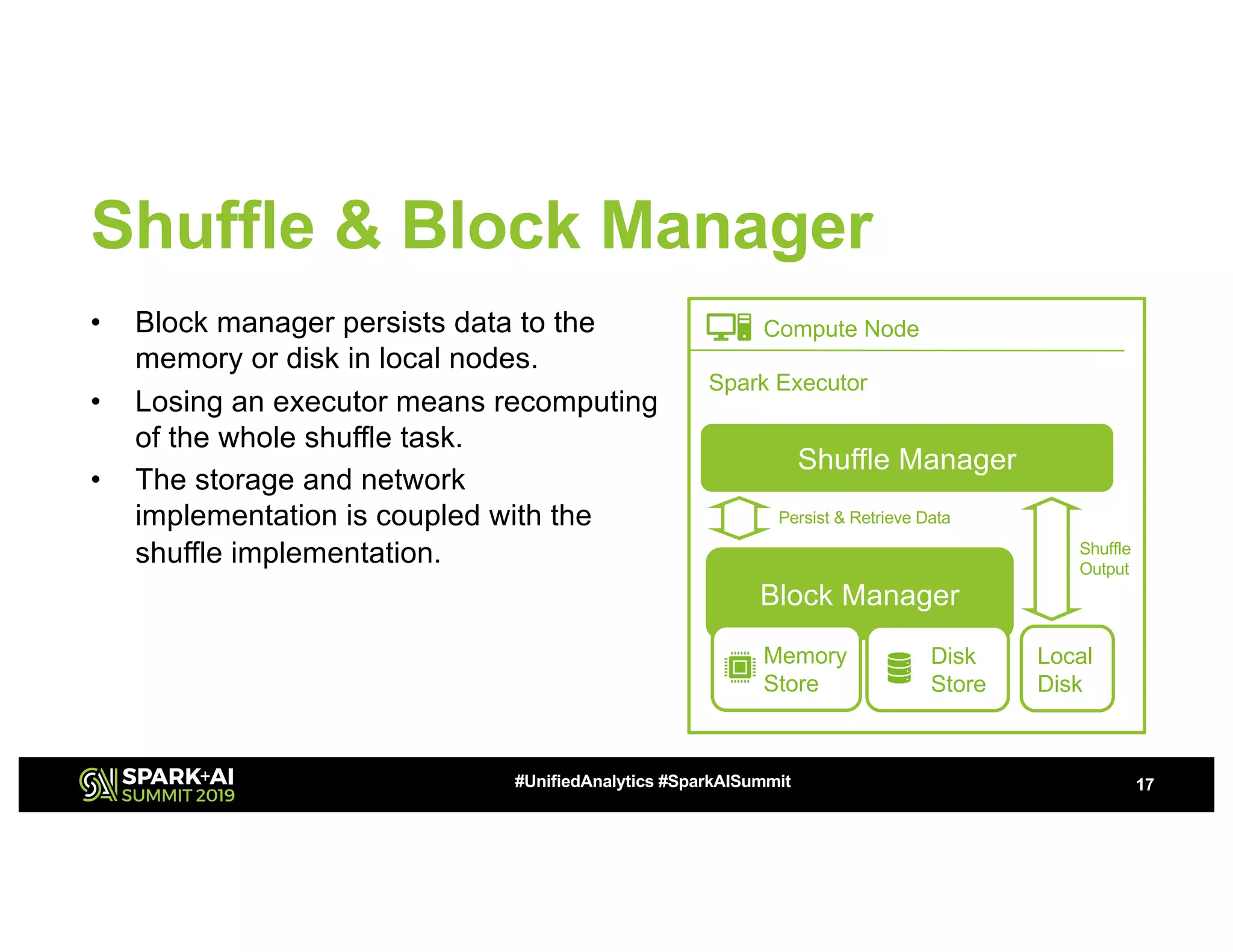

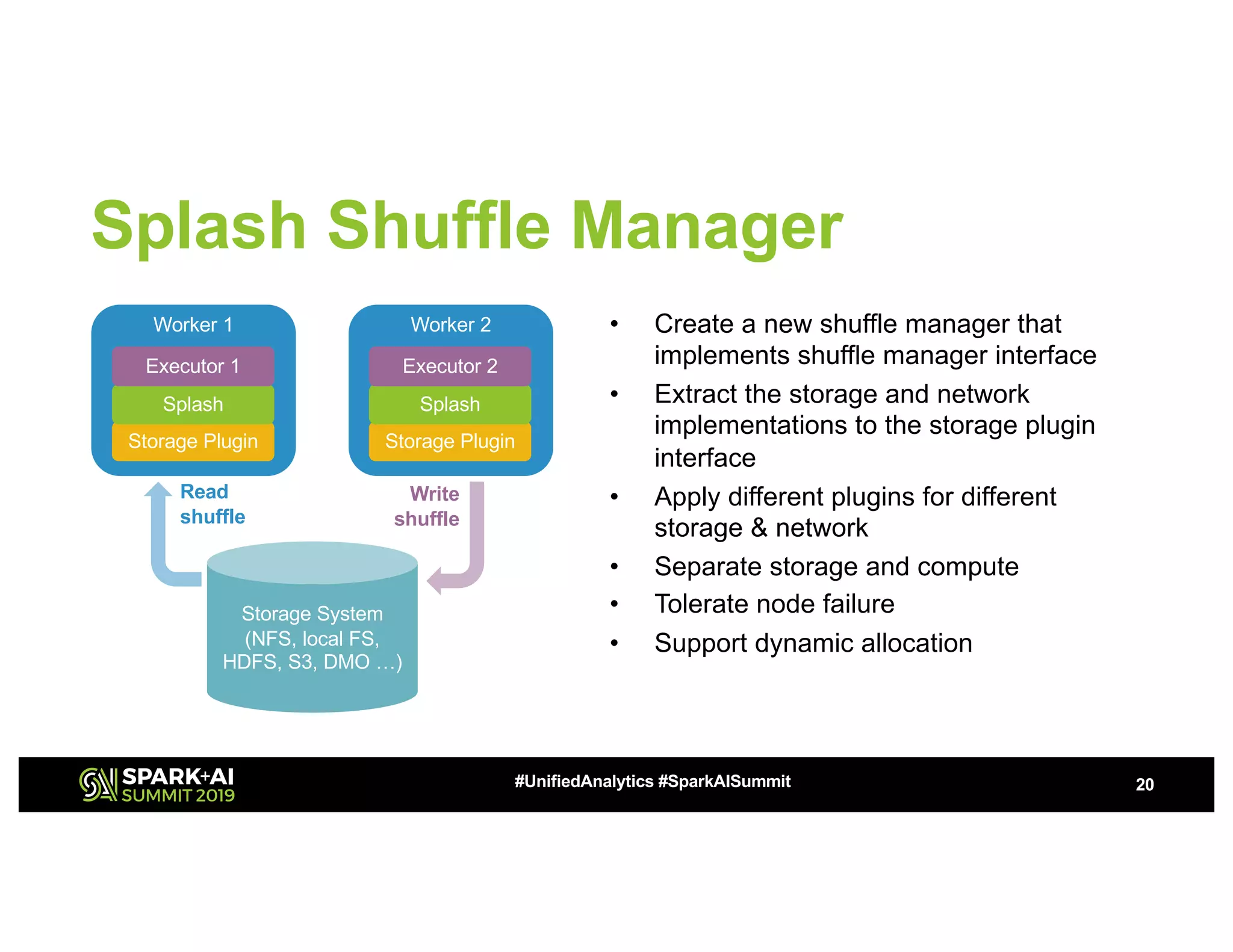

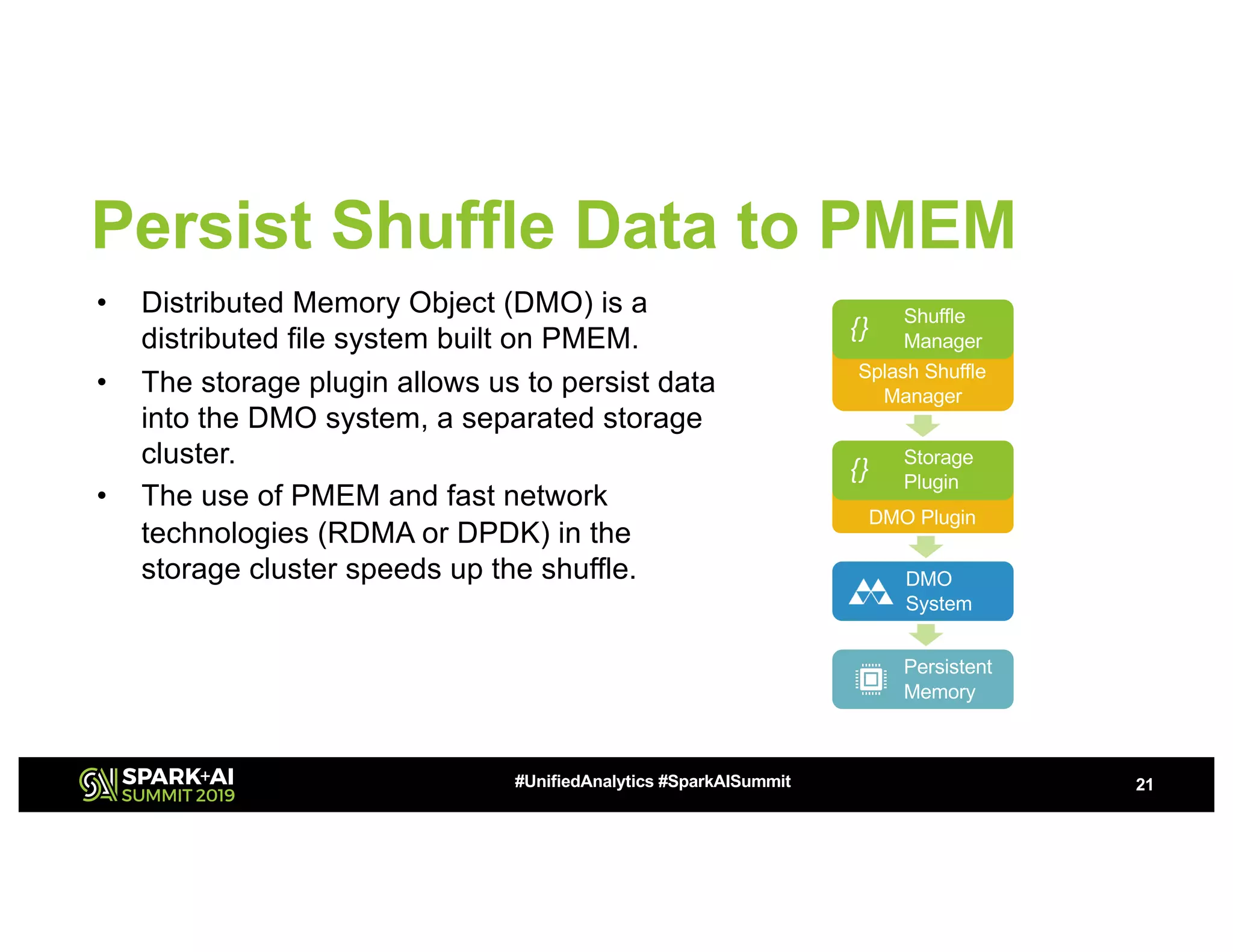

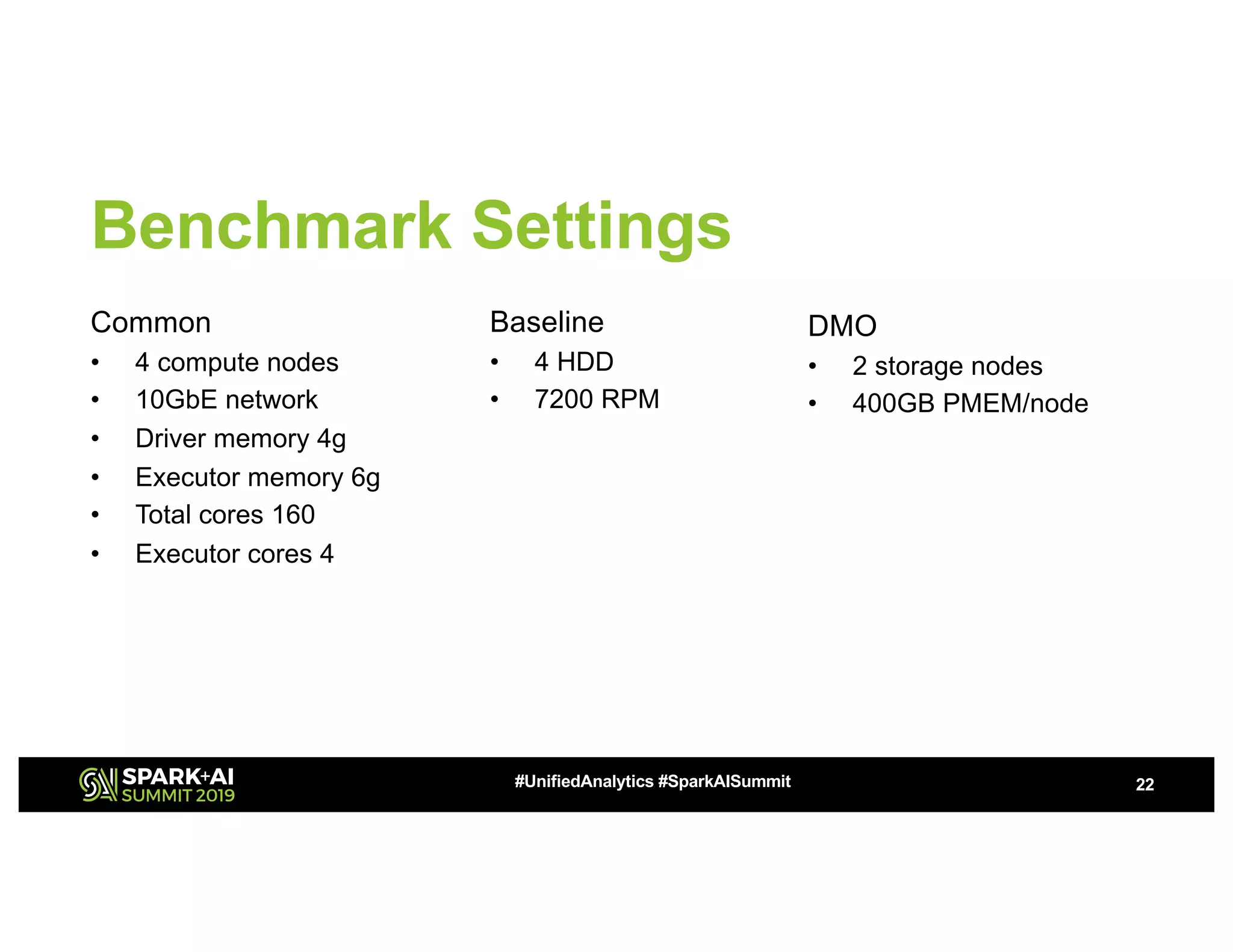

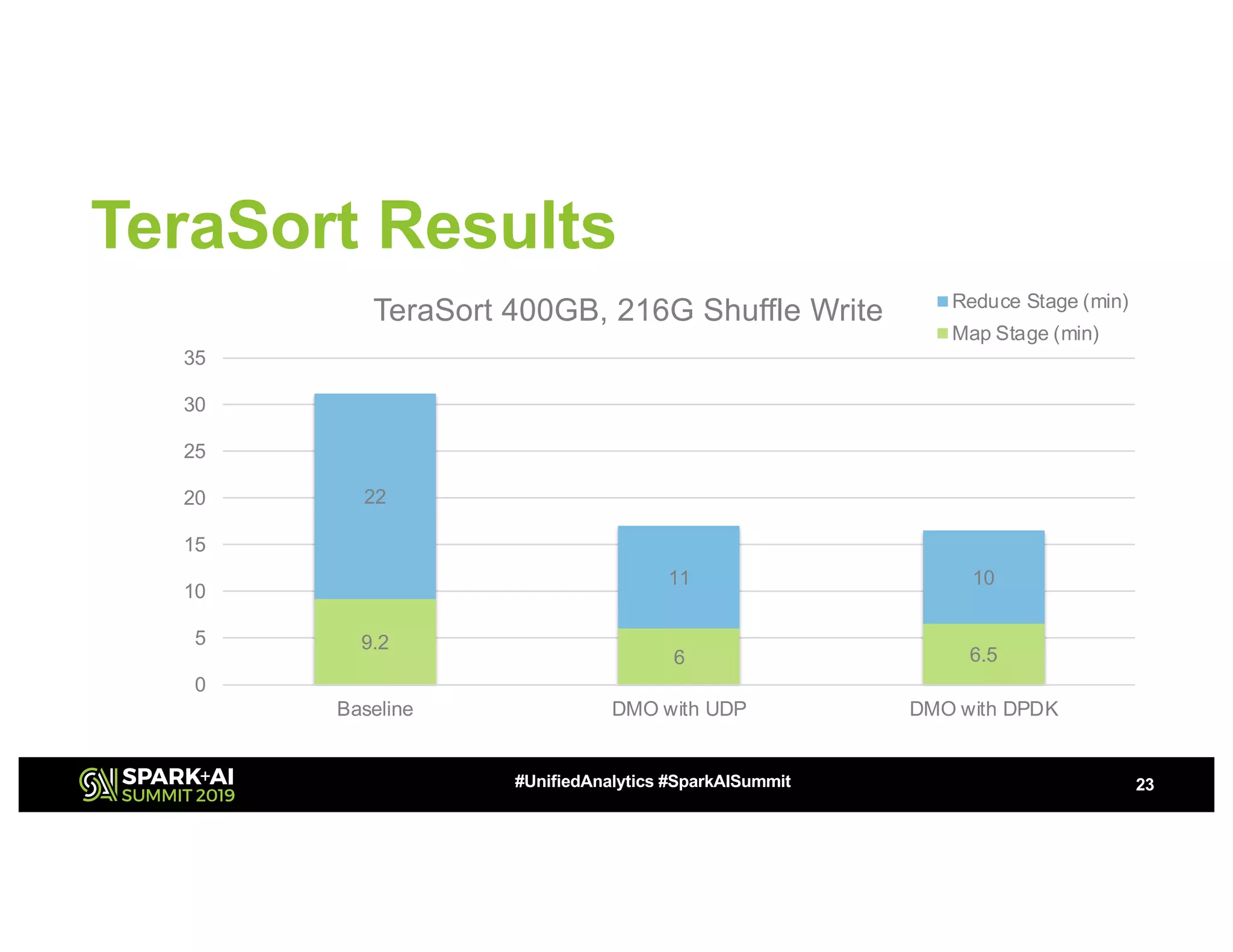

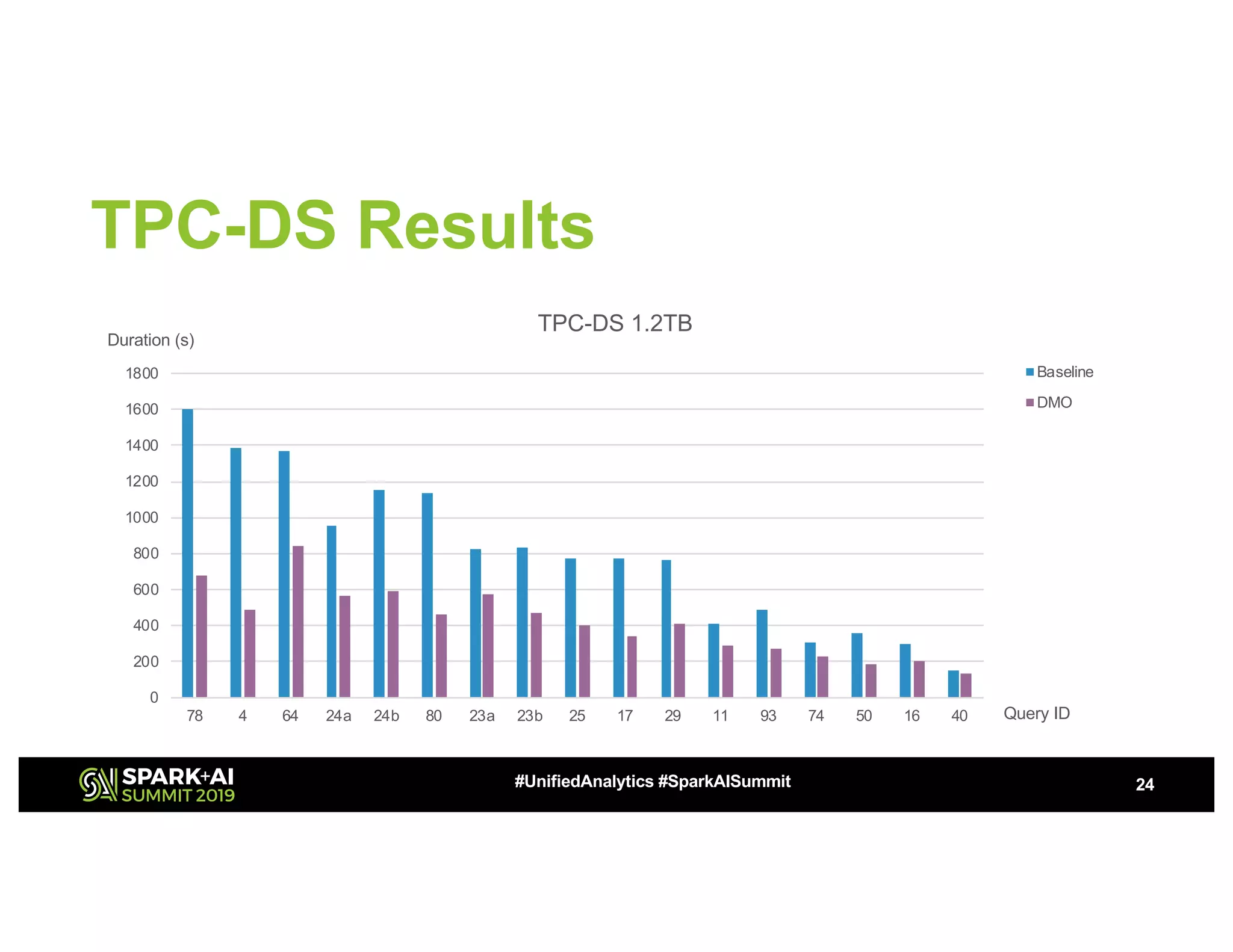

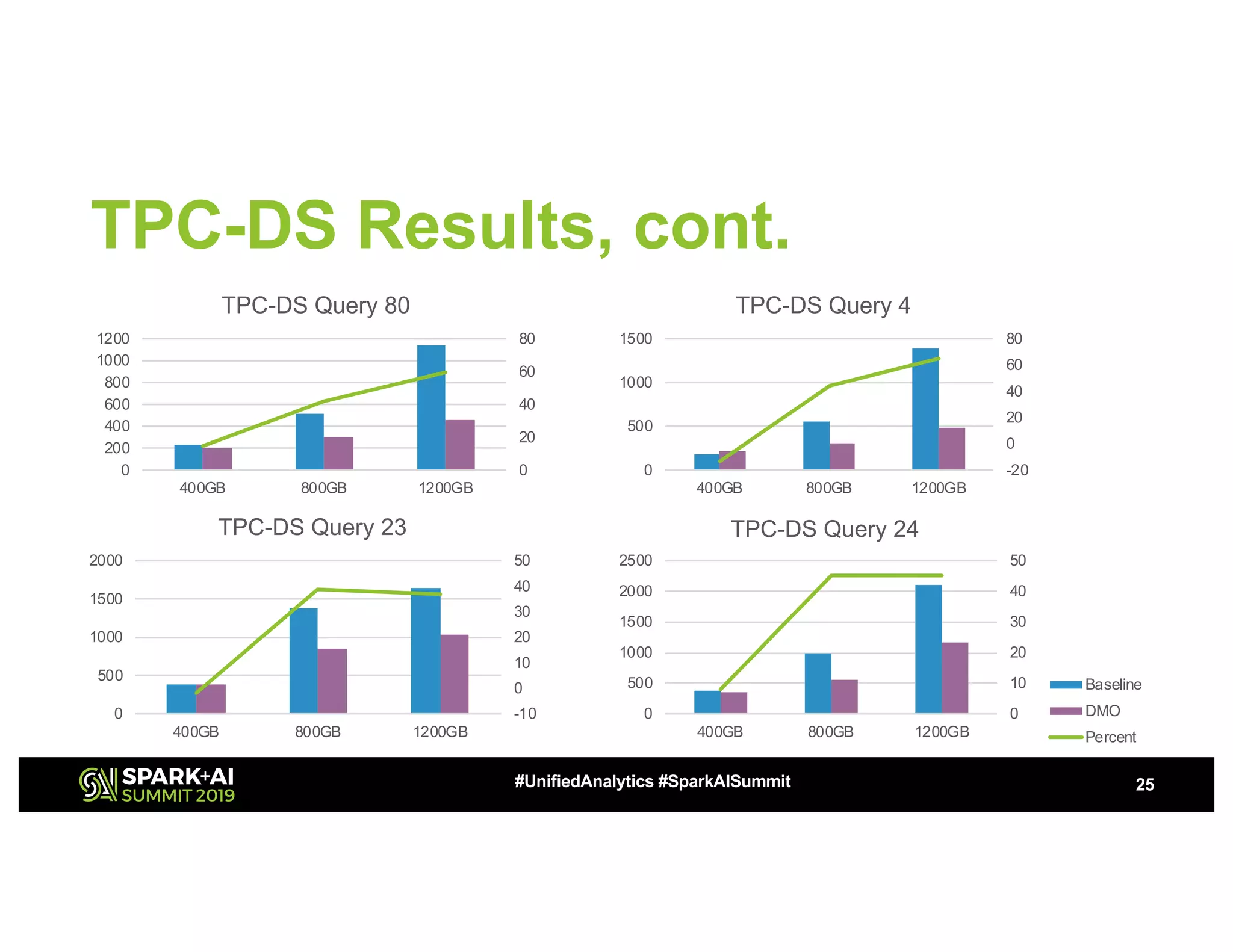

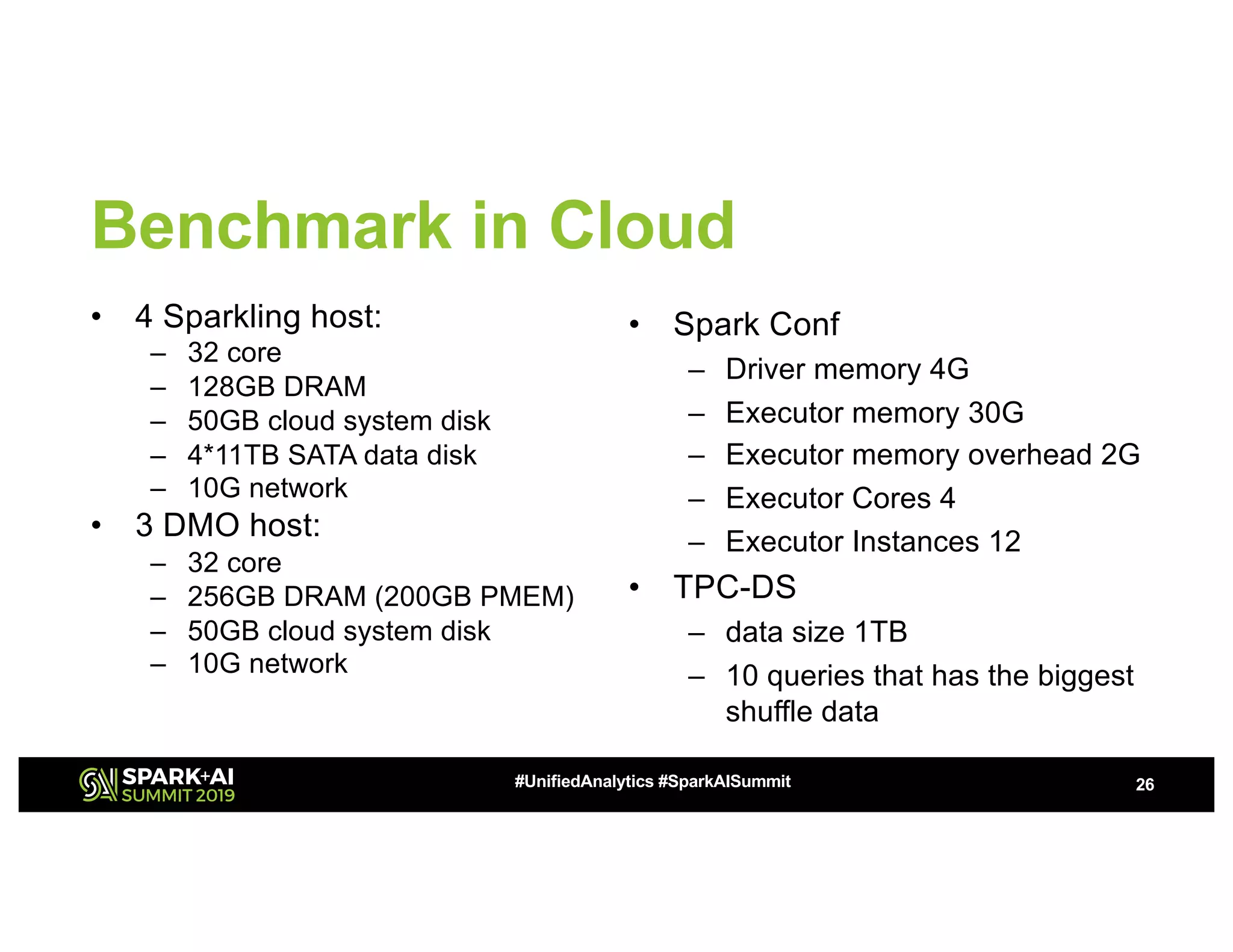

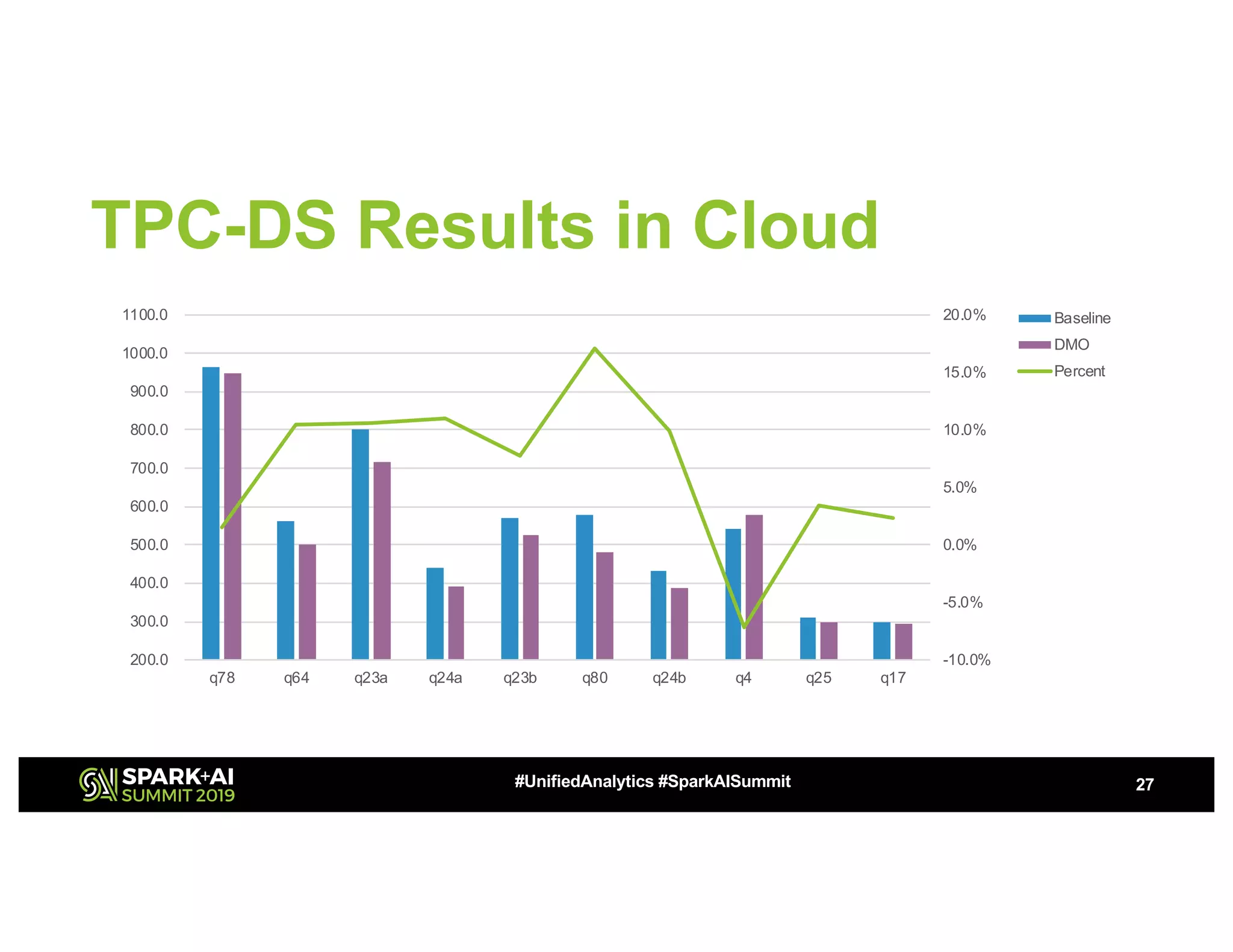

The document discusses advances in big data technologies by MemVerge and Tencent Cloud, highlighting their work on Distributed Memory Objects (DMO), a memory-converged infrastructure (MCI) system. It emphasizes the role of persistent memory in improving data processing speed and overall system performance in cloud environments, particularly for Apache Spark applications. Future work includes performance tuning and applying new shuffle management solutions to improve scalability and efficiency across industries.