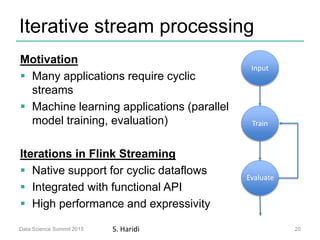

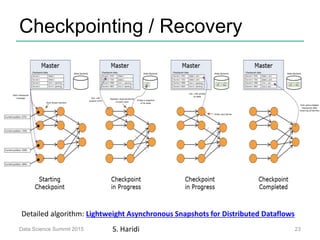

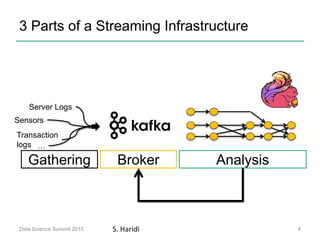

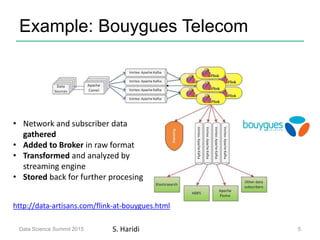

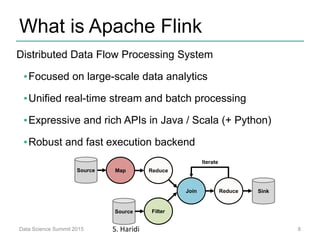

This document provides an introduction to stream processing with Apache Flink. It discusses why streaming is important, the key parts of a streaming infrastructure, and gives an example of how Bouygues Telecom uses Flink for stream processing. It then provides an overview of what Apache Flink is, including its unified batch and stream processing capabilities. The rest of the document focuses on stream processing features in Flink, including its DataStream API, flexible windowing options, support for iterative processing, fault tolerance mechanisms, and exactly-once processing semantics.

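![Word count in Batch and Streaming](https://image.slidesharecdn.com/rmkwdtpktxukuheyxx7w-signature-2cb12a0c7c89b6350af6329aede7c58fd694119638315dbf50ca3232d5381cf8-poli-150723223300-lva1-app6892/85/SICS-Apache-Flink-Streaming-17-320.jpg)

DataStream API (streaming):

```scala
import org.apache.flink.streaming.api.scala._
import java.util.concurrent.TimeUnit.SECONDS

case class Word(word: String, frequency: Int)

val env = StreamExecutionEnvironment.getExecutionEnvironment
val lines: DataStream[String] = env.socketTextStream(...)

// Emit Word(word, 1) per token, then sum per word over a 5 s window sliding every 1 s
// (windowing calls as on the slide: the 2015-era API, since replaced by window assigners)
lines.flatMap { line => line.split(" ").map(word => Word(word, 1)) }
  .keyBy("word").window(Time.of(5, SECONDS))
  .every(Time.of(1, SECONDS)).sum("frequency")
  .print()

env.execute()
```

DataSet API (batch):

```scala
import org.apache.flink.api.scala._

val env = ExecutionEnvironment.getExecutionEnvironment
val lines: DataSet[String] = env.readTextFile(...)

// Same tokenization; groupBy + sum yields one final count per word
lines.flatMap { line => line.split(" ").map(word => Word(word, 1)) }
  .groupBy("word").sum("frequency")
  .print()
```

Data Science Summit 2015, S. Haridi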
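The batch and streaming programs differ mainly in the windowing step. The following is a minimal plain-Scala sketch of what each one computes. It has no Flink dependency and is a simulation of the semantics only, not how Flink implements windows. It assumes each line carries a hypothetical event timestamp in seconds. It models "a 5 second window every 1 second" as windows covering [end − 5, end) for end = 1, 2, 3, …. The names `WordCountSketch`, `batchCount` and `slidingCounts` are illustrative.

```scala
// Plain-Scala simulation (no Flink dependency) of the word-count semantics.
// The event timestamps (in seconds) are a hypothetical addition for illustration.
case class Word(word: String, frequency: Int)

object WordCountSketch {
  // Batch semantics: one total per word over the whole input,
  // analogous to groupBy("word").sum("frequency").
  def batchCount(lines: Seq[String]): Map[String, Int] =
    lines
      .flatMap(line => line.split(" ").filter(_.nonEmpty).toSeq.map(w => Word(w, 1)))
      .groupBy(_.word)
      .map { case (w, ws) => w -> ws.map(_.frequency).sum }

  // Sliding-window semantics: each window covers [end - size, end) and a new
  // window ends every `slide` seconds, so consecutive windows overlap.
  def slidingCounts(
      events: Seq[(Long, String)],
      size: Long,
      slide: Long
  ): Seq[(Long, Map[String, Int])] =
    if (events.isEmpty) Seq.empty
    else {
      val lastTs = events.map(_._1).max
      // The last window end, lastTs + size, is the final window still containing the latest event.
      val ends = slide to (lastTs + size) by slide
      ends
        .map { end =>
          val inWindow = events.collect { case (ts, line) if ts >= end - size && ts < end => line }
          end -> batchCount(inWindow)
        }
        .filter { case (_, counts) => counts.nonEmpty }
    }
}
```

With a window five times longer than the slide, every event lands in five overlapping windows, so the streaming job reports a rolling count that keeps updating. The batch job reads each line exactly once and reports a single final total per word.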