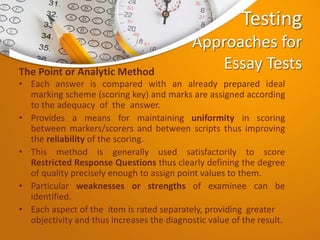

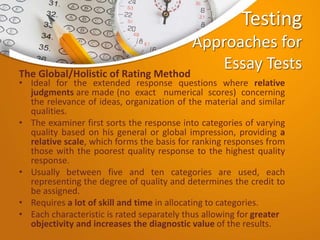

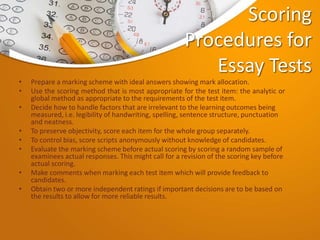

This document discusses approaches to scoring objective and essay tests. It names key thinkers who contributed to scoring methods, such as Benjamin Bloom's taxonomy and Robert Glaser's work on criterion-referenced assessment. It describes specific procedures for scoring classroom tests, essay tests, and objective tests: analytic or holistic methods for essays, and manual, stencil, or machine scoring for objective tests. It also provides references on intelligence testing and the measurement of learning outcomes.

![Testing Approaches for Objective Tests
• Manual Scoring: Candidates' answers are compared directly with a marking scheme.
• Stencil Scoring: A scoring stencil is prepared from a blank answer sheet by punching holes where the correct answers should be. The stencil is then placed over each answer sheet, and the answer marks showing through the holes are counted. Each test paper is checked afterwards to eliminate possible errors caused by examinees supplying more than one answer.
• Machine Scoring: Ideal for multiple-choice questions. Specially prepared answer sheets are used, on which responses are indicated by shading. The answer sheets are then scored by computers or scanners.
• Correction for guessing: The most common correction formula is: Score = right responses − [wrong responses / (n − 1)], where n is the number of possible responses per item.](https://image.slidesharecdn.com/pst5205scoringapproaches-240404054236-dbaf1279/85/Scoring-Approaches-for-Essays-and-Objective-Tests-pptx-10-320.jpg)
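The scoring steps on this slide can be sketched in a few lines of Python. This is a minimal illustration, not a procedure taken from the slides: the function names `count_responses` and `corrected_score`, the representation of an answer sheet as a list of sets, and the choice to count an item with more than one mark as wrong are all assumptions made for the example. Omitted items are assumed to be neither right nor wrong, so they do not enter the correction.

```python
from typing import Sequence, Set, Tuple


def count_responses(key: Sequence[str], sheet: Sequence[Set[str]]) -> Tuple[int, int, int]:
    """Return (right, wrong, omitted) for one answer sheet.

    Each entry in `sheet` is the set of options marked for that item.
    Assumption: an item with more than one mark is counted as wrong,
    which stands in for the check made after stencil scoring.
    """
    if len(key) != len(sheet):
        raise ValueError("key and sheet must cover the same number of items")
    right = wrong = omitted = 0
    for correct, marked in zip(key, sheet):
        if not marked:
            omitted += 1          # no mark: neither right nor wrong
        elif marked == {correct}:
            right += 1            # exactly one mark, and it matches the key
        else:
            wrong += 1            # wrong option, or more than one mark
    return right, wrong, omitted


def corrected_score(right: int, wrong: int, n_options: int) -> float:
    """Apply Score = right - wrong / (n - 1), with n options per item."""
    if n_options < 2:
        raise ValueError("each item needs at least two possible responses")
    return right - wrong / (n_options - 1)


# Hypothetical five-item test with four options per item.
key = ["A", "C", "B", "D", "A"]
sheet = [{"A"}, {"C"}, {"A", "B"}, set(), {"B"}]
right, wrong, omitted = count_responses(key, sheet)
score = corrected_score(right, wrong, n_options=4)
```

For example, 40 right and 12 wrong responses on four-option items give 40 − 12/3 = 36.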