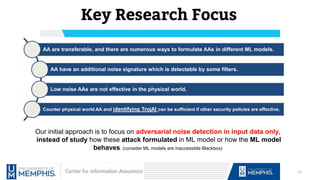

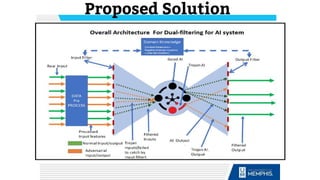

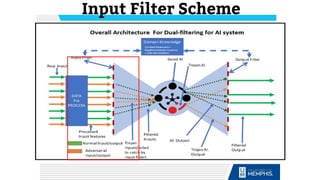

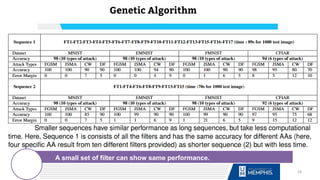

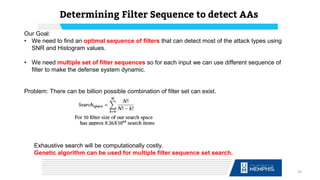

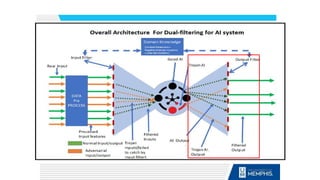

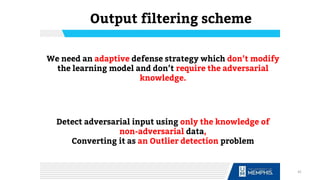

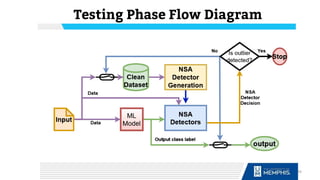

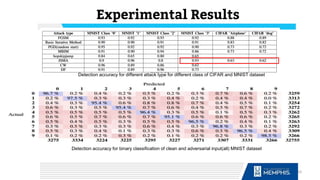

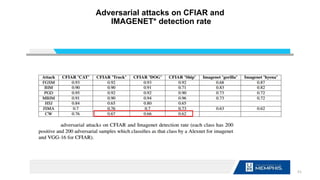

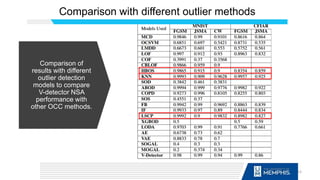

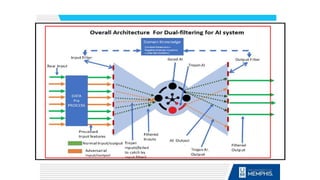

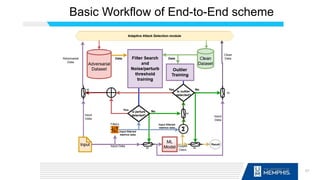

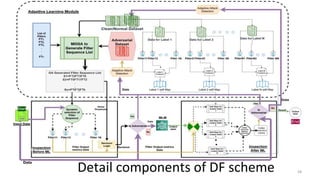

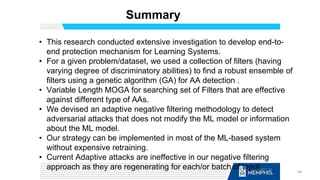

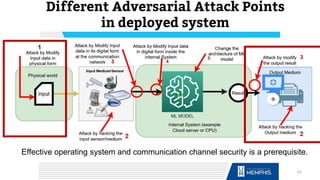

This presentation discusses robust filtering schemes to defend machine learning systems against adversarial attacks. It outlines three main defense schemes: input filtering, output filtering, and an end-to-end protection scheme. The input filtering scheme uses a genetic algorithm to determine an optimal sequence of filters to detect adversarial examples. The output filtering scheme formulates the detection of adversarial inputs as an outlier detection problem. The end-to-end scheme integrates components for adversarial detection, filtering, and classification into a unified framework for protection. Experimental results show the proposed approaches can effectively detect various adversarial attack types while maintaining high classification accuracy.
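To make the outlier-detection framing of the output filtering scheme concrete, here is a minimal sketch. The summary does not say which output features or which detector the authors use, so everything below is an assumption for illustration: the score is the entropy of the classifier's probability vector, and the detector is a median/MAD rule fitted on outputs from clean inputs. The class name `OutputOutlierDetector` and the threshold of 3.5 are hypothetical.

```python
import math
import statistics


def entropy(probs):
    """Shannon entropy (natural log) of a probability vector."""
    return -sum(p * math.log(p) for p in probs if p > 0)


class OutputOutlierDetector:
    """Flags classifier outputs whose entropy is far from the clean-data norm.

    Assumption: a robust z-score (median / MAD) on output entropy stands in
    for whatever outlier detector the presentation actually uses.
    """

    def __init__(self, threshold=3.5):
        self.threshold = threshold
        self.median = 0.0
        self.mad = 1.0

    def fit(self, clean_outputs):
        scores = [entropy(p) for p in clean_outputs]
        self.median = statistics.median(scores)
        deviations = [abs(s - self.median) for s in scores]
        # Guard against a zero MAD when all clean scores are identical.
        self.mad = statistics.median(deviations) or 1e-12
        return self

    def is_outlier(self, probs):
        # 0.6745 rescales the MAD so the score is comparable to a z-score.
        z = 0.6745 * abs(entropy(probs) - self.median) / self.mad
        return z > self.threshold


# Softmax outputs recorded on clean inputs (made-up numbers).
clean = [
    [0.97, 0.02, 0.01],
    [0.95, 0.03, 0.02],
    [0.02, 0.96, 0.02],
    [0.01, 0.01, 0.98],
    [0.94, 0.04, 0.02],
]
detector = OutputOutlierDetector().fit(clean)
suspicious = detector.is_outlier([0.40, 0.35, 0.25])
normal = detector.is_outlier([0.96, 0.02, 0.02])
```

The appeal of this framing is that the detector only needs outputs from clean data to fit; it does not have to see examples of every attack in advance.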

![Adversarial Attack (AA) on AI/ML](https://image.slidesharecdn.com/defensekishor-210624162950/85/Robust-Filtering-Schemes-for-Machine-Learning-Systems-to-Defend-Adversarial-Attacks-3-320.jpg)

Types:

• Poisoning Attack: Manipulate training data

“Manipulation of training data, Machine Learning (ML) model architecture, or manipulate testing data in a way that will result in wrong output from ML”

Reference [1]

![Adversarial Attack (AA) on AI/ML](https://image.slidesharecdn.com/defensekishor-210624162950/85/Robust-Filtering-Schemes-for-Machine-Learning-Systems-to-Defend-Adversarial-Attacks-4-320.jpg)

Types:

• Poisoning Attack: Manipulate training data

• Evasion Attack: Manipulate input data

“Manipulation of training data, Machine Learning (ML) model architecture, or manipulate testing data in a way that will result in wrong output from ML”

Reference [2]
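As an illustration of an evasion attack, the sketch below perturbs a test input so that a fixed model changes its prediction. It uses the fast gradient sign method from “Explaining and Harnessing Adversarial Examples” (Goodfellow et al.) on a toy logistic-regression model. The slide does not name a specific attack, so the choice of method, the weights, the input, and `eps` are all assumptions made for the example.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(w, b, x):
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, eps):
    """Fast gradient sign method for a logistic model.

    For cross-entropy loss, the gradient with respect to the input is
    (p - y) * w. Each feature moves by eps in the direction that
    increases the loss.
    """
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]


# Toy model and input (made-up values); the true label is 1.
w, b = [2.0, -1.0], 0.0
x, y = [0.3, 0.1], 1

p_clean = predict_proba(w, b, x)       # above 0.5: classified as 1
x_adv = fgsm(w, b, x, y, eps=0.3)
p_adv = predict_proba(w, b, x_adv)     # below 0.5: classified as 0
```

The training data and the model are untouched here; only the input at test time is changed, which is what separates evasion from poisoning.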

![Defense Strategies for AA](https://image.slidesharecdn.com/defensekishor-210624162950/85/Robust-Filtering-Schemes-for-Machine-Learning-Systems-to-Defend-Adversarial-Attacks-7-320.jpg)

Retrain:

• Generate adversarial examples and retrain the model.

• Limitation: Reduces the accuracy of the learning model.

Input Reconstruction or Transformation:

• Use PCA, low-pass filtering, JPEG compression, or soft thresholding as a pre-processing technique.

• Limitation: Vulnerable to adaptive attacks.

Model Modification:

• Modify the ML architecture to detect adversarial attacks.

• Limitation: Requires modification of the learning model.

Reference [5]
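The input transformation strategy can be sketched with one of the listed pre-processing steps. Below is a minimal low-pass filter (a box blur) in plain Python, applied to an image stored as a list of rows. The slide only names low-pass filtering in general; the box kernel, the `radius` parameter, and the edge handling are assumptions chosen for brevity.

```python
def box_blur(image, radius=1):
    """Low-pass filter: replace each pixel with the mean of its neighborhood.

    `image` is a list of equal-length rows of numbers. Near the borders the
    neighborhood is clipped to the pixels that exist.
    """
    height, width = len(image), len(image[0])
    out = [[0.0] * width for _ in range(height)]
    for r in range(height):
        for c in range(width):
            total, count = 0.0, 0
            for rr in range(max(0, r - radius), min(height, r + radius + 1)):
                for cc in range(max(0, c - radius), min(width, c + radius + 1)):
                    total += image[rr][cc]
                    count += 1
            out[r][c] = total / count
    return out


# A single bright pixel stands in for a high-frequency perturbation.
spiky = [
    [0, 0, 0],
    [0, 9, 0],
    [0, 0, 0],
]
smoothed = box_blur(spiky)
```

Smoothing spreads the isolated spike across its neighbors, which is why such filters can blunt small pixel-level perturbations. An adaptive attacker who knows the filter is in place can optimize through it, which is the limitation the slide notes.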

![Nature of AAs](https://image.slidesharecdn.com/defensekishor-210624162950/85/Robust-Filtering-Schemes-for-Machine-Learning-Systems-to-Defend-Adversarial-Attacks-8-320.jpg)

Reference [5]

![Input Filter Scheme](https://image.slidesharecdn.com/defensekishor-210624162950/85/Robust-Filtering-Schemes-for-Machine-Learning-Systems-to-Defend-Adversarial-Attacks-14-320.jpg)

Reference [5]
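The summary describes the input filter scheme as a genetic algorithm that searches for a good sequence of filters. The slide text gives no details, so the sketch below is only a generic GA over filter sequences: the filter names, the population settings, and the selection, crossover, and mutation operators are all hypothetical. In a real setup the fitness function would measure detection performance on held-out clean and adversarial inputs; here a toy fitness stands in for it.

```python
import random

# Hypothetical filter names; the presentation does not list the candidates.
FILTERS = ["median", "gaussian", "jpeg", "bit_depth", "none"]


def evolve_filter_sequence(fitness, seq_len=3, pop_size=20, generations=30,
                           mutation_rate=0.2, seed=0):
    """Search for a high-fitness sequence of filters with a simple GA.

    Requires seq_len >= 2 and pop_size >= 4. `fitness` maps a list of
    filter names to a number (higher is better).
    """
    rng = random.Random(seed)
    pop = [[rng.choice(FILTERS) for _ in range(seq_len)]
           for _ in range(pop_size)]
    for _ in range(generations):
        pop.sort(key=fitness, reverse=True)
        parents = pop[: pop_size // 2]       # truncation selection
        children = [list(pop[0])]            # elitism: keep the current best
        while len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            cut = rng.randrange(1, seq_len)  # one-point crossover
            child = a[:cut] + b[cut:]
            for i in range(seq_len):         # per-gene mutation
                if rng.random() < mutation_rate:
                    child[i] = rng.choice(FILTERS)
            children.append(child)
        pop = children
    best = max(pop, key=fitness)
    return best, fitness(best)


def toy_fitness(sequence):
    """Stand-in fitness: number of positions matching a made-up target."""
    target = ["jpeg", "median", "bit_depth"]
    return sum(s == t for s, t in zip(sequence, target))


best, score = evolve_filter_sequence(toy_fitness)
```

Because order matters when filters are chained, the search space grows quickly with sequence length, which is the usual motivation for a heuristic search such as a GA over exhaustive enumeration.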

![References](https://image.slidesharecdn.com/defensekishor-210624162950/85/Robust-Filtering-Schemes-for-Machine-Learning-Systems-to-Defend-Adversarial-Attacks-65-320.jpg)

References:

1. D Dasgupta, Z Akhtar, and Sajib Sen. Machine learning in cyber security: Survey.
2. https://medium.com/onfido-tech/adversarial-attacks-and-defences-for-convolutional-neural-networks-66915ece52e7
3. “Poisoning Attacks against Support Vector Machines”, Biggio et al. 2013. [https://arxiv.org/abs/1206.6389]
4. “Intriguing properties of neural networks”, Szegedy et al. 2014. [https://arxiv.org/abs/1312.6199]
5. “Explaining and Harnessing Adversarial Examples”, Goodfellow et al. 2014. [https://arxiv.org/abs/1412.6572]
6. “Towards Evaluating the Robustness of Neural Networks”, Carlini and Wagner 2017b. [https://arxiv.org/abs/1608.04644]
7. “Practical Black-Box Attacks against Machine Learning”, Papernot et al. 2017. [https://arxiv.org/abs/1602.02697]
8. “Attacking Machine Learning with Adversarial Examples”, Goodfellow, 2017. [https://openai.com/blog/adversarial-example-research/]
9. https://medium.com/@ODSC/adversarial-attacks-on-deep-neural-networks-ca847ab1063
10. Tianyu Gu, Brendan Dolan-Gavitt, and Siddharth Garg. BadNets: Identifying vulnerabilities in the machine learning model supply chain. arXiv preprint arXiv:1708.06733, 2017.
11. Z Akhtar and D Dasgupta. A brief survey of Adversarial Machine Learning and Defense Strategies. Technical Report, The University of Memphis.
12. KD Gupta, D Dasgupta, and Z Akhtar. Determining Sequence of Image Processing Technique (IPT) to Detect Adversarial Attacks. arXiv preprint arXiv:2007.00337.
13. Aaditya Prakash, Nick Moran, Solomon Garber, Antonella DiLillo, and James Storer. Deflecting adversarial attacks with pixel deflection. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 8571–8580, 2018.
14. Nicholas Carlini. Lessons learned from evaluating the robustness of defenses to adversarial examples. 2019.
15. Nicholas Carlini, Anish Athalye, Nicolas Papernot, Wieland Brendel, Jonas Rauber, Dimitris Tsipras, Ian Goodfellow, Aleksander Madry, and Alexey Kurakin. On evaluating adversarial robustness. arXiv preprint arXiv:1902.06705, 2019.
16. Nicholas Carlini and David Wagner. Defensive distillation is not robust to adversarial examples. arXiv preprint arXiv:1607.04311, 2016.
17. Nicholas Carlini and David Wagner. Adversarial examples are not easily detected: Bypassing ten detection methods. In Proceedings of the 10th ACM Workshop on Artificial Intelligence and Security, pages 3–14, 2017.
18. Nicholas Carlini and David Wagner. MagNet and “Efficient defenses against adversarial attacks” are not robust to adversarial examples. arXiv preprint arXiv:1711.08478, 2017.