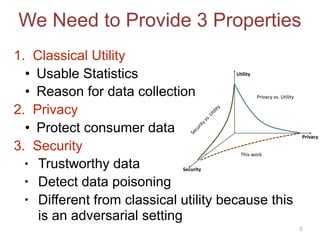

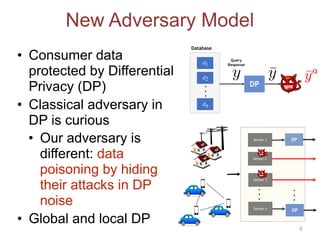

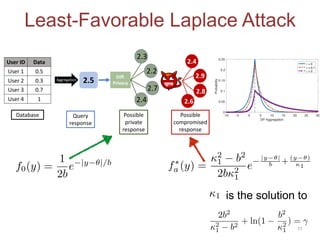

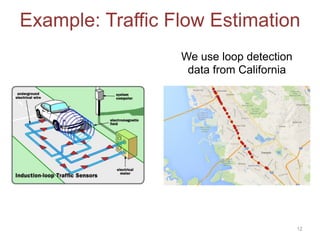

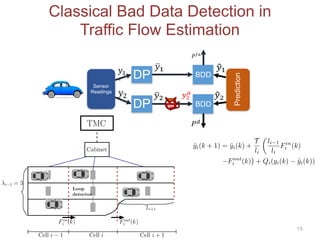

This document discusses adversarial classification under differential privacy. It proposes a new defense against adversarial distributions that aims to provide utility, privacy, and security.

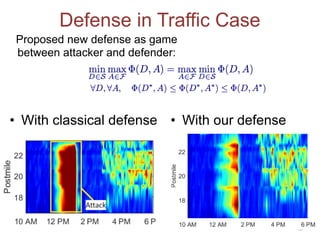

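The defense frames the problem as a sequential game between a defender and an attacker. The defender moves first, designing a classifier that minimizes a cost such as the probability of missed detection at a fixed false-alarm rate. The attacker moves second, after observing the classifier, and poisons the data to maximize damage while hiding the attack in the differential-privacy noise.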

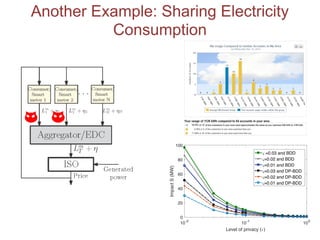

The proposed defense can outperform classical defenses by allowing the defender to certify performance guarantees no matter the attacker's strategy, improving on approaches that are limited once the attacker adapts. Examples applying this defense to traffic flow estimation and electricity consumption data are also discussed.

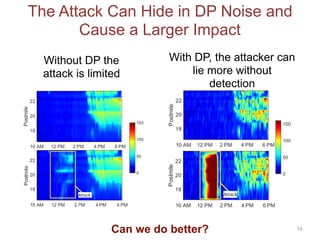

![Adversary Goals](https://image.slidesharecdn.com/ndss2020-200227230156/85/Adversarial-Classification-Under-Differential-Privacy-7-320.jpg)

- Intelligently poison the data in a way that is hard to detect (hide the attack in the DP noise)
- Achieve maximum damage to the utility of the system (deviate the estimate as much as possible)

Attack goals (multi-criteria optimization):

$$\max_{f_a} \; \mathbb{E}[Y^a] \quad \text{s.t. a constraint on } D_{KL}(f_a \,\|\, f_0), \quad f_a \in F$$

Classical DP: the mechanism $M(D)$ outputs $\bar{Y}$, with $\bar{Y} \sim f_0$.

Attack: $Y^a$ is reported instead of $\bar{Y}$.
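The optimization on this slide can be illustrated numerically. The sketch below is not the authors' code. It assumes a discretized Laplace density for $f_0$, takes $F$ to be all distributions on the grid, and assumes the divergence constraint has the form $D_{KL}(f_a \,\|\, f_0) \le \gamma$, where `gamma` is a hypothetical detection budget that does not appear in the slide text. Under those assumptions the maximizer is an exponentially tilted version of $f_0$ (a standard result for a linear objective under a KL bound), and the tilt parameter can be found by bisection.

```python
import math

def kl_divergence(p, q):
    """D_KL(p || q) for two discrete distributions on the same grid."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def mean(p, ys):
    """Expected value of the grid points ys under distribution p."""
    return sum(pi * y for pi, y in zip(p, ys))

def tilt(f0, ys, t):
    """Exponentially tilted distribution: f_a(y) proportional to f0(y) * exp(t * y)."""
    y_max = max(ys)  # shift the exponent so exp() never overflows
    w = [p * math.exp(t * (y - y_max)) for p, y in zip(f0, ys)]
    total = sum(w)
    return [wi / total for wi in w]

def worst_case_attack(f0, ys, gamma, t_hi=50.0, iters=100):
    """Bisect on the tilt t >= 0 until D_KL(f_a || f0) meets the budget gamma.

    Assumes t_hi is large enough that the tilted distribution exceeds the budget.
    """
    t_lo = 0.0  # t = 0 gives f_a = f0, which always satisfies the budget
    for _ in range(iters):
        t = 0.5 * (t_lo + t_hi)
        if kl_divergence(tilt(f0, ys, t), f0) > gamma:
            t_hi = t
        else:
            t_lo = t
    return tilt(f0, ys, t_lo)

# Assumed setup: Laplace(0, 1) noise discretized on [-10, 10].
ys = [-10 + 0.01 * i for i in range(2001)]
raw = [math.exp(-abs(y)) for y in ys]
norm = sum(raw)
f0 = [r / norm for r in raw]

gamma = 0.1  # hypothetical KL budget, not taken from the slides
fa = worst_case_attack(f0, ys, gamma)
print("KL(f_a || f0):", kl_divergence(fa, f0))
print("mean under f0:", mean(f0, ys), "mean under f_a:", mean(fa, ys))
```

Bisection works here because the divergence of the tilted distribution from $f_0$ grows monotonically with the tilt, so the largest tilt that still meets the budget also gives the largest shift in the mean.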

![Defense Against Adversarial (Adaptive) Distributions](https://image.slidesharecdn.com/ndss2020-200227230156/85/Adversarial-Classification-Under-Differential-Privacy-15-320.jpg)

- Player 1 (defender) designs a classifier $D \in S$ to minimize $\Phi(D, A)$ (e.g., Pr[missed detection] subject to a fixed false-alarm rate)
  - Player 1 makes the first move
- Player 2 (attacker) has multiple strategies $A \in F$
  - Makes its move after observing the move of the classifier
- Player 1 wants provable performance guarantees:
  - Once it selects $D_o$ by minimizing $\Phi$, it wants proof that no matter what the attacker does, $\Phi < m$
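The sequential game on this slide can be sketched for finite strategy sets. The following is an illustration under stated assumptions, not the paper's method: the classifier names, attack names, and cost values in `PHI` are hypothetical, and the defender is assumed to pick $D_o$ by minimizing the worst-case cost over $F$. The returned bound then acts as a certificate, since $\Phi(D_o, A)$ is at most the bound for every $A \in F$, so any $m$ above it satisfies $\Phi < m$.

```python
def certify(phi, classifiers, attacks):
    """Pick the classifier with the smallest worst-case cost and return that cost.

    phi maps (classifier, attack) pairs to the defender's cost, e.g. the
    probability of missed detection at a fixed false-alarm rate.
    """
    # The attacker moves second, so each classifier is judged by its worst attack.
    worst_case = {d: max(phi[(d, a)] for a in attacks) for d in classifiers}
    d_o = min(worst_case, key=worst_case.get)
    return d_o, worst_case[d_o]

# Hypothetical cost table Phi(D, A); all names and numbers are made up.
classifiers = ["D1", "D2", "D3"]
attacks = ["A1", "A2"]
PHI = {
    ("D1", "A1"): 0.05, ("D1", "A2"): 0.40,
    ("D2", "A1"): 0.15, ("D2", "A2"): 0.20,
    ("D3", "A1"): 0.30, ("D3", "A2"): 0.25,
}

d_o, bound = certify(PHI, classifiers, attacks)
print("selected classifier:", d_o)
print("certified worst-case cost:", bound)
```

Note that `D1` has the lowest cost against `A1` but the highest worst case, so a defender who optimizes against a single fixed attack would choose differently from one who wants a guarantee against every strategy in $F$.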