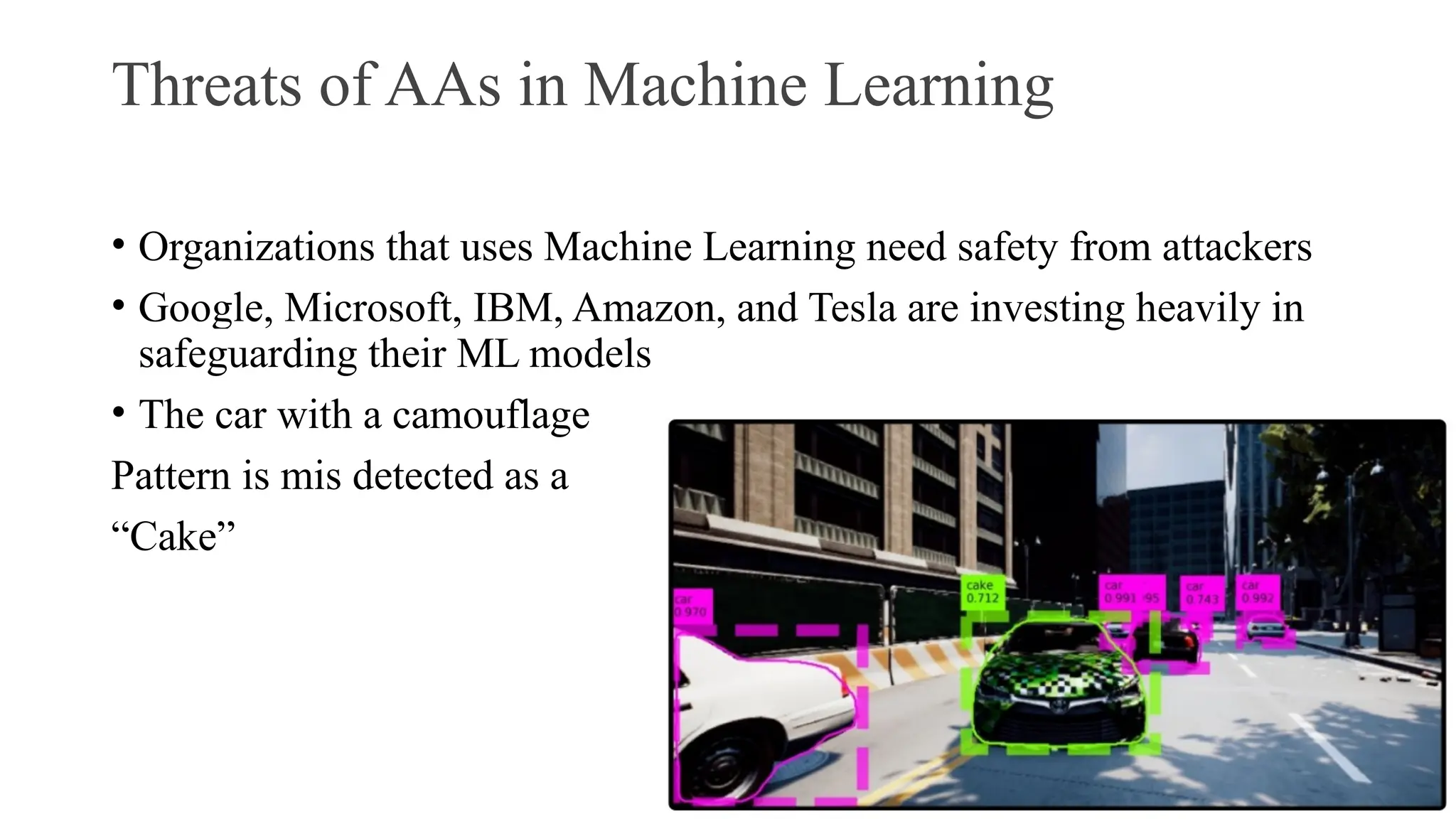

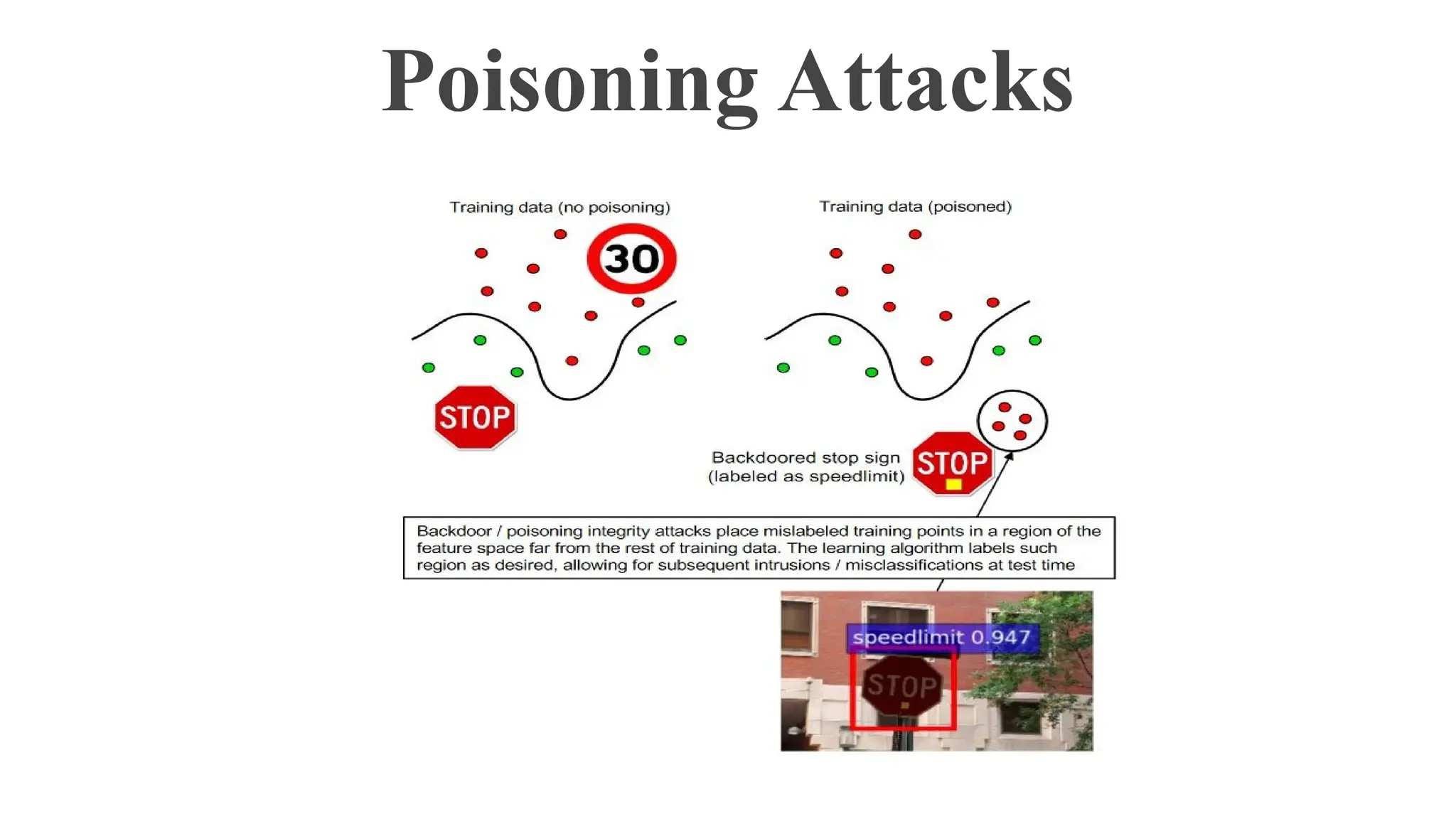

Adversarial machine learning (AML) covers techniques for deceiving or exploiting ML models. Notable attack classes include poisoning (corrupting the training data), evasion (manipulating inputs at inference time), and model extraction (reconstructing a model from its responses to queries). Organizations such as Google and Microsoft are investing in defenses against these attacks, which pose significant threats to ML applications. The document details various adversarial attack methods along with their advantages and disadvantages, and stresses the importance of strong defense strategies against potential exploitation.
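To make the idea of an evasion attack concrete, here is a minimal sketch of a one-step sign-gradient perturbation (in the style of the fast gradient sign method) against a tiny logistic-regression classifier. The summary above does not name this technique. It is used here only as an illustration, and the model weights, input, and `epsilon` value are hypothetical.

```python
import math

def predict_proba(weights, bias, x):
    """Probability of class 1 under a logistic-regression model."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def sign(v):
    """Return -1, 0, or 1 according to the sign of v."""
    return (v > 0) - (v < 0)

def fgsm_perturb(weights, bias, x, y_true, epsilon):
    """Shift each feature by +/- epsilon in the direction that increases the log loss."""
    p = predict_proba(weights, bias, x)
    # For logistic regression with log loss, d(loss)/d(x_i) = (p - y_true) * w_i.
    grad = [(p - y_true) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]

# Hypothetical toy model and input, chosen only for illustration.
weights = [2.0, -1.0]
bias = 0.0
x = [0.5, 0.2]
y_true = 1

x_adv = fgsm_perturb(weights, bias, x, y_true, epsilon=0.5)

print("clean score:      ", predict_proba(weights, bias, x))
print("adversarial score:", predict_proba(weights, bias, x_adv))
```

In this toy setup the clean input scores above 0.5 (class 1) and the perturbed input scores below 0.5 (class 0), even though no feature moved by more than `epsilon`. Real attacks target far larger models and typically use much smaller perturbations relative to the input scale.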