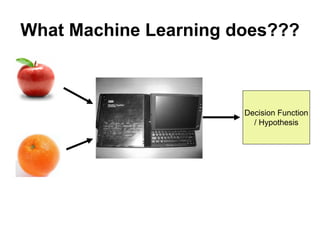

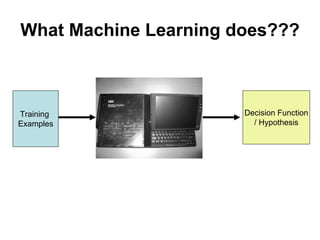

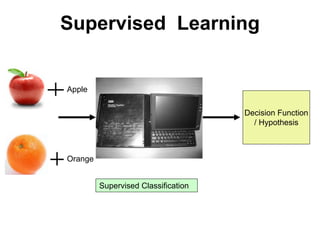

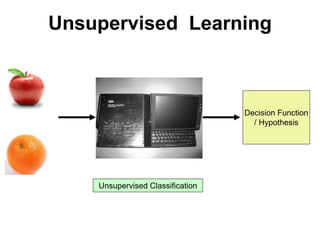

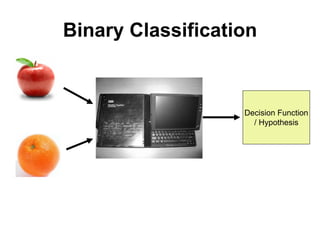

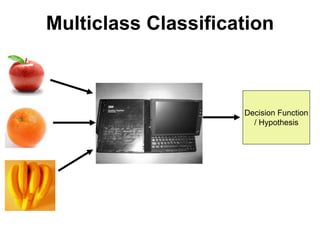

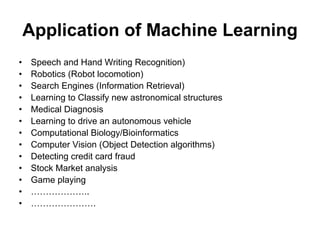

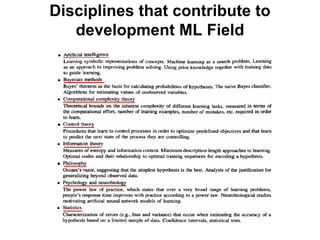

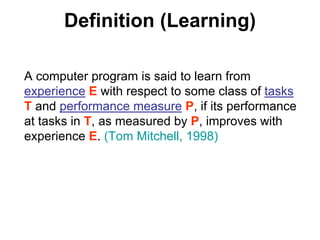

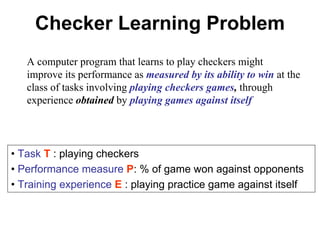

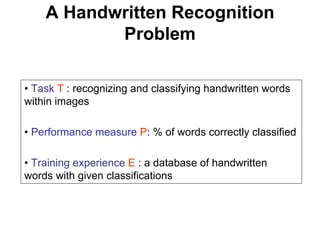

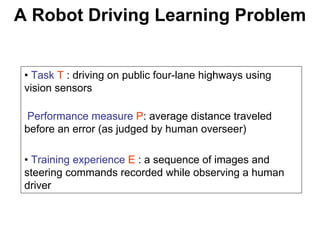

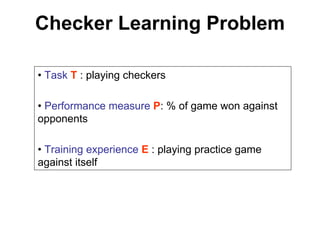

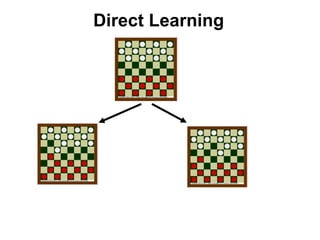

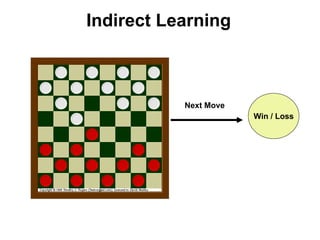

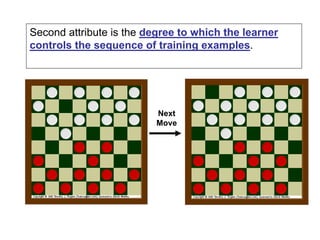

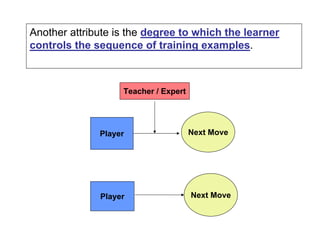

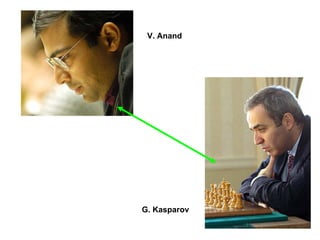

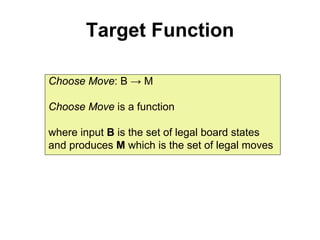

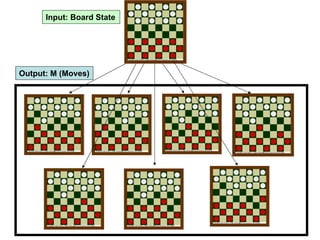

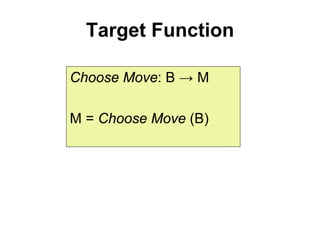

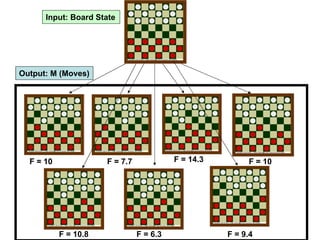

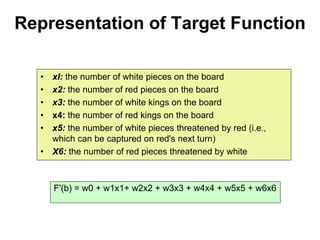

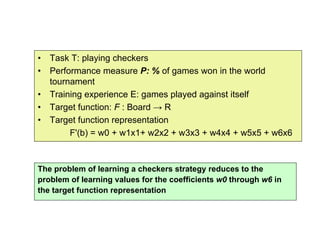

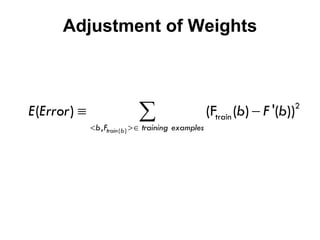

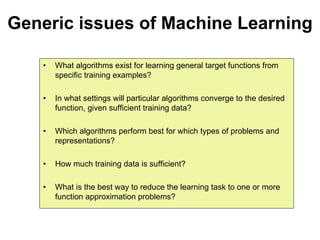

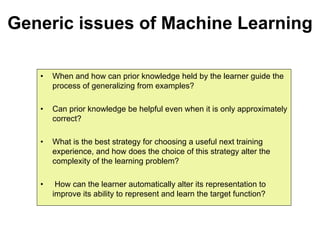

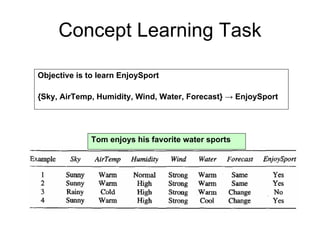

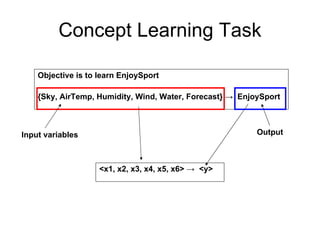

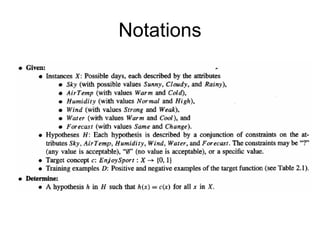

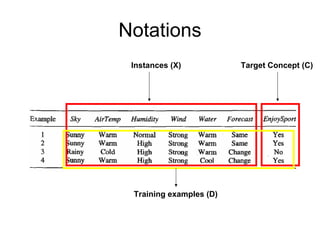

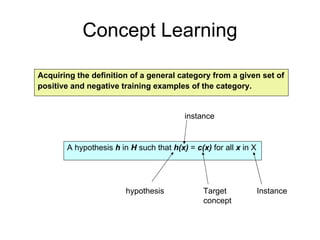

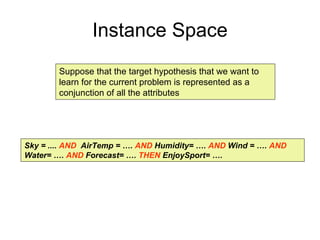

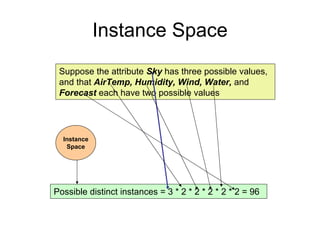

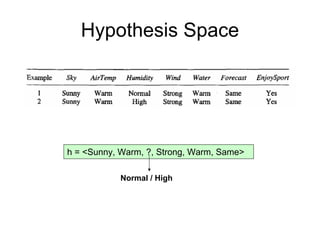

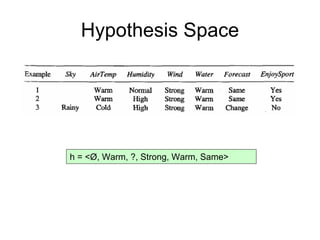

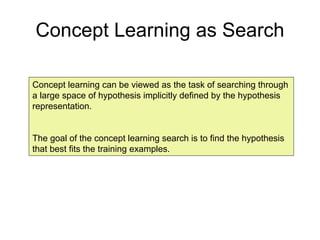

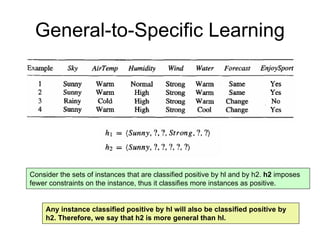

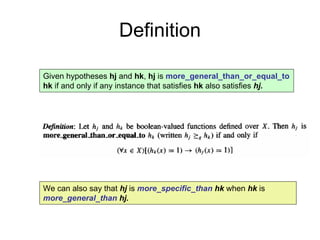

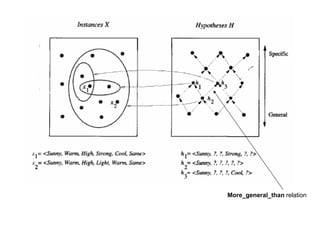

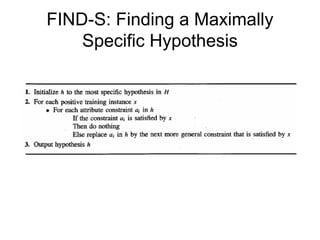

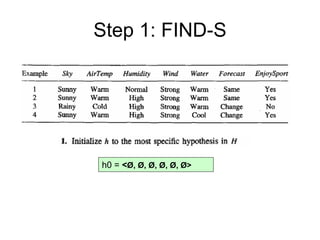

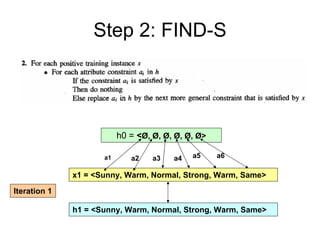

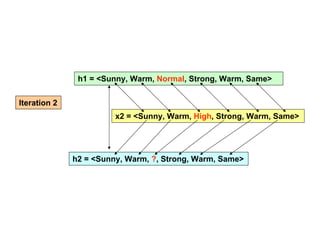

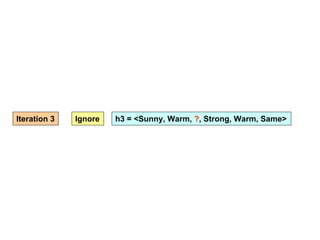

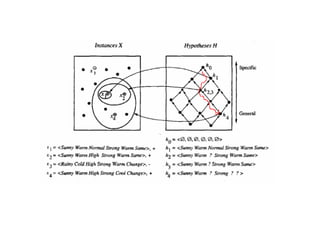

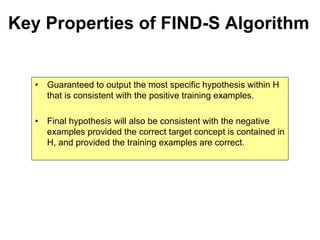

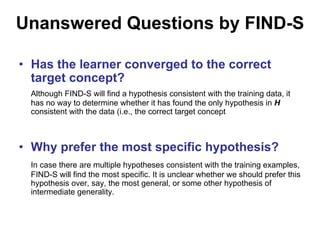

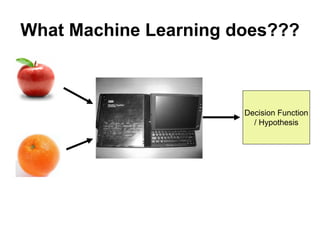

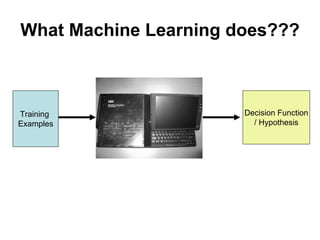

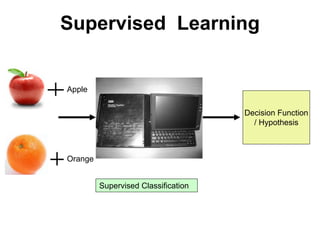

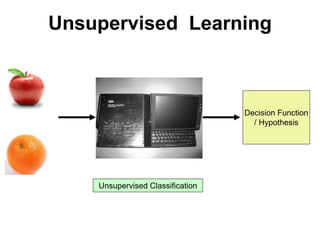

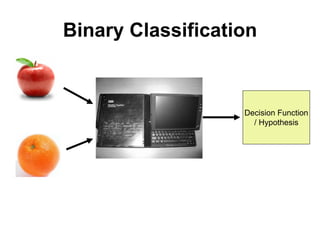

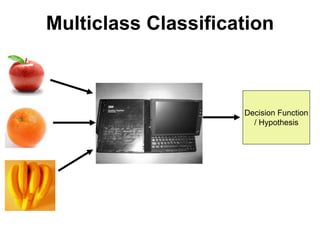

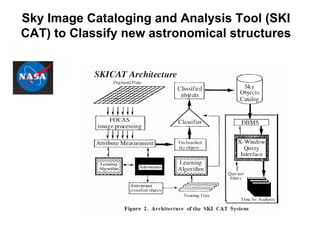

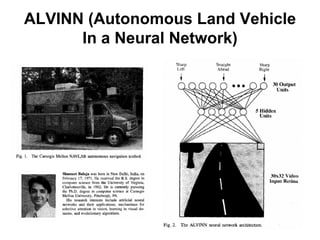

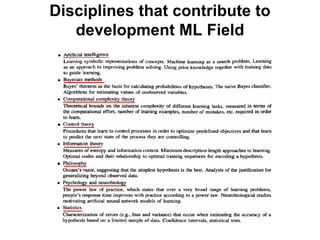

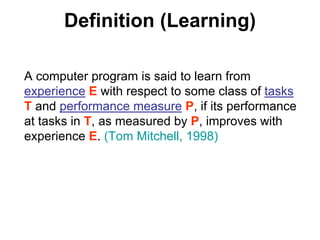

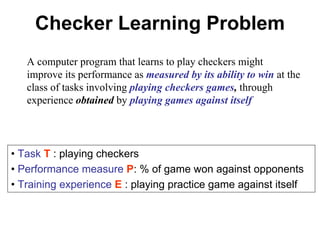

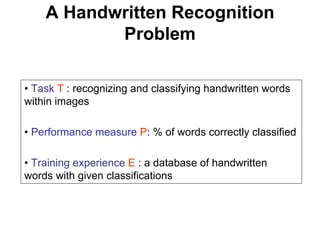

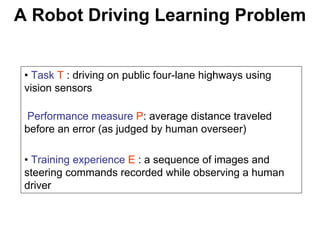

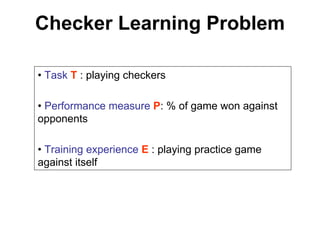

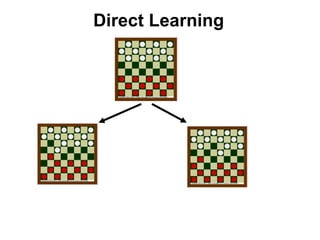

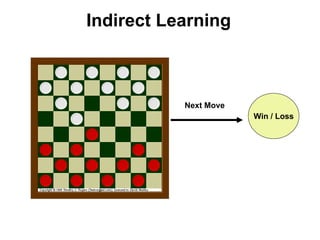

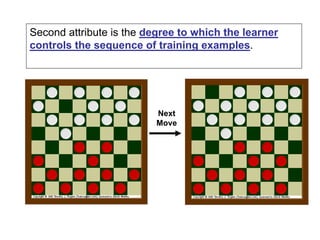

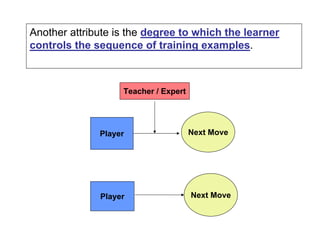

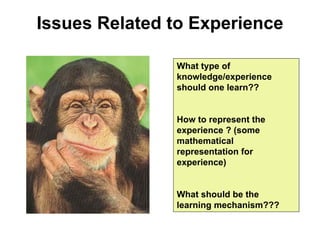

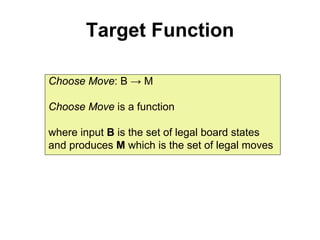

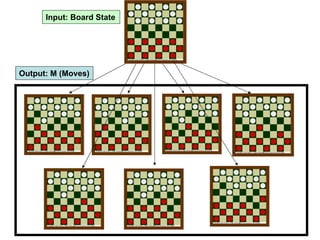

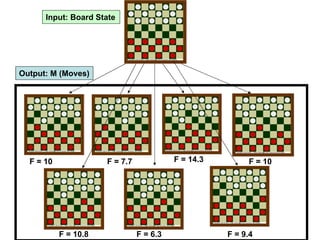

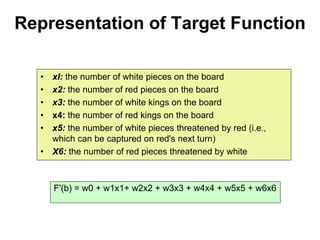

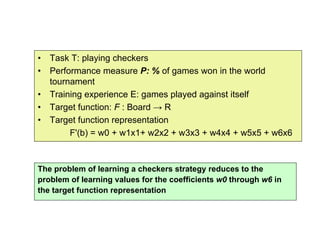

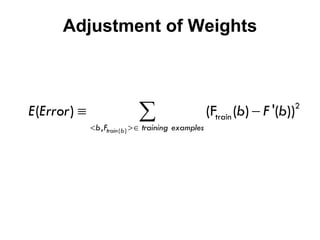

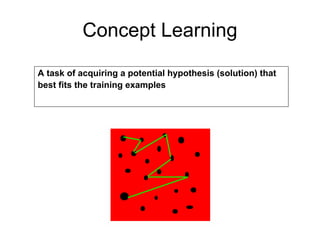

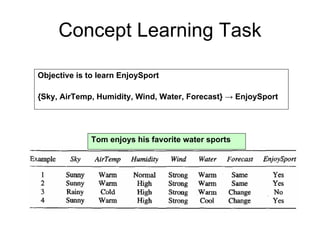

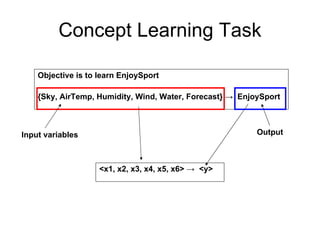

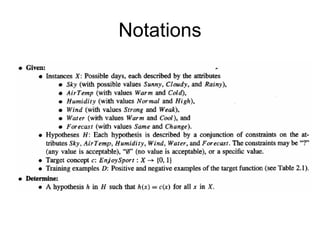

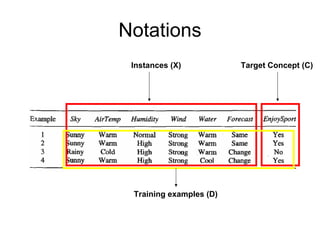

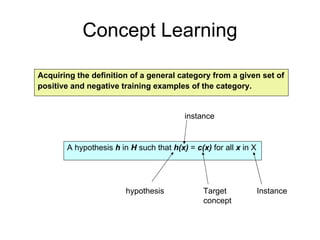

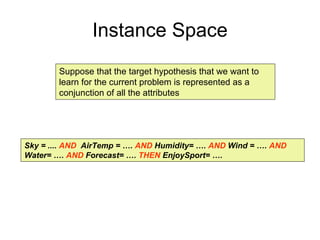

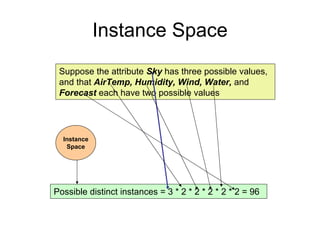

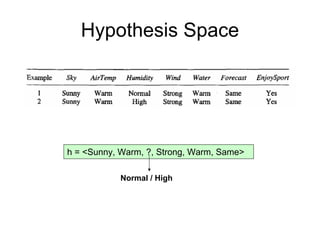

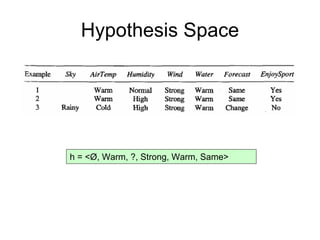

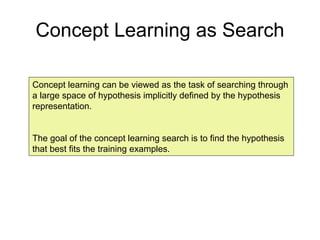

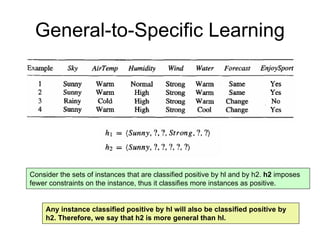

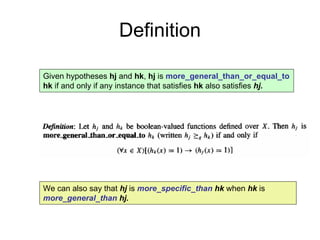

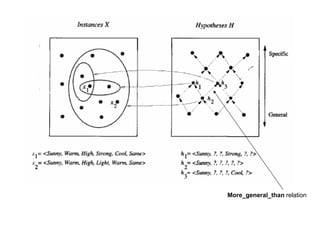

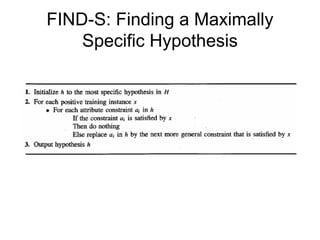

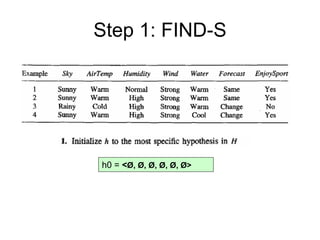

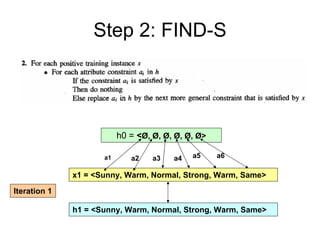

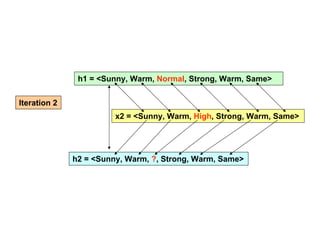

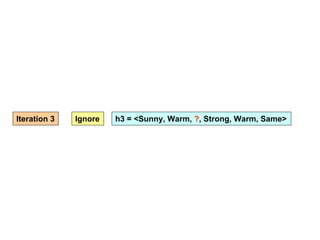

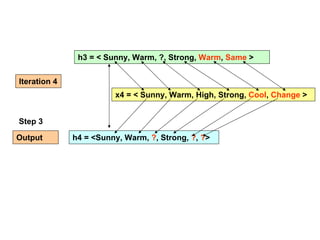

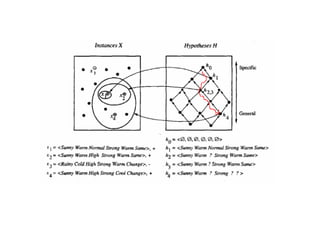

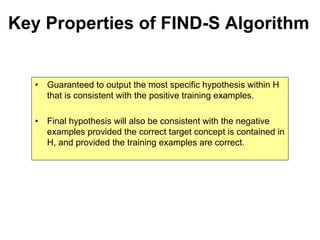

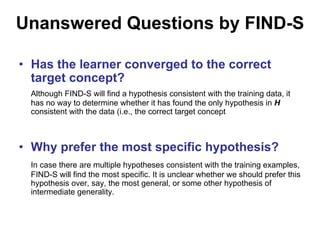

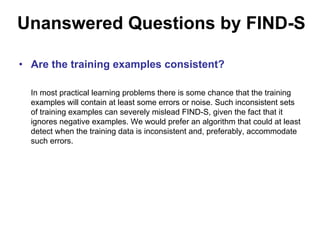

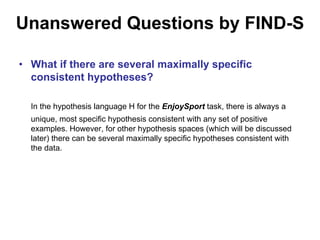

- The document discusses a lecture on machine learning given by Ravi Gupta and G. Bharadwaja Kumar.
- Machine learning allows computers to automatically improve at tasks through experience. It is used for problems where the output is unknown and computation is expensive.
- Machine learning involves training a decision function, or hypothesis, on examples to perform tasks like classification, regression, and clustering. The training experience and the chosen representation both affect whether learning succeeds.
- Key design issues in a machine learning system include how to represent the target function, how to select training examples, and how to update weights to improve performance.
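
The summary mentions representing a target function and updating weights to improve performance, but it does not say which representation or update rule the lecture uses. As a minimal sketch, assume a linear hypothesis and the least-mean-squares (LMS) update rule; the names `predict` and `lms_train`, the learning rate, and the toy data below are all illustrative assumptions, not taken from the slides.

```python
import random


def predict(weights, features):
    """Linear hypothesis: w0 + w1*x1 + ... + wn*xn (assumed representation)."""
    return weights[0] + sum(w * x for w, x in zip(weights[1:], features))


def lms_train(examples, n_features, learning_rate=0.01, epochs=200, seed=0):
    """Fit weights with the LMS rule: w_i <- w_i + eta * (target - prediction) * x_i."""
    rng = random.Random(seed)          # fixed seed so the run is repeatable
    weights = [0.0] * (n_features + 1)  # weights[0] is the bias term
    examples = list(examples)
    for _ in range(epochs):
        rng.shuffle(examples)          # visit training examples in random order
        for features, target in examples:
            error = target - predict(weights, features)
            weights[0] += learning_rate * error
            for i, x in enumerate(features):
                weights[i + 1] += learning_rate * error * x
    return weights


# Hypothetical training experience: noiseless samples of y = 1 + 2*x1 - 3*x2.
examples = [((x1, x2), 1 + 2 * x1 - 3 * x2)
            for x1 in range(-2, 3) for x2 in range(-2, 3)]

weights = lms_train(examples, n_features=2)
print([round(w, 3) for w in weights])
```

Each training example nudges the weights in the direction that reduces the error on that example, which is one concrete way a system can "improve with experience". With different training examples or a different representation, the same loop could converge more slowly or fail to fit the target at all, which is the point the summary makes about training experience and representation.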