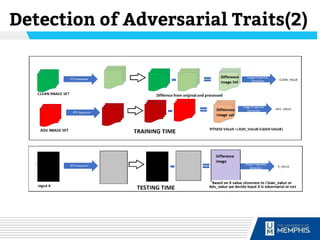

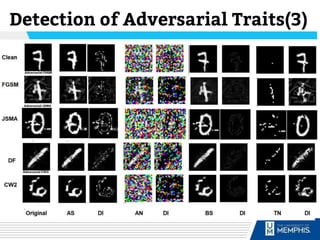

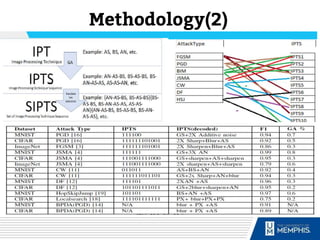

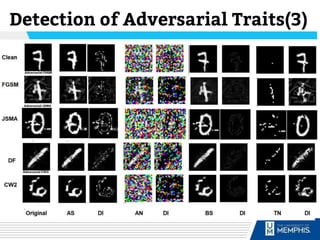

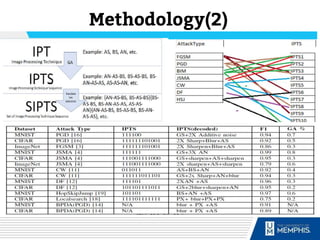

The document discusses methods for detecting adversarial inputs to AI and machine learning systems using image processing techniques. It outlines several types of adversarial attacks, including poisoning, evasion, and Trojan AI, and emphasizes that clean and adversarial images differ in quantifiable noise characteristics. The proposed methodology aims to identify the type of attack effectively while preserving machine learning efficiency and remaining applicable across different attack scenarios.
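The summary does not state how the noise difference is measured. The sketch below is a minimal illustration that assumes one common heuristic, which is not necessarily the presentation's method. It estimates high-frequency noise as the mean absolute residual between a grayscale image and its 3x3 median-filtered version, then flags an input whose score exceeds a threshold. The names `median_filter3`, `noise_score`, `flag_adversarial`, and the `threshold` parameter are hypothetical.

```python
from statistics import median
from typing import List

# Hypothetical representation: grayscale image as rows of floats in [0, 1].
Image = List[List[float]]


def median_filter3(img: Image) -> Image:
    """3x3 median filter; border pixels use only the neighbours that exist."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = [
                img[j][i]
                for j in range(max(0, y - 1), min(h, y + 2))
                for i in range(max(0, x - 1), min(w, x + 2))
            ]
            out[y][x] = median(window)
    return out


def noise_score(img: Image) -> float:
    """Mean absolute residual between the image and its median-filtered copy.

    Assumption: adversarial perturbations add high-frequency noise that the
    median filter removes, so a larger residual suggests a perturbed input.
    """
    smooth = median_filter3(img)
    h, w = len(img), len(img[0])
    total = sum(abs(img[y][x] - smooth[y][x]) for y in range(h) for x in range(w))
    return total / (h * w)


def flag_adversarial(img: Image, threshold: float) -> bool:
    """Flag the input if its noise score exceeds a (hypothetical) threshold."""
    return noise_score(img) > threshold


if __name__ == "__main__":
    clean = [[0.5] * 8 for _ in range(8)]
    spiked = [row[:] for row in clean]
    spiked[3][4] = 0.6  # a single perturbed pixel
    print(noise_score(clean), noise_score(spiked))
```

In practice the threshold would have to be calibrated on known-clean images. A median filter can also miss smooth, low-amplitude perturbations, so this score is at best one feature among several for distinguishing attack types.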