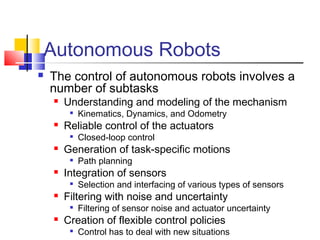

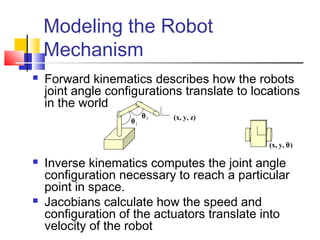

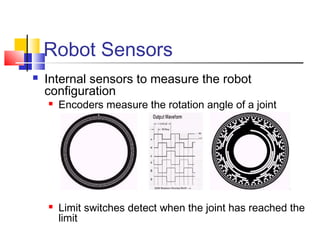

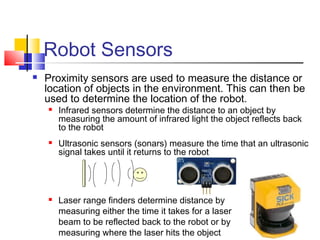

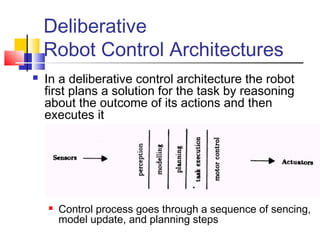

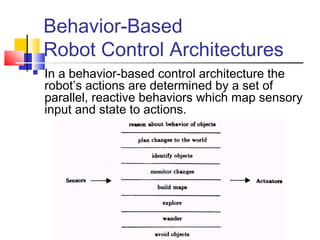

This document is a final report on automation and robotics, submitted by Truong Ha Anh to their advisor. The report gives an overview of automation and robotics in intelligent environments, including how robots can be used for home automation, personal assistance, cleaning, and security. It also discusses autonomous robot control and its challenges, such as dealing with uncertainty. Key topics include modeling robot mechanisms, sensor-driven control, deliberative and behavior-based control architectures, and the development of intuitive human-robot interfaces.