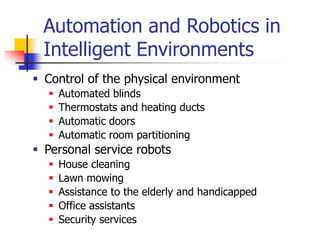

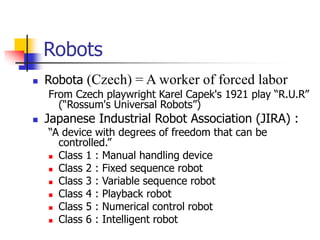

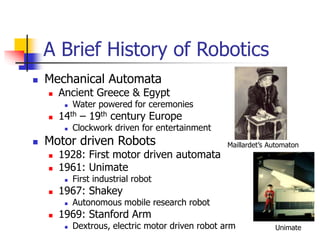

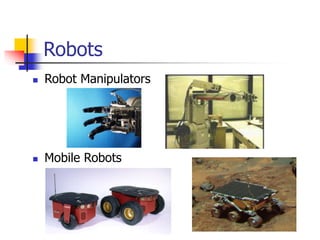

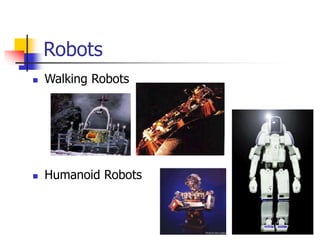

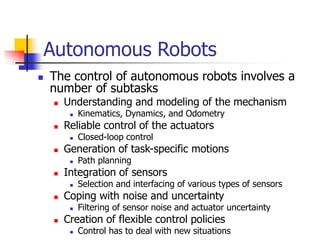

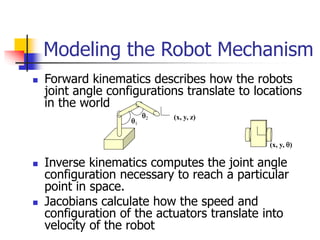

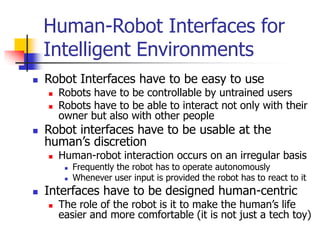

This document discusses smart home technologies and home automation with robotics. It describes how robots and automated devices can carry out functions in the home, provide services to inhabitants, and execute decisions made by decision makers. It covers types of automation such as automated blinds and doors, as well as personal service robots for tasks such as cleaning, maintenance, and assistance. The document also provides background on robotics, including definitions, classifications of robots, the challenges of using traditional industrial robots in homes, and the requirements for robots in intelligent environments.