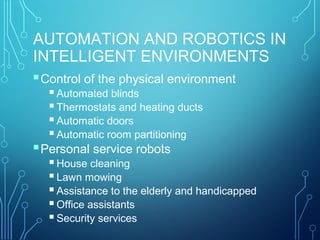

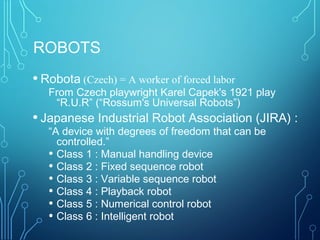

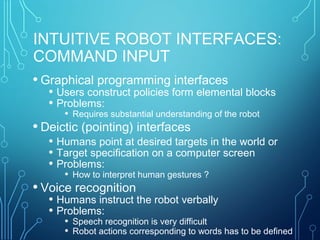

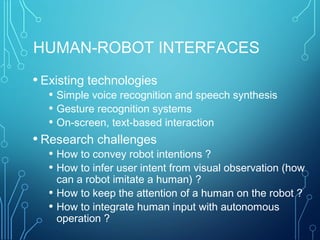

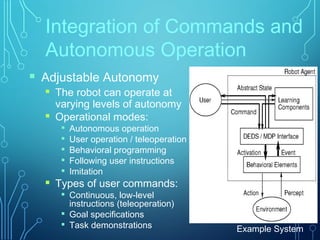

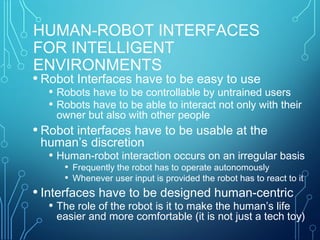

This document discusses smart home technologies, including automation and robotics. It describes how intelligent environments aim to improve the inhabitants' experience by automating functions, providing services, and making decisions that are carried out by executing commands. Automation examples include control of the physical environment, such as blinds, thermostats, and doors. Robotics examples include personal service robots for tasks such as cleaning, lawn mowing, and assisting the elderly. The document also discusses requirements for autonomous robots in intelligent environments, such as adaptability, intuitive interfaces for humans, and the integration of commands with autonomous operation.