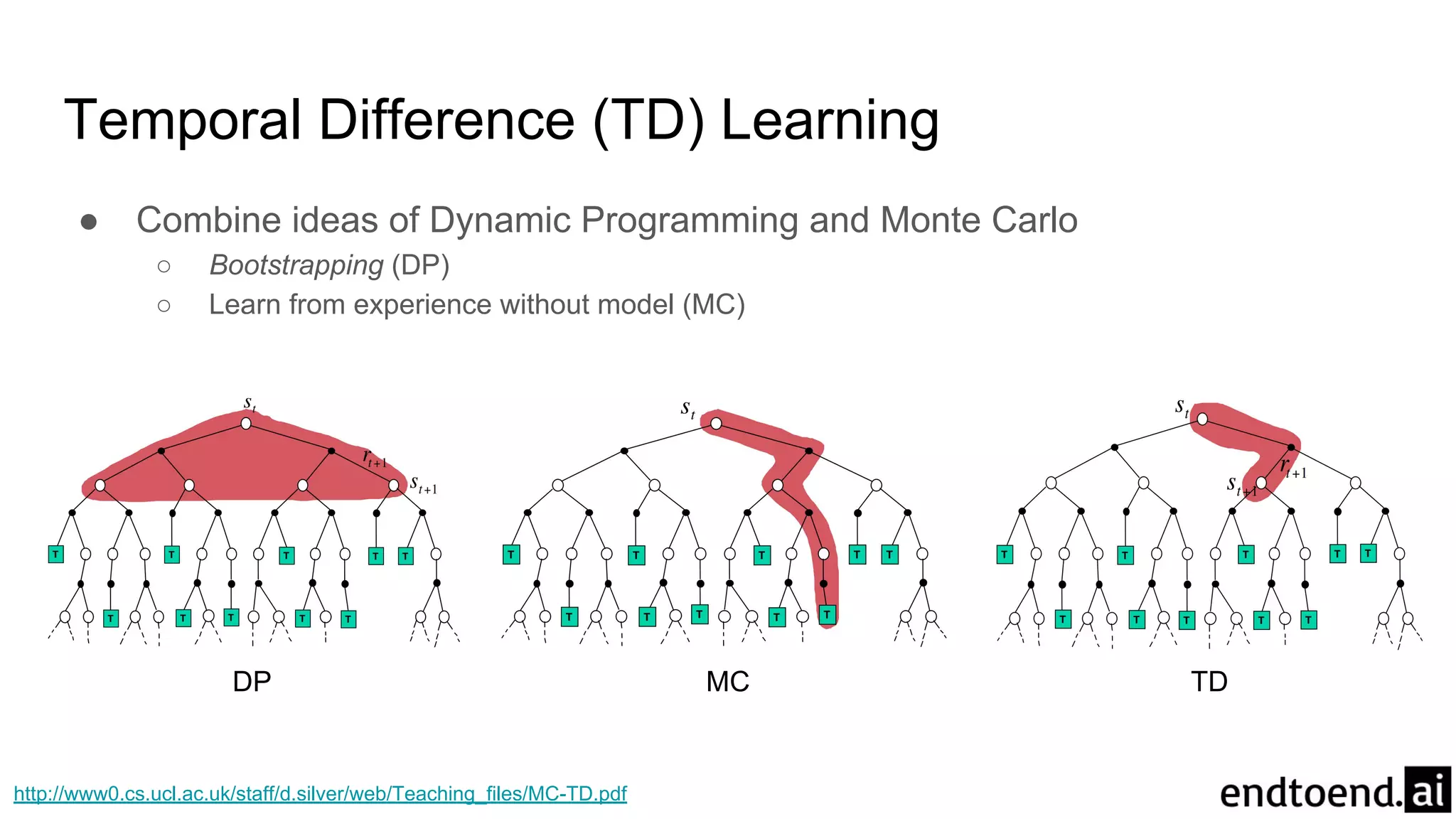

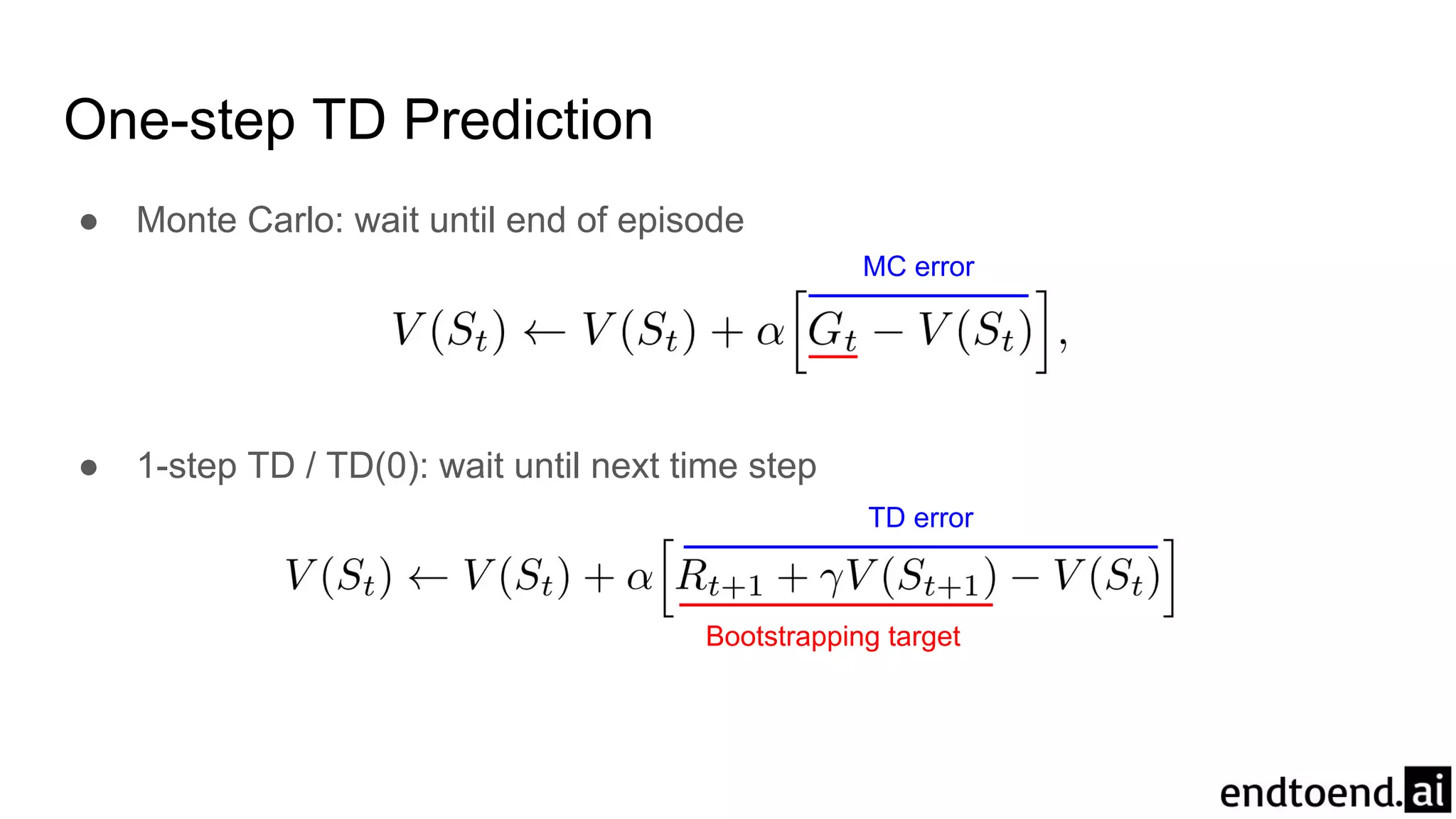

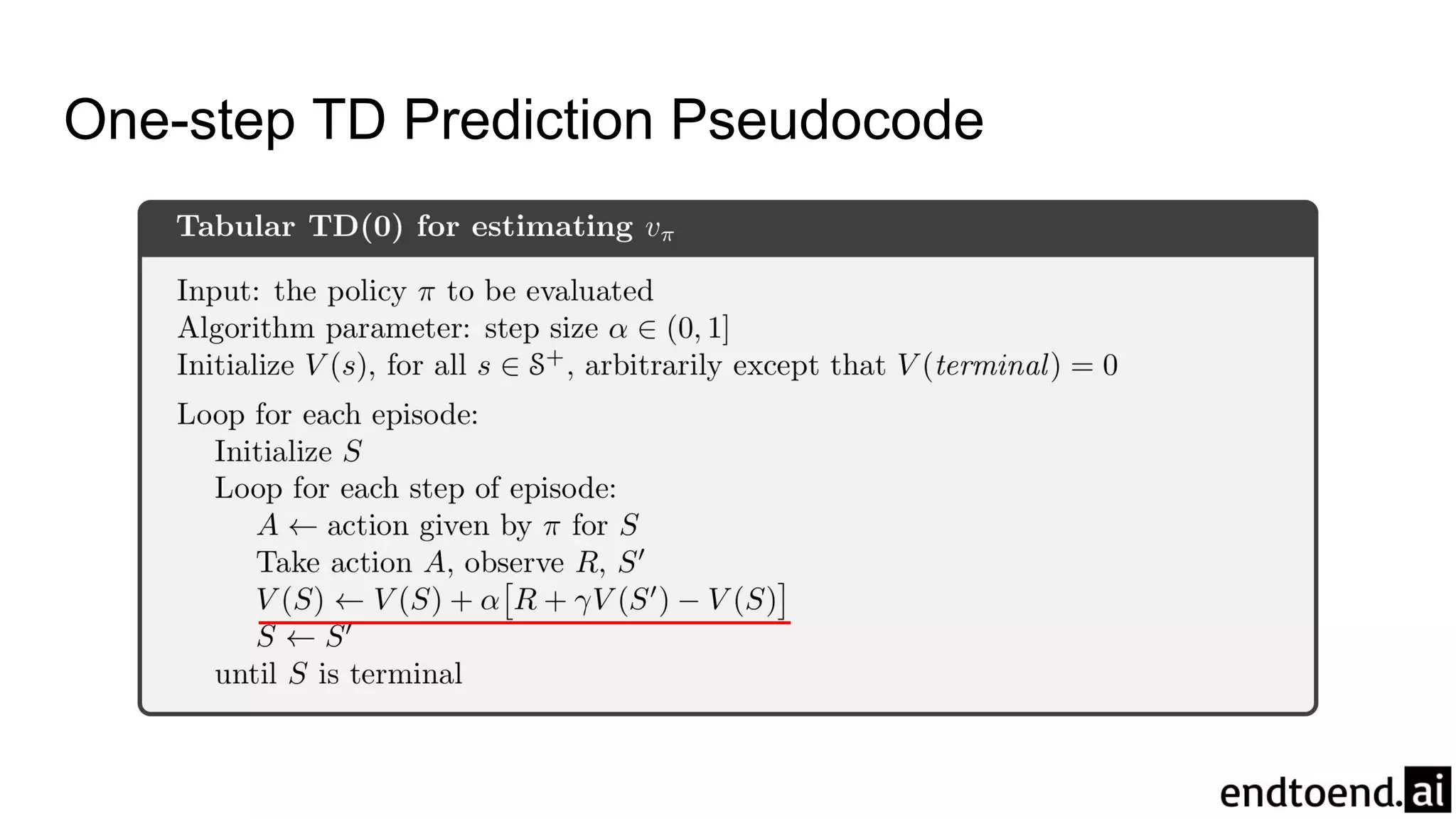

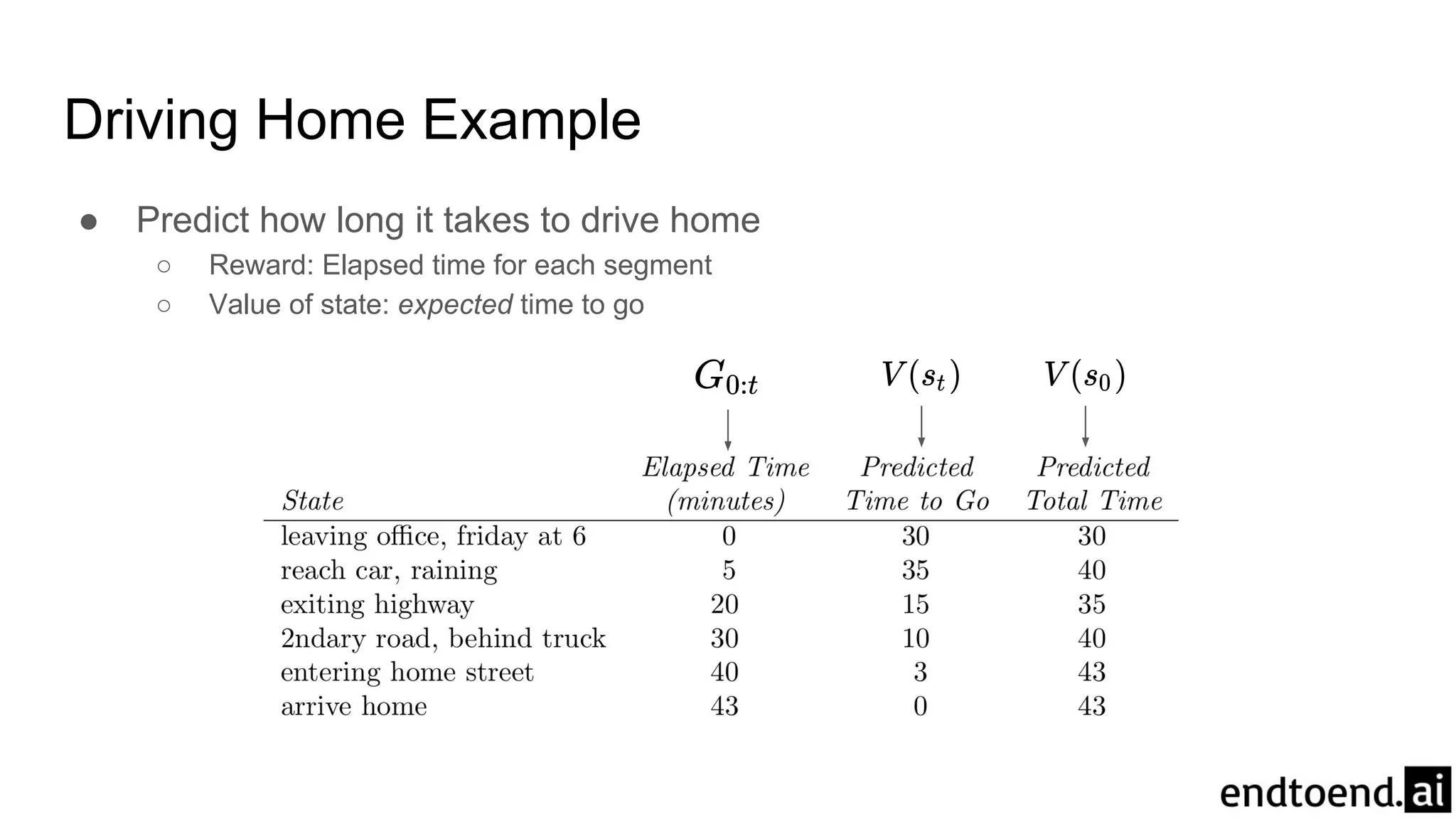

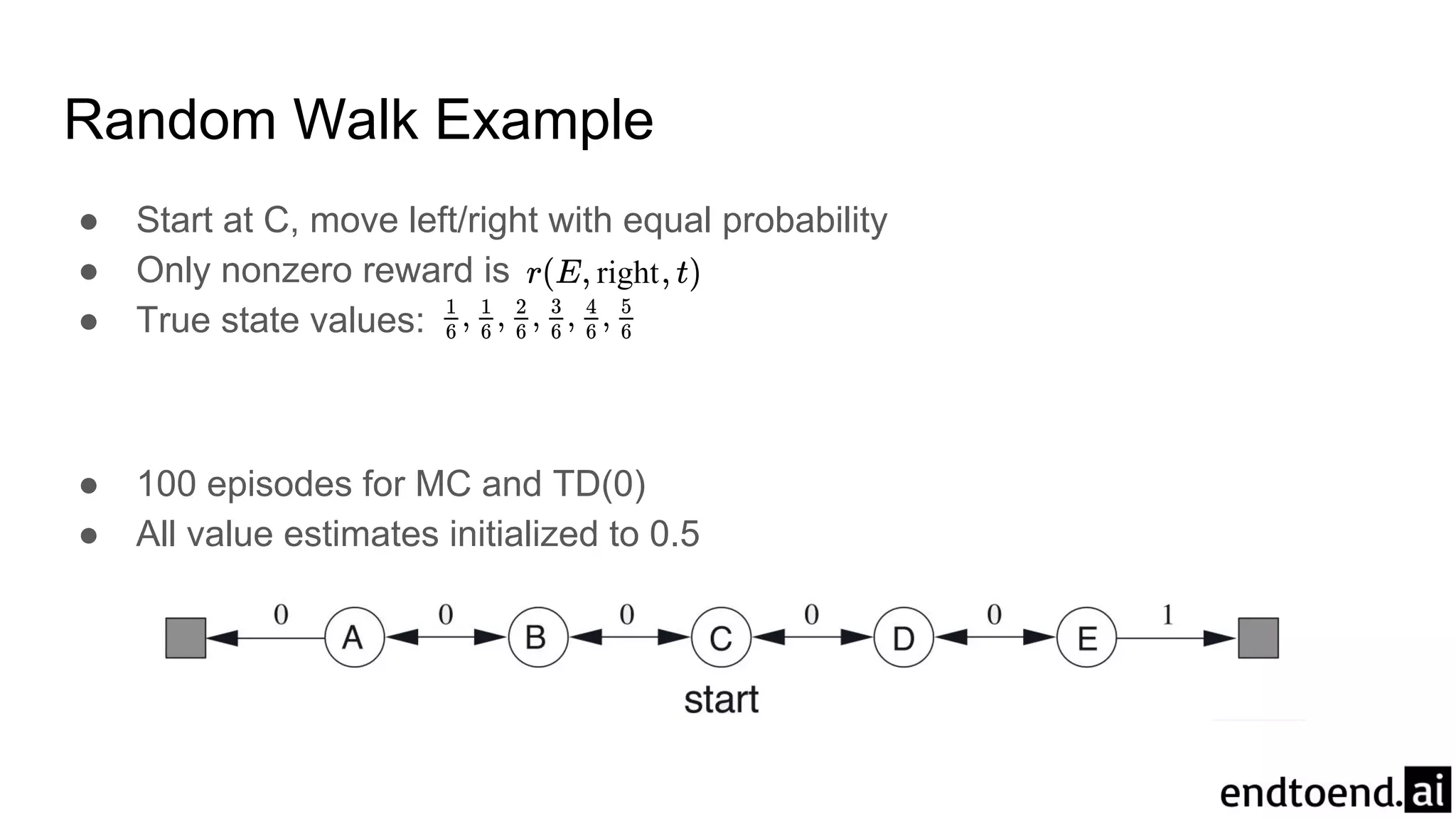

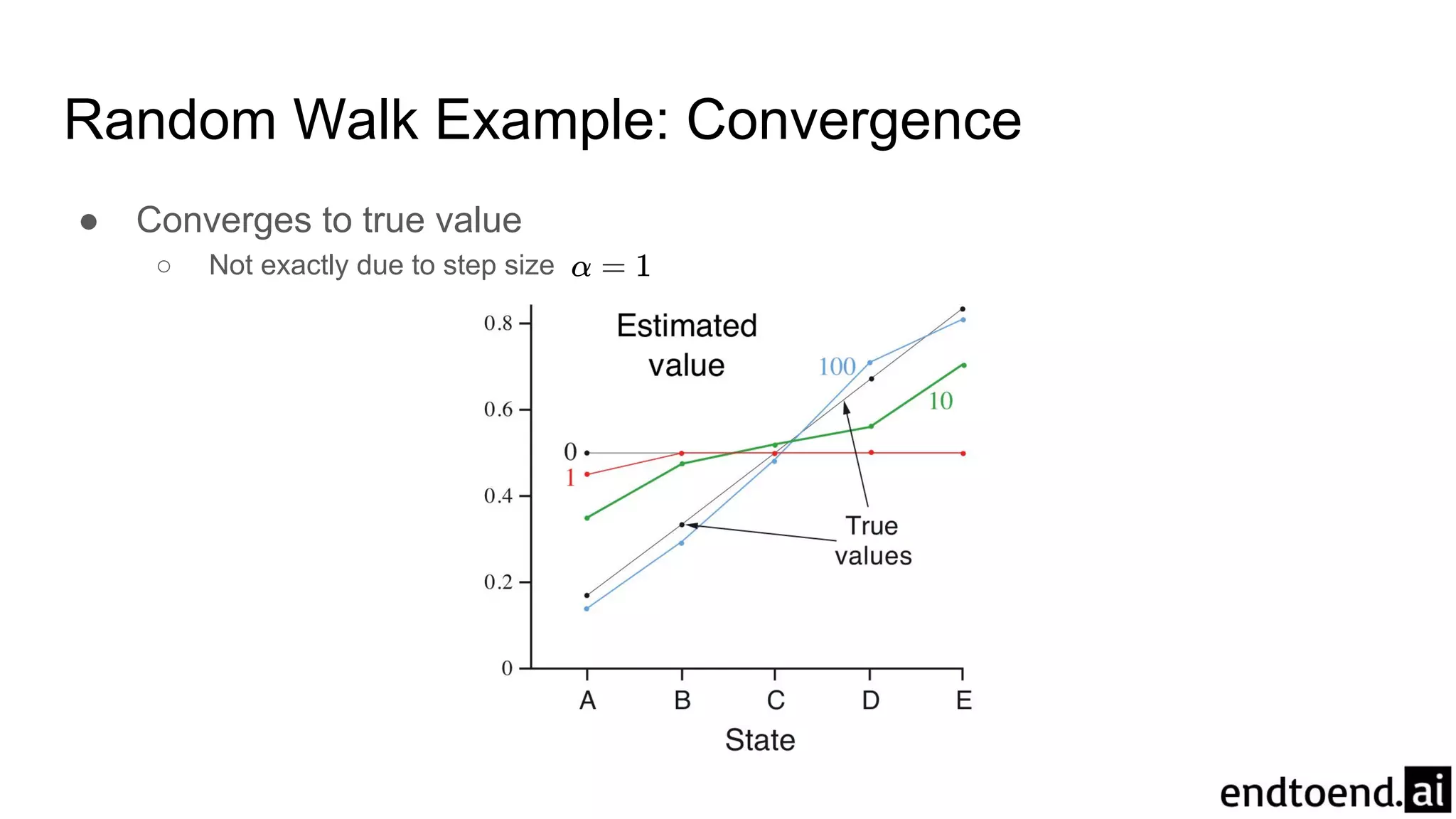

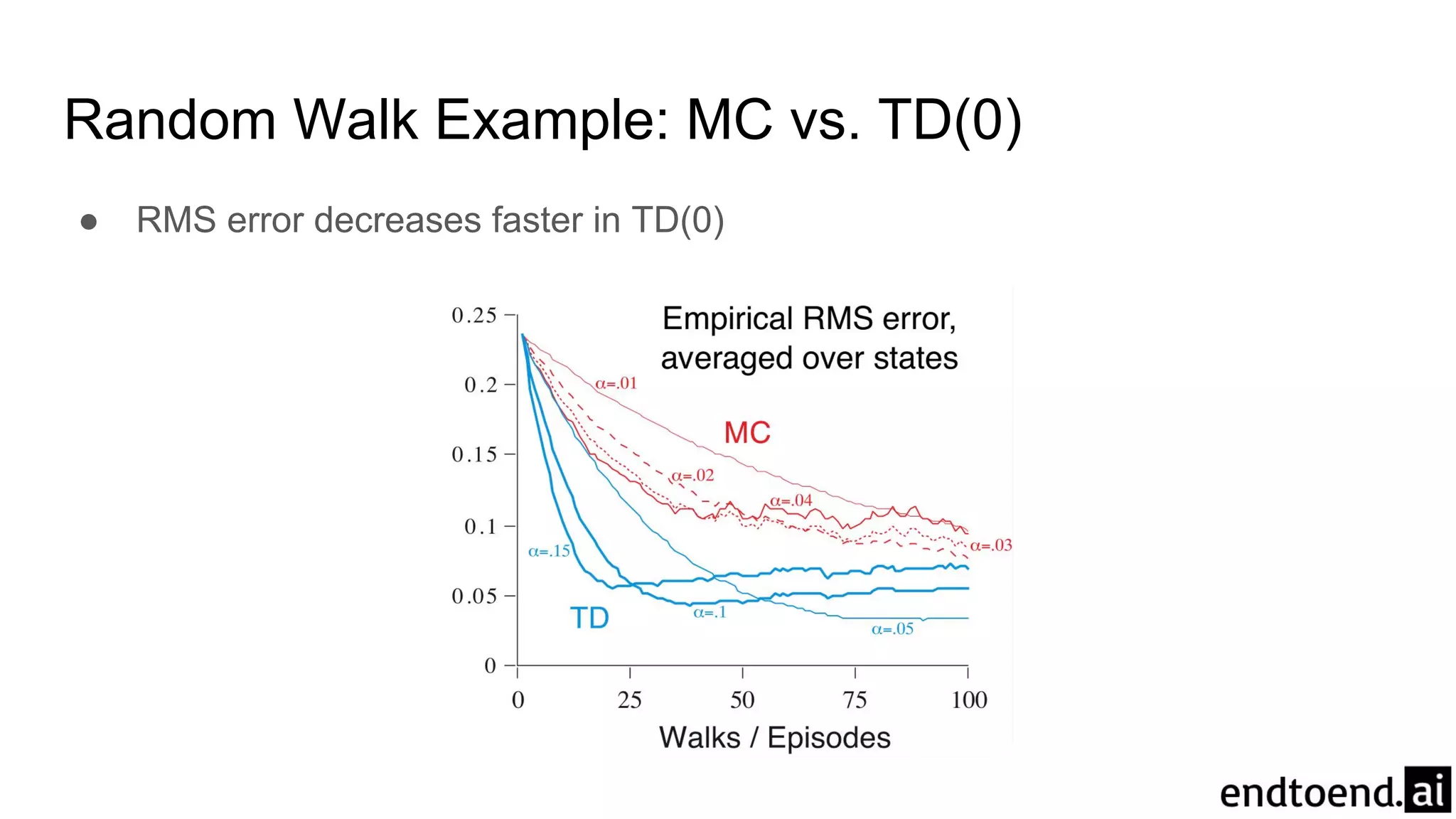

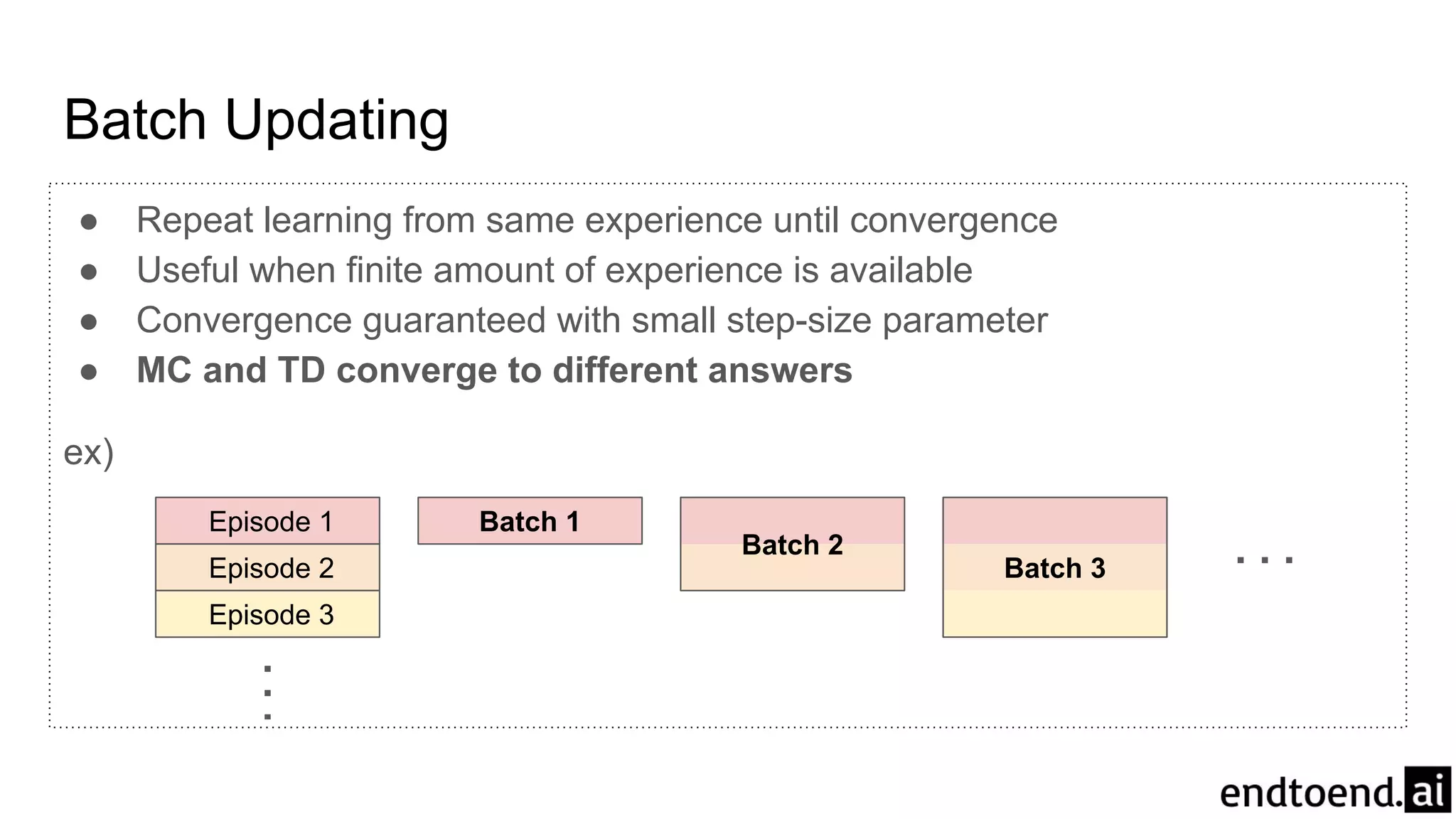

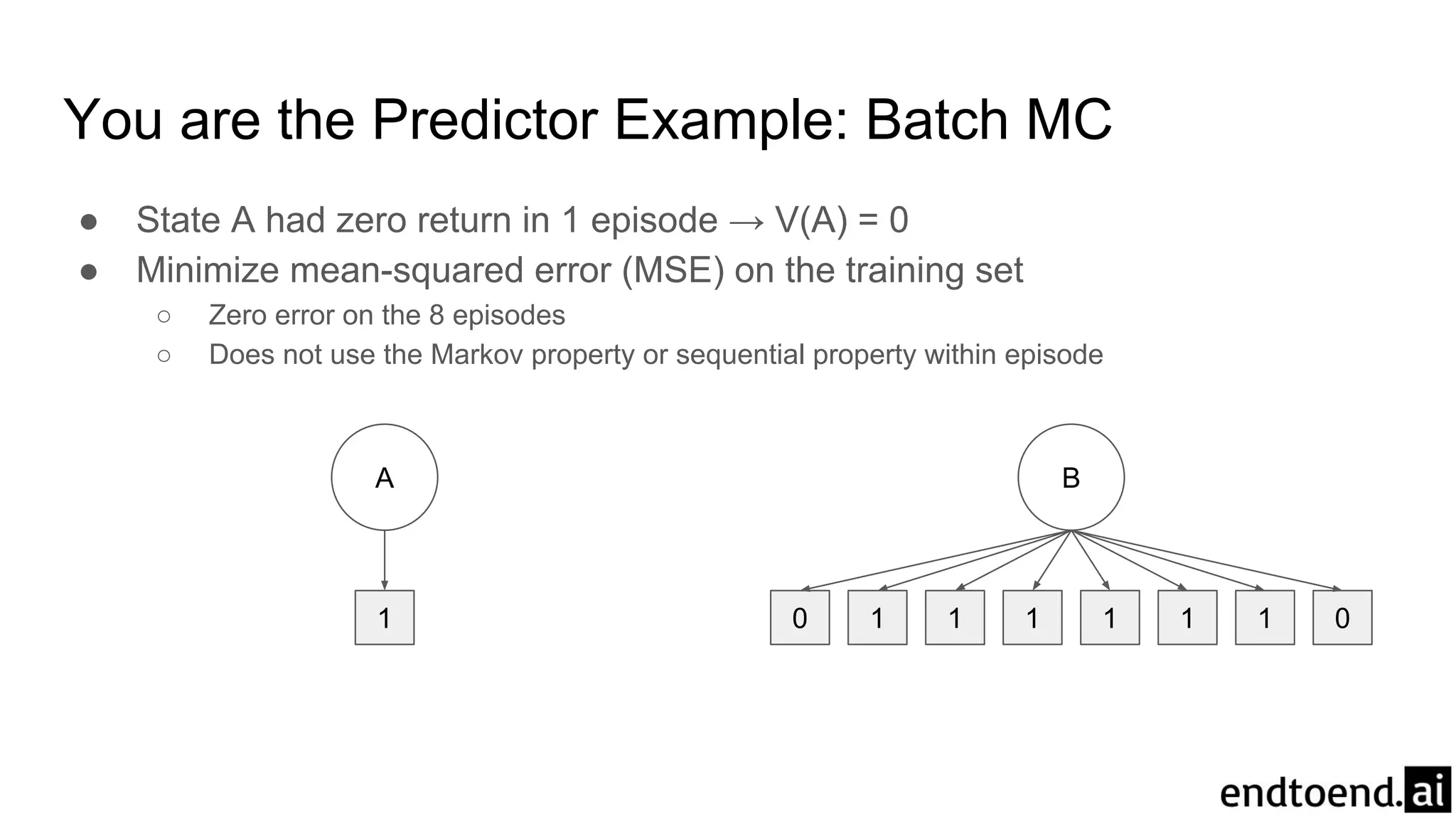

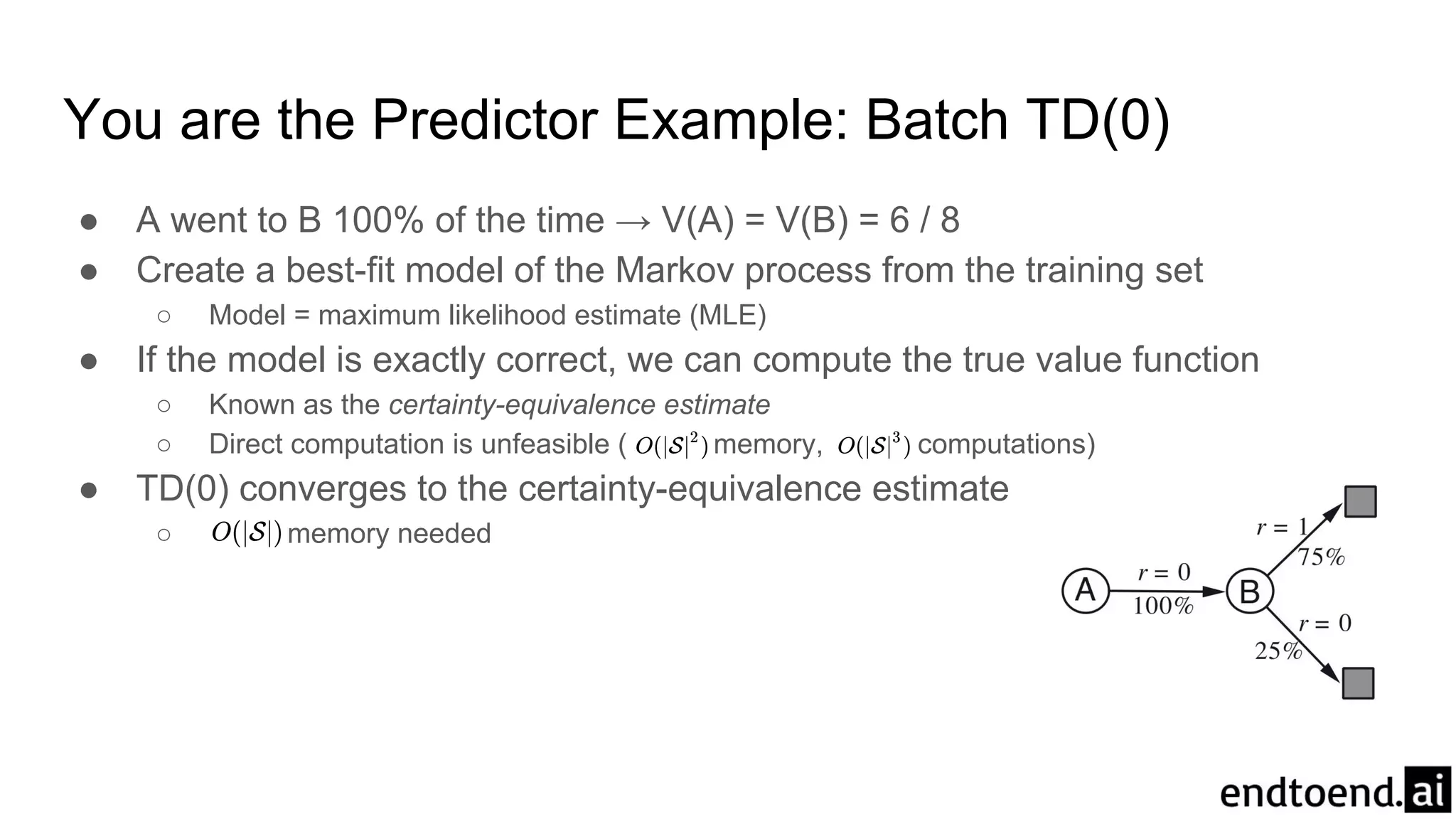

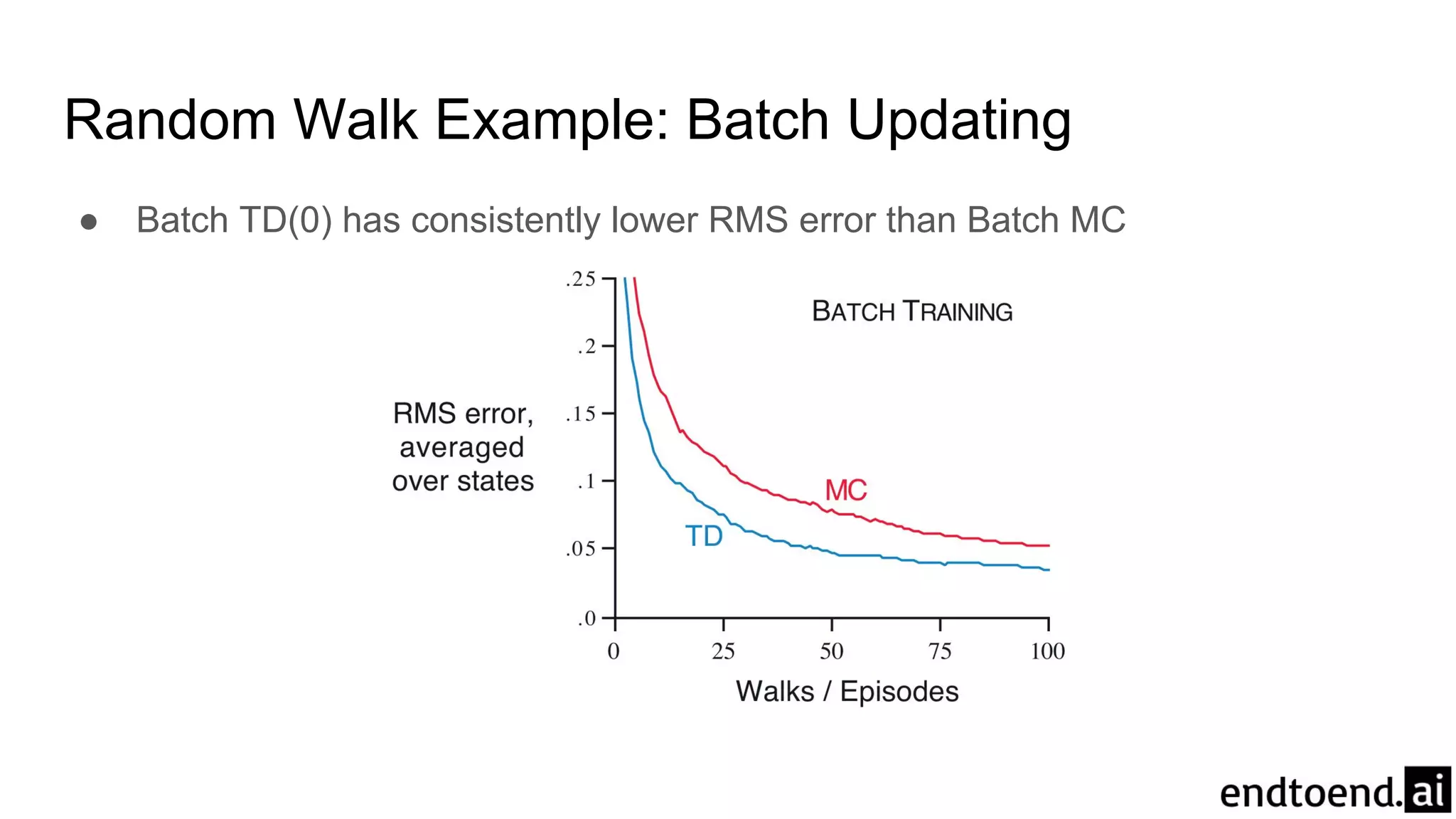

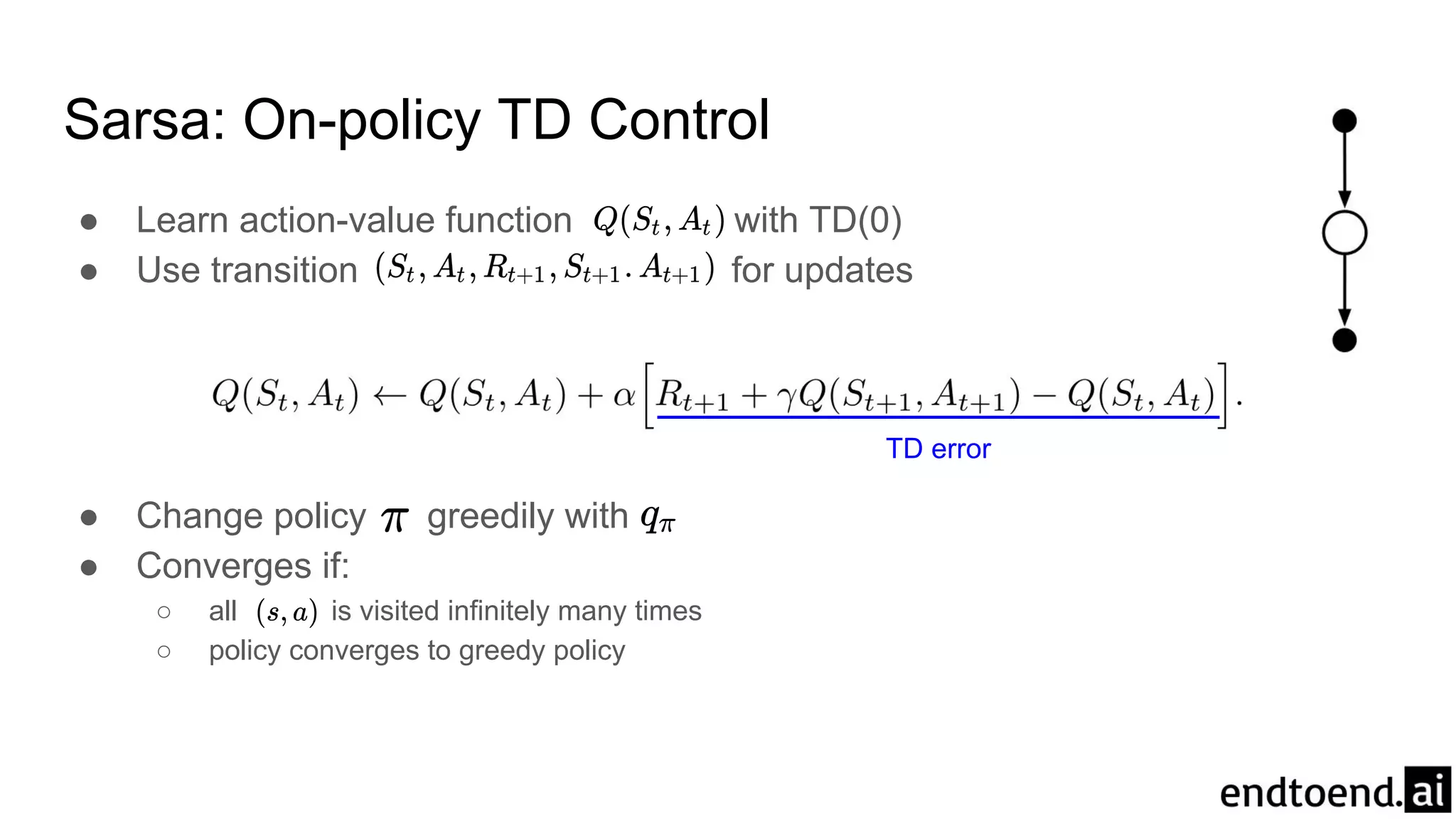

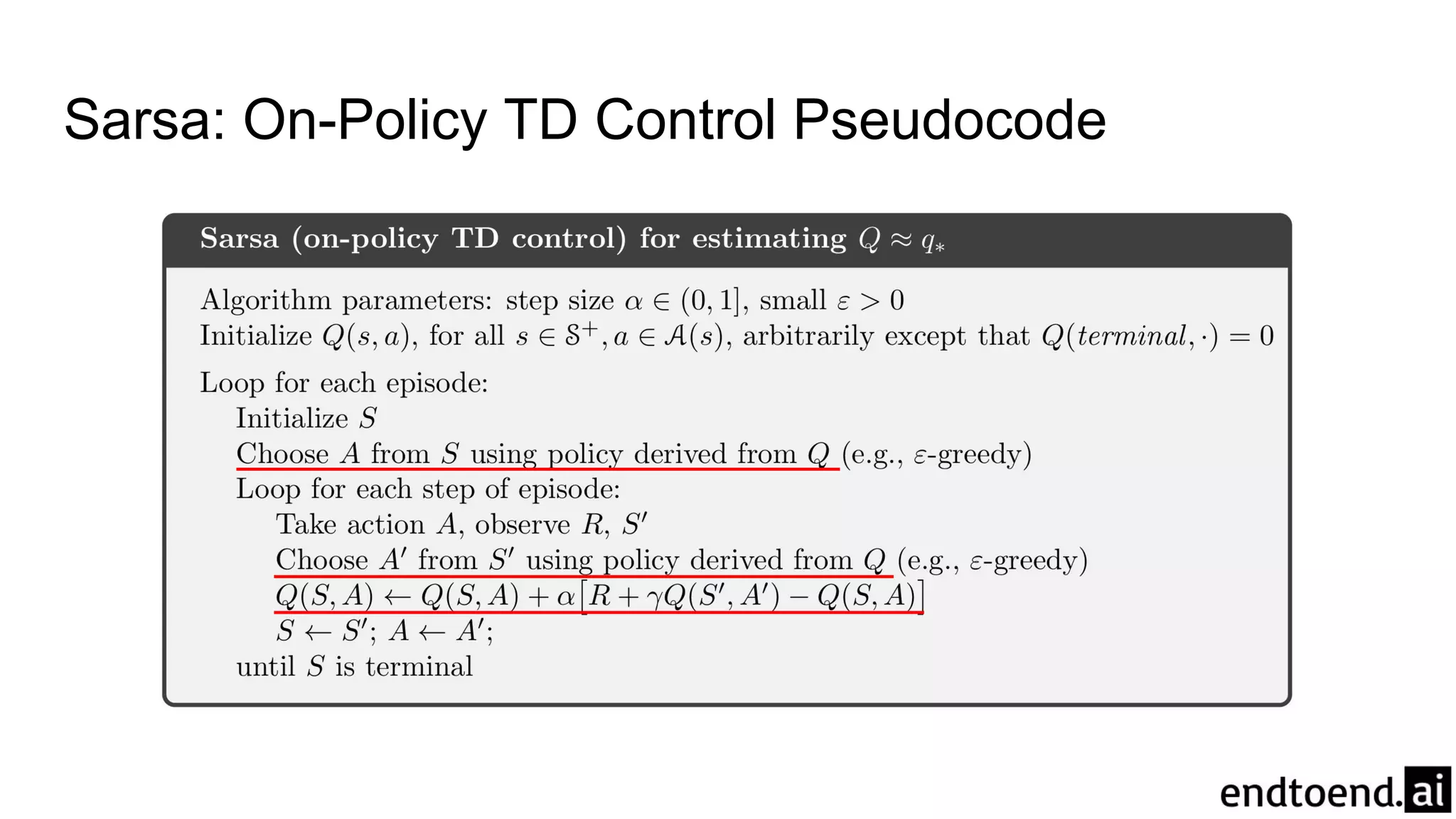

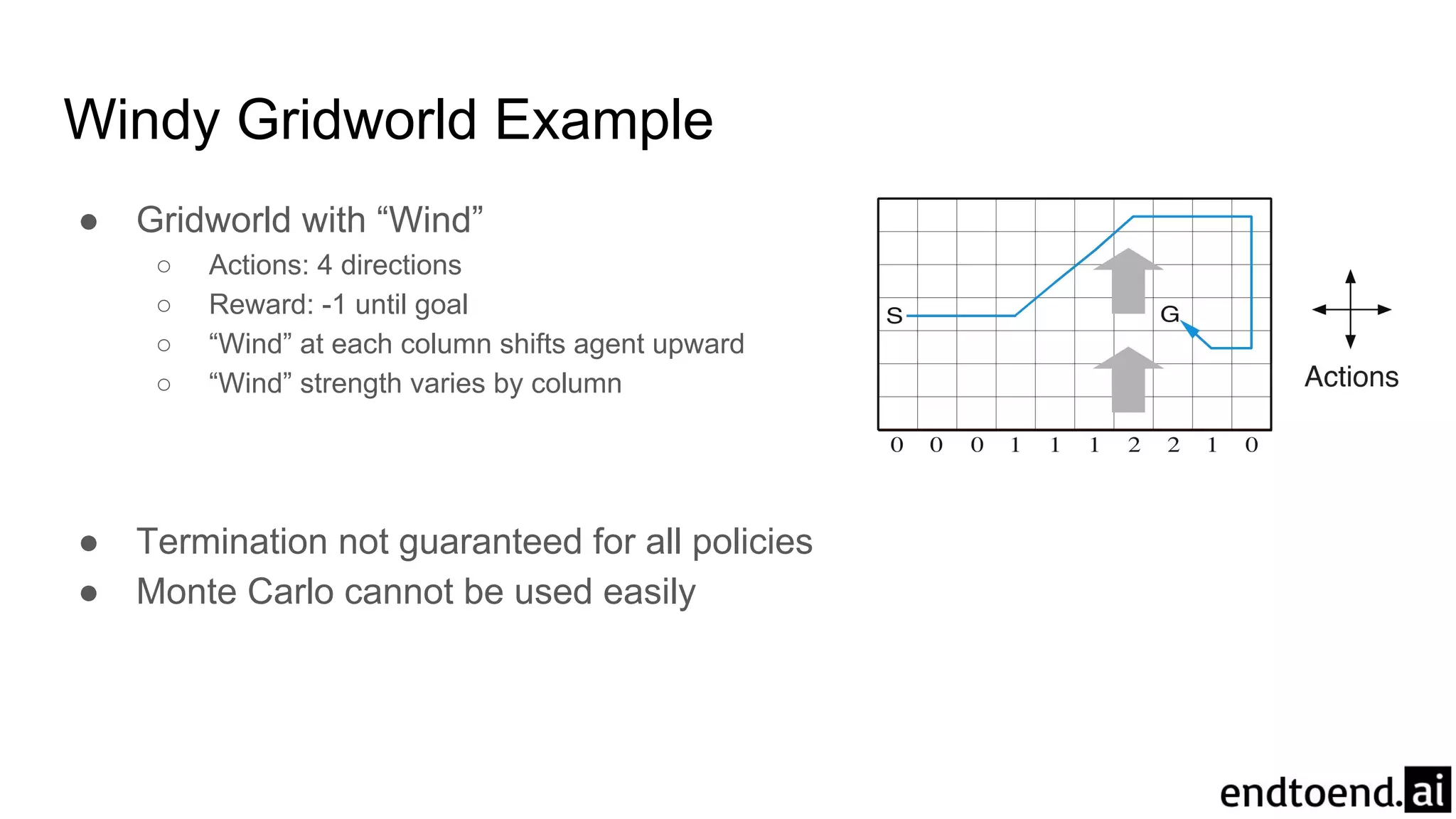

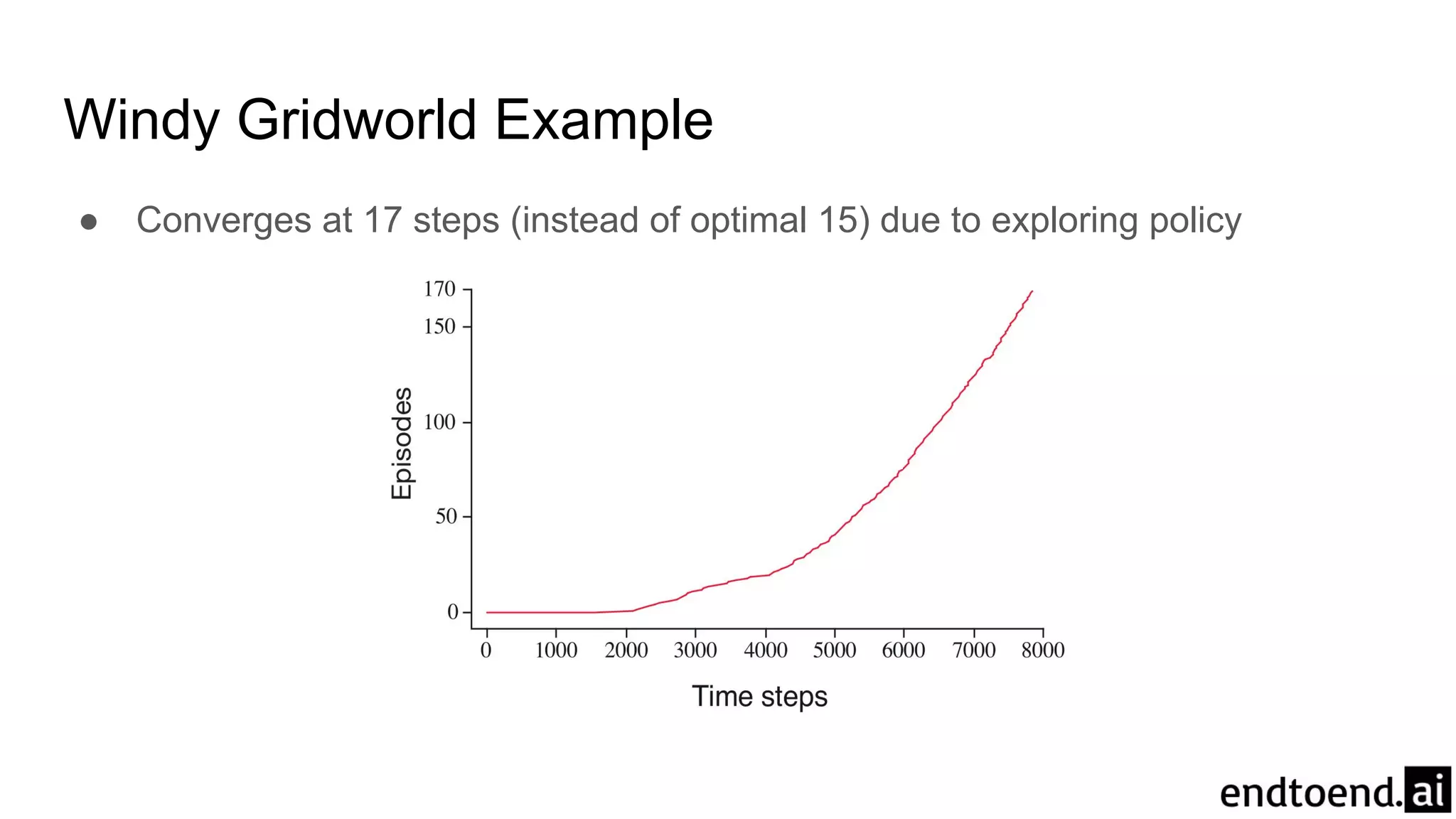

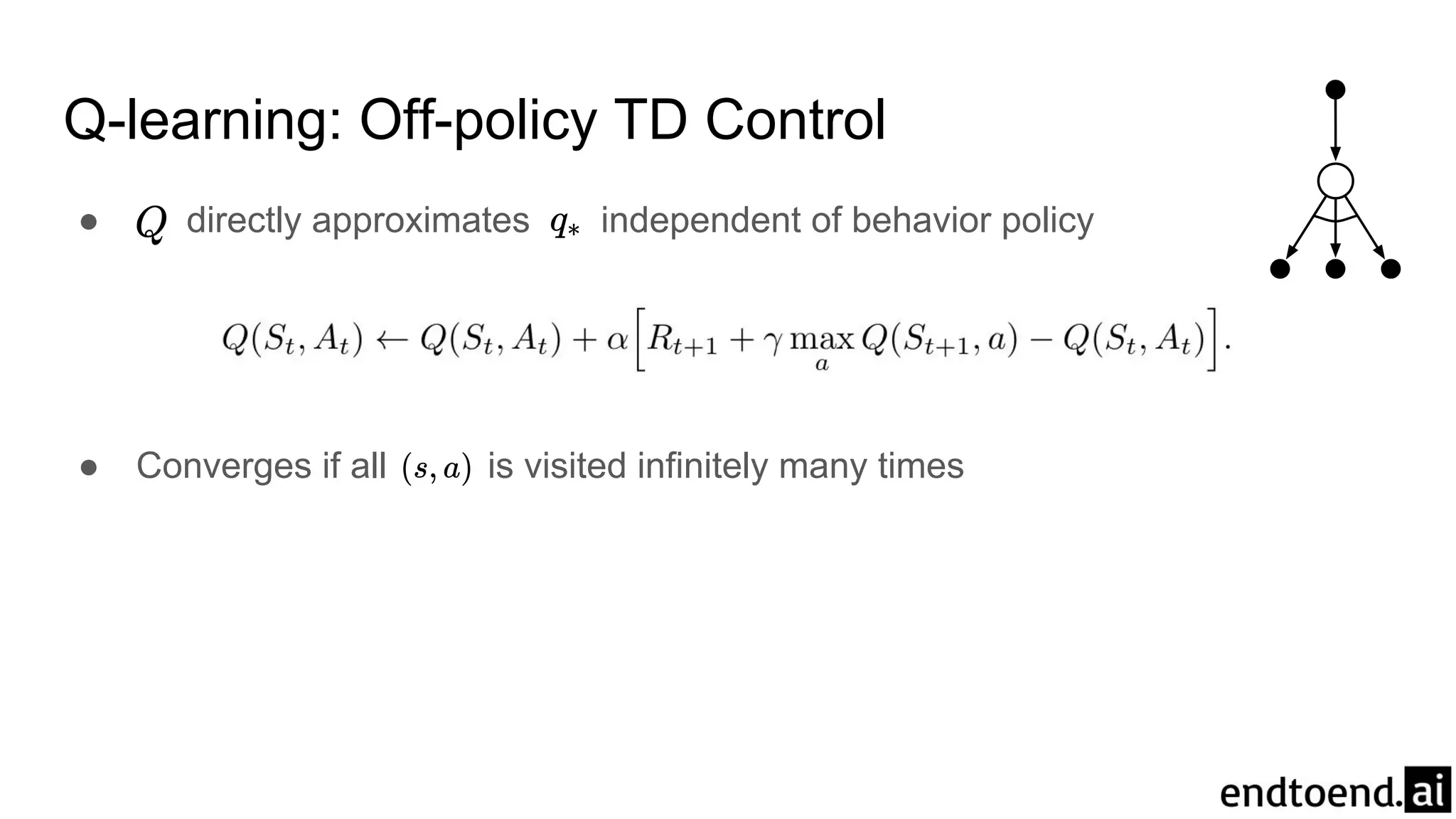

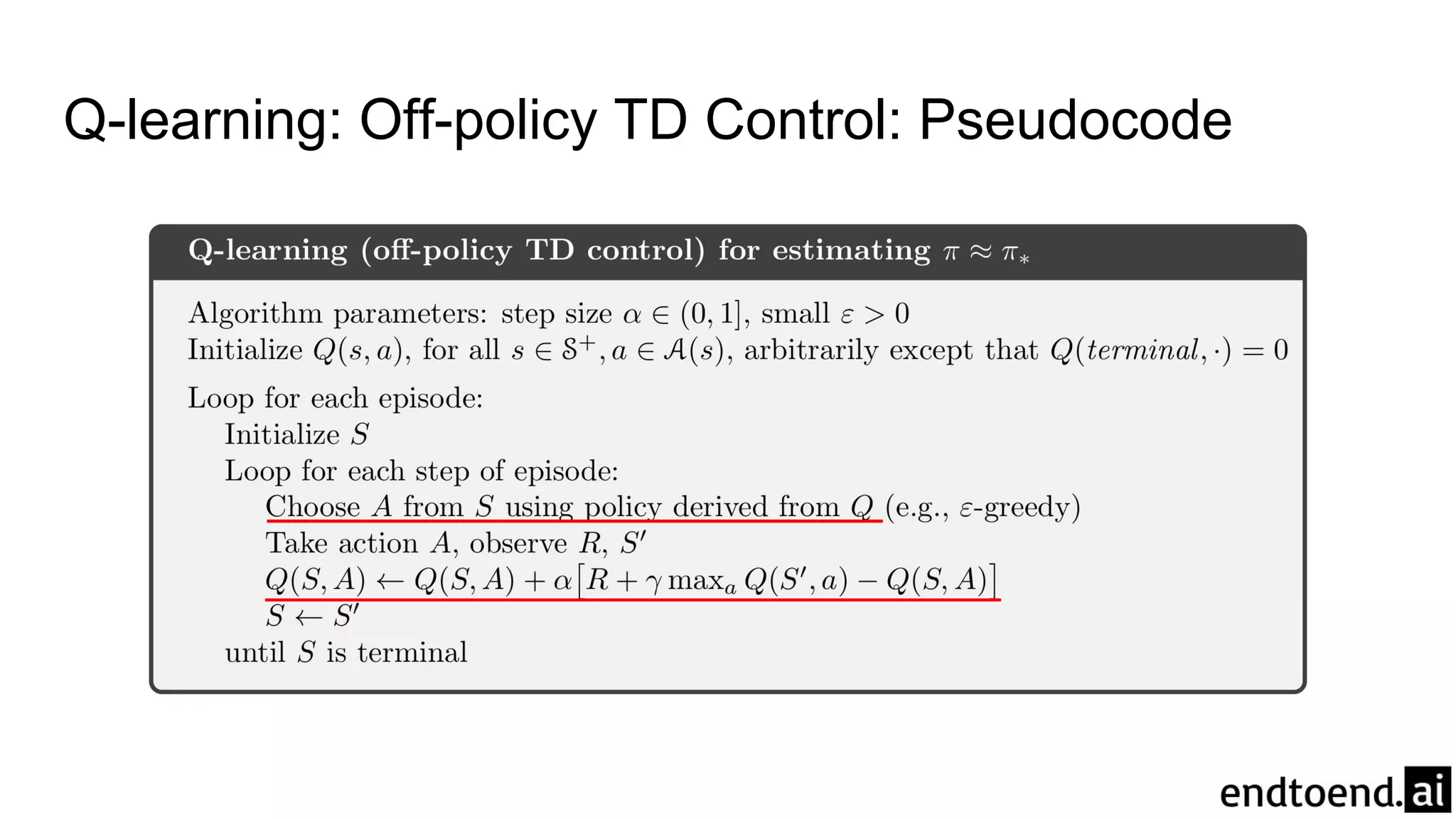

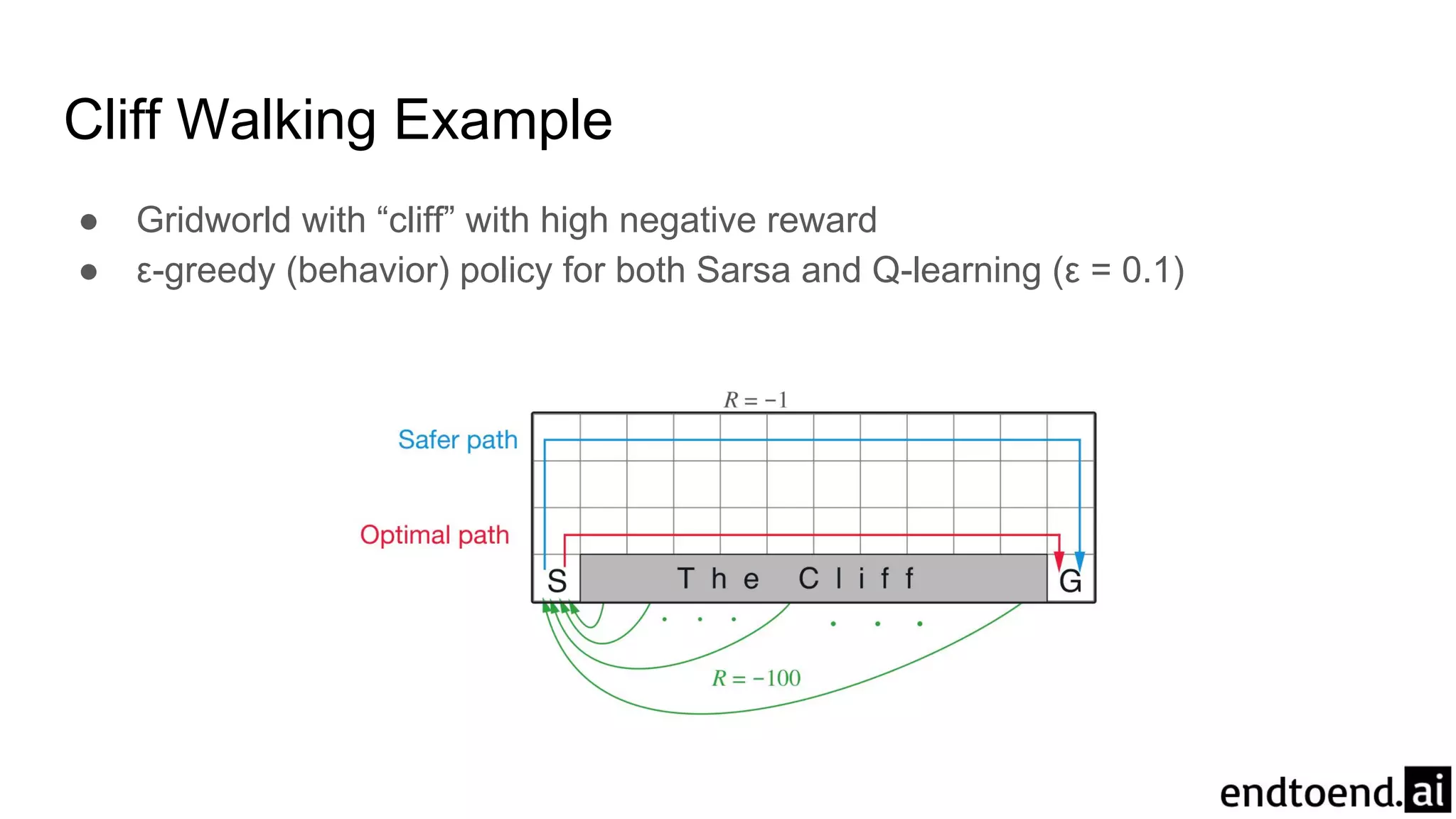

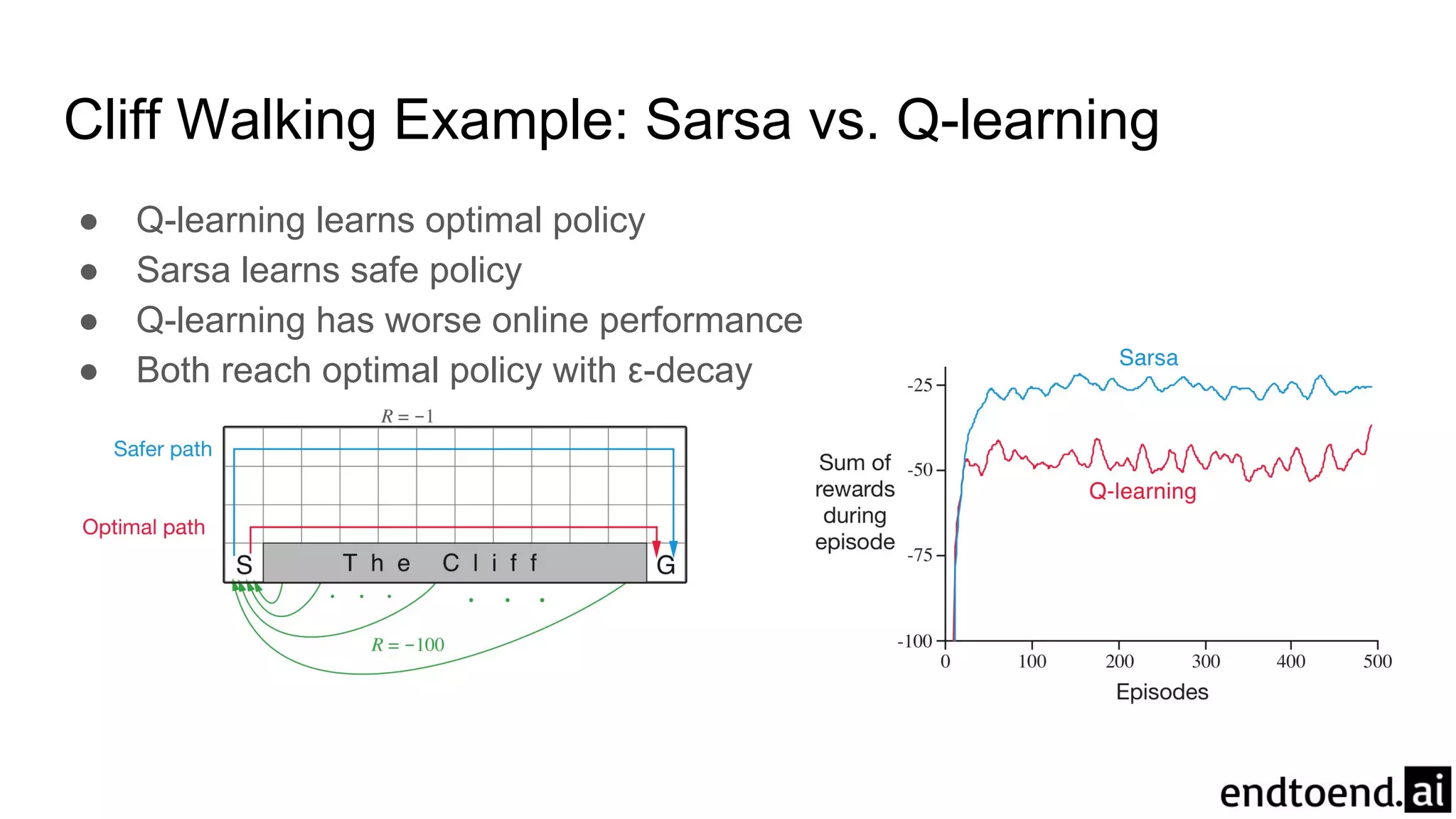

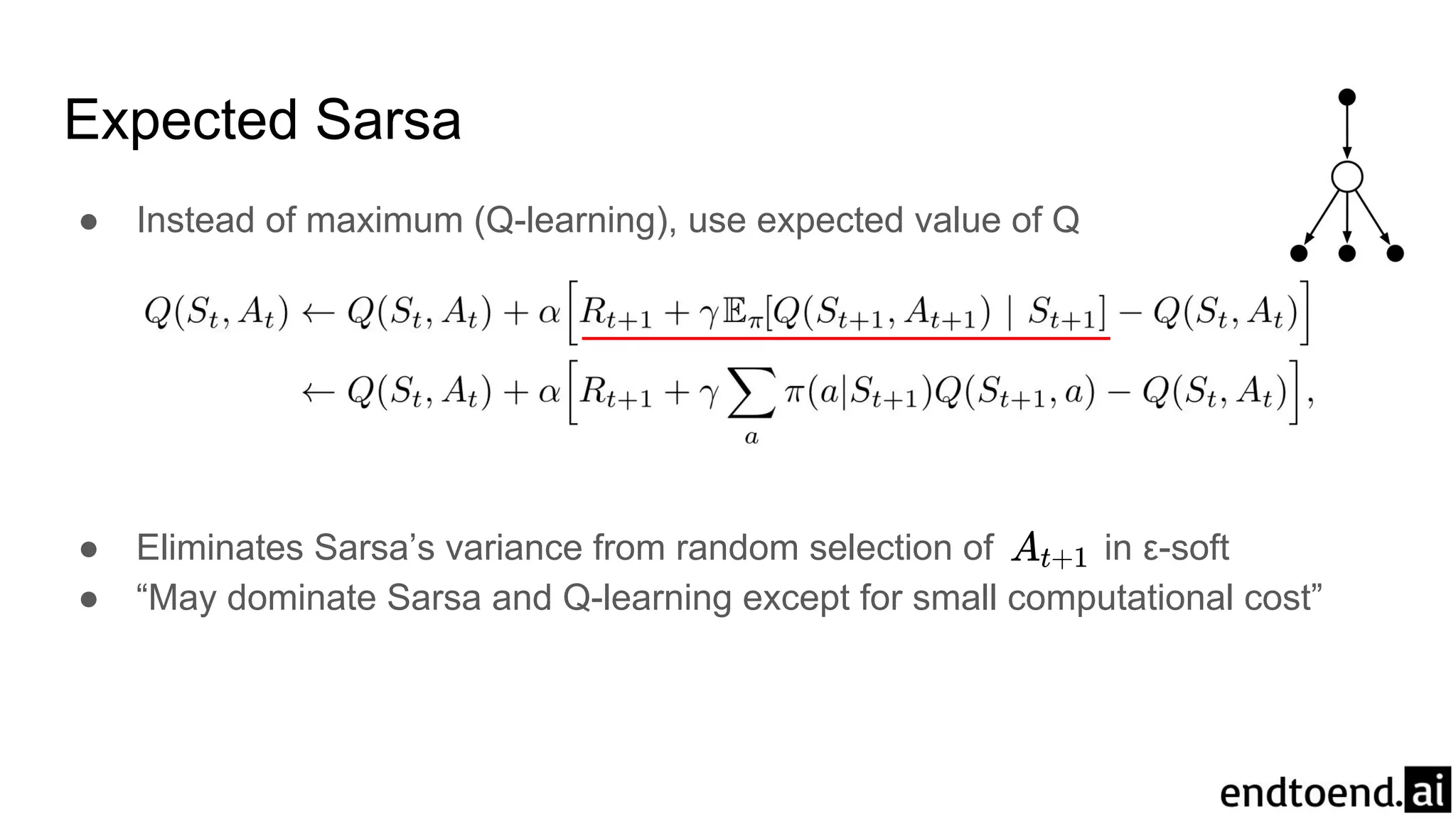

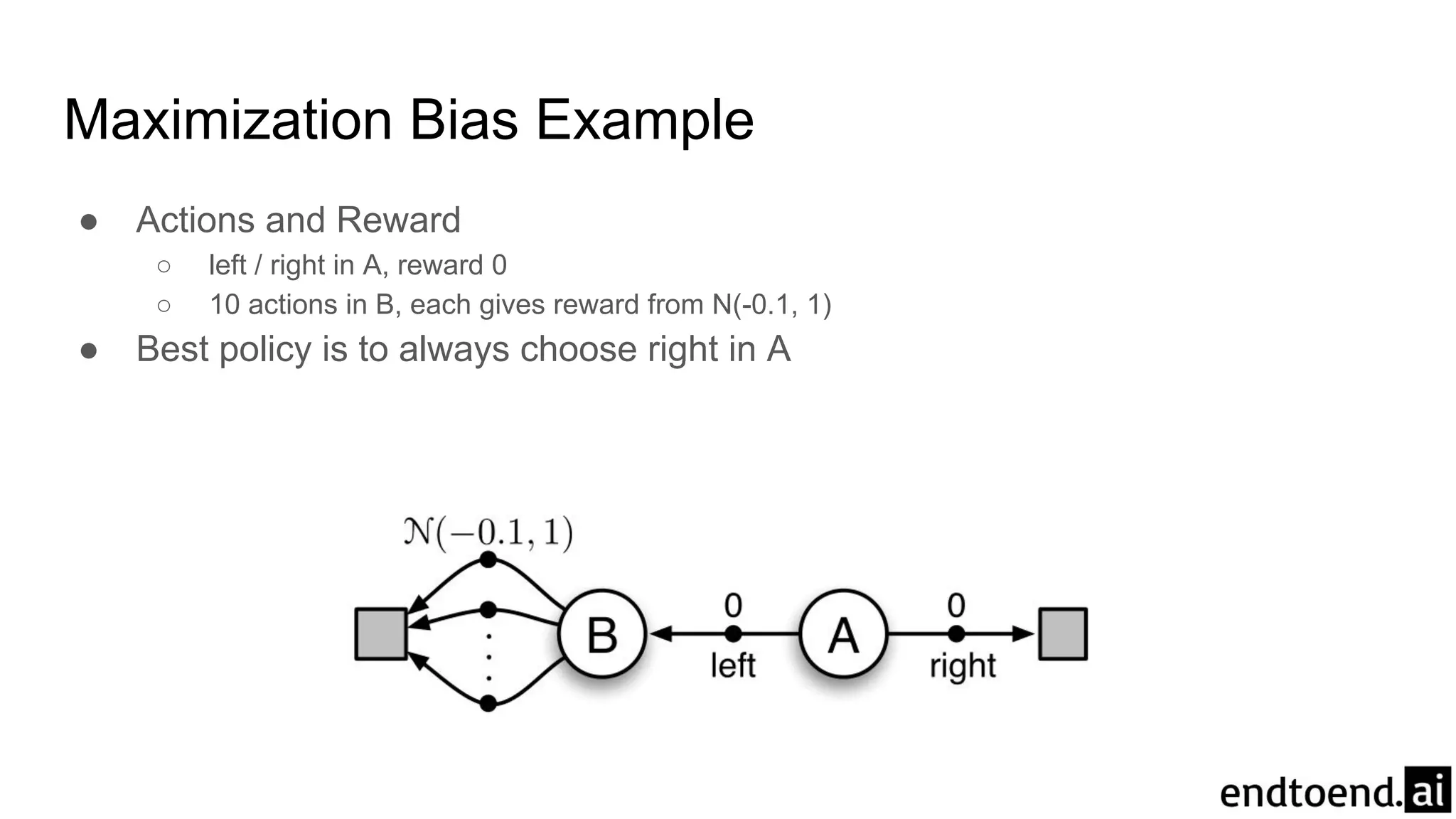

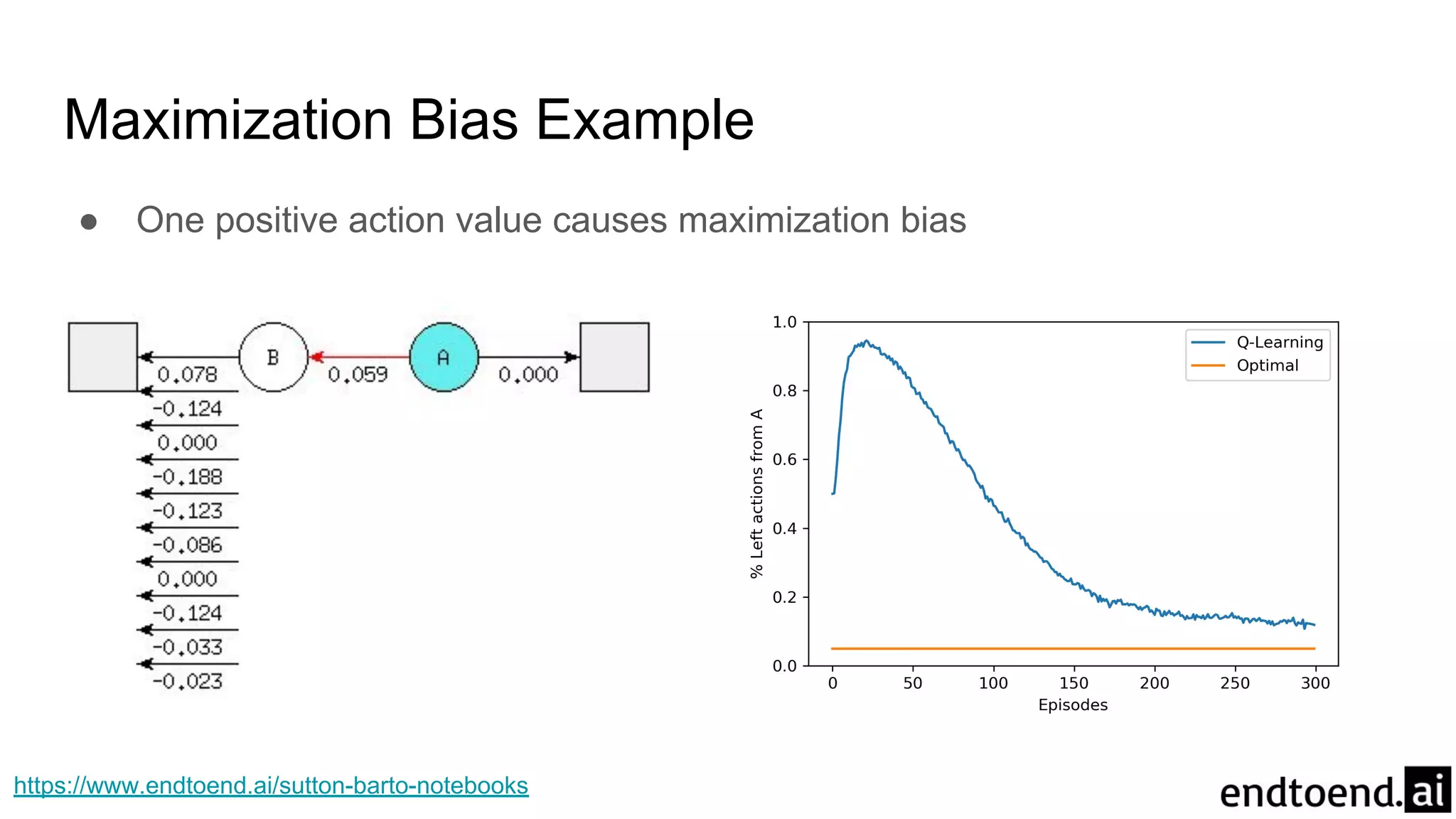

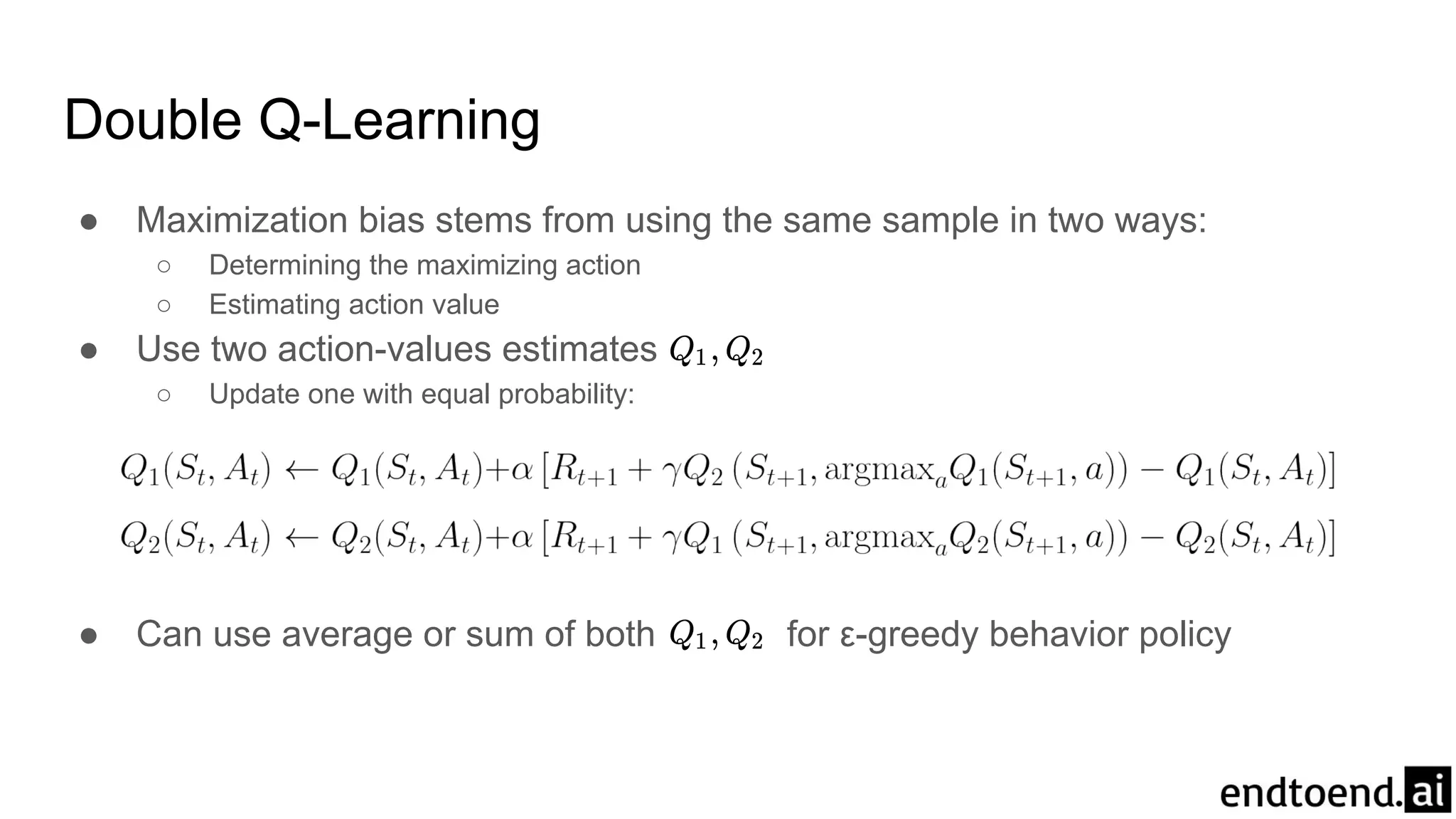

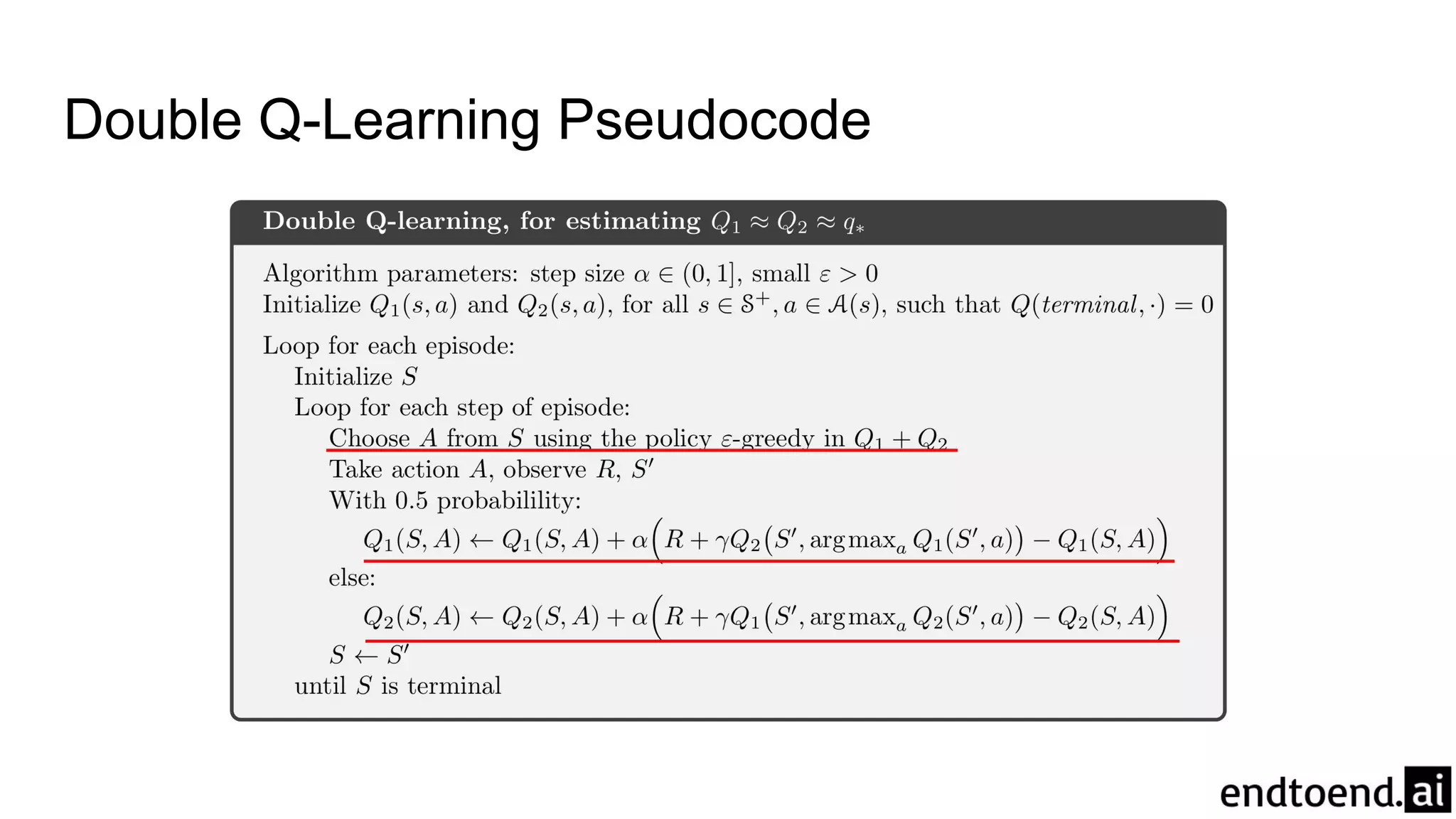

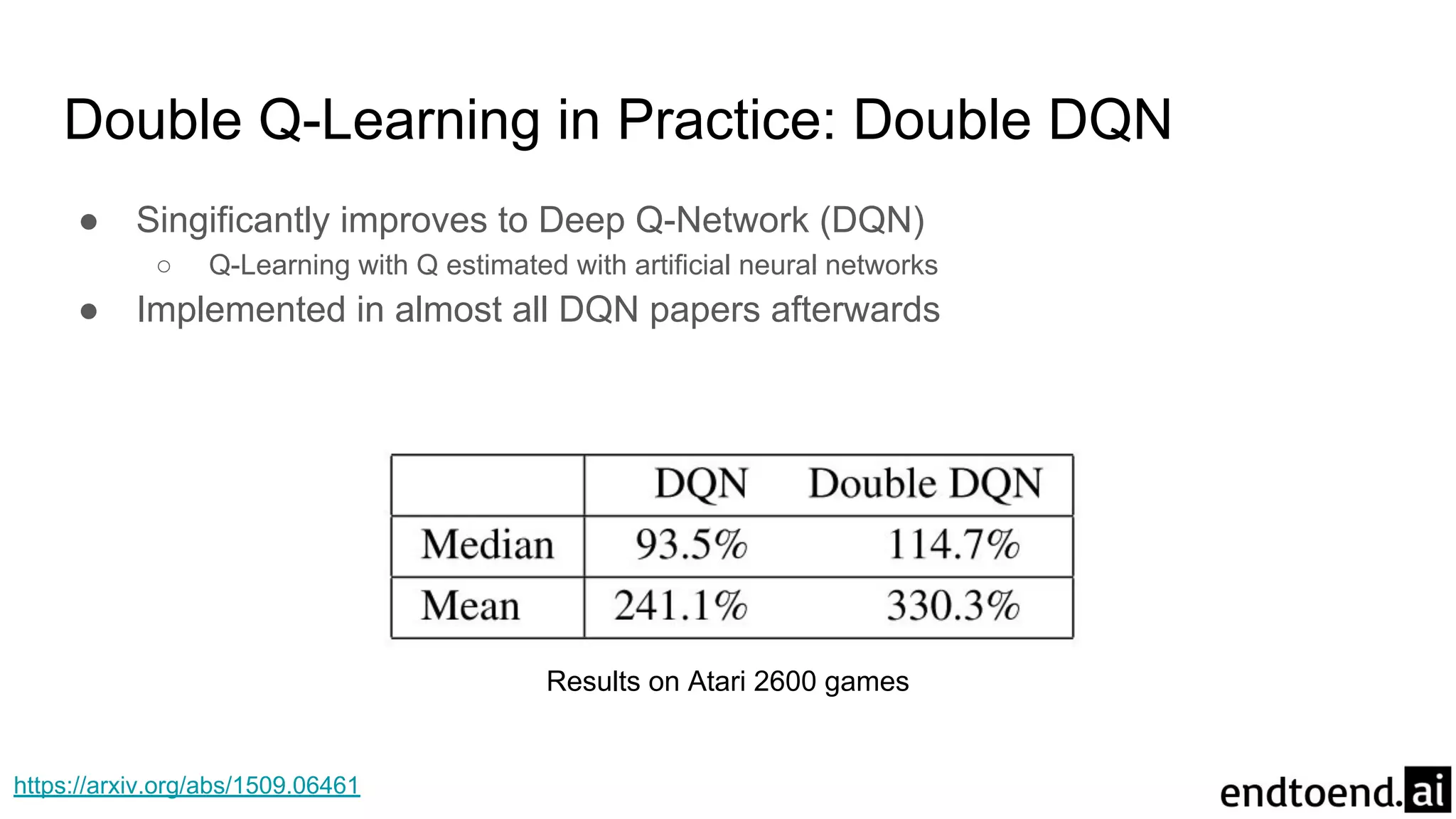

Chapter 6 covers temporal-difference (TD) learning, which combines the bootstrapping of dynamic programming with the sampling of Monte Carlo methods, so it can learn online from raw experience without a model of the environment. Unlike Monte Carlo methods, which must wait until the end of an episode, TD methods update their estimates incrementally after every step, and in practice they usually converge faster. The chapter also covers the TD control algorithms SARSA (on-policy) and Q-learning (off-policy), the maximization bias that arises when a maximum over noisy value estimates is used as an estimate of the true maximum, and Double Q-Learning as a way to reduce that bias.
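
The update rules named in this summary can be sketched in a few lines of tabular Python. This is a minimal illustration, not code from the chapter: the function names, the dictionary-based value tables, and the default step size `alpha` and discount `gamma` are all assumptions chosen for readability. It also assumes terminal states are never updated, so their values stay at the table's default of zero.

```python
import random
from collections import defaultdict


def td0_update(V, s, r, s_next, alpha=0.1, gamma=1.0):
    """One TD(0) prediction step: move V[s] toward r + gamma * V[s_next]."""
    target = r + gamma * V[s_next]      # bootstrapped one-step target
    V[s] += alpha * (target - V[s])     # step along the TD error
    return V[s]


def sarsa_target(Q, r, s_next, a_next, gamma=1.0):
    """On-policy target: uses the action actually selected in s_next."""
    return r + gamma * Q[(s_next, a_next)]


def q_learning_target(Q, r, s_next, actions, gamma=1.0):
    """Off-policy target: uses the greedy (maximum) value in s_next."""
    return r + gamma * max(Q[(s_next, a)] for a in actions)


def double_q_update(Q1, Q2, s, a, r, s_next, actions,
                    alpha=0.1, gamma=1.0, rng=random):
    """One Double Q-learning step on a randomly chosen table.

    One table selects the greedy action and the other evaluates it,
    which decouples selection from evaluation and reduces the
    maximization bias of a single max over noisy estimates.
    """
    if rng.random() < 0.5:
        select, evaluate = Q1, Q2
    else:
        select, evaluate = Q2, Q1
    best = max(actions, key=lambda b: select[(s_next, b)])
    target = r + gamma * evaluate[(s_next, best)]
    select[(s, a)] += alpha * (target - select[(s, a)])


# Hypothetical two-state example: one TD(0) step from state "A" to "B".
V = defaultdict(float)
td0_update(V, "A", r=1.0, s_next="B", alpha=0.5, gamma=0.9)
```

The only difference between the SARSA and Q-learning targets is which next-state action value they bootstrap from. The sampled action makes SARSA on-policy, and the maximum makes Q-learning off-policy.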