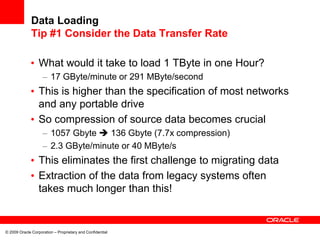

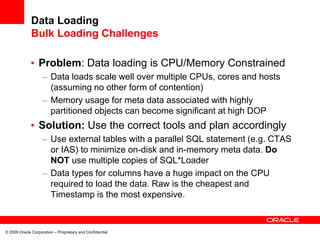

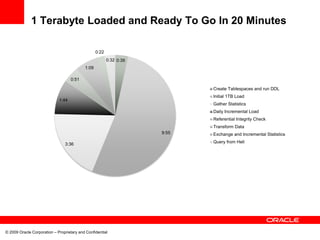

The document outlines the agenda for a performance summit on data warehousing. Sessions cover data warehousing, data loading, and questions to ask experts. Topics include interpreting system monitoring, loading 1 TB of data, and data-loading challenges such as CPU and memory constraints and the throughput of data sources.