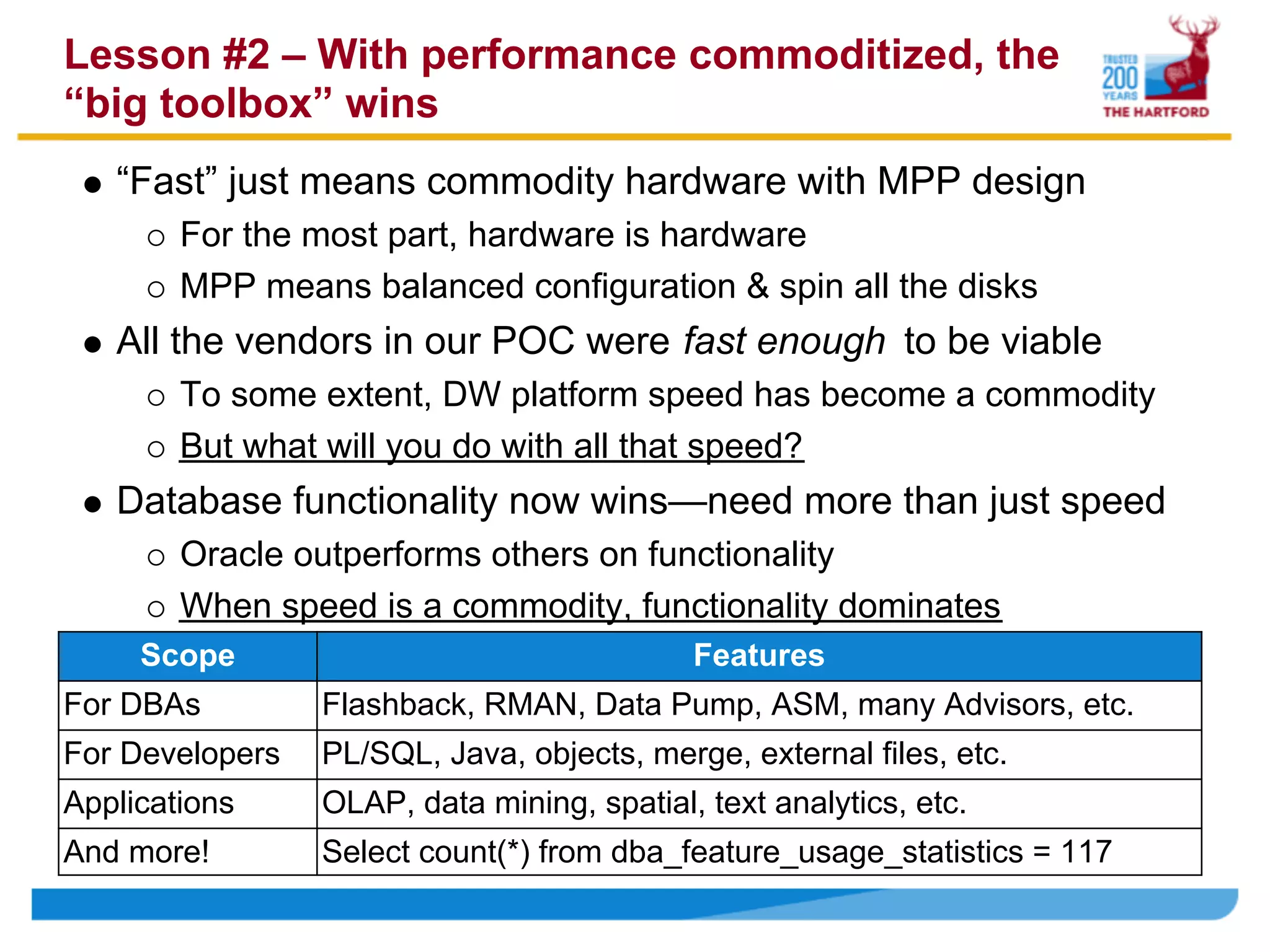

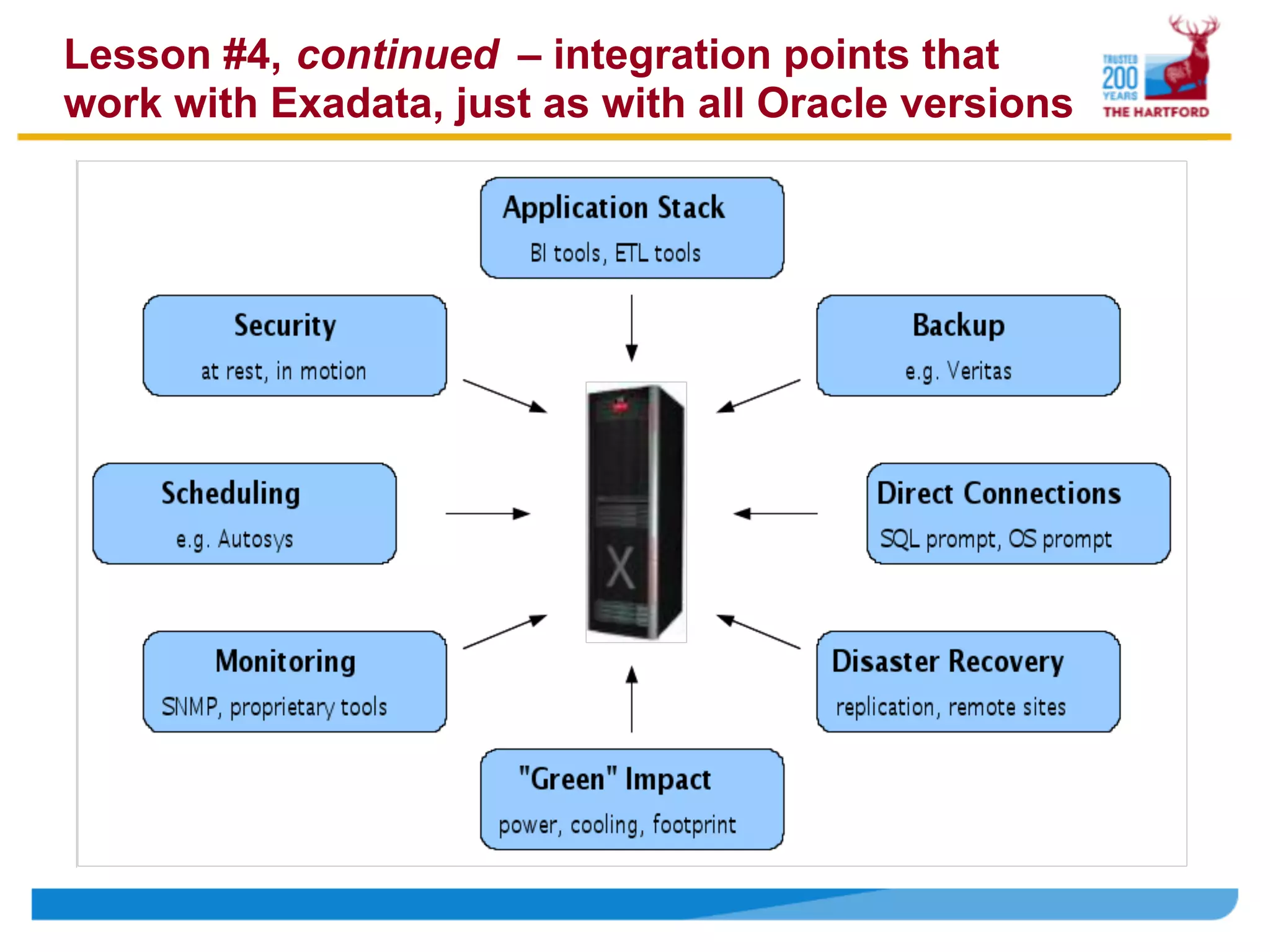

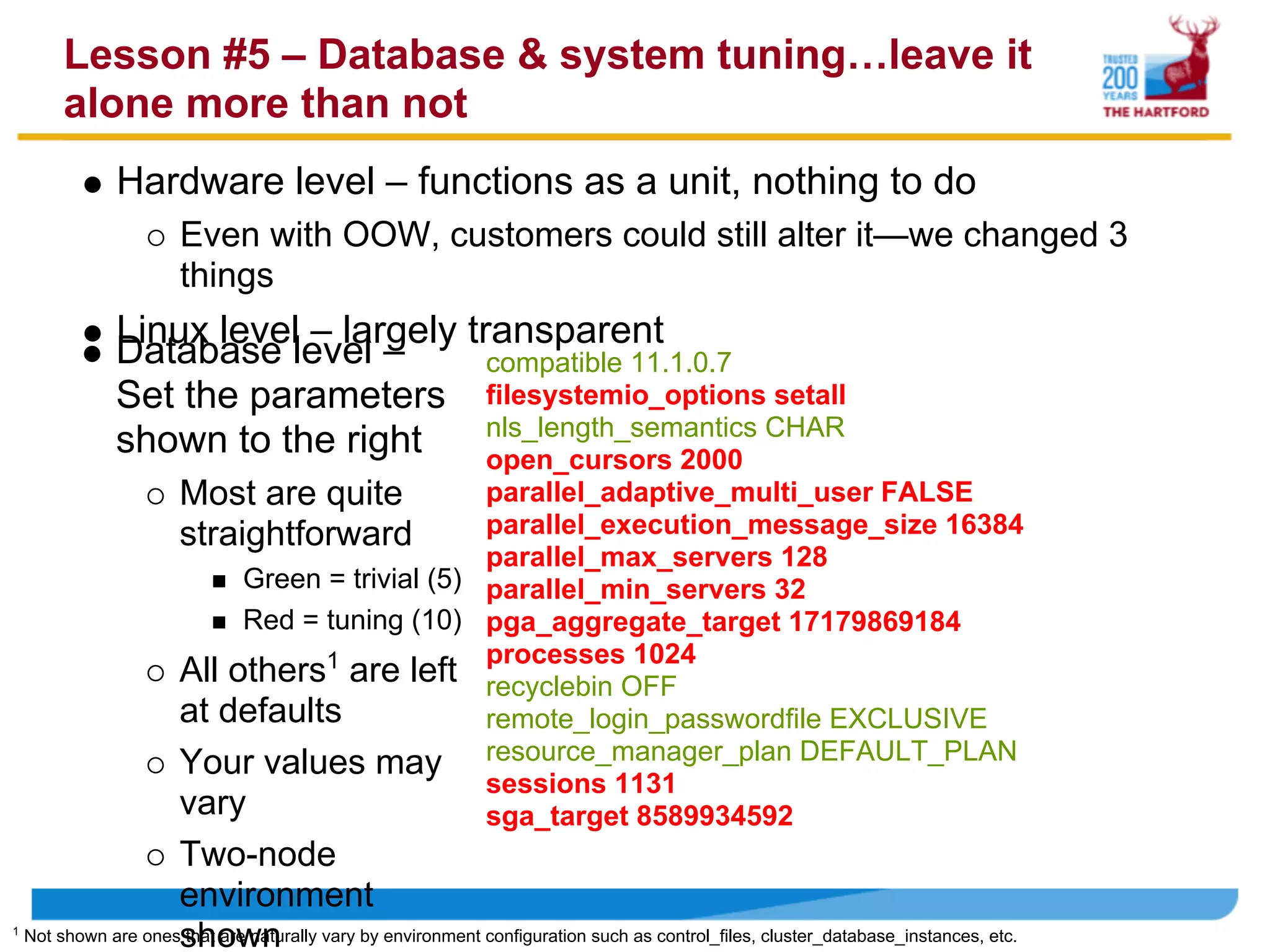

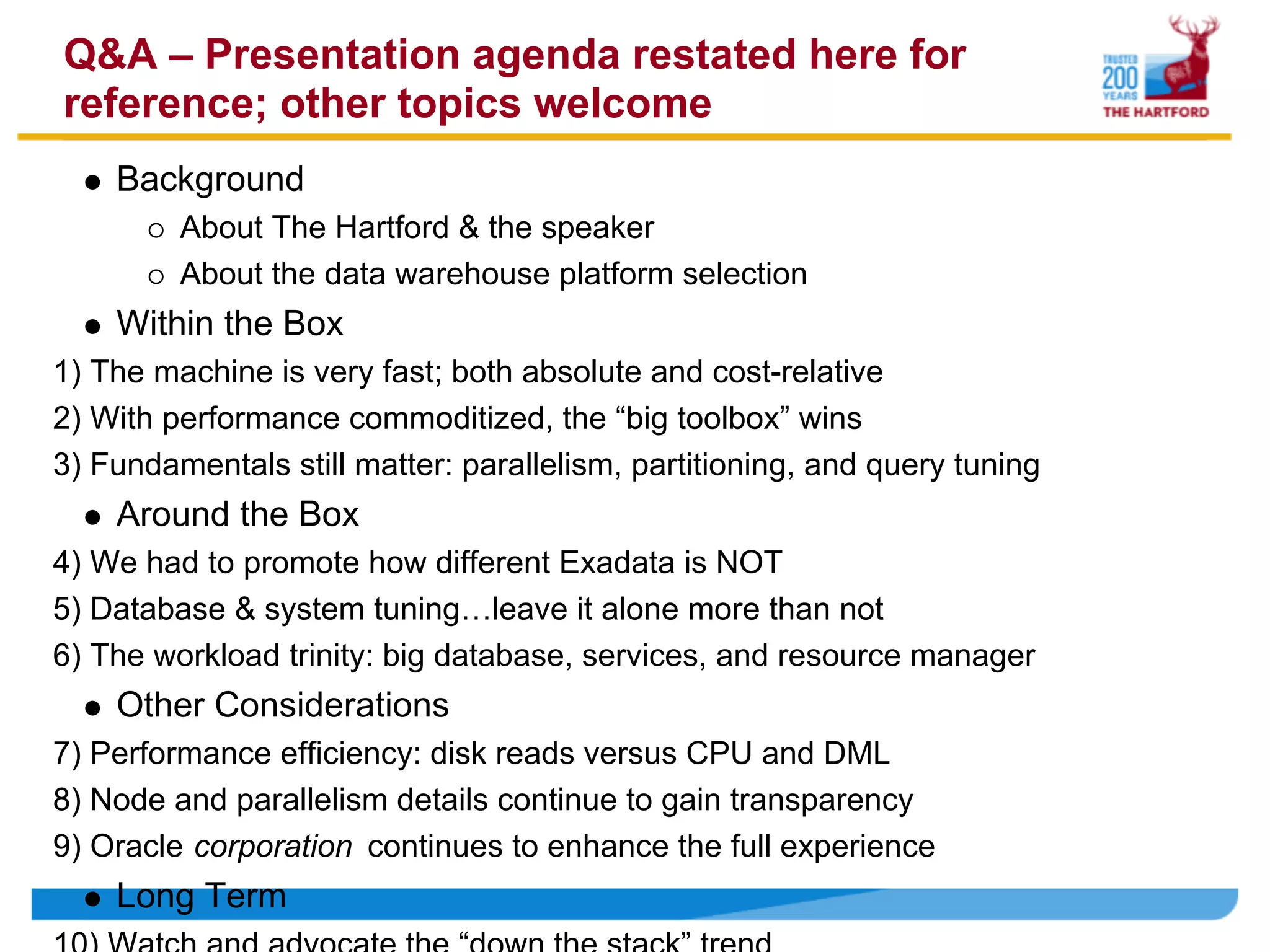

This document summarizes lessons learned from implementing Oracle Exadata at The Hartford, an insurance company. Key points include: (1) Exadata delivered a 400x performance gain over the previous system and was very cost effective; (2) with performance now commoditized across vendors, Exadata's advanced functionality and features give it an advantage; (3) fundamentals such as parallelism, partitioning, and query tuning still matter. The implementation involved promoting Exadata's compatibility with existing Oracle systems, leaving many database and system settings at their defaults, consolidating databases, and implementing resource management. Oracle's ongoing enhancements, which continue to push more functionality directly into the storage hardware, position Exadata well for the future.