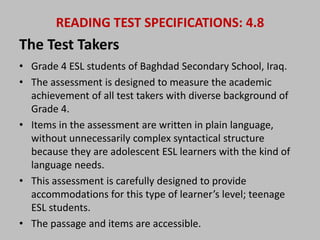

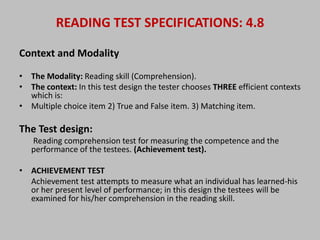

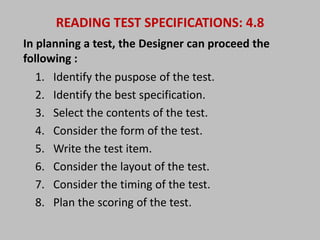

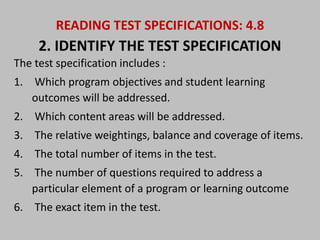

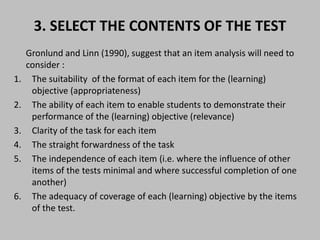

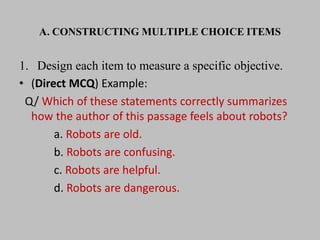

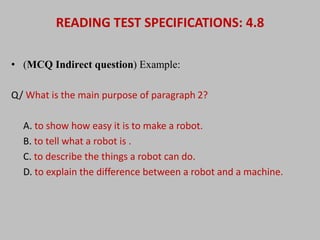

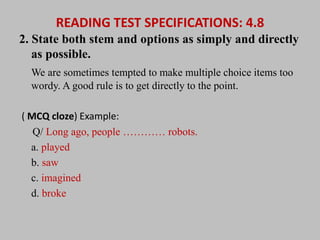

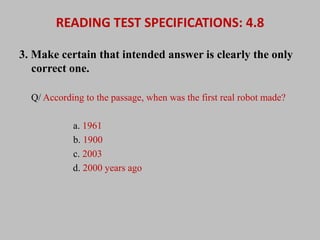

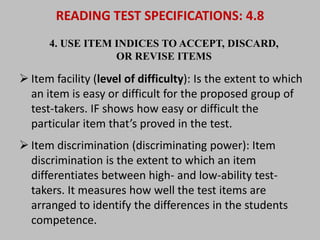

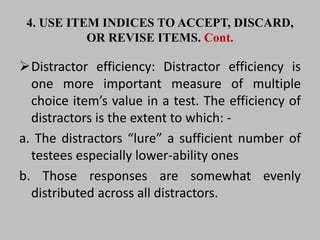

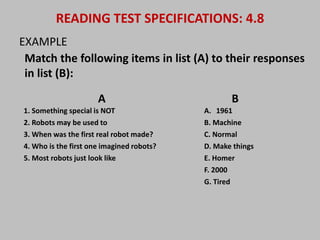

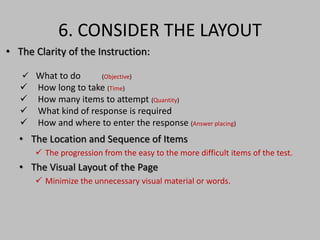

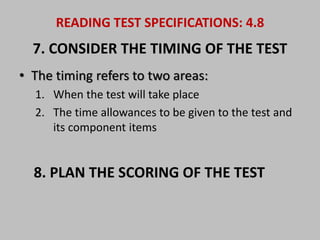

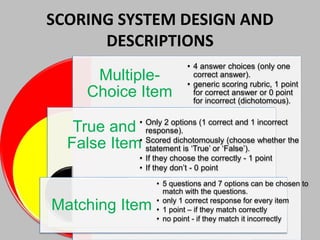

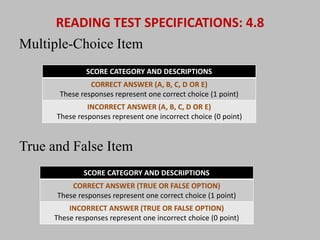

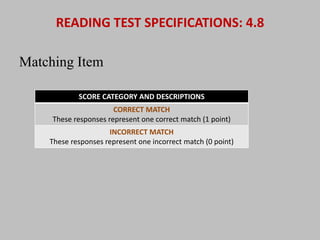

This document outlines the test specifications for a reading comprehension assessment for fourth-grade ESL students in Iraq. The test will include multiple-choice, true/false, and matching questions to measure students' reading achievement based on the semester curriculum. The test aims to place students in appropriate classes for the next semester. It provides accommodations for adolescent ESL learners and uses clear, plain language in passages and items. Scoring will be dichotomous: 1 point for a correct answer and 0 points for an incorrect one.
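
The dichotomous scoring rule can be illustrated with a minimal sketch. The function name `score_dichotomous`, the five-item answer key, and the case-insensitive comparison are assumptions made for illustration only; they are not taken from the test specifications.

```python
from typing import Sequence


def score_dichotomous(responses: Sequence[str], answer_key: Sequence[str]) -> int:
    """Return the total score: 1 point per correct item, 0 otherwise."""
    if len(responses) != len(answer_key):
        raise ValueError("responses and answer_key must have the same length")
    # Compare each response with its key, ignoring case and surrounding spaces
    # (an assumption; the specifications do not say how answers are recorded).
    return sum(
        1
        for given, correct in zip(responses, answer_key)
        if given.strip().lower() == correct.strip().lower()
    )


# Hypothetical key mixing multiple-choice, true/false, and matching items.
answer_key = ["b", "true", "c", "false", "2"]
responses = ["b", "false", "c", "false", "3"]

total = score_dichotomous(responses, answer_key)
print(f"{total} out of {len(answer_key)}")
```

Because every item carries the same weight, the total is simply the count of correct responses; no partial credit is awarded under this rule.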