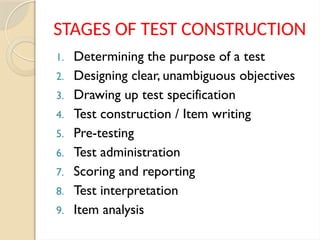

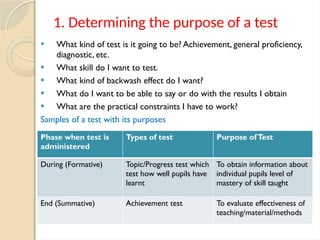

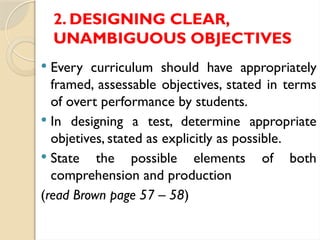

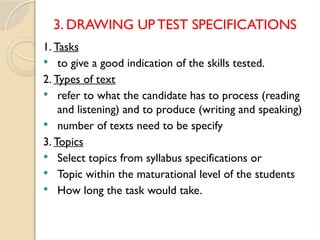

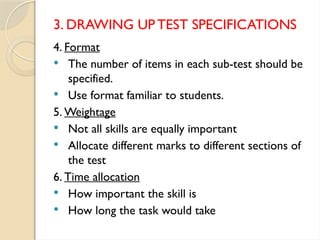

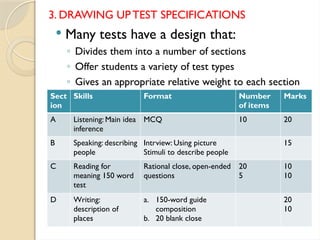

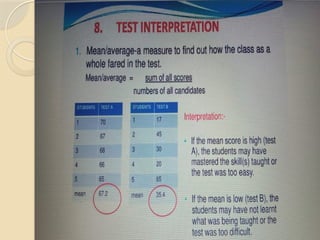

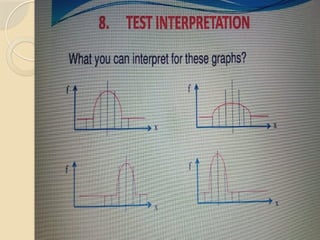

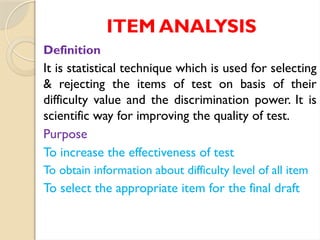

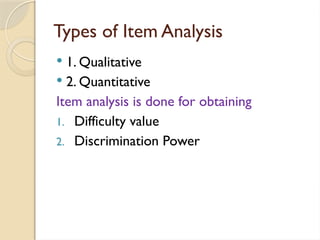

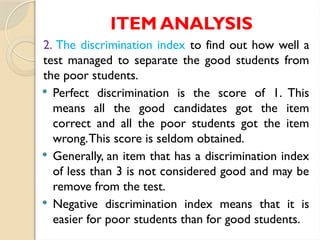

The document outlines the stages of test construction: determining the purpose, designing objectives, creating specifications, writing items, administering the test, and scoring it. It emphasizes developing clear objectives, pre-testing items to check their effectiveness, and conducting item analysis for quality assurance. Key elements include test format, types of assessment, and statistical techniques for verifying that the test differentiates between more skilled and less skilled students.
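The slides' exact item-analysis formulas are not reproduced in the text. The sketch below assumes the two indices common in classical test theory. The difficulty index *p* is the proportion of all students who answer an item correctly, so a higher *p* means an easier item. The discrimination index *D* is *p* in the upper-scoring group minus *p* in the lower-scoring group, with each group taken as roughly the top and bottom 27% by total score. The function name `item_statistics` and the sample data are hypothetical and only illustrate the calculation.

```python
from typing import Sequence


def item_statistics(
    responses: Sequence[Sequence[int]],
    item: int,
    group_fraction: float = 0.27,  # assumed convention: top/bottom 27% groups
) -> tuple[float, float]:
    """Return (difficulty, discrimination) for one item (hypothetical helper).

    responses: one row per student; each entry is 1 (correct) or 0 (incorrect).
    """
    n = len(responses)
    if n < 2:
        raise ValueError("need at least two students to form upper and lower groups")

    # Difficulty index p: proportion of all students answering the item correctly.
    difficulty = sum(row[item] for row in responses) / n

    # Rank students by total score, highest first.
    ranked = sorted(responses, key=sum, reverse=True)

    # Group size: about group_fraction of the class, capped so groups never overlap.
    k = max(1, min(round(n * group_fraction), n // 2))
    upper, lower = ranked[:k], ranked[-k:]

    p_upper = sum(row[item] for row in upper) / k
    p_lower = sum(row[item] for row in lower) / k

    # Discrimination index D: positive when stronger students do better on the item.
    discrimination = p_upper - p_lower
    return difficulty, discrimination


# Hypothetical responses: 10 students x 3 items.
sample = [
    [1, 1, 1], [1, 1, 0], [1, 0, 1], [1, 1, 1], [0, 1, 0],
    [1, 0, 0], [0, 0, 0], [1, 1, 1], [0, 0, 1], [0, 0, 0],
]

for i in range(3):
    p, d = item_statistics(sample, i)
    print(f"item {i}: difficulty={p:.2f}, discrimination={d:.2f}")
```

An item with *D* near zero or negative does not separate stronger from weaker students and is a candidate for revision after pre-testing. Note that this simple version includes the item itself in each student's total score, which slightly inflates *D* for short tests.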