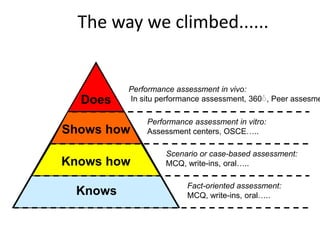

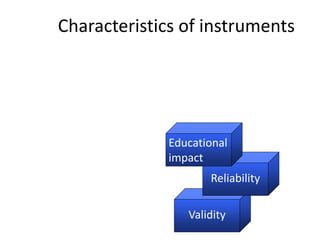

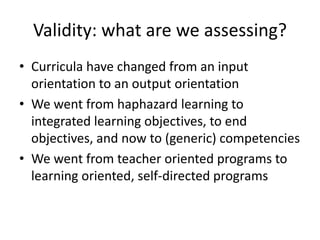

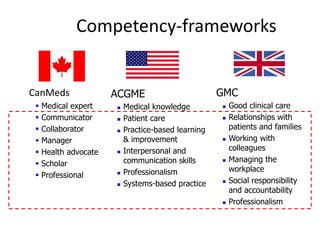

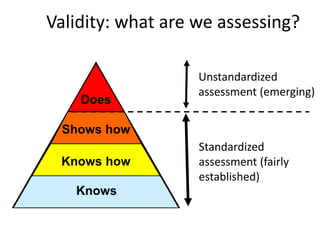

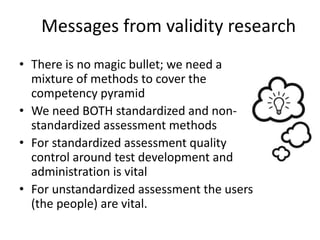

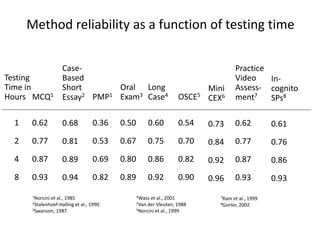

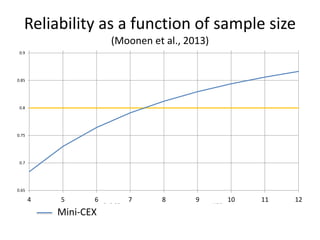

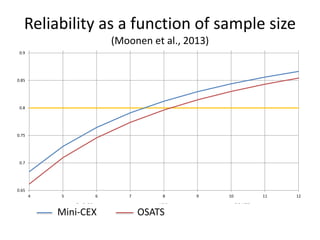

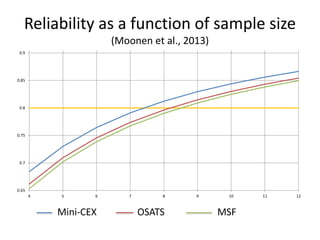

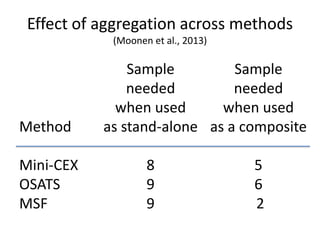

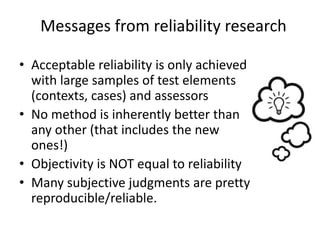

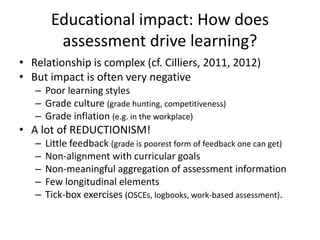

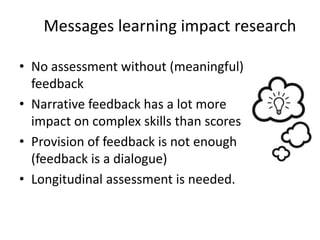

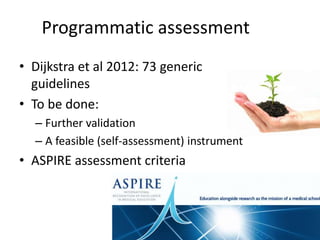

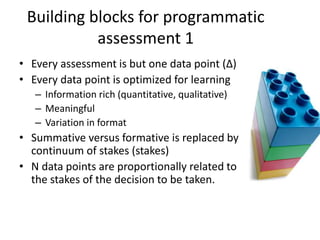

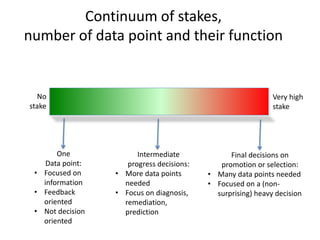

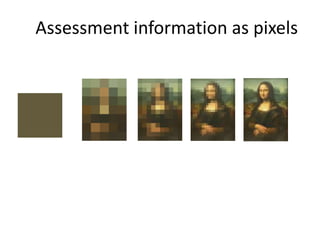

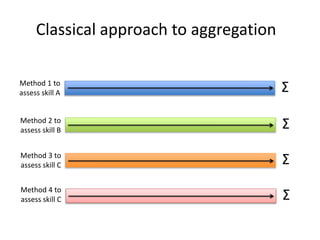

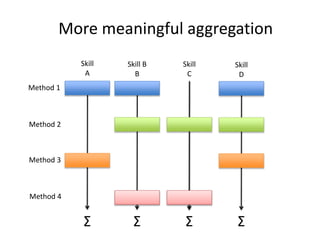

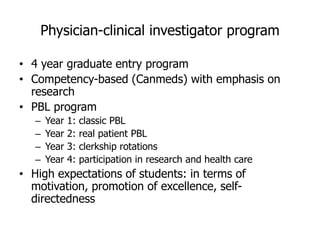

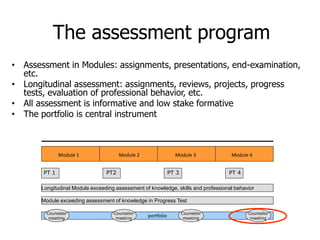

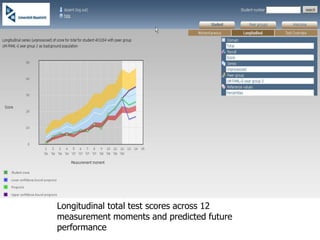

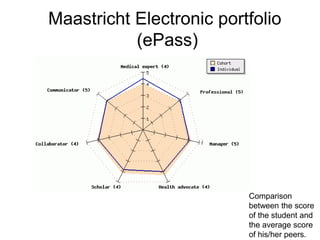

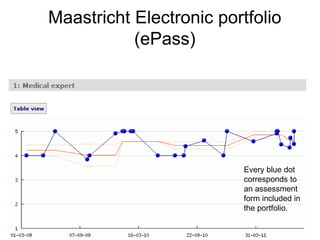

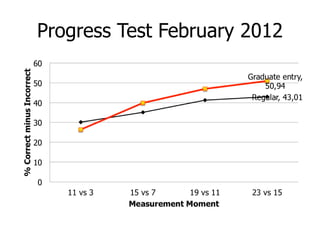

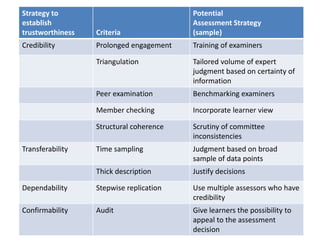

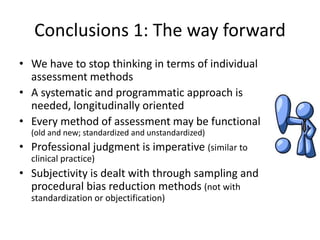

The document discusses competency assessment in education, emphasizing the importance of a programmatic approach that combines several assessment methods to ensure reliability, validity, and educational impact. It outlines the shift from traditional input-oriented curricula to output-oriented competencies and highlights the necessity of longitudinal assessment and meaningful feedback. The conclusions advocate the systematic implementation of diverse assessment methods and stress that evaluating competencies requires professional judgment.