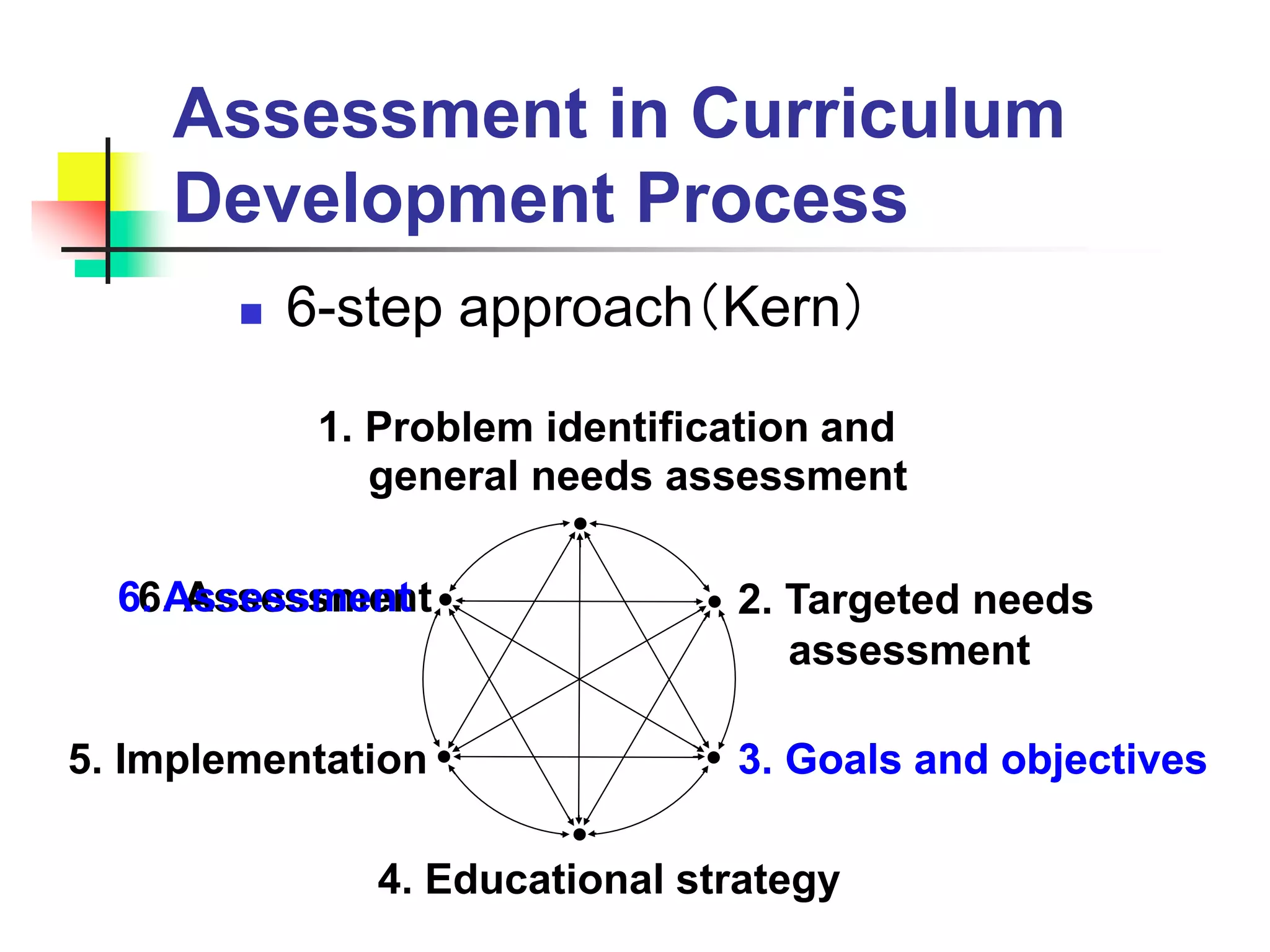

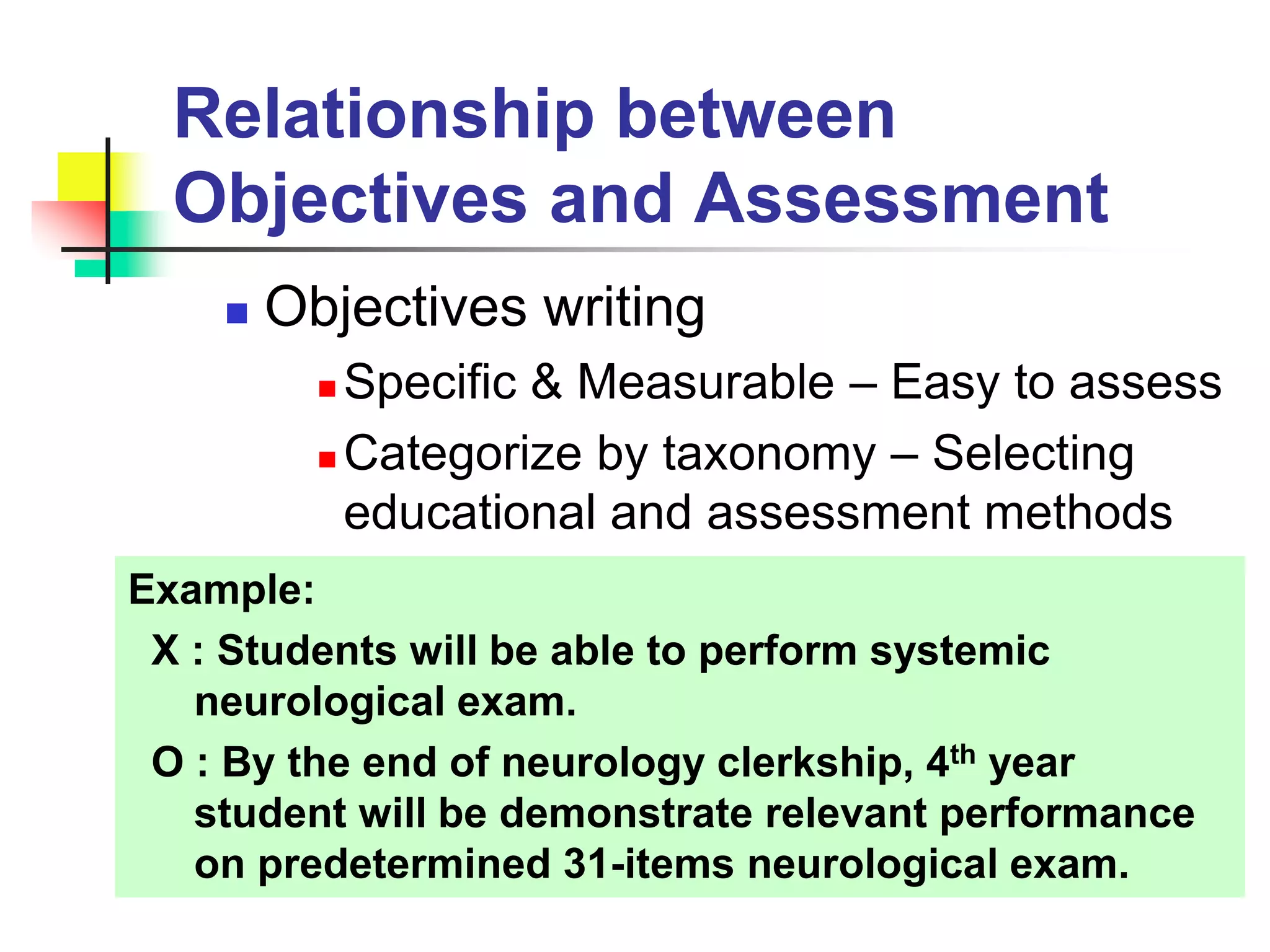

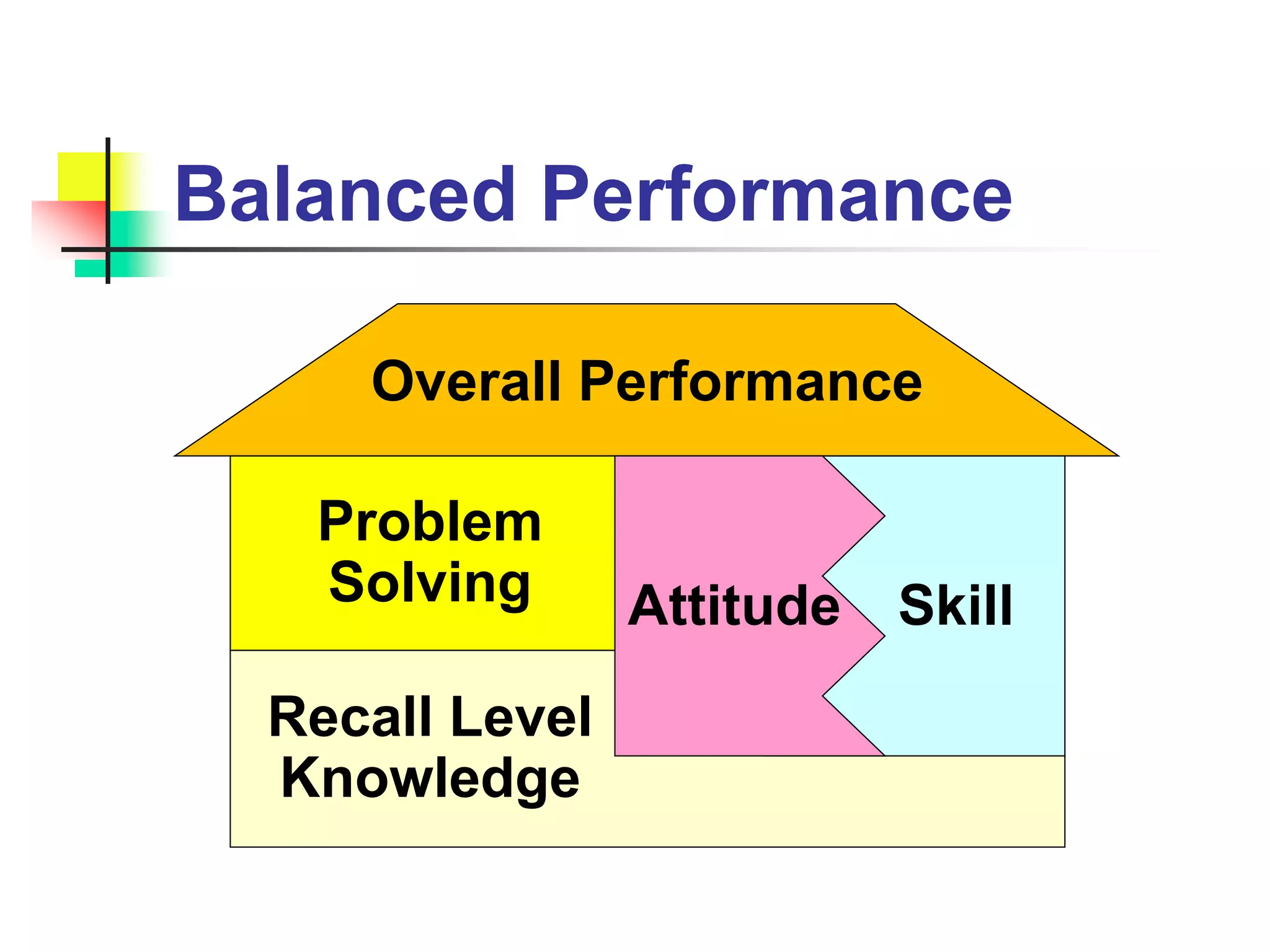

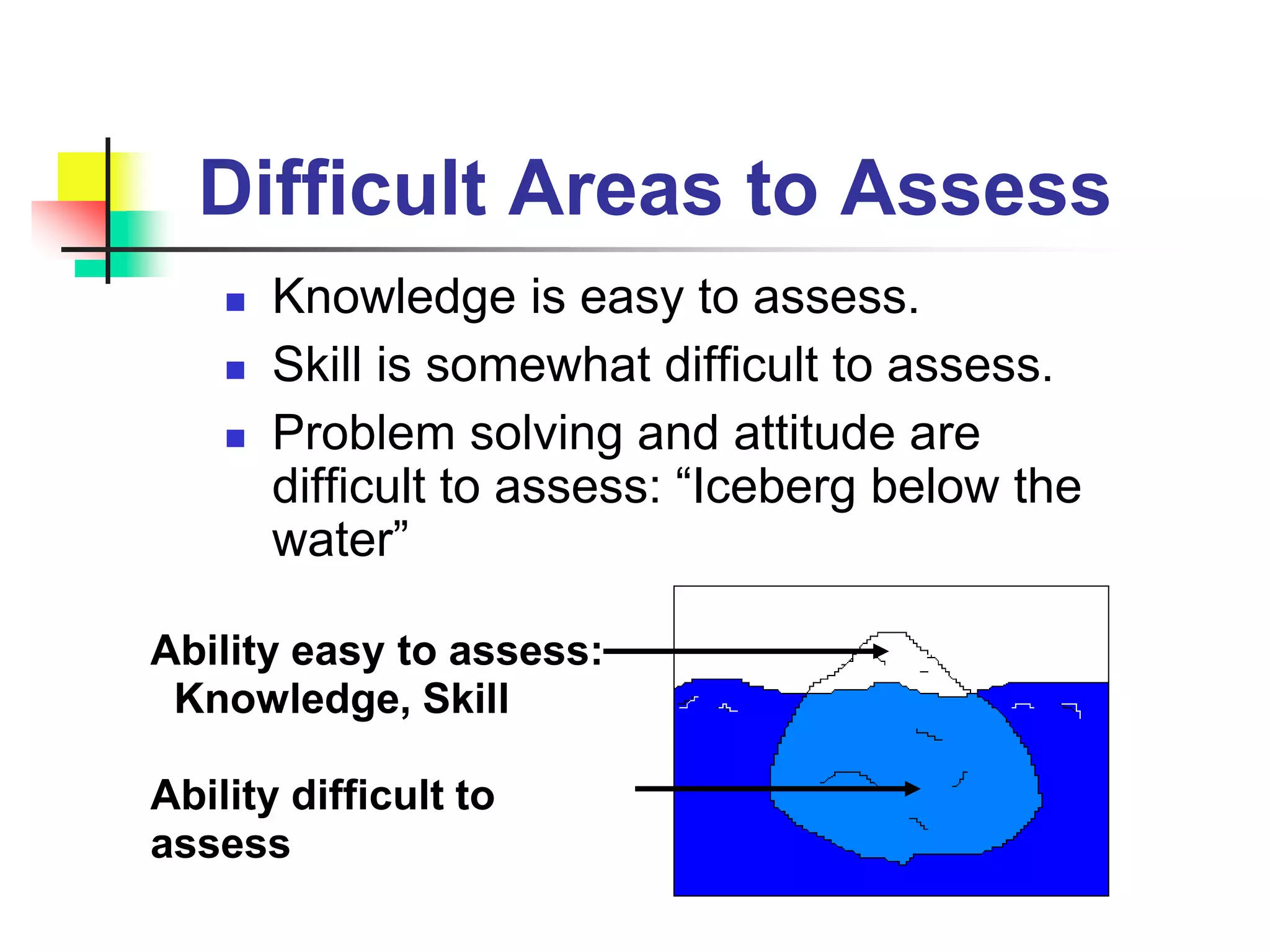

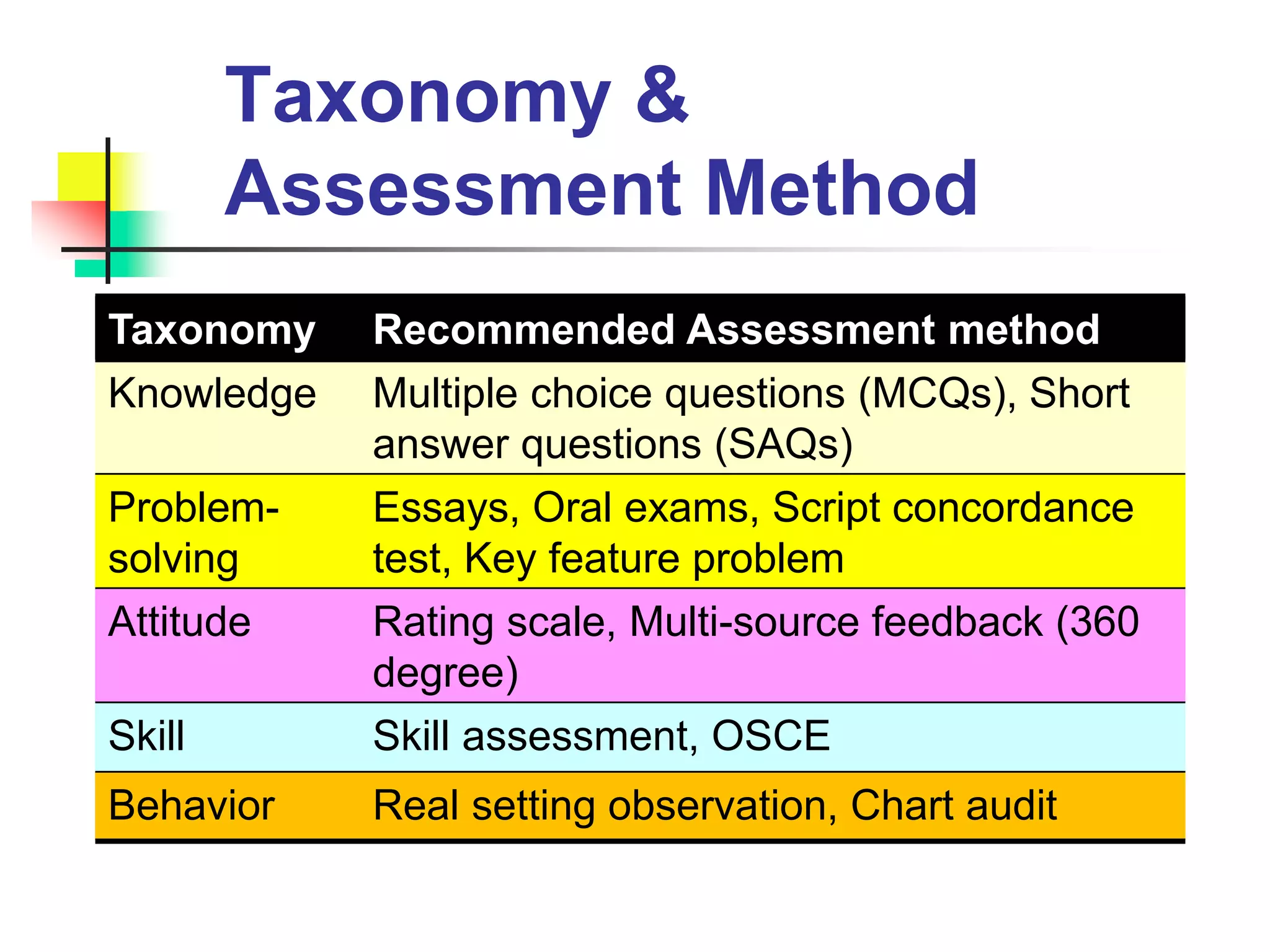

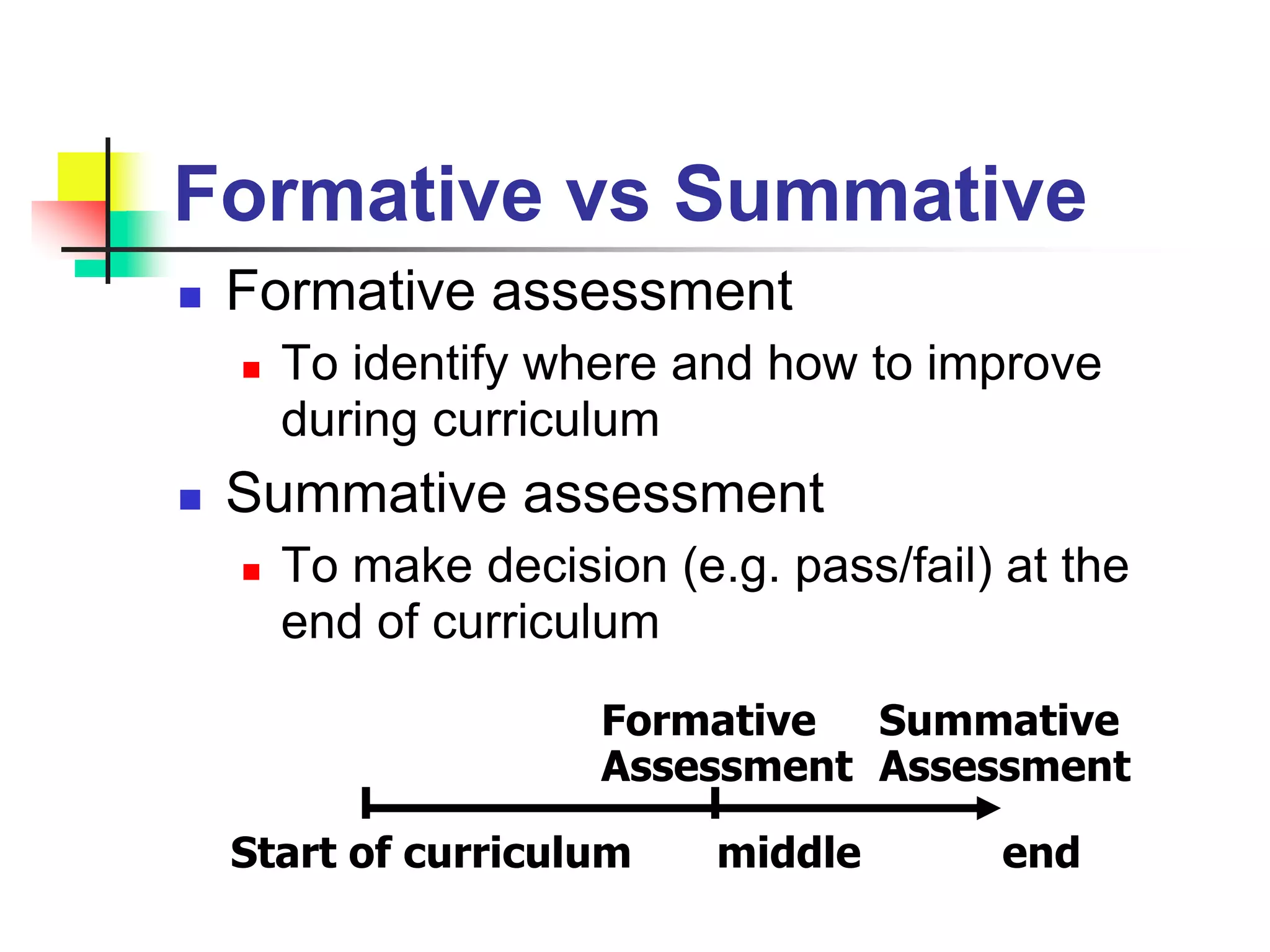

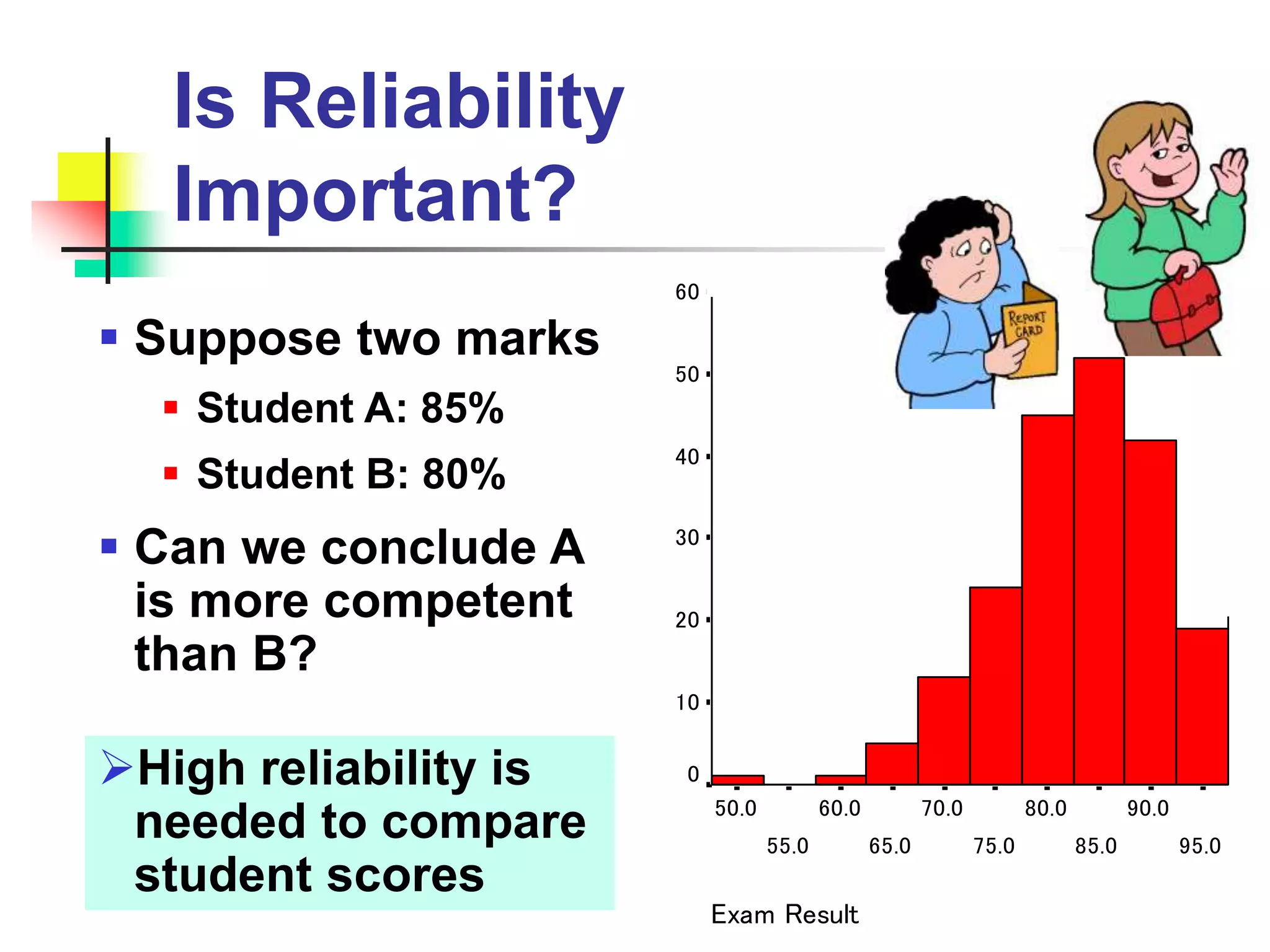

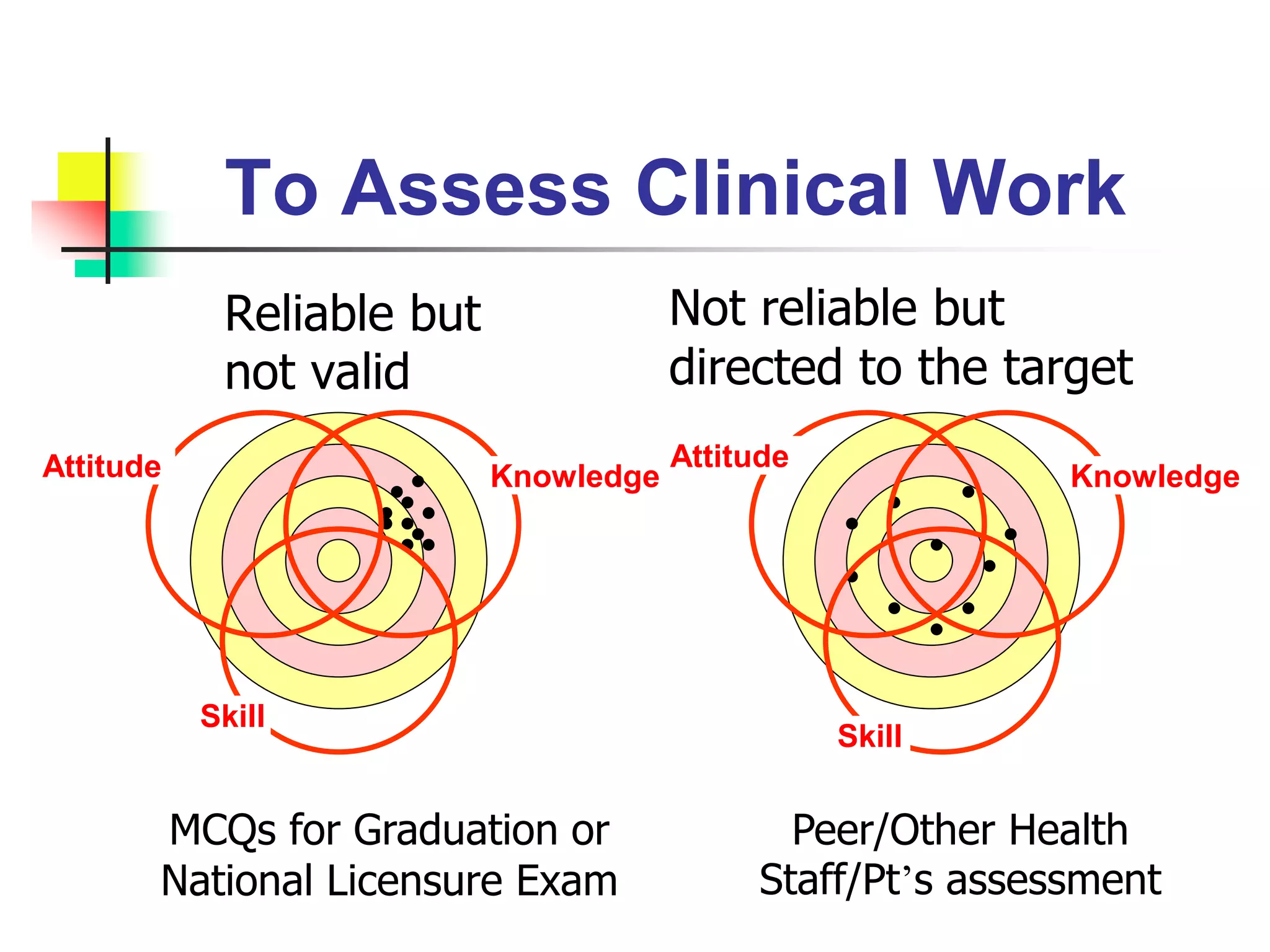

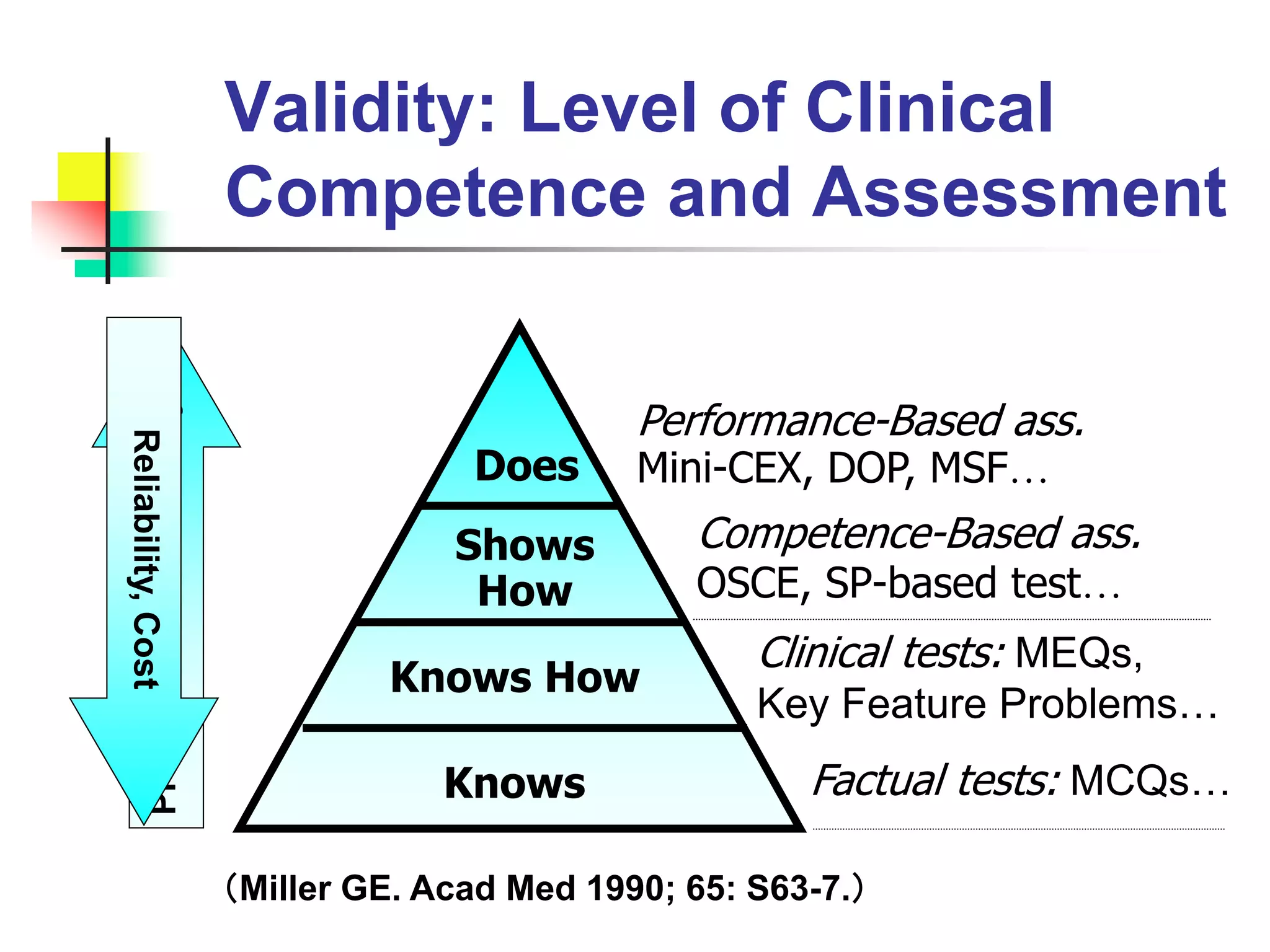

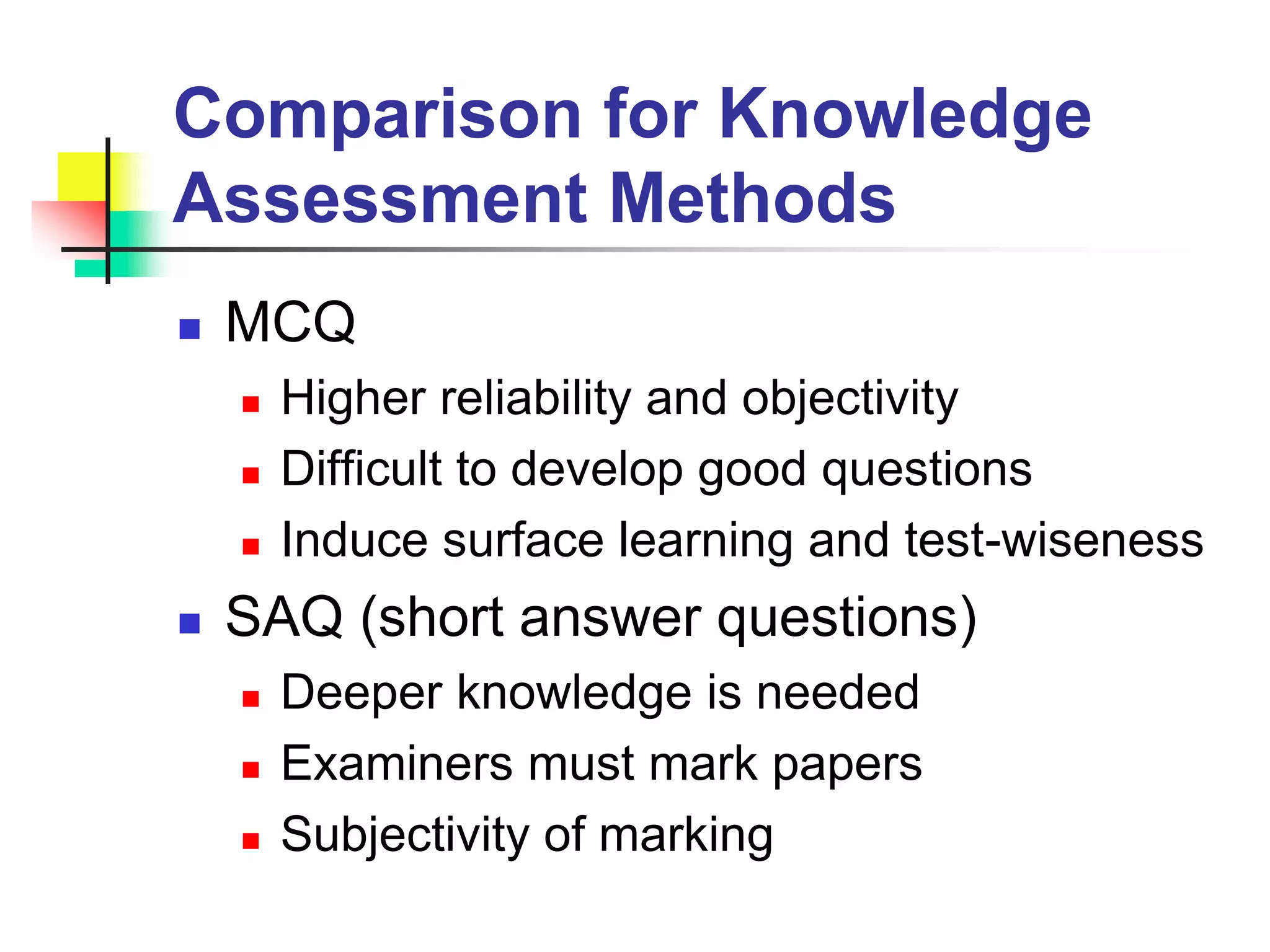

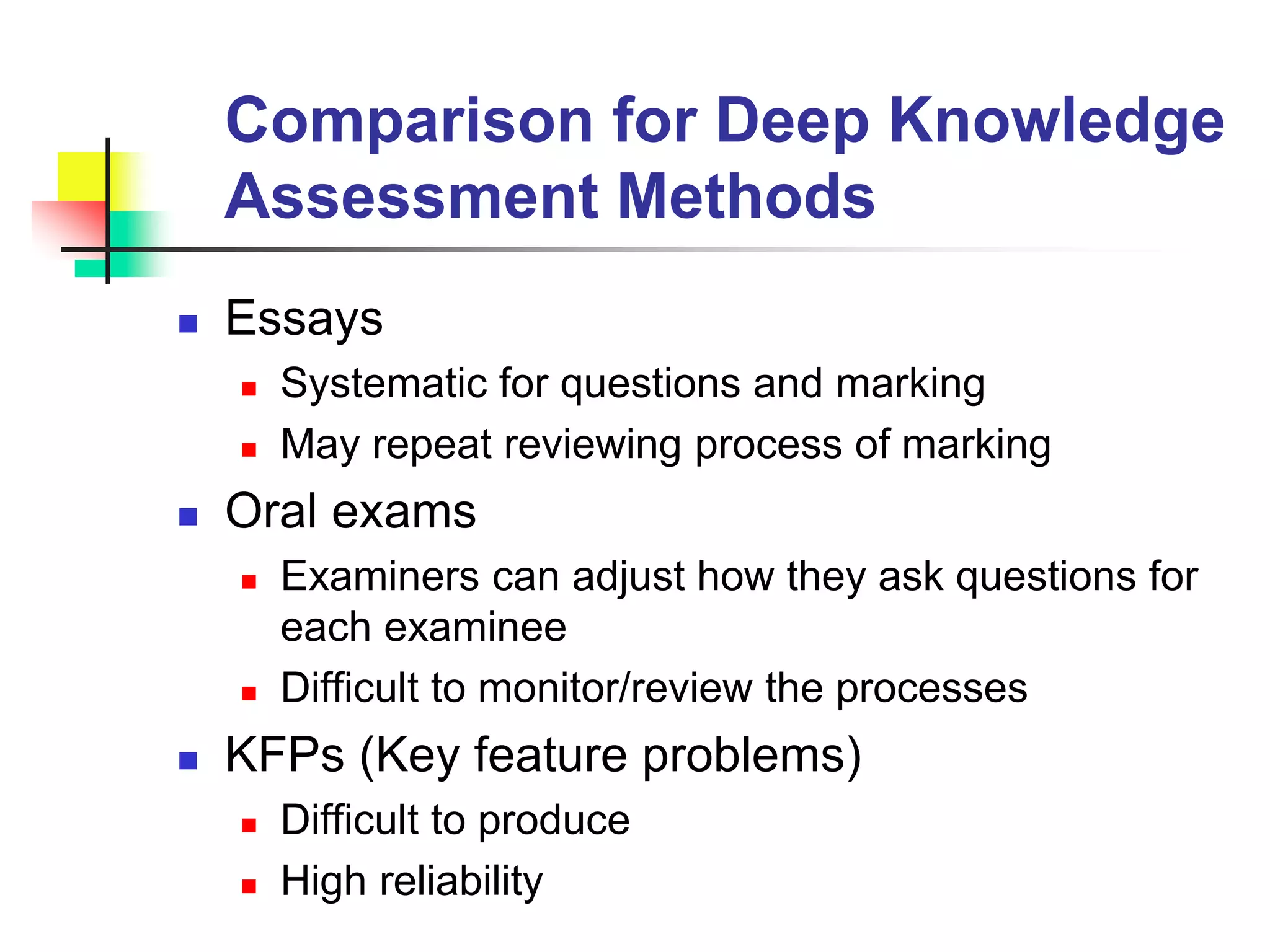

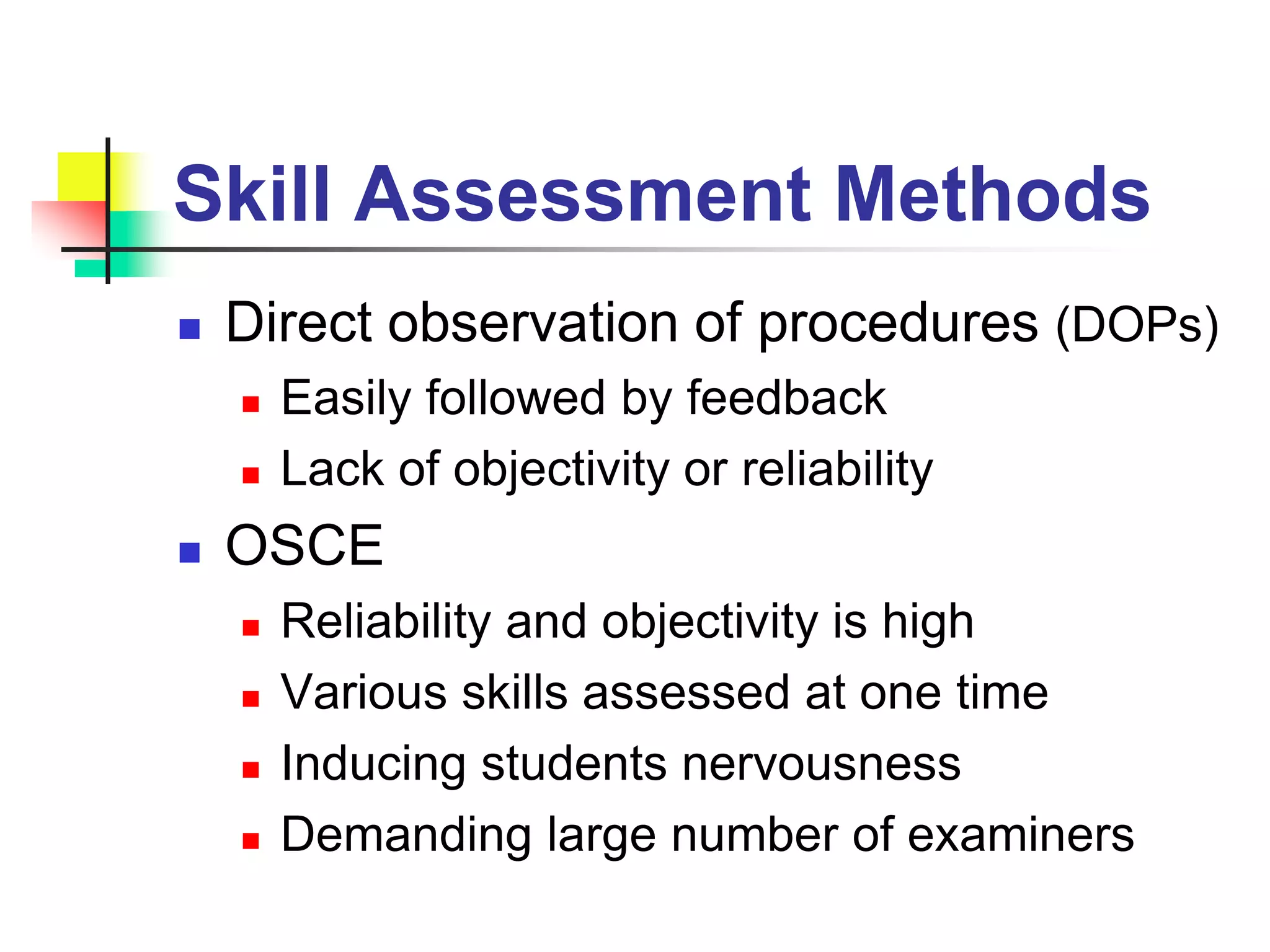

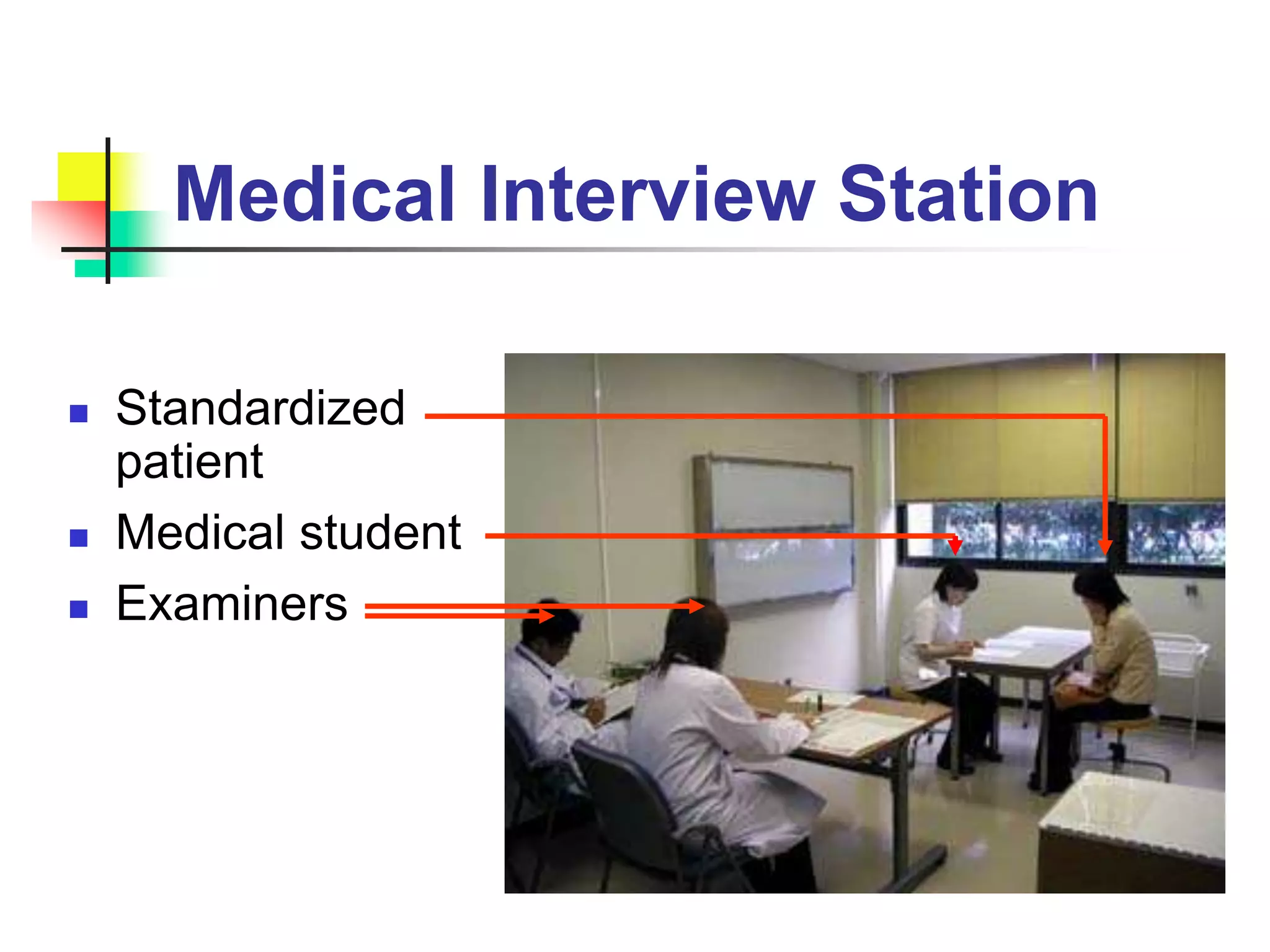

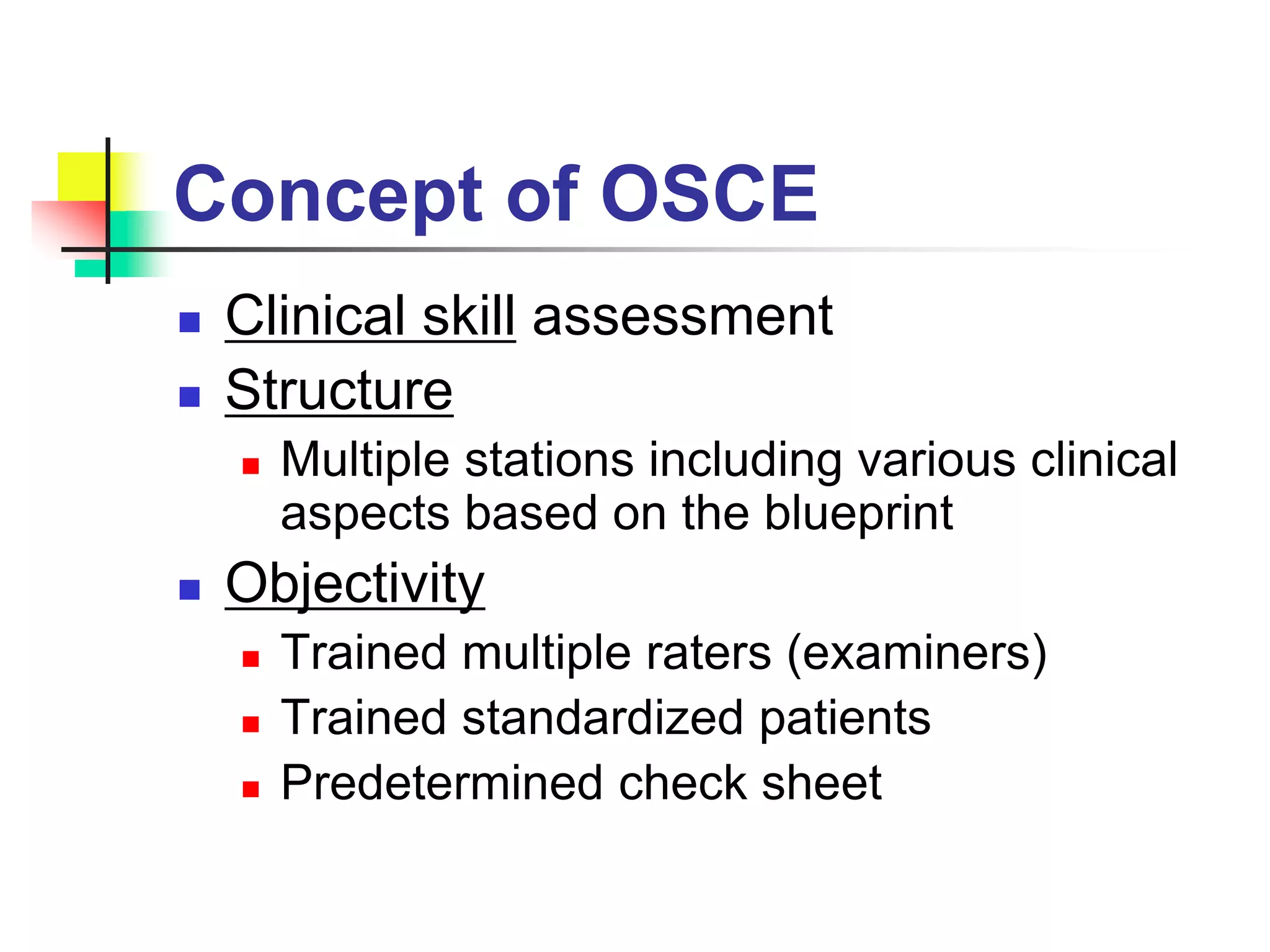

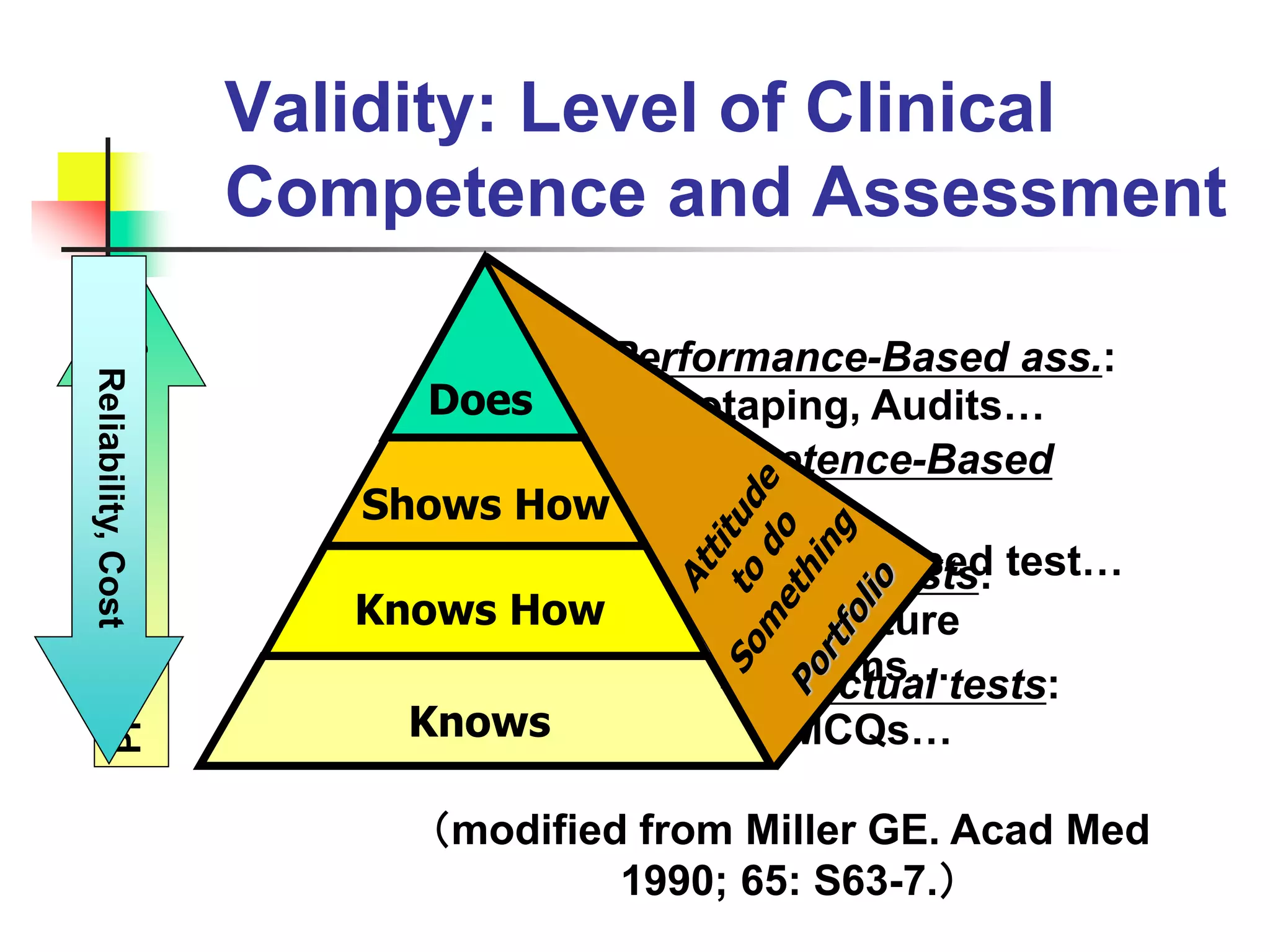

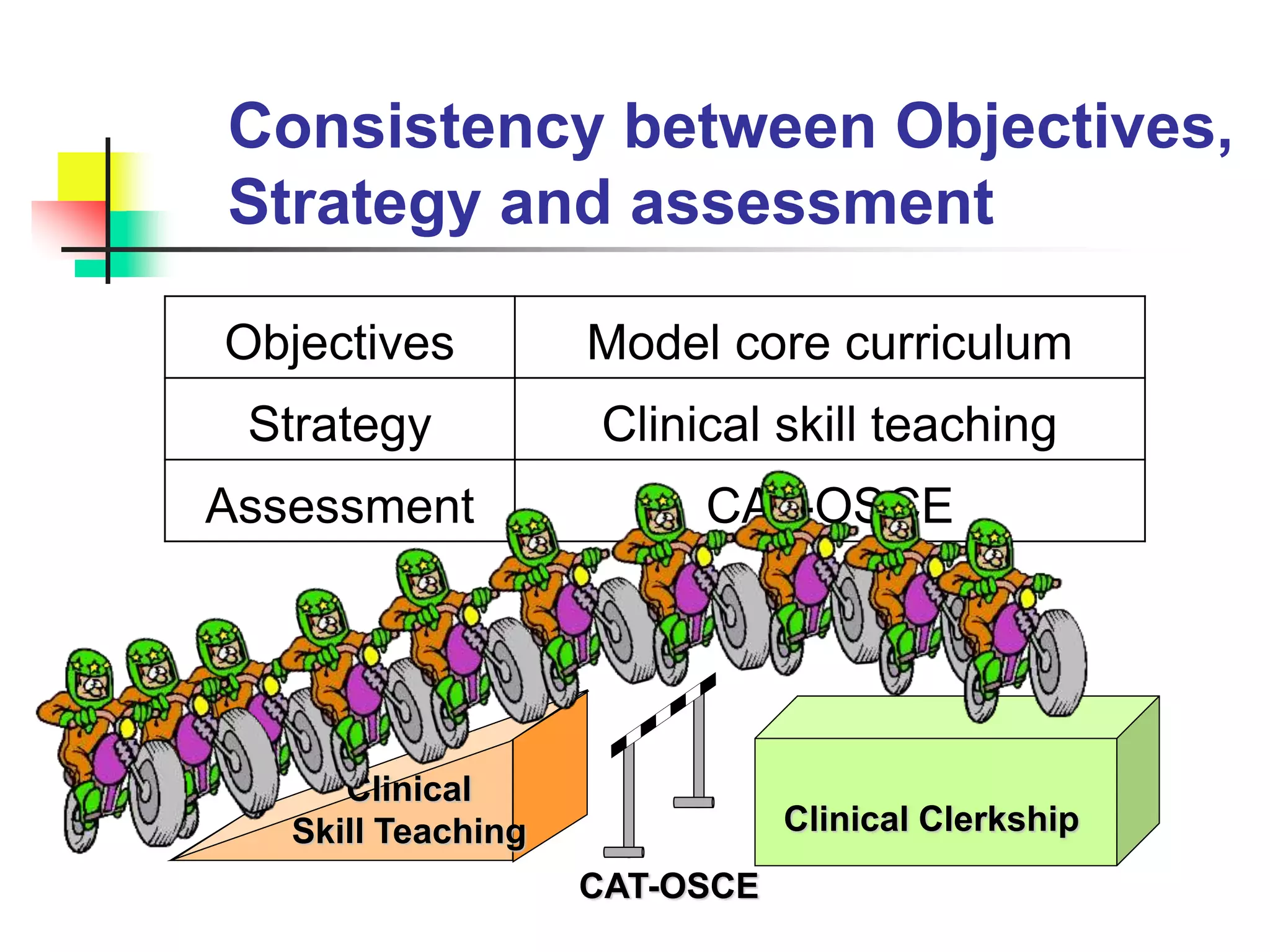

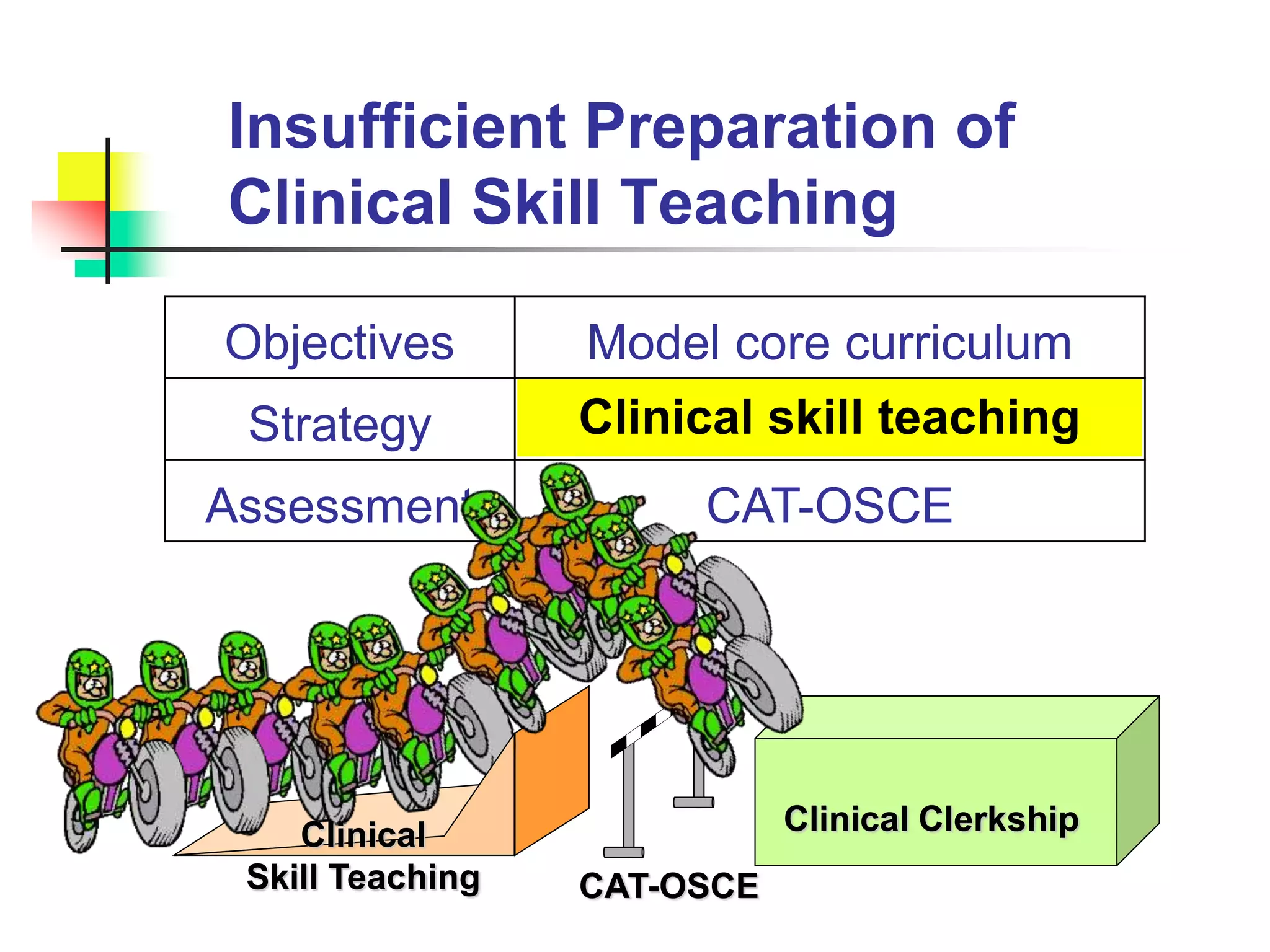

The document discusses methods for assessing learners, including formative and summative assessment, and highlights the importance of balancing reliability, validity, cost, and authenticity when selecting assessment tools. It compares methods for assessing different domains, such as knowledge, skills, problem-solving, and attitudes. Taxonomies help classify learning objectives so that the assessment method chosen matches the level of learning being assessed.