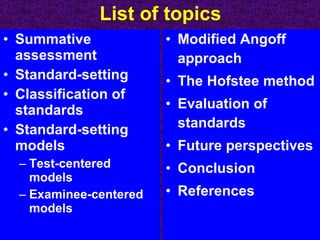

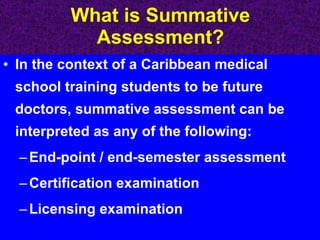

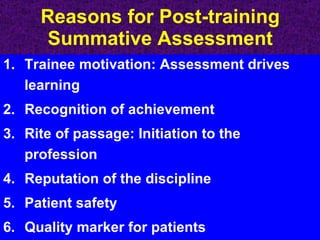

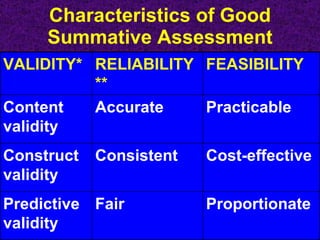

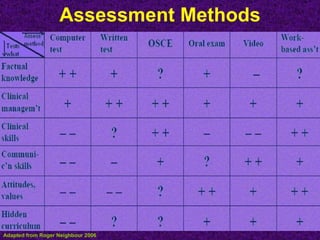

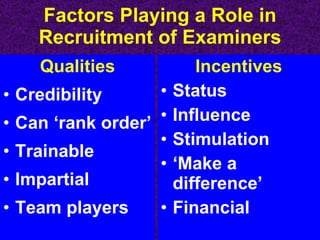

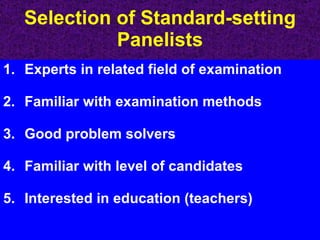

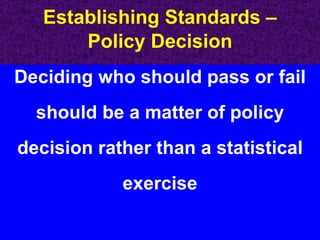

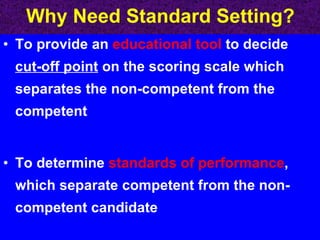

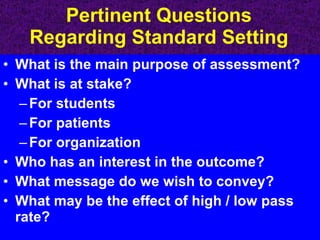

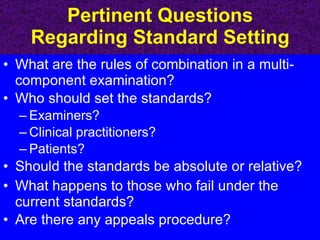

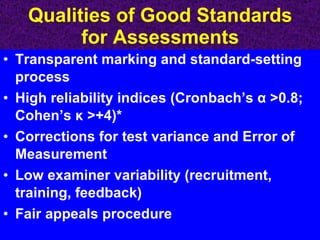

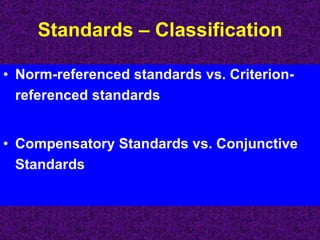

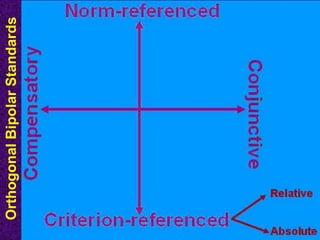

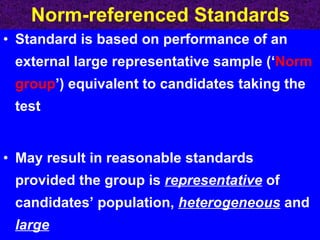

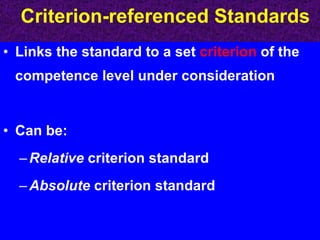

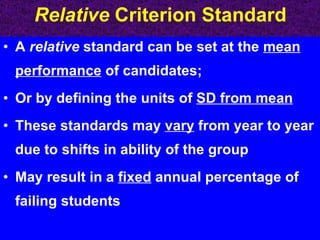

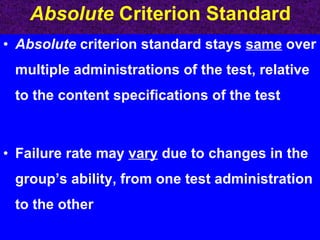

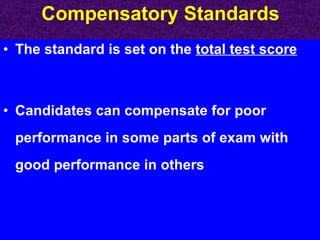

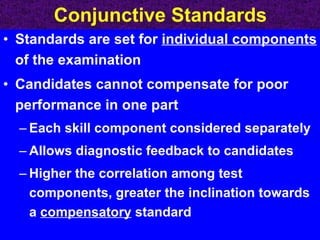

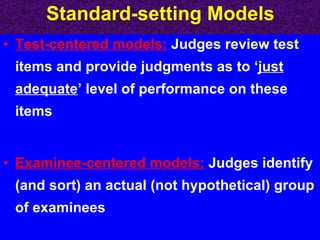

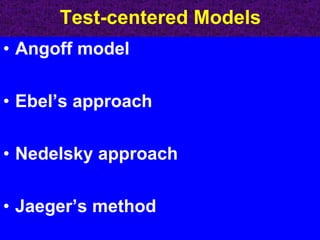

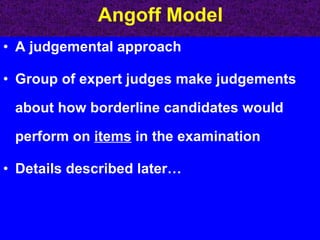

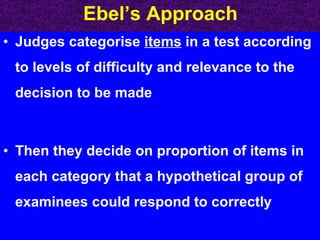

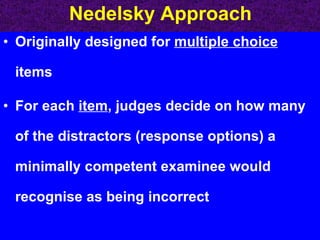

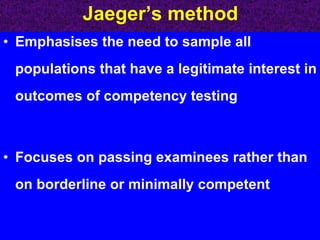

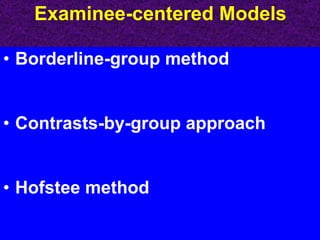

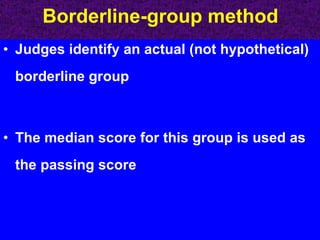

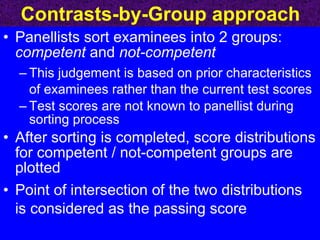

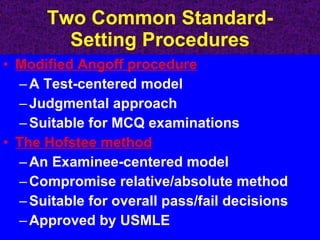

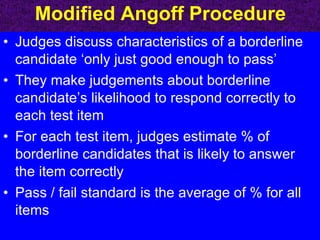

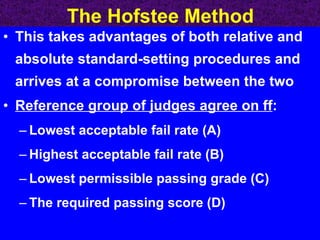

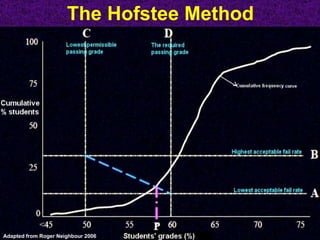

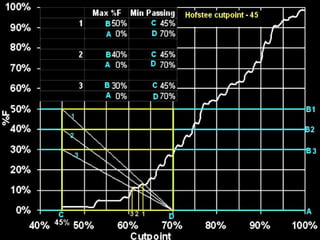

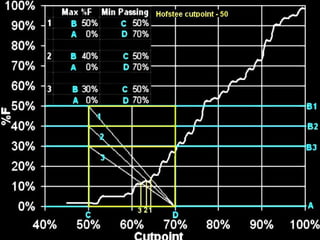

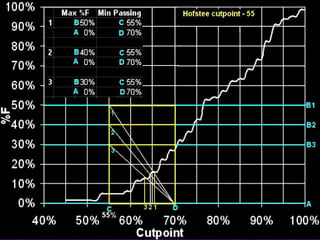

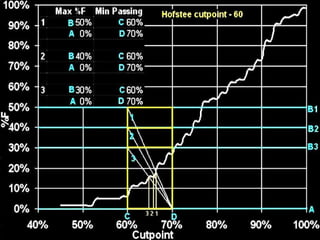

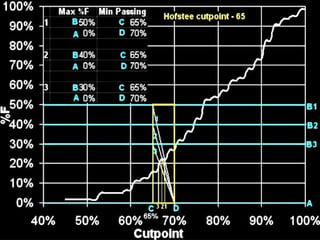

The document discusses the importance of standard setting in summative assessments for medical students and reviews several models and methods, such as the modified Angoff approach and the Hofstee method. It argues that sound standard-setting procedures are needed to distinguish competent from non-competent candidates, and that doing so improves the quality of medical education and protects patient safety. It also addresses future directions for improving these processes and for ensuring construct validity.
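Both methods named above reduce to a short calculation, sketched below in Python under stated assumptions. It follows the forms commonly described in the standard-setting literature. In an Angoff-style procedure, each judge estimates, for every item, the probability that a borderline candidate answers it correctly, and the panel cut score is the mean of the judges' implied scores. In the Hofstee method, the panel agrees on minimum and maximum acceptable cut scores (`k_min`, `k_max`) and fail rates (`f_min`, `f_max`), and the cut score is taken where the observed fail-rate curve crosses the line from (`k_min`, `f_max`) to (`k_max`, `f_min`). The function names, the percentage scale, the grid search, and the fallback to `k_max` when the curves do not meet are illustrative assumptions, not the author's procedure. "Modified" Angoff variants (for example, discussion rounds or item performance data) change how the ratings are gathered, not this final averaging step.

```python
from statistics import mean


def angoff_cut_score(ratings: list[list[float]]) -> float:
    """Panel cut score, as a percentage, from Angoff-style item ratings.

    ratings[j][i] is judge j's estimate (0.0 to 1.0) of the probability that
    a borderline candidate answers item i correctly.
    """
    # Each judge's implied cut score: the borderline candidate's expected mark (%).
    per_judge = [100.0 * sum(r) / len(r) for r in ratings]
    # The panel standard is the mean of the judges' individual cut scores.
    return mean(per_judge)


def fail_rate(scores: list[float], cut: float) -> float:
    """Fraction of candidates scoring strictly below the cut score."""
    return sum(s < cut for s in scores) / len(scores)


def hofstee_cut_score(scores: list[float], k_min: float, k_max: float,
                      f_min: float, f_max: float, step: float = 0.5) -> float:
    """Hofstee compromise cut score on a percentage scale.

    k_min, k_max: panel's mean minimum and maximum acceptable cut scores (%).
    f_min, f_max: panel's mean minimum and maximum acceptable fail rates (0 to 1).
    step: grid spacing for the search (a hypothetical parameter of this sketch).
    """
    if k_max <= k_min:
        raise ValueError("k_max must exceed k_min")
    n_steps = int((k_max - k_min) / step)
    for i in range(n_steps + 1):
        c = k_min + i * step
        # Straight line from (k_min, f_max) down to (k_max, f_min).
        line = f_max - (f_max - f_min) * (c - k_min) / (k_max - k_min)
        # The observed fail rate can only rise as c rises while the line falls,
        # so the first grid point where it reaches the line approximates
        # their intersection.
        if fail_rate(scores, c) >= line:
            return c
    # The curves do not meet inside the acceptable range: fall back to k_max.
    return k_max


if __name__ == "__main__":
    # Hypothetical data for illustration only.
    scores = [40, 45, 50, 55, 60, 65, 70, 75, 80, 85]
    print(angoff_cut_score([[0.5, 0.75], [0.25, 0.5]]))           # 50.0
    print(hofstee_cut_score(scores, 50, 70, 0.05, 0.40, step=1))  # 56
```

A grid search is used here instead of solving for the intersection exactly because the observed fail-rate curve is a step function of the candidates' actual scores; a finer `step` gives a closer approximation.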

![Standard Setting and Medical Students’ Assessment. Dr. Sanjoy Sanyal, Associate Professor – Neurosciences, Medical University of the Americas, Nevis, St. Kitts-Nevis, WI. [email_address]](https://image.slidesharecdn.com/xstandardsettinginmedicalexams-091129191641-phpapp01/75/Standard-Setting-In-Medical-Exams-1-2048.jpg)