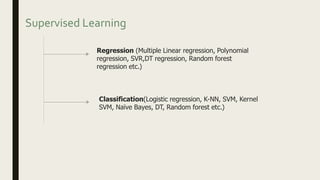

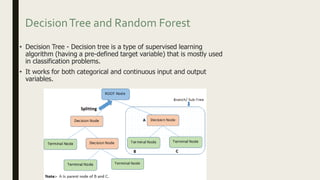

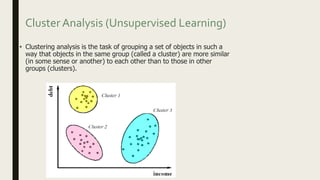

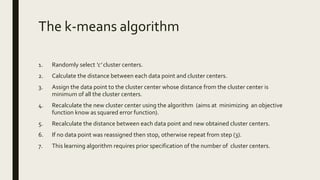

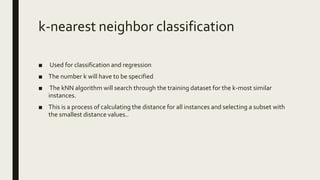

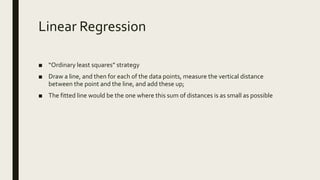

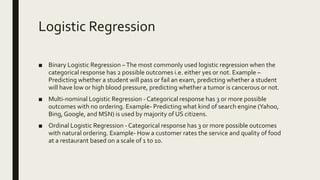

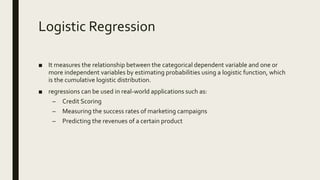

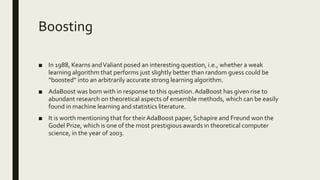

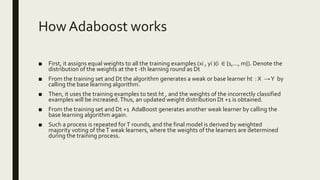

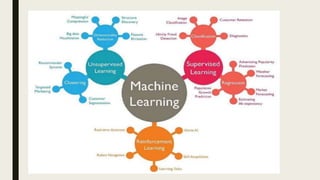

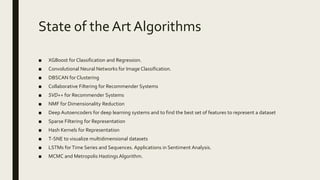

This document provides an overview of major data mining algorithms. The supervised learning techniques covered are decision trees, random forests, support vector machines, naive Bayes, and logistic regression. The unsupervised techniques covered are clustering algorithms such as k-means and expectation-maximization (EM), and association rule learning with the Apriori algorithm. For each technique, the document describes its application areas, advantages, and disadvantages. It also lists libraries for implementing these algorithms in Python and R.
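Apriori rests on one idea: an itemset can be frequent only if every one of its subsets is also frequent. The algorithm therefore builds size-k candidates from frequent itemsets of size k-1 and prunes them before scanning the data again. The example below is a minimal pure-Python sketch of that frequent-itemset search. It is an illustration, not the implementation from any of the libraries the document lists. It assumes that transactions are sets of item labels and that `min_support` is an absolute transaction count. The helper names `apriori` and `confidence` and the basket data are hypothetical.

```python
from itertools import combinations


def apriori(transactions, min_support):
    """Return {frozenset(itemset): support_count} for all frequent itemsets.

    min_support is an absolute number of transactions (an assumption of this sketch).
    """
    transactions = [frozenset(t) for t in transactions]

    # Level 1: count every single item and keep the frequent ones.
    counts = {}
    for t in transactions:
        for item in t:
            key = frozenset([item])
            counts[key] = counts.get(key, 0) + 1
    current = {s: c for s, c in counts.items() if c >= min_support}
    frequent = dict(current)

    k = 2
    while current:
        # Join step: merge pairs of frequent (k-1)-itemsets into k-item candidates.
        prev = list(current)
        candidates = set()
        for i in range(len(prev)):
            for j in range(i + 1, len(prev)):
                union = prev[i] | prev[j]
                if len(union) != k:
                    continue
                # Prune step: every (k-1)-subset must itself be frequent.
                if all(frozenset(sub) in current for sub in combinations(union, k - 1)):
                    candidates.add(union)

        # One pass over the data to count support for the surviving candidates.
        counts = {c: 0 for c in candidates}
        for t in transactions:
            for c in candidates:
                if c <= t:
                    counts[c] += 1
        current = {s: c for s, c in counts.items() if c >= min_support}
        frequent.update(current)
        k += 1

    return frequent


def confidence(frequent, antecedent, consequent):
    """Confidence of the rule A -> B: support(A together with B) / support(A)."""
    a = frozenset(antecedent)
    return frequent[a | frozenset(consequent)] / frequent[a]


# Hypothetical market-basket data, used only for illustration.
baskets = [
    {"bread", "milk"},
    {"bread", "diapers", "beer", "eggs"},
    {"milk", "diapers", "beer", "cola"},
    {"bread", "milk", "diapers", "beer"},
    {"bread", "milk", "diapers", "cola"},
]

frequent = apriori(baskets, min_support=3)
for itemset, count in sorted(frequent.items(), key=lambda kv: (len(kv[0]), sorted(kv[0]))):
    print(sorted(itemset), count)
print(confidence(frequent, {"diapers"}, {"beer"}))  # 3 / 4 = 0.75
```

With a threshold of 3, the sketch finds four frequent single items and four frequent pairs. The triple {bread, milk, diapers} passes the pruning test but appears in only two baskets, so it is discarded. The rule diapers -> beer has confidence 0.75. Library implementations add optimizations such as hash trees or vertical data layouts. This sketch keeps only the join, prune, and count steps.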