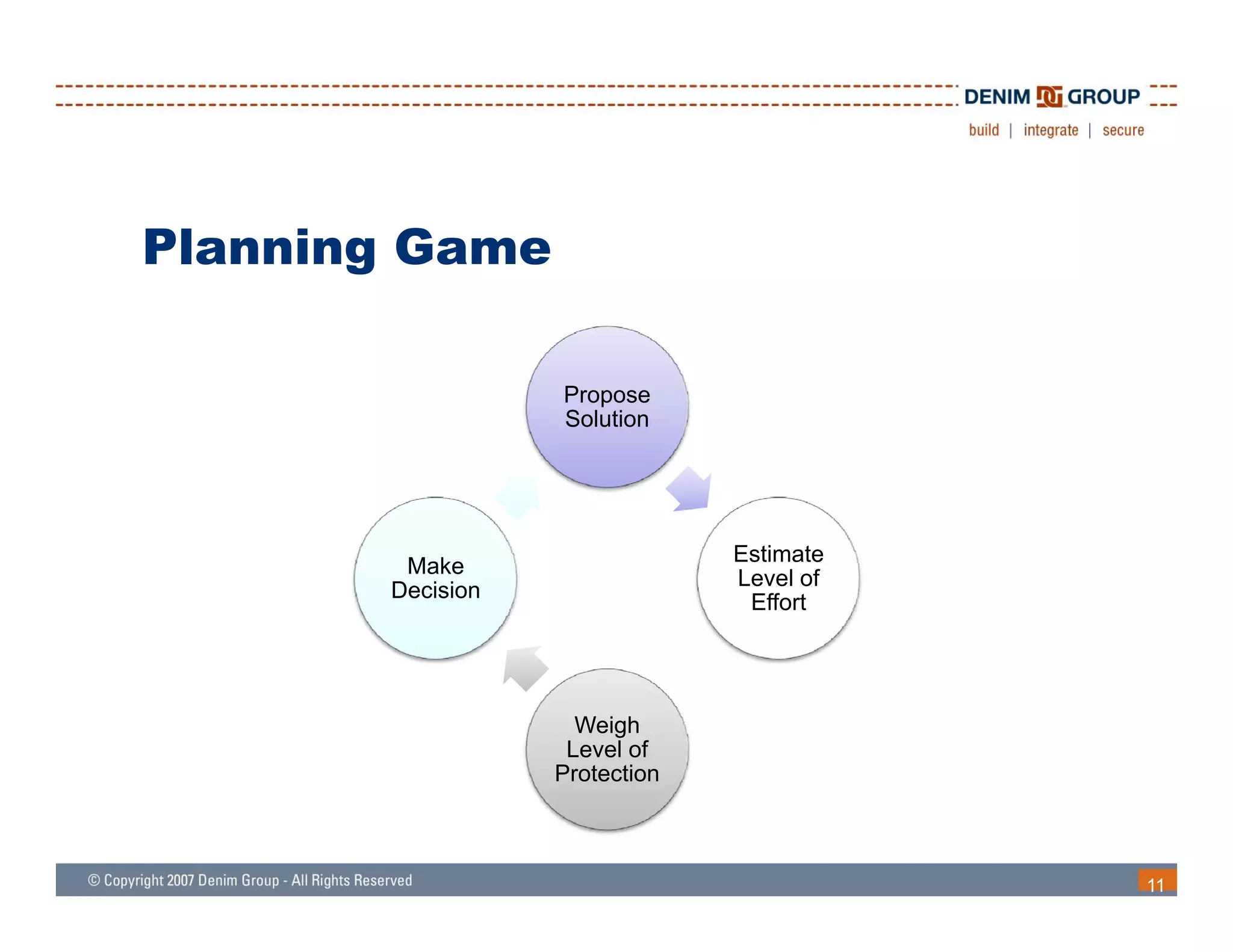

The document outlines a process for remediating vulnerabilities in web applications, emphasizing a business-driven approach and the involvement of stakeholders. It covers techniques for identifying and classifying vulnerabilities, planning the remediation effort, and explains why automated testing is a necessary part of remediation. It also stresses the role of communication and of learning from past remediation work to improve future security practices.