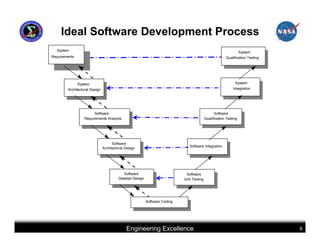

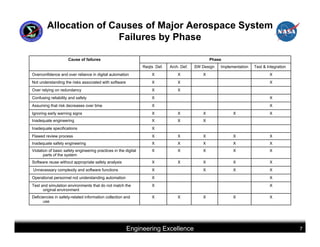

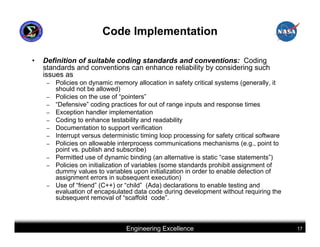

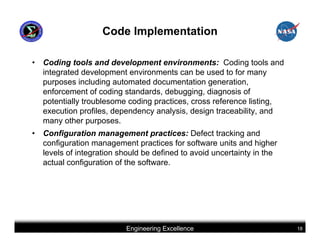

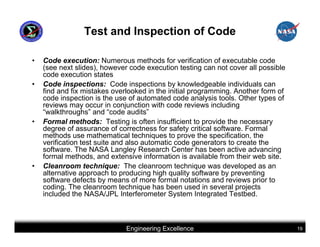

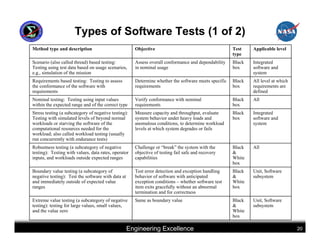

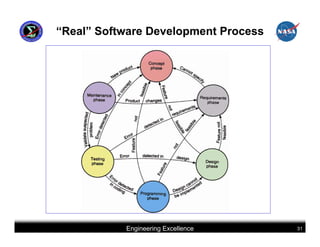

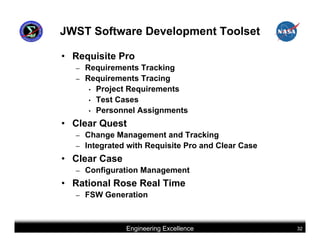

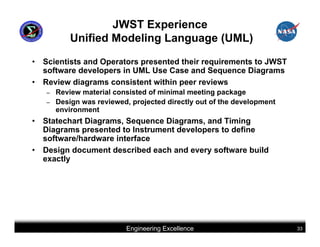

This document summarizes best practices for developing software for human-rated spacecraft. It discusses two approaches to increasing software reliability: defect prevention and fault tolerance. It also outlines the key phases of an ideal software development process: requirements analysis, architectural design, detailed design, coding, testing, and integration. Finally, it discusses considerations for requirements validation and verification, requirements management, and software architectural trades.