![Bibliography

• [1] Understanding LSTM Networks

• [2] Back Propagation Through Time and Vanishing Gradients](https://image.slidesharecdn.com/rnn-lstm-161106132927/85/Understanding-RNN-and-LSTM-14-320.jpg)

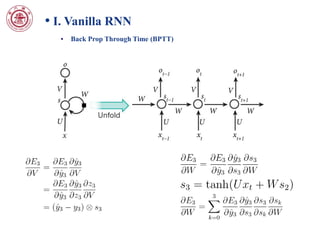

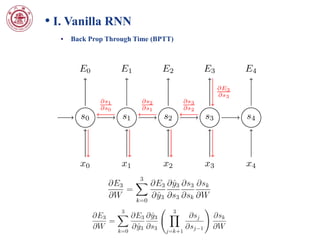

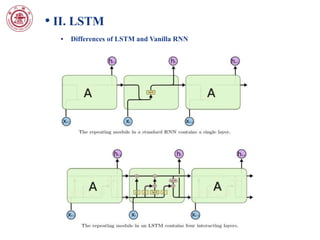

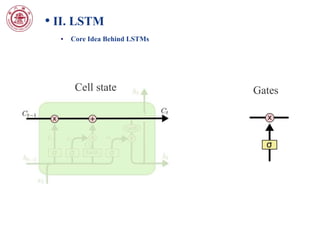

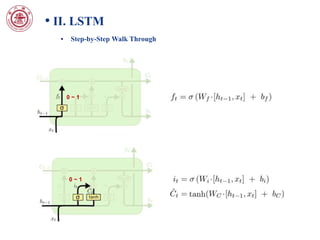

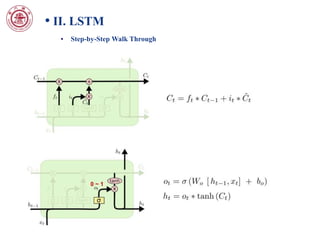

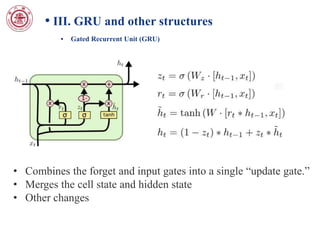

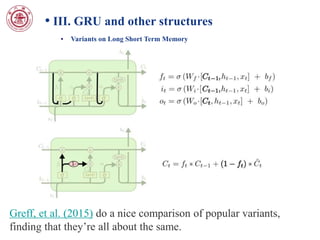

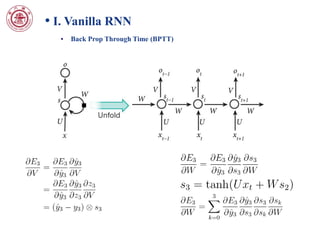

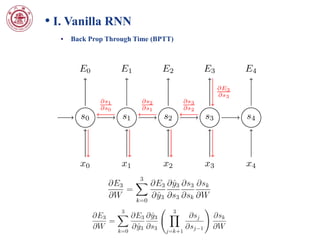

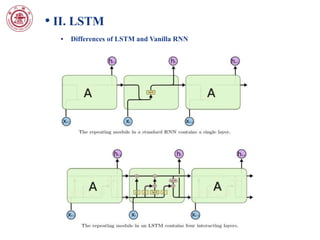

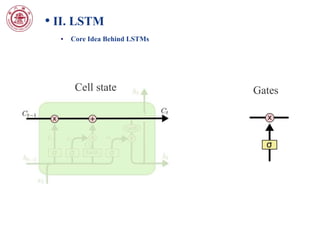

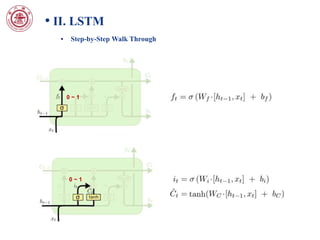

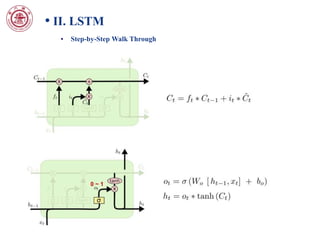

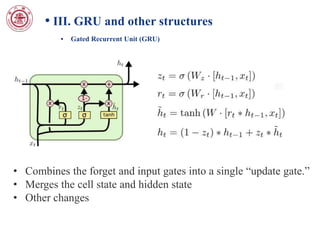

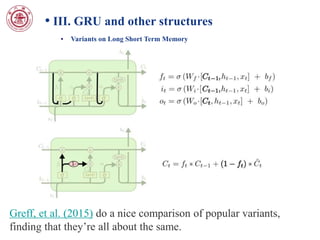

This presentation covers three types of recurrent neural network (RNN): vanilla RNNs, LSTMs, and GRUs. Vanilla RNNs can in theory capture long-term dependencies, but in practice they often fail to because of the vanishing gradient problem. LSTMs address this with a cell state that is regulated by gates. The presentation walks through how an LSTM works step by step and compares its architecture with that of the GRU, which combines the LSTM's forget and input gates into a single update gate.
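
The gate-and-cell-state mechanism summarized above can be sketched in a few lines of plain Python. This is a minimal illustration of the standard LSTM update, not code from the slides. The names `lstm_step`, `affine`, `zero_params` and the `W_*`/`b_*` parameter keys are hypothetical and chosen only for this example.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def affine(W, b, v):
    """Return W @ v + b, where W is a list of rows."""
    return [sum(w * x for w, x in zip(row, v)) + b_k for row, b_k in zip(W, b)]

def lstm_step(x_t, h_prev, c_prev, params):
    """One LSTM time step; returns the new hidden state and cell state."""
    v = h_prev + x_t  # list concatenation: [h_{t-1}, x_t]
    f = [sigmoid(a) for a in affine(params["W_f"], params["b_f"], v)]    # forget gate
    i = [sigmoid(a) for a in affine(params["W_i"], params["b_i"], v)]    # input gate
    g = [math.tanh(a) for a in affine(params["W_c"], params["b_c"], v)]  # candidate values
    o = [sigmoid(a) for a in affine(params["W_o"], params["b_o"], v)]    # output gate
    # Cell state: keep part of the old state, add part of the candidate.
    c_t = [f_k * c_k + i_k * g_k for f_k, c_k, i_k, g_k in zip(f, c_prev, i, g)]
    # Hidden state: a gated, squashed view of the cell state.
    h_t = [o_k * math.tanh(c_k) for o_k, c_k in zip(o, c_t)]
    return h_t, c_t

def zero_params(hidden, n_in):
    """All-zero weights (an assumption for the demo): every gate outputs 0.5."""
    params = {}
    for gate in "fico":
        params["W_" + gate] = [[0.0] * (hidden + n_in) for _ in range(hidden)]
        params["b_" + gate] = [0.0] * hidden
    return params

params = zero_params(hidden=2, n_in=1)
h, c = [0.0, 0.0], [1.0, 1.0]
for x_t in ([0.5], [-1.0], [2.0]):
    h, c = lstm_step(x_t, h, c, params)
```

With the all-zero demo weights the forget gate is fixed at 0.5, so the cell state simply halves at every step. In a trained network the gates depend on the input and previous hidden state, and a forget gate close to 1 lets the cell state, and the gradient flowing through it, pass across many steps largely unchanged.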
