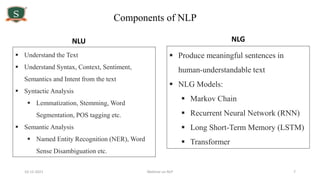

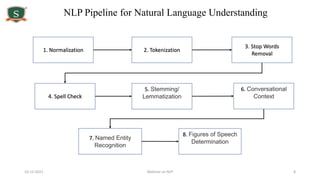

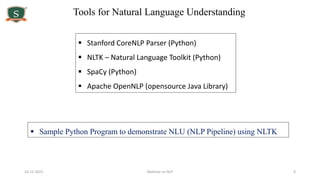

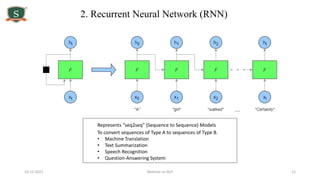

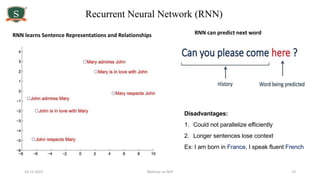

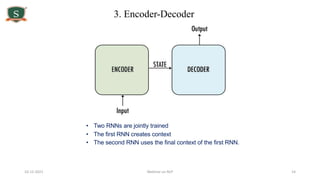

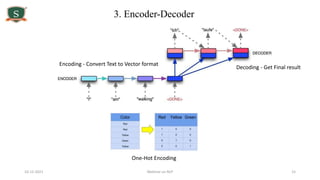

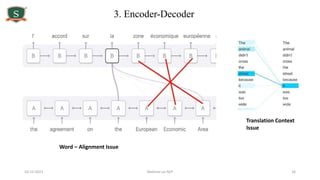

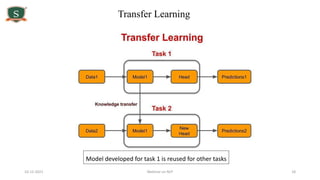

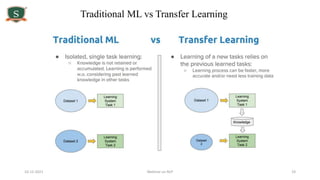

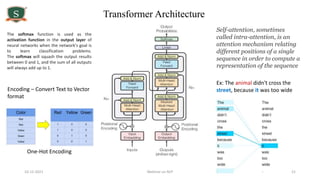

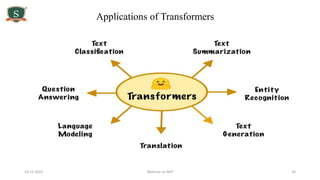

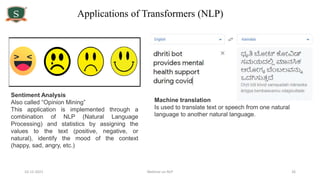

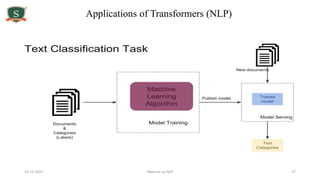

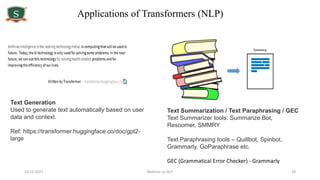

The document outlines a webinar on natural language processing (NLP) presented by Dr. Shreyas Rao, covering the basics, research, applications, and trends in the field. It highlights the importance of NLP in enabling machines to understand human language and describes the models, including neural networks and transformers, that are pivotal to NLP tasks. The webinar also discusses research and project opportunities for students in NLP, emphasizing the significance of transformer models in modern applications such as chatbots, sentiment analysis, and machine translation.