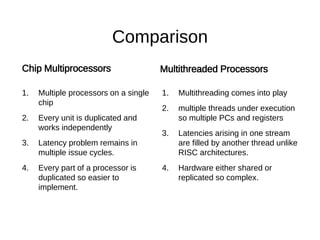

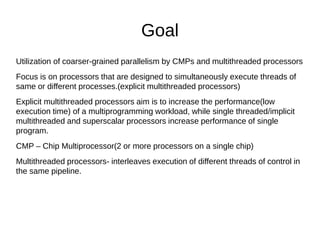

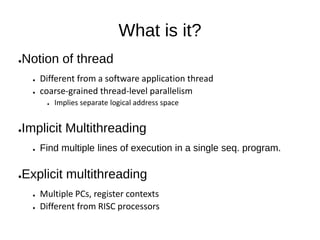

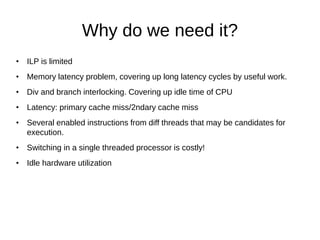

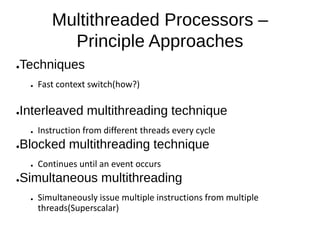

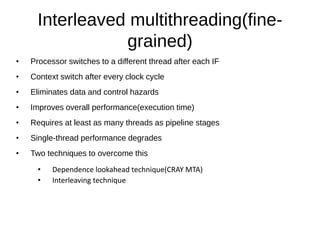

This document discusses multithreaded processors, which exploit coarser-grained (thread-level) parallelism to improve the performance of multiprogramming workloads. It compares explicitly multithreaded processors with single-threaded and superscalar processors, and outlines multithreading techniques such as interleaved, blocked, and simultaneous multithreading. It also covers the advantages and challenges of these techniques, particularly in managing latency and context switching, and summarizes findings from notable surveys on the subject.
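As a rough illustration of how interleaved and blocked multithreading differ, the sketch below simulates a single-issue pipeline under both policies. It is a toy model and is not taken from the slides: the `simulate` function, the `"A"`/`"M"` instruction encoding, and the `MISS_LATENCY` value are all assumptions made for this example, context-switch cost is ignored, and simultaneous multithreading is not modeled.

```python
from typing import List, Optional

MISS_LATENCY = 3  # assumed stall, in cycles, after a thread issues an "M" op


def simulate(threads: List[str], policy: str) -> List[Optional[int]]:
    """Return, per cycle, the id of the thread that issued (None = idle slot).

    Each thread is a string of ops: "A" is a 1-cycle op, "M" is a
    long-latency op that makes its thread unavailable for MISS_LATENCY
    extra cycles. policy is "interleaved" or "blocked".
    """
    n = len(threads)
    pc = [0] * n        # next instruction index per thread
    ready_at = [0] * n  # first cycle at which each thread may issue again
    schedule: List[Optional[int]] = []
    last = -1           # thread that issued most recently
    cycle = 0

    def runnable(t: int) -> bool:
        return pc[t] < len(threads[t]) and ready_at[t] <= cycle

    while any(pc[t] < len(threads[t]) for t in range(n)):
        # Blocked: stay on the last thread while it can run, switch only
        # when it stalls or finishes. Interleaved: rotate every cycle.
        stay = policy == "blocked" and last >= 0
        start = last if stay else last + 1
        order = [(start + k) % n for k in range(n)]
        chosen = next((t for t in order if runnable(t)), None)
        if chosen is not None:
            op = threads[chosen][pc[chosen]]
            pc[chosen] += 1
            ready_at[chosen] = cycle + 1 + (MISS_LATENCY if op == "M" else 0)
            last = chosen
        schedule.append(chosen)
        cycle += 1
    return schedule


if __name__ == "__main__":
    workload = ["AMA", "AA"]  # two hypothetical threads
    print("interleaved:", simulate(workload, "interleaved"))
    print("blocked:    ", simulate(workload, "blocked"))
```

Printing the two schedules side by side shows where each policy switches threads and where idle slots remain. The cycle counts depend entirely on the assumed workload and latency, so they should not be read as a general ranking of the two techniques.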

![Taken from [2]. Survey of processors with explicit multithreading.](https://image.slidesharecdn.com/multithreadedprocessorsppt-150424233814-conversion-gate01/85/Multithreaded-processors-ppt-6-320.jpg)

![Taken from [2]. Survey of processors with explicit multithreading.](https://image.slidesharecdn.com/multithreadedprocessorsppt-150424233814-conversion-gate01/85/Multithreaded-processors-ppt-13-320.jpg)