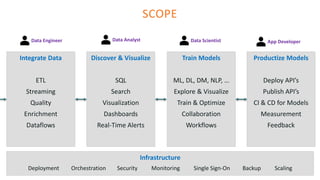

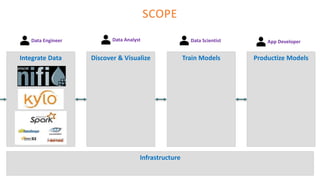

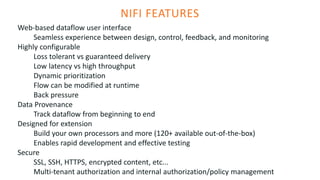

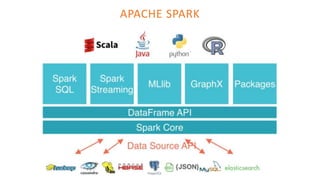

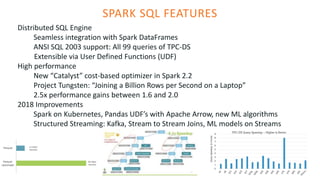

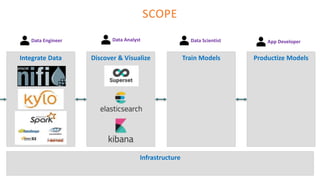

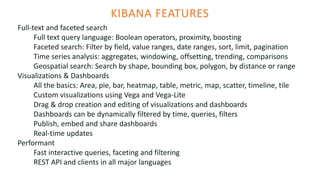

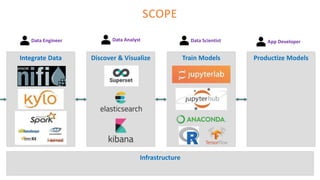

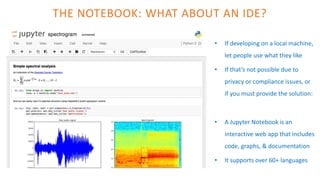

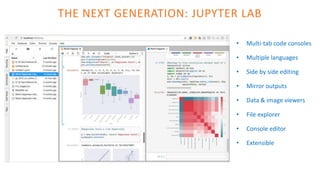

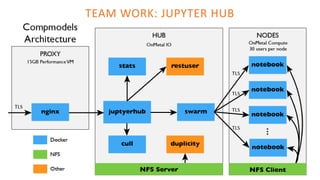

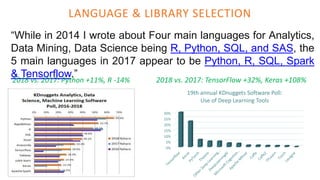

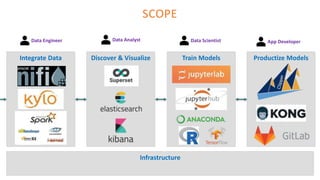

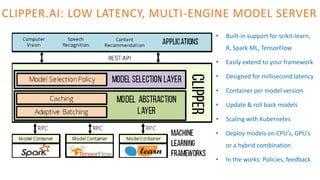

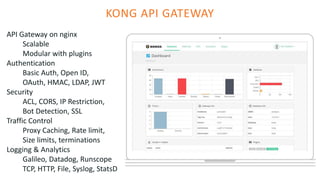

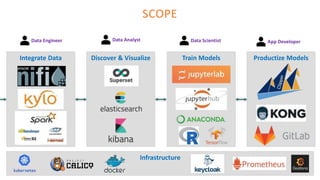

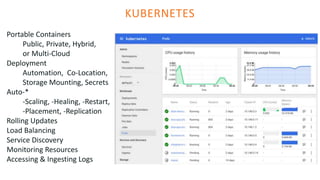

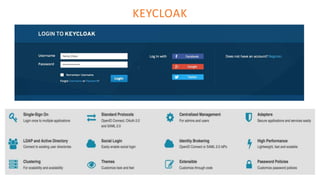

The document outlines the architecture of an open-source data science platform and details its components, such as data integration, model training, and visualization tools. It emphasizes technologies such as Apache NiFi, Spark, and Jupyter for data processing and analysis, and discusses deployment considerations involving Kubernetes and API management. The platform aims for enterprise scalability and modularity without relying on commercial software, and the document advocates evolving it incrementally over time.
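As a rough illustration of the modularity and incremental evolution described above, the sketch below models a platform as a set of named, swappable stages. It is not taken from the slides: the `Pipeline` class, the stage names, and the `ingest` and `scale` functions are all hypothetical stand-ins written in plain Python, and they do not call NiFi, Spark, or Jupyter.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Record = Dict[str, float]
Stage = Callable[[List[Record]], List[Record]]


@dataclass
class Pipeline:
    """Hypothetical container of named stages, run in registration order."""
    stages: Dict[str, Stage] = field(default_factory=dict)

    def register(self, name: str, stage: Stage) -> None:
        # Registering an existing name replaces that stage in place,
        # which is how one component can be swapped without touching the rest.
        self.stages[name] = stage

    def run(self, records: List[Record]) -> List[Record]:
        for stage in self.stages.values():
            records = stage(records)
        return records


def ingest(records: List[Record]) -> List[Record]:
    # Stand-in for a data integration step: keep only records with a "value" field.
    return [r for r in records if "value" in r]


def scale(records: List[Record]) -> List[Record]:
    # Stand-in for a processing step: double each value.
    return [{**r, "value": r["value"] * 2} for r in records]


pipeline = Pipeline()
pipeline.register("ingest", ingest)
pipeline.register("process", scale)
result = pipeline.run([{"value": 1.0}, {"other": 3.0}])
```

Calling `pipeline.register("process", other_stage)` later replaces only the processing step and leaves the ingestion step untouched, which is the kind of incremental change a modular design is meant to allow.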