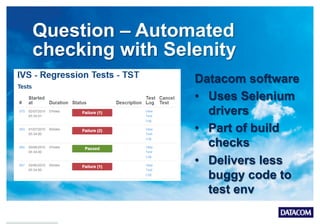

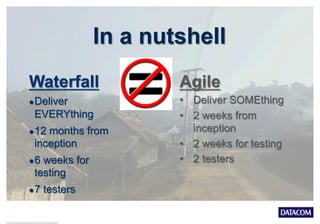

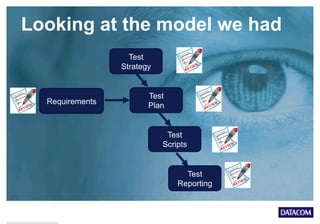

The document discusses the transition from a traditional waterfall testing model to an agile testing model. It argues for focusing on the needs of the customer rather than on rigid processes and deliverables. An effective agile testing approach emphasizes relationships, lightweight processes, transparency, and making testing work visible. Testers should avoid the "cargo cult" trap of following old procedures simply because that is how things have always been done, and should instead continuously review and improve their testing practices.

![Test Reporting: What testing is complete? What problems have there been? [Defects] What testing have we done?](https://image.slidesharecdn.com/miketalksagilenz-deprogrammingthecargocultagilenzconferencesconflictedcopy-150909015045-lva1-app6892/85/Mike-Talks-Datacom-24-320.jpg)

![That’s a lot of questions … What testing is complete? What problems have there been? [Defects] What testing have we done? What testing are we going to do? What requirement/feature are we testing? What testing have we done? How do new testers learn/test on the system? What are we going to test? Who is going to test? How long is it going to take? How do we go about delivering testing?](https://image.slidesharecdn.com/miketalksagilenz-deprogrammingthecargocultagilenzconferencesconflictedcopy-150909015045-lva1-app6892/85/Mike-Talks-Datacom-25-320.jpg)

![Testing Cycle 3: Doing The Testing (pt3) – Problems! What problems have there been? [Defects]](https://image.slidesharecdn.com/miketalksagilenz-deprogrammingthecargocultagilenzconferencesconflictedcopy-150909015045-lva1-app6892/85/Mike-Talks-Datacom-42-320.jpg)