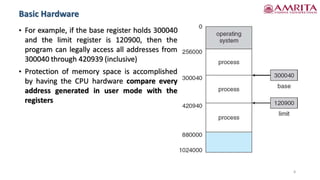

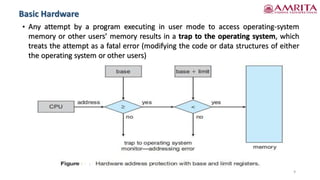

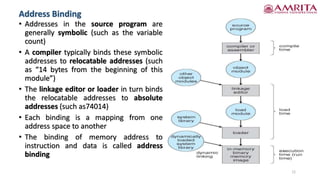

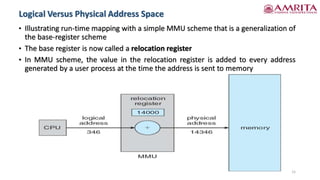

The document discusses memory management techniques in computer systems, focusing on paging and segmentation and on the role of hardware in protecting each process's memory space. It explains the three stages at which addresses can be bound (compile time, load time, and execution time), distinguishes logical addresses from physical addresses, and covers dynamic loading and dynamic linking. These techniques matter because keeping several processes in memory at once, safely isolated from one another, is what allows the system to keep the CPU busy and improve overall performance.
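As a rough illustration of how execution-time binding and hardware protection fit together, the following minimal sketch models a relocation (base) register and a limit register. This is an assumption about one common scheme, not necessarily the one the document presents; the names `RelocationUnit` and `ProtectionFault` and the register values are hypothetical and chosen only for the example.

```python
from dataclasses import dataclass


class ProtectionFault(Exception):
    """Raised when a logical address falls outside the process's range."""


@dataclass(frozen=True)
class RelocationUnit:
    # Hypothetical relocation (base) register: where the process starts in physical memory.
    base: int
    # Hypothetical limit register: size of the process's logical address space.
    limit: int

    def translate(self, logical: int) -> int:
        # Protection check: valid logical addresses satisfy 0 <= logical < limit.
        if not 0 <= logical < self.limit:
            raise ProtectionFault(
                f"logical address {logical} outside [0, {self.limit})"
            )
        # Execution-time binding: the physical address is computed on every access.
        return self.base + logical


# Illustrative values only.
mmu = RelocationUnit(base=14000, limit=3000)
physical = mmu.translate(346)
print(physical)
```

Because the mapping is applied on each access, the process could in principle be moved in physical memory by changing only `base`, while the program keeps using the same logical addresses.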