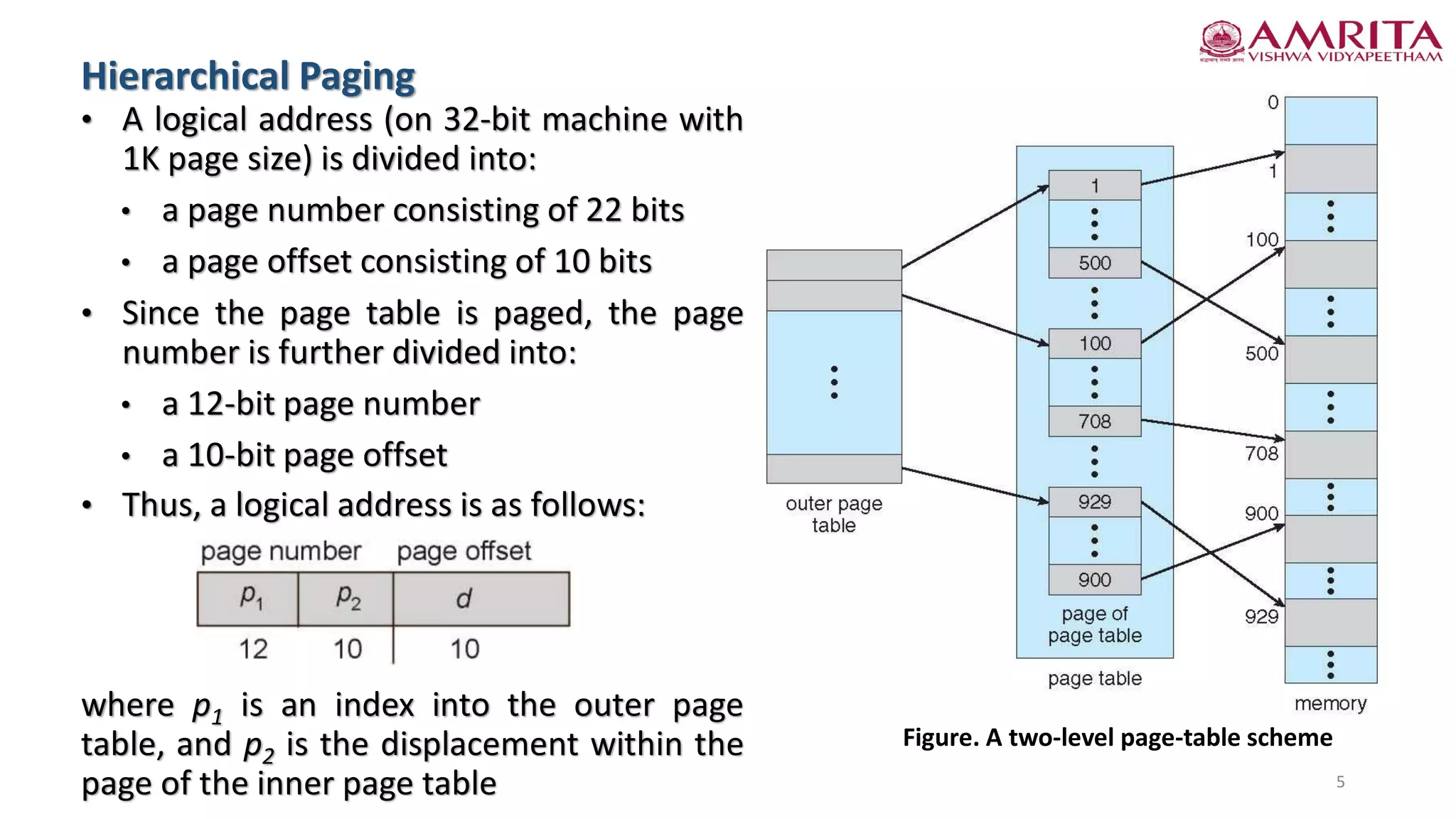

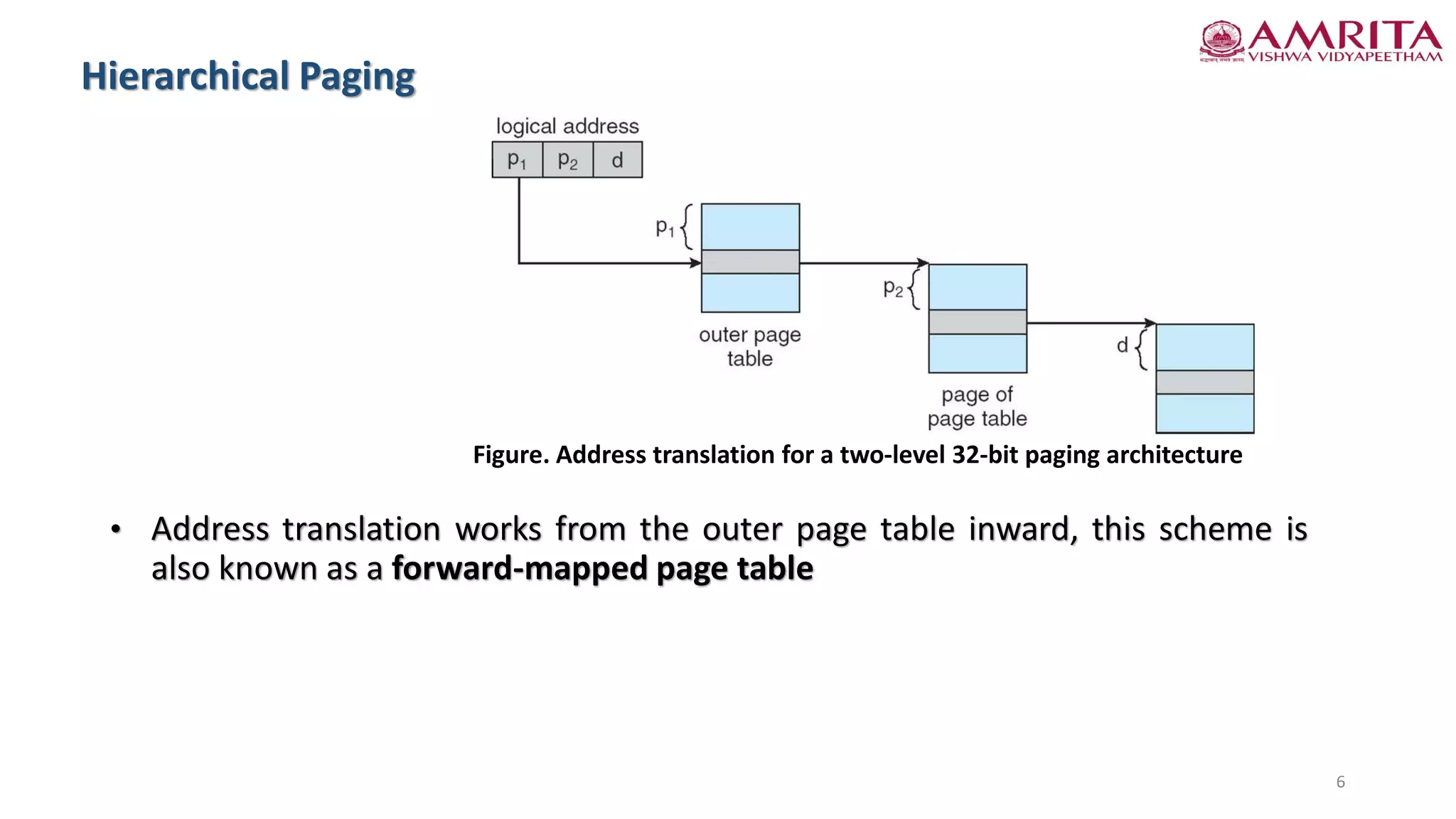

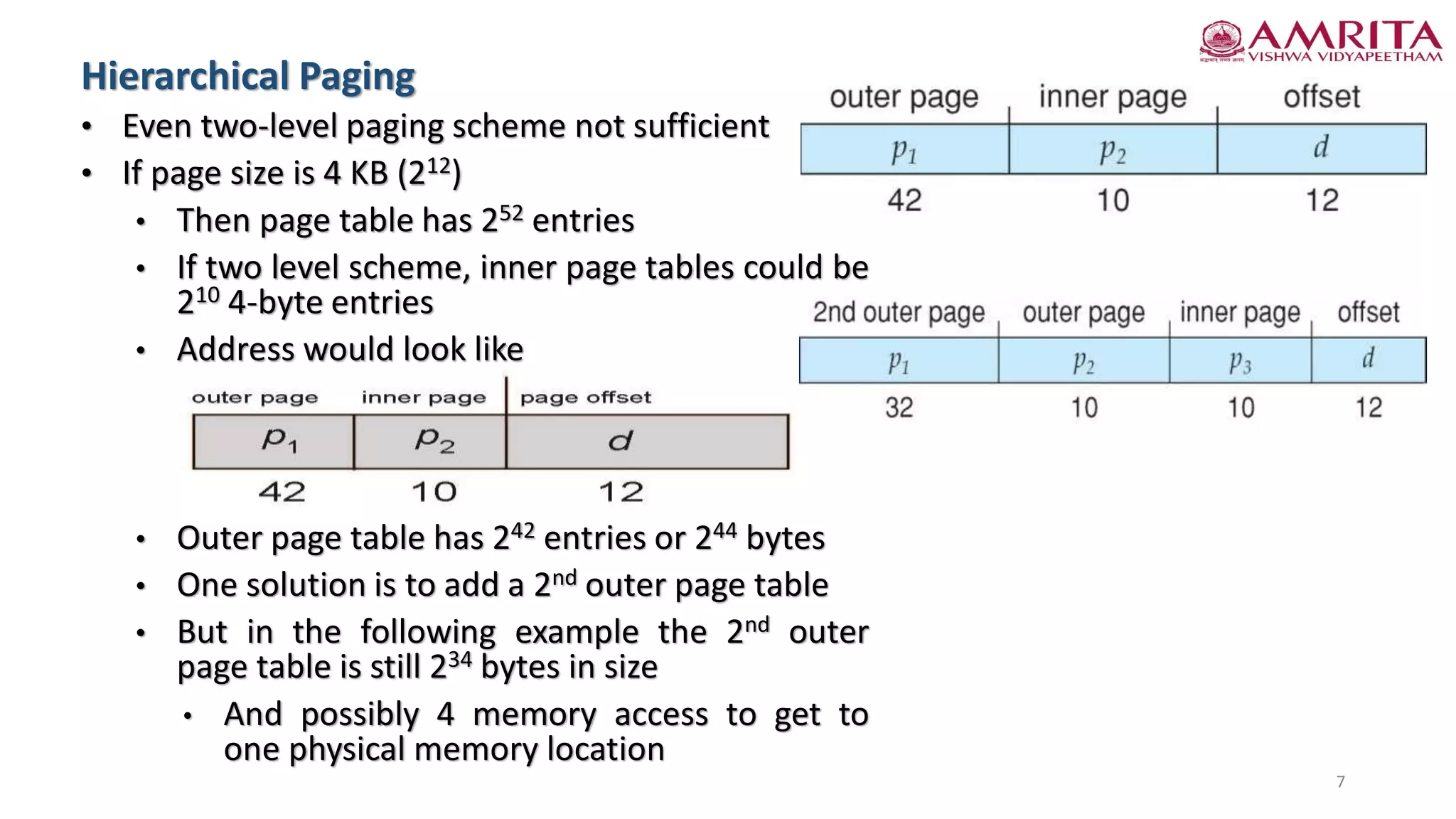

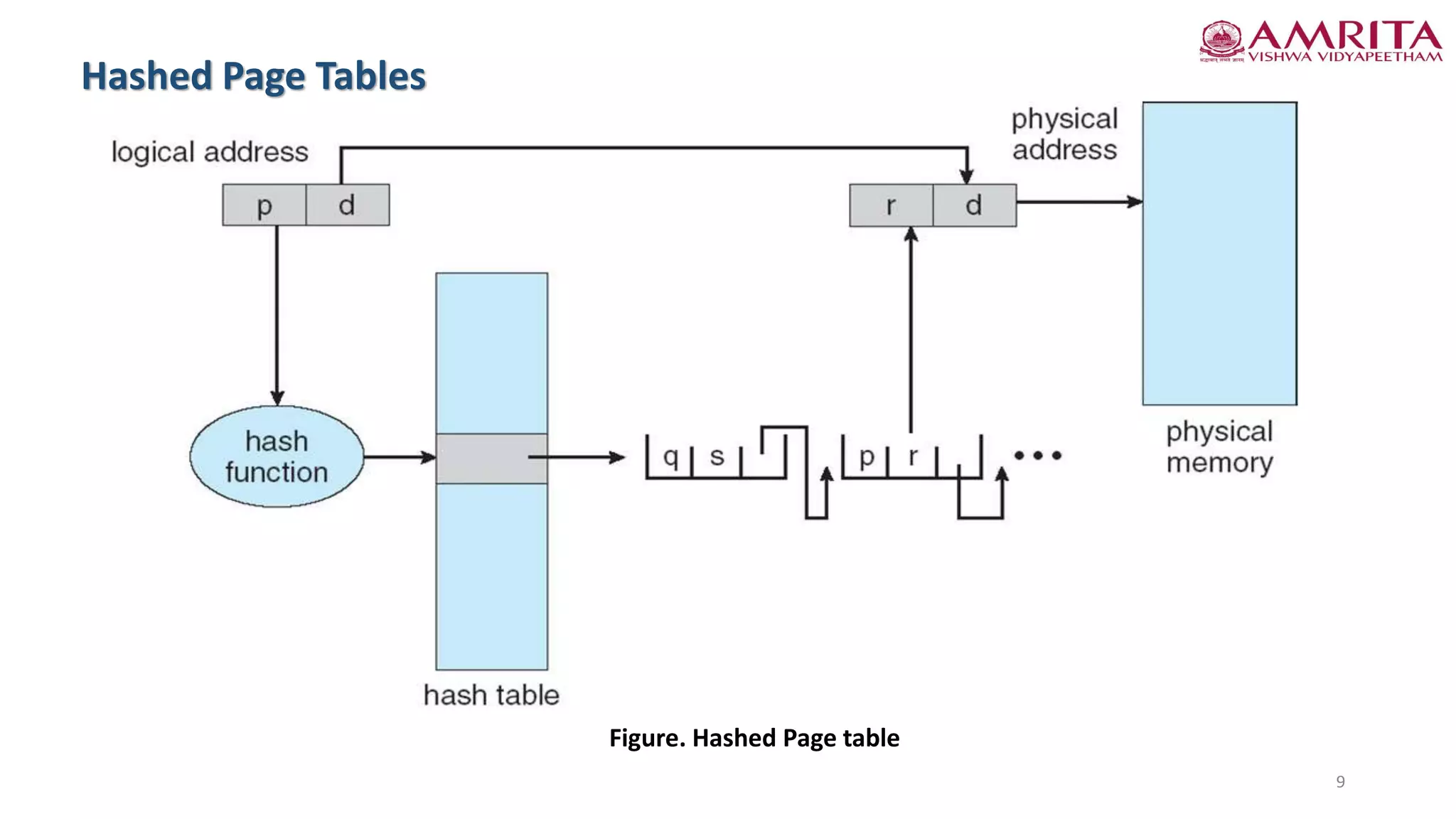

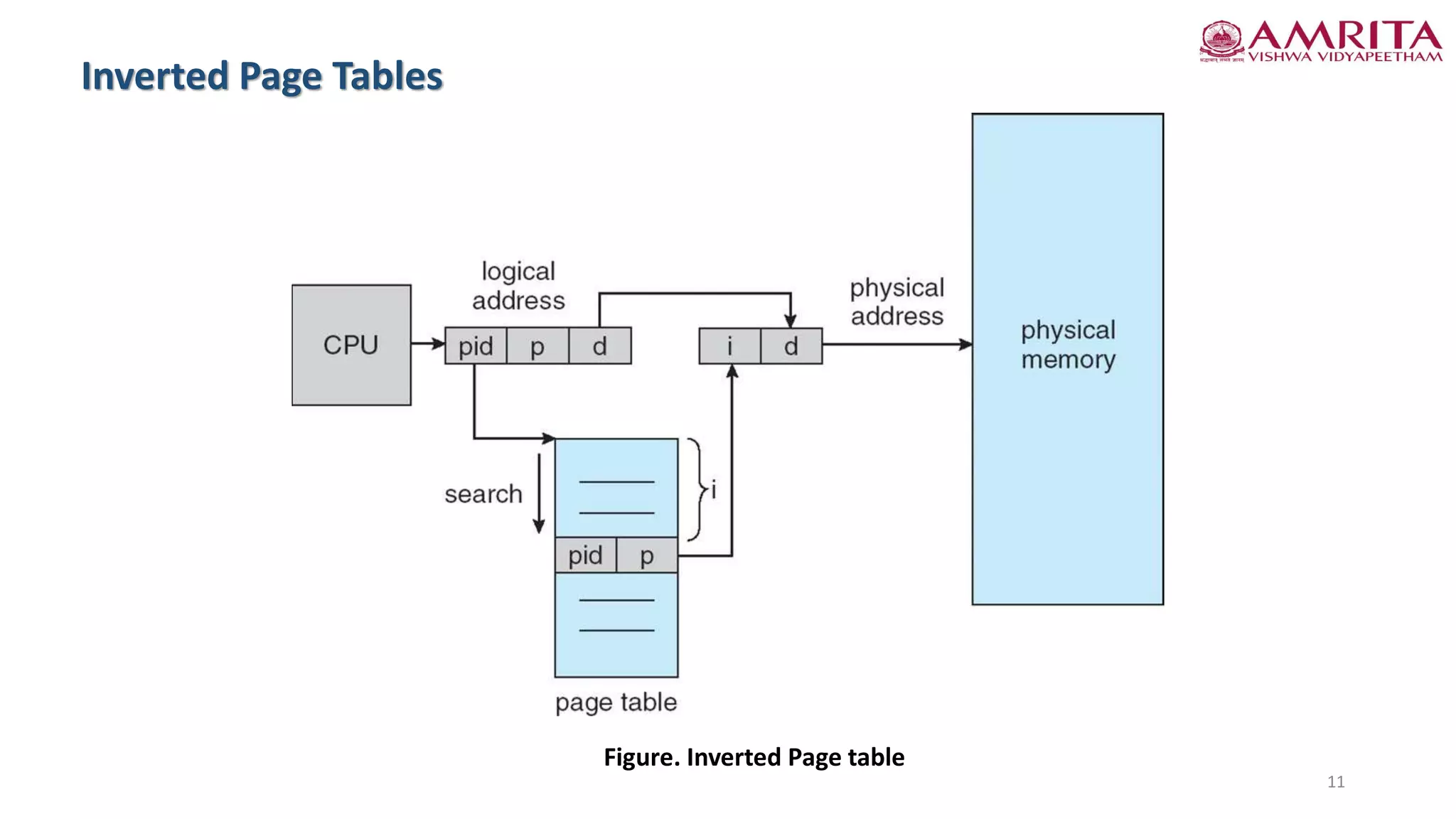

The document discusses memory management in computer systems, focusing on three ways to structure large page tables in modern operating systems: hierarchical paging, hashed page tables, and inverted page tables. It explains why allocating a large page table contiguously in memory is a problem and presents these schemes as ways to reduce memory usage while improving performance. Examples and figures illustrate two-level paging schemes and hash-based address translation.
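To make the two-level paging idea concrete, here is a minimal sketch of how a logical address could be split and translated. The summary gives no specific layout, so the 32-bit address with a 10-bit outer index, a 10-bit inner index, and a 12-bit offset is an assumption. The names `split_address` and `translate` are hypothetical, as is the use of nested dictionaries to stand in for page tables.

```python
# Assumed layout (not taken from the source): 32-bit logical address,
# 4 KiB pages, and a page number split into two 10-bit indices.
PAGE_OFFSET_BITS = 12
INNER_INDEX_BITS = 10
OUTER_INDEX_BITS = 10


def split_address(logical_address: int) -> tuple[int, int, int]:
    """Return (outer index, inner index, page offset) for a logical address."""
    offset = logical_address & ((1 << PAGE_OFFSET_BITS) - 1)
    inner = (logical_address >> PAGE_OFFSET_BITS) & ((1 << INNER_INDEX_BITS) - 1)
    outer = (logical_address >> (PAGE_OFFSET_BITS + INNER_INDEX_BITS)) & (
        (1 << OUTER_INDEX_BITS) - 1
    )
    return outer, inner, offset


def translate(logical_address: int, outer_table: dict[int, dict[int, int]]) -> int:
    """Walk a two-level table: outer index -> inner table -> frame number.

    Only the inner tables that are actually in use need to exist, which is
    why the page table no longer has to be one large contiguous array.
    """
    outer, inner, offset = split_address(logical_address)
    inner_table = outer_table.get(outer)
    if inner_table is None or inner not in inner_table:
        raise KeyError(f"page fault at address {logical_address:#x}")
    frame = inner_table[inner]
    return (frame << PAGE_OFFSET_BITS) | offset


# Hypothetical mapping: outer entry 1, inner entry 3 -> frame 7.
outer_table = {1: {3: 7}}
physical = translate(0x00403004, outer_table)  # 0x7004
```

Under these assumptions, address `0x00403004` splits into outer index 1, inner index 3, and offset 4, so the lookup lands in frame 7 and yields physical address `0x7004`. A real MMU performs this walk in hardware and caches results in a TLB; the dictionaries here only illustrate the indexing.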