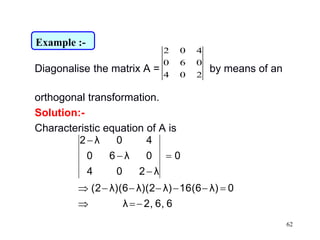

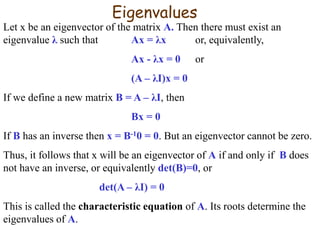

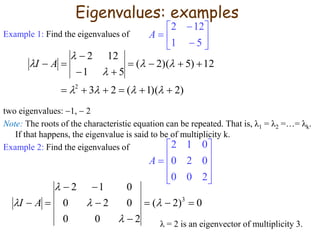

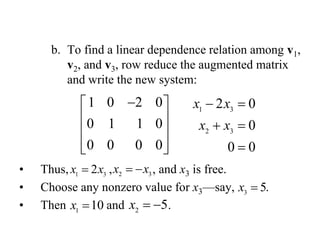

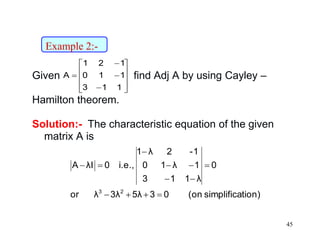

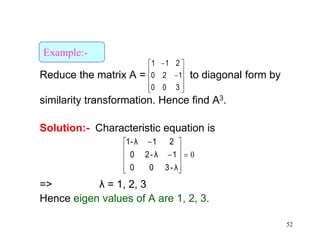

Here are the key steps to find the eigenvalues of the given matrix:

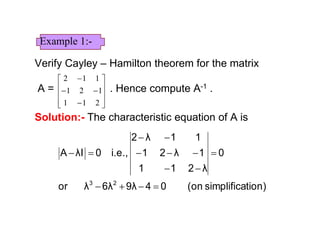

1) Write the characteristic equation: det(A - λI) = 0

2) Expand the determinant: (1-λ)(-2-λ) - 4 = 0

3) Simplify and factor: λ(λ + 1)(λ + 2) = 0

4) Find the roots: λ1 = 0, λ2 = -1, λ3 = -2

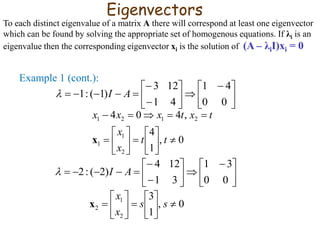

Therefore, the eigenvalues of the given matrix are -1 and -2.
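As a quick numerical check of the characteristic equation (1-λ)(-2-λ) - 4 = 0, here is a minimal sketch. The matrix `[[1, 2], [2, -2]]` is a hypothetical stand-in, chosen only because its characteristic determinant matches the expansion in step 2; the matrix in the image may differ. The helper name `eigenvalues_2x2` is likewise illustrative.

```python
import math

def eigenvalues_2x2(a, b, c, d):
    """Real eigenvalues of [[a, b], [c, d]].

    Uses det(A - λI) = λ² - (a + d)λ + (ad - bc) = 0 and the quadratic formula.
    """
    trace = a + d
    det = a * d - b * c
    disc = trace * trace - 4 * det
    if disc < 0:
        raise ValueError("eigenvalues are complex for this matrix")
    root = math.sqrt(disc)
    return ((trace + root) / 2, (trace - root) / 2)

# Hypothetical matrix with det(A - λI) = (1 - λ)(-2 - λ) - 4
lam1, lam2 = eigenvalues_2x2(1, 2, 2, -2)
print(lam1, lam2)
```

Solving the quadratic directly avoids any dependency on a linear algebra library, which is enough for the 2×2 case.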

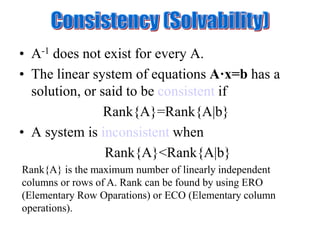

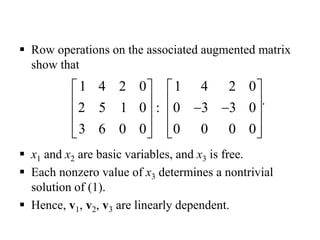

![Elementary row and column operations](https://image.slidesharecdn.com/matricesppt-171108100049/85/Matrices-ppt-15-320.jpg)

The following elementary row operations (ERO), applied to the augmented matrix [A|b], yield an equivalent linear system:

- Interchange: swap two rows.
- Scaling: multiply a row by a nonzero constant.
- Sum: replace a row by the sum of that row and a nonzero multiple of any other row.

The same operations applied to columns (ECO) preserve the rank, although in general they do not preserve the solution set of the system.

One can use ERO or ECO to find the rank as follows:

ERO → minimum number of rows with at least one nonzero entry

or

ECO → minimum number of columns with at least one nonzero entry
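The rank computation by row operations can be sketched as follows. This is a minimal illustration, not the slide author's procedure: it reduces the matrix to row echelon form using only the three operations listed (interchange, scaling, sum) and counts the rows that still contain a nonzero entry. The function name `rank` and the sample matrix are assumptions made for the example; exact arithmetic with `Fraction` avoids rounding issues.

```python
from fractions import Fraction

def rank(matrix):
    """Rank via elementary row operations (row echelon form)."""
    rows = [[Fraction(x) for x in row] for row in matrix]
    n_rows = len(rows)
    n_cols = len(rows[0]) if rows else 0
    r = 0  # number of pivot rows found so far
    for col in range(n_cols):
        if r == n_rows:
            break
        # Look for a row at or below r with a nonzero entry in this column.
        pivot = next((i for i in range(r, n_rows) if rows[i][col] != 0), None)
        if pivot is None:
            continue
        # Interchange: move the pivot row into position r.
        rows[r], rows[pivot] = rows[pivot], rows[r]
        # Scaling: make the pivot entry equal to 1.
        pv = rows[r][col]
        rows[r] = [x / pv for x in rows[r]]
        # Sum: subtract multiples of the pivot row from the rows below it.
        for i in range(r + 1, n_rows):
            factor = rows[i][col]
            if factor != 0:
                rows[i] = [x - factor * y for x, y in zip(rows[i], rows[r])]
        r += 1
    return r  # number of nonzero rows in the echelon form

# Second row is twice the first, so only two rows are independent.
example_rank = rank([[1, 2, 3], [2, 4, 6], [1, 0, 1]])
print(example_rank)
```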

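![Cayley-Hamilton theorem](https://image.slidesharecdn.com/matricesppt-171108100049/85/Matrices-ppt-37-320.jpg)

CAYLEY-HAMILTON THEOREM

Every square matrix satisfies its own characteristic equation.

Let $A = [a_{ij}]_{n \times n}$ be a square matrix, then

$$
A = \begin{bmatrix}
a_{11} & a_{12} & \dots & a_{1n} \\
a_{21} & a_{22} & \dots & a_{2n} \\
\vdots & \vdots & \ddots & \vdots \\
a_{n1} & a_{n2} & \dots & a_{nn}
\end{bmatrix}
$$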
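The theorem can be checked numerically on a small case. The sketch below assumes a hypothetical 2×2 matrix `A = [[1, 2], [2, -2]]` (not taken from the slides) and uses the fact that the characteristic equation of a 2×2 matrix is λ² - (trace)λ + (det) = 0, so the matrix expression A² - (trace)A + (det)I should be the zero matrix.

```python
def mat_mul(X, Y):
    """Product of two matrices given as lists of rows."""
    return [
        [sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
        for i in range(len(X))
    ]

# Hypothetical example matrix, chosen for illustration only.
A = [[1, 2], [2, -2]]
I = [[1, 0], [0, 1]]

trace = A[0][0] + A[1][1]
det = A[0][0] * A[1][1] - A[0][1] * A[1][0]
A2 = mat_mul(A, A)

# Substitute A into its own characteristic polynomial.
residual = [
    [A2[i][j] - trace * A[i][j] + det * I[i][j] for j in range(2)]
    for i in range(2)
]
print(residual)
```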

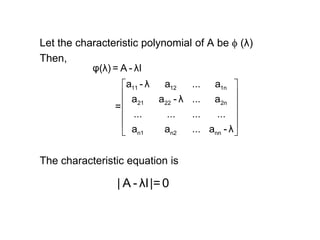

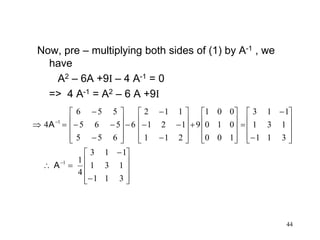

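![Note 1: inverse of a matrix from the Cayley-Hamilton theorem](https://image.slidesharecdn.com/matricesppt-171108100049/85/Matrices-ppt-39-320.jpg)

We are to prove that if the characteristic equation of $A$ is

$$p_0 \lambda^n + p_1 \lambda^{n-1} + p_2 \lambda^{n-2} + \dots + p_n = 0,$$

then

$$p_0 A^n + p_1 A^{n-1} + p_2 A^{n-2} + \dots + p_n I = 0 \qquad (1)$$

Note 1: Premultiplying equation (1) by $A^{-1}$, we have

$$0 = p_0 A^{n-1} + p_1 A^{n-2} + p_2 A^{n-3} + \dots + p_{n-1} I + p_n A^{-1}$$

$$A^{-1} = -\frac{1}{p_n}\left[p_0 A^{n-1} + p_1 A^{n-2} + p_2 A^{n-3} + \dots + p_{n-1} I\right]$$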
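The inverse formula in Note 1 can be sketched in code. This is a minimal illustration under stated assumptions: the coefficients `p = [p0, p1, ..., pn]` of the characteristic polynomial are supplied by the caller, `p_n` is nonzero (so the matrix is invertible), and the names `inverse_from_char_poly`, `mat_mul`, and `identity` are hypothetical helpers. The example matrix `[[1, 2], [2, -2]]` with characteristic equation λ² + λ - 6 = 0 is chosen for illustration and is not from the slides.

```python
from fractions import Fraction

def mat_mul(X, Y):
    """Product of two matrices given as lists of rows."""
    return [
        [sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
        for i in range(len(X))
    ]

def identity(n):
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]

def inverse_from_char_poly(A, p):
    """A^-1 = -(1/p_n) [p_0 A^(n-1) + p_1 A^(n-2) + ... + p_(n-1) I]."""
    n = len(A)
    if len(p) != n + 1:
        raise ValueError("expected n + 1 coefficients p_0 .. p_n")
    if p[n] == 0:
        raise ValueError("p_n is zero, so the matrix is singular")
    I = identity(n)
    # Horner-style accumulation: B = p_0 I, then B = B A + p_k I for k = 1 .. n-1.
    B = [[Fraction(p[0]) * I[i][j] for j in range(n)] for i in range(n)]
    for k in range(1, n):
        BA = mat_mul(B, A)
        B = [[BA[i][j] + p[k] * I[i][j] for j in range(n)] for i in range(n)]
    return [[-B[i][j] / Fraction(p[n]) for j in range(n)] for i in range(n)]

# Hypothetical example: λ² + λ - 6 = 0, so p = [1, 1, -6].
A = [[1, 2], [2, -2]]
A_inv = inverse_from_char_poly(A, [1, 1, -6])
print(A_inv)
print(mat_mul(A, A_inv))
```

Using `Fraction` keeps the entries exact, so the product of `A` and `A_inv` can be compared with the identity matrix without a tolerance.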

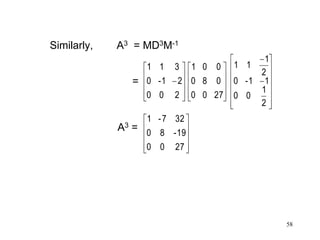

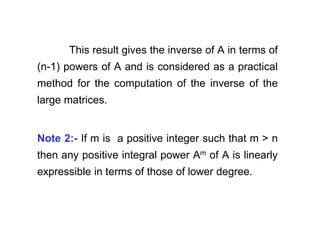

![Diagonalization example: the slide image shows the numeric matrices for D = M⁻¹AM](https://image.slidesharecdn.com/matricesppt-171108100049/85/Matrices-ppt-57-320.jpg)

Now, since $D = M^{-1} A M$,

$$A = M D M^{-1}$$

$$A^2 = (M D M^{-1})(M D M^{-1}) = M D^2 M^{-1} \qquad [\text{since } M^{-1} M = I]$$
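The identity A² = M D² M⁻¹ generalizes to Aᵏ = M Dᵏ M⁻¹, since each inner M⁻¹M collapses to I. The sketch below illustrates this on a hypothetical 2×2 example (not the matrices from the slide): `A = [[1, 2], [2, -2]]` with eigenvalues 2 and -3, and eigenvectors (2, 1) and (1, -2) as the columns of `M`. The function name `power_via_diagonalization` is an assumption made for the example.

```python
from fractions import Fraction

def mat_mul(X, Y):
    """Product of two matrices given as lists of rows."""
    return [
        [sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
        for i in range(len(X))
    ]

def power_via_diagonalization(M, diag, M_inv, k):
    """Compute A^k = M D^k M^-1, where D has the entries of diag on its diagonal."""
    n = len(diag)
    # Powers of a diagonal matrix are taken entry by entry.
    Dk = [[diag[i] ** k if i == j else 0 for j in range(n)] for i in range(n)]
    return mat_mul(mat_mul(M, Dk), M_inv)

# Hypothetical example data, chosen for illustration only.
A = [[1, 2], [2, -2]]
M = [[2, 1], [1, -2]]   # columns are eigenvectors of A
diag = [2, -3]          # matching eigenvalues
M_inv = [[Fraction(2, 5), Fraction(1, 5)], [Fraction(1, 5), Fraction(-2, 5)]]

A2 = power_via_diagonalization(M, diag, M_inv, 2)
A3 = power_via_diagonalization(M, diag, M_inv, 3)
print(A2)
print(A3)
```

The payoff is that only the diagonal entries are raised to the power k, so the cost of a high power is two matrix products regardless of k.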