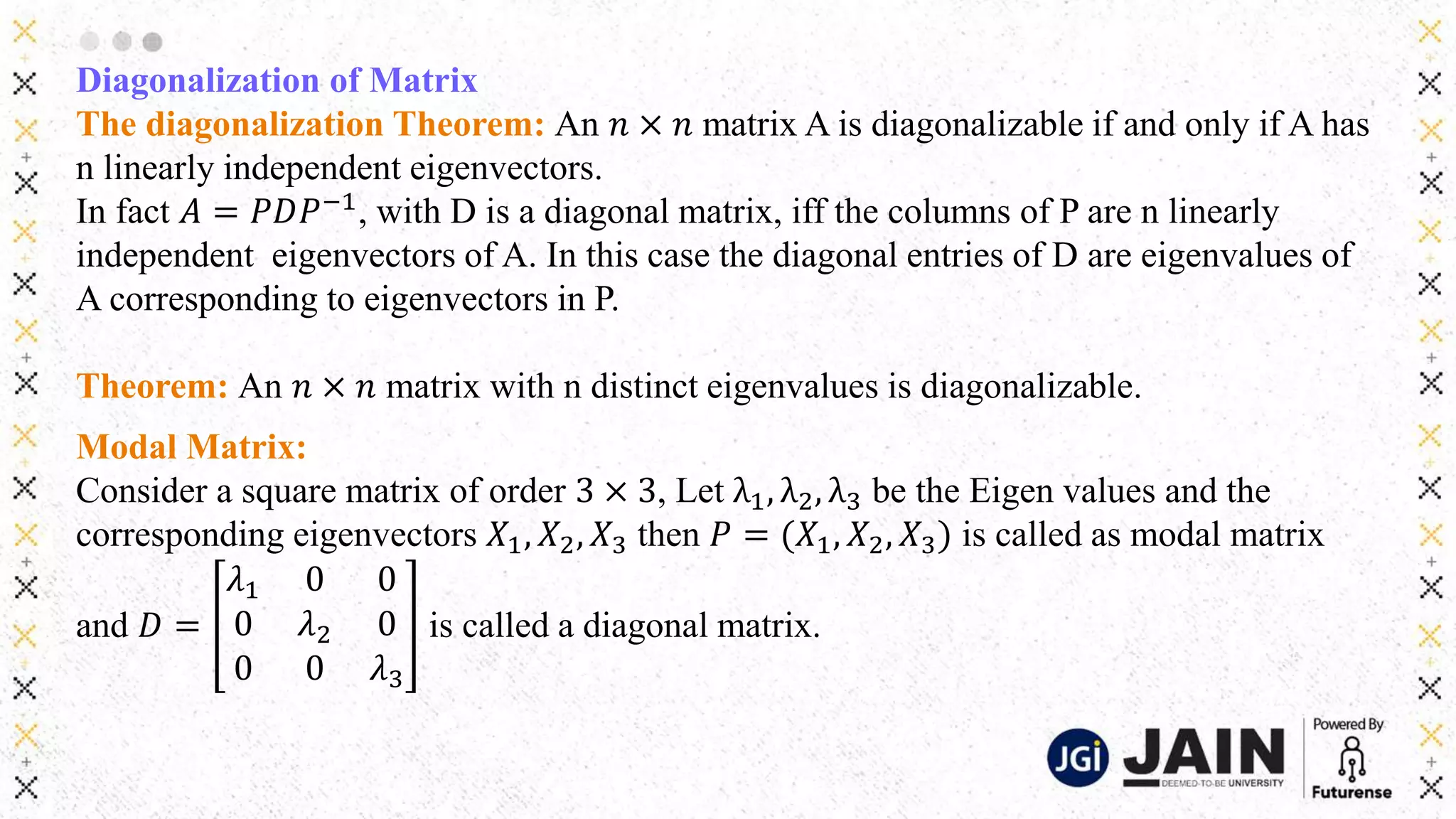

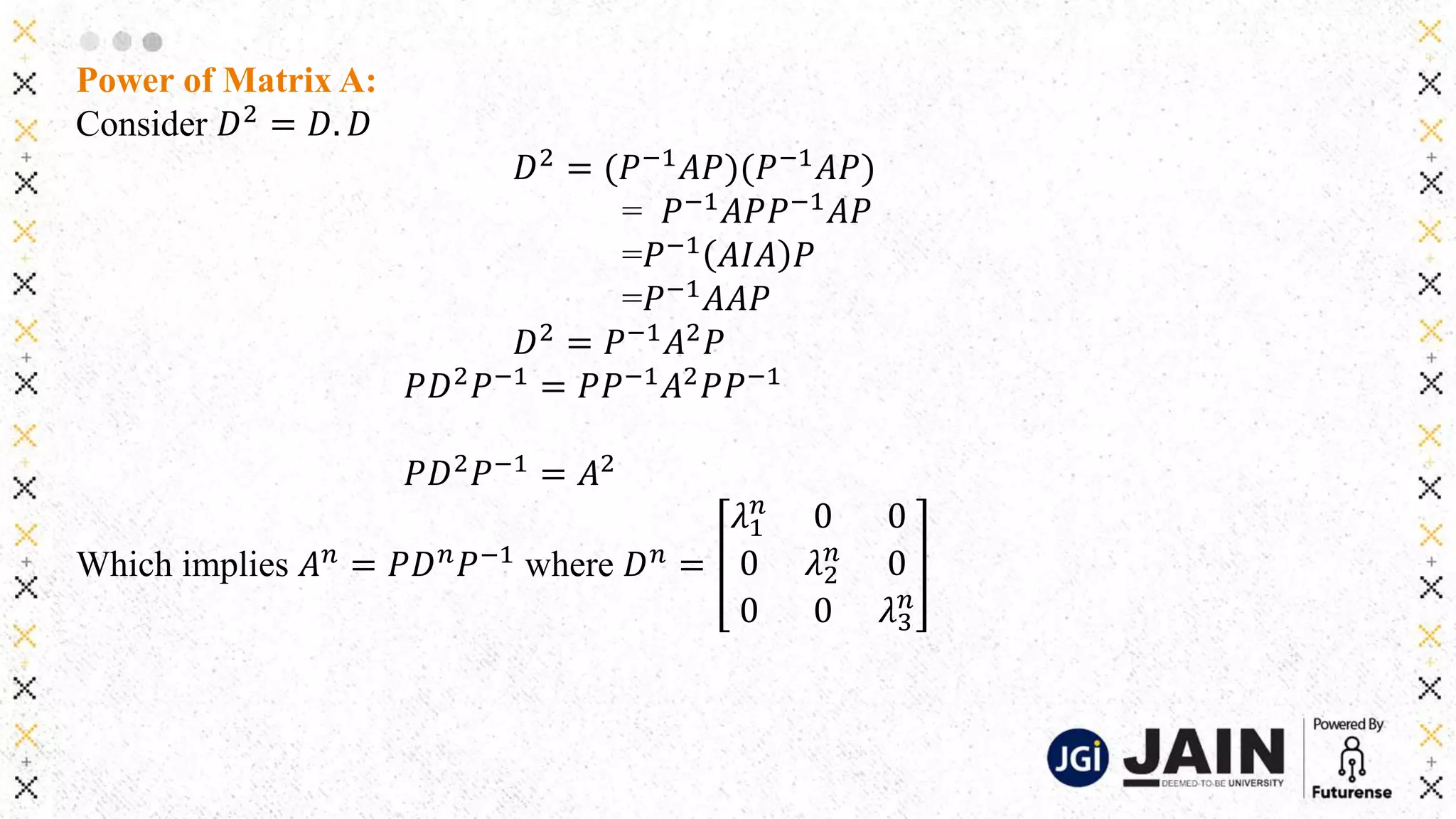

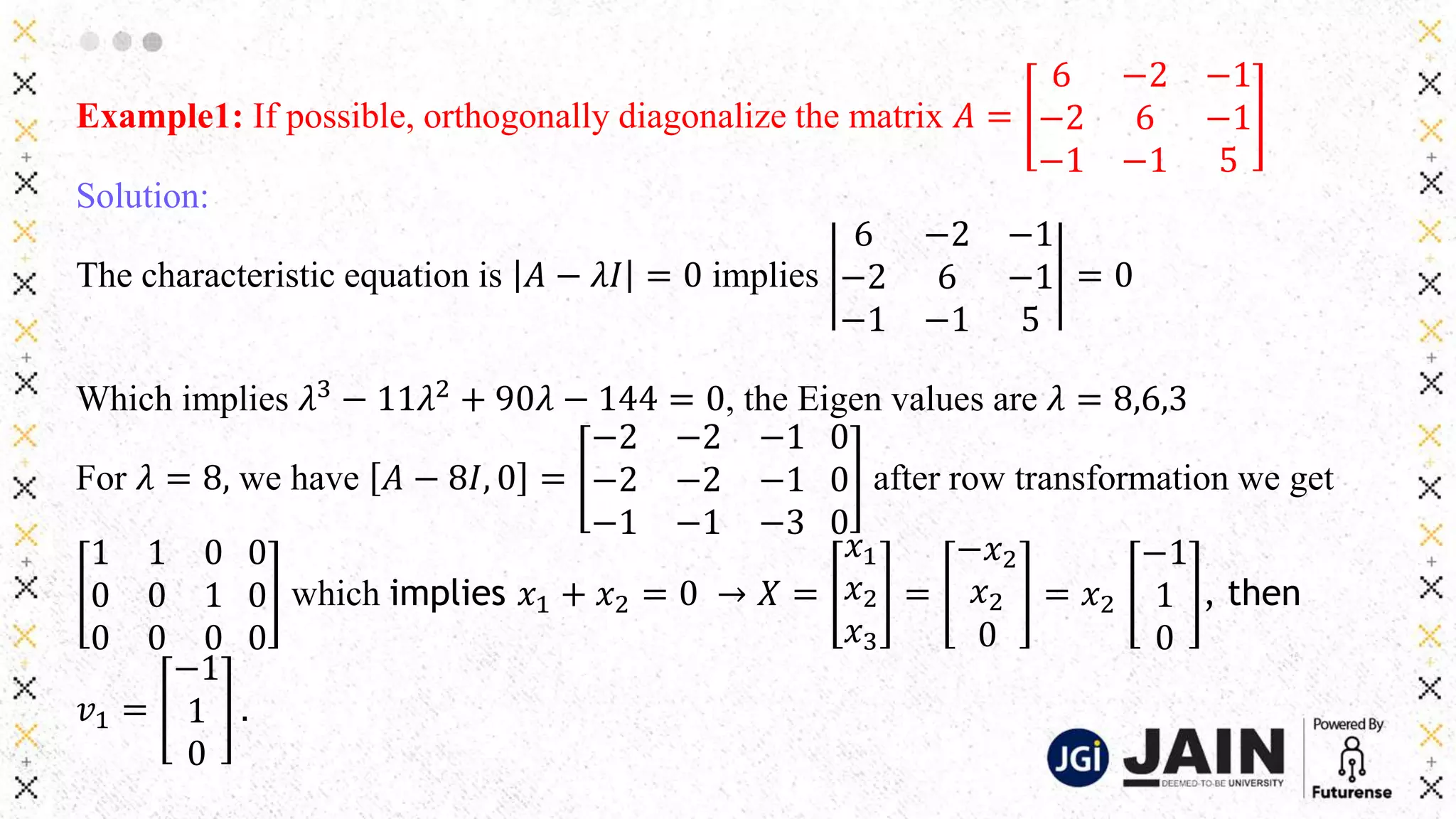

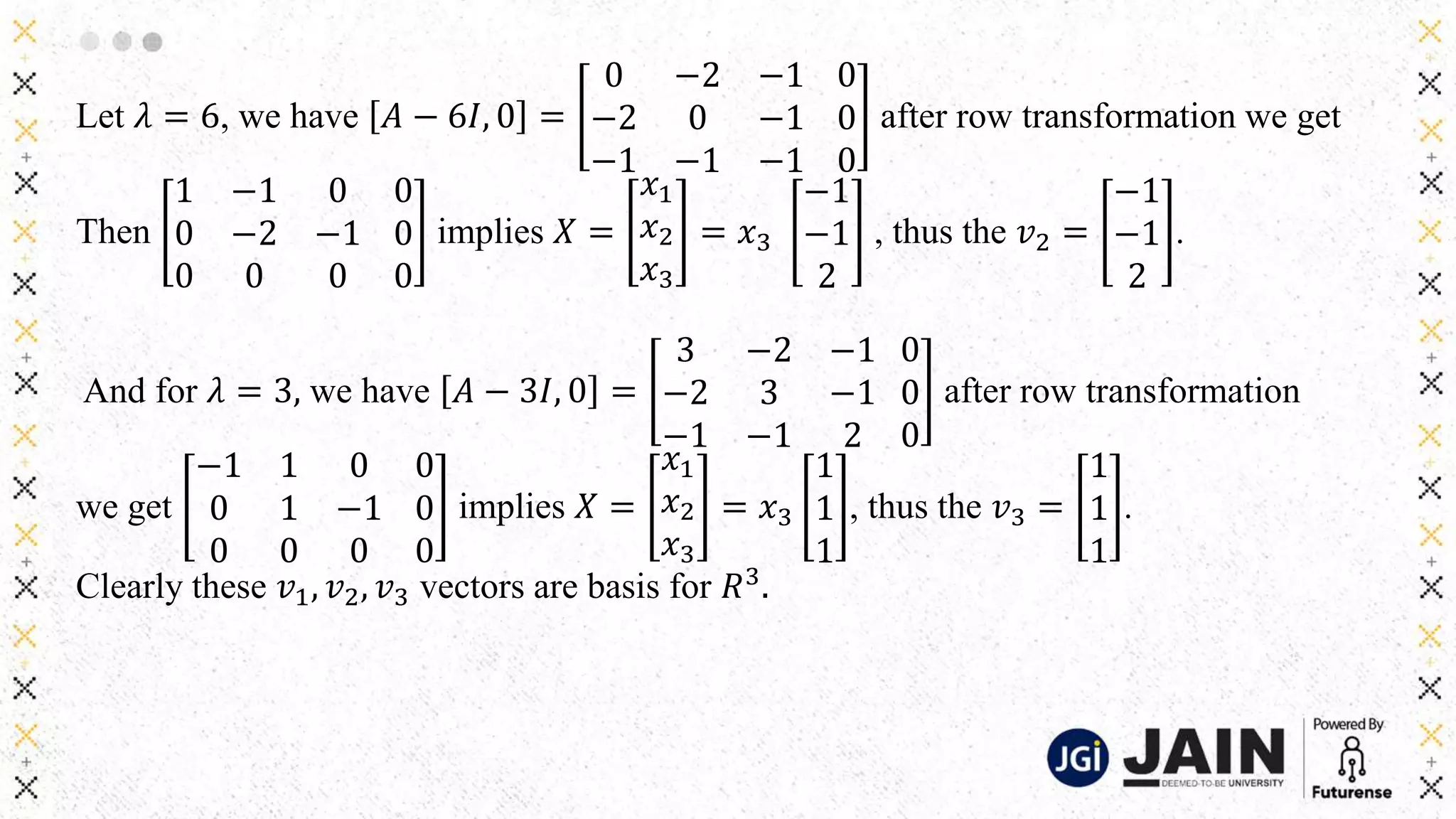

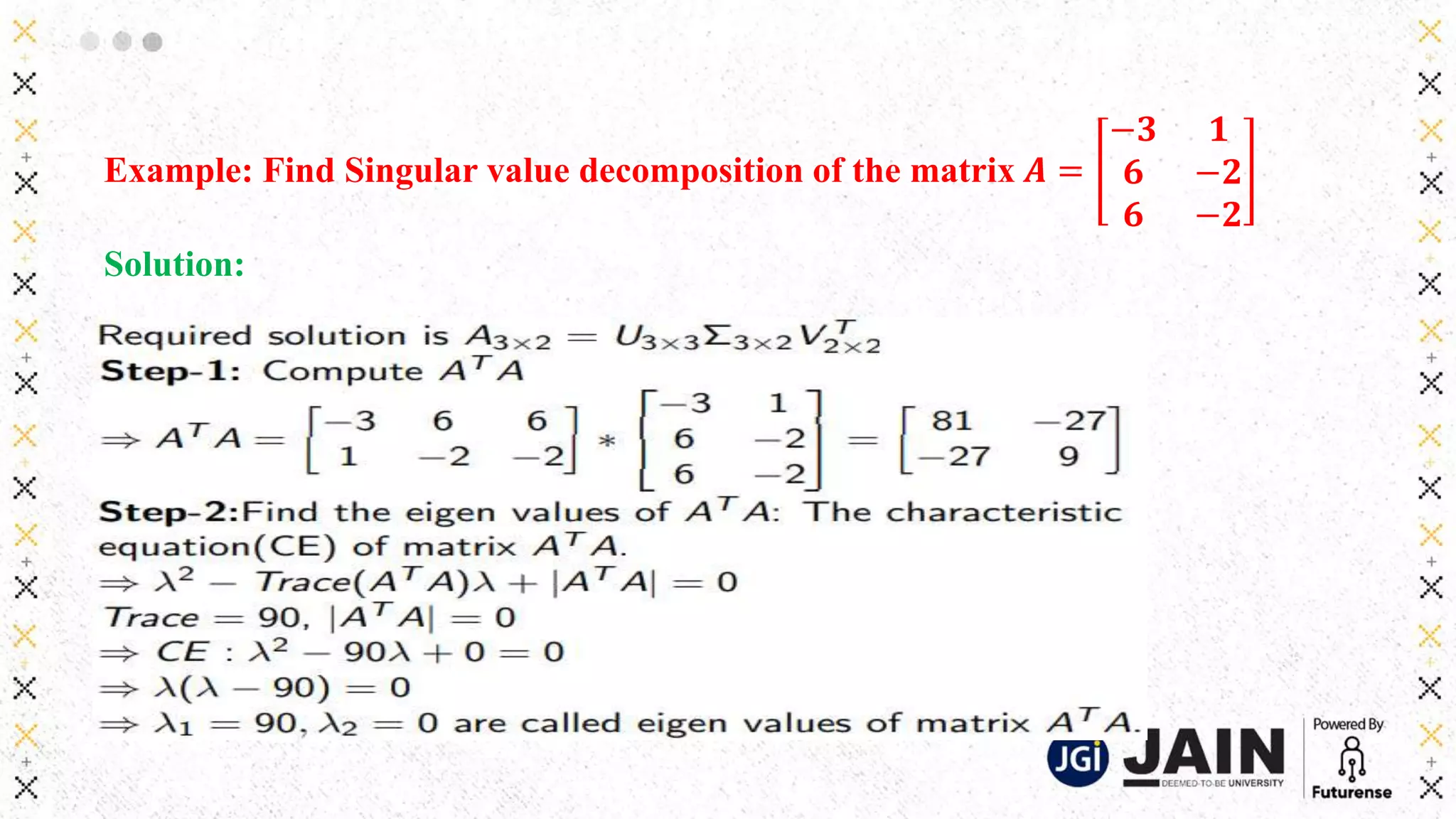

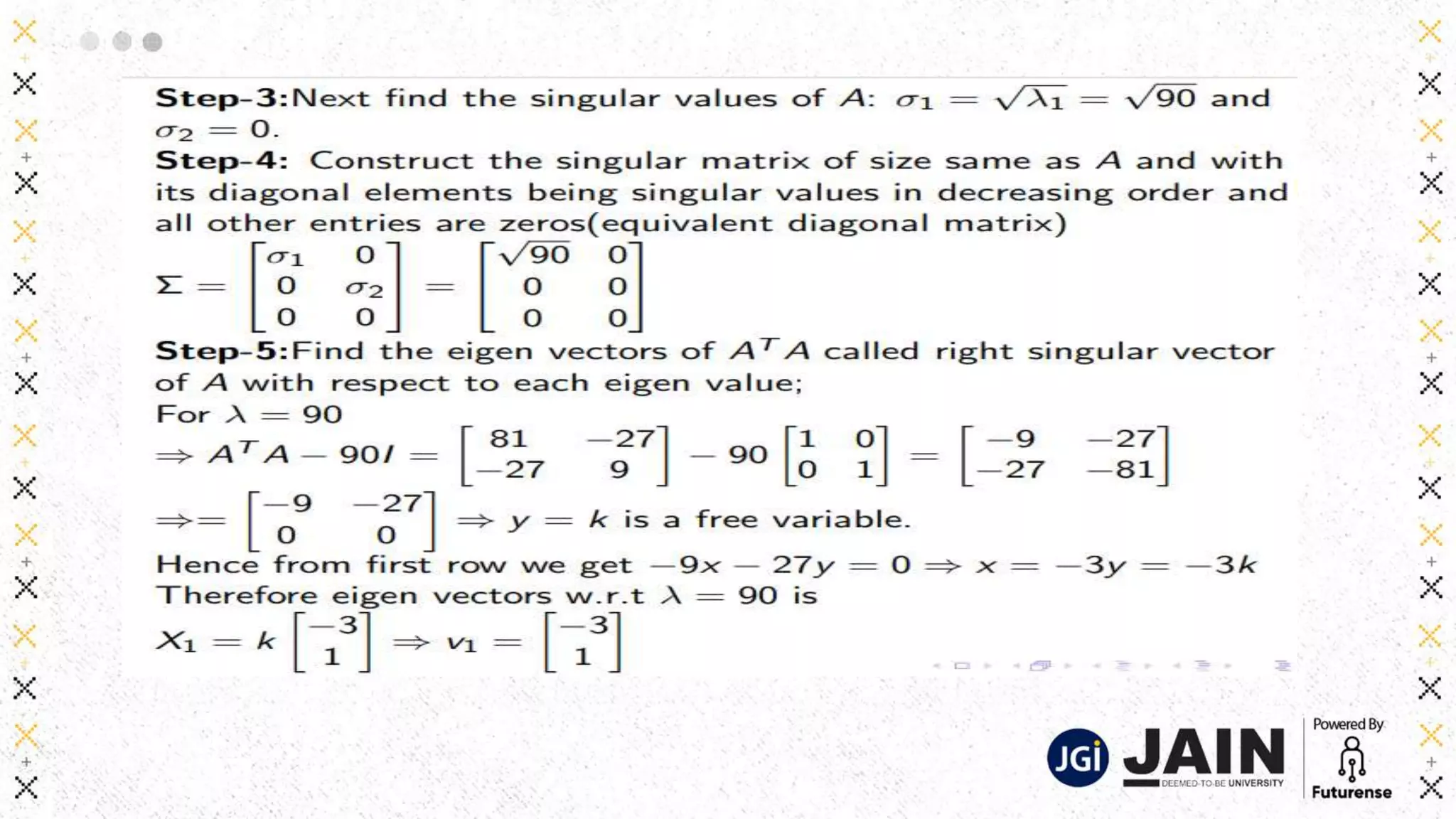

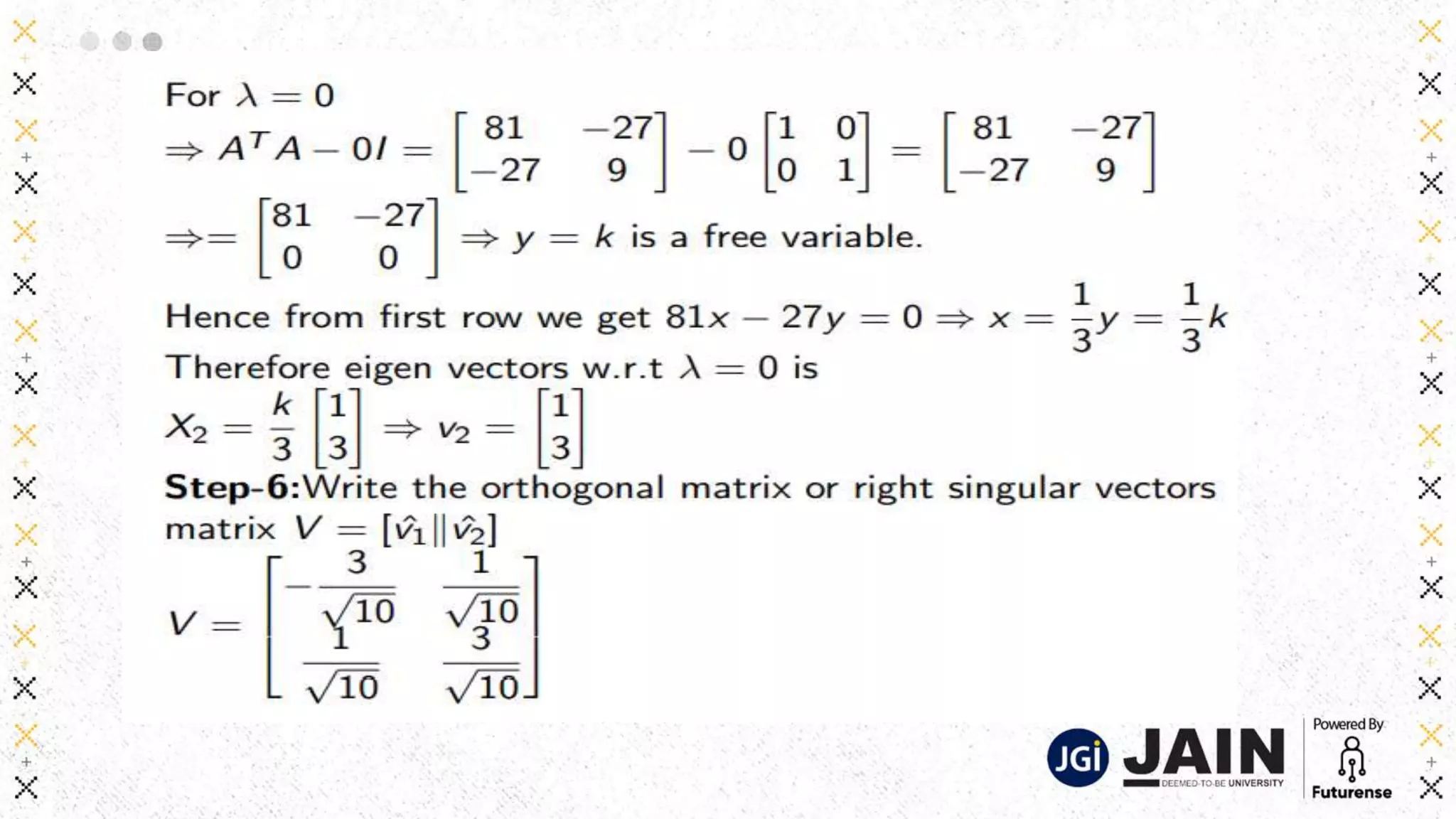

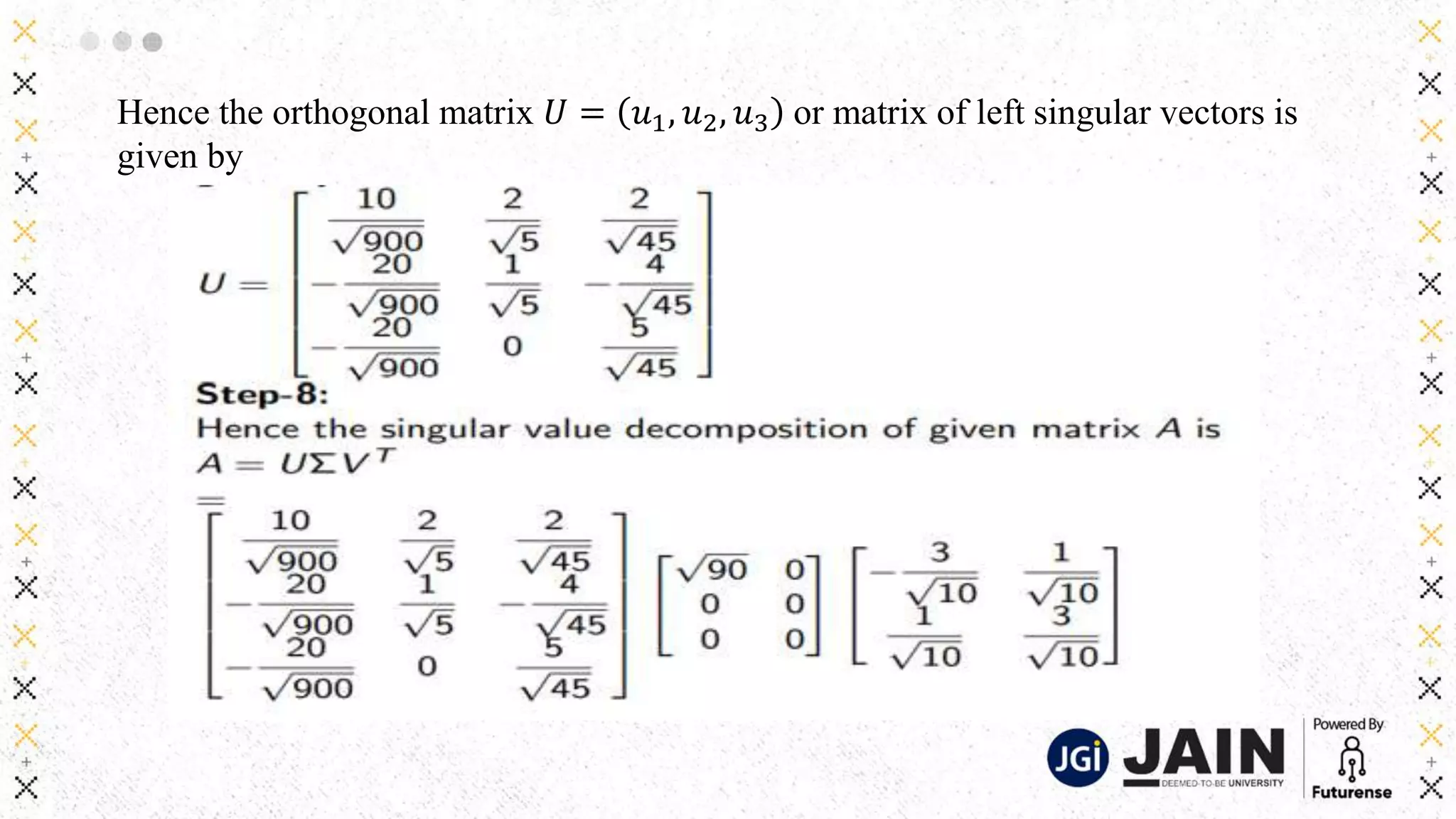

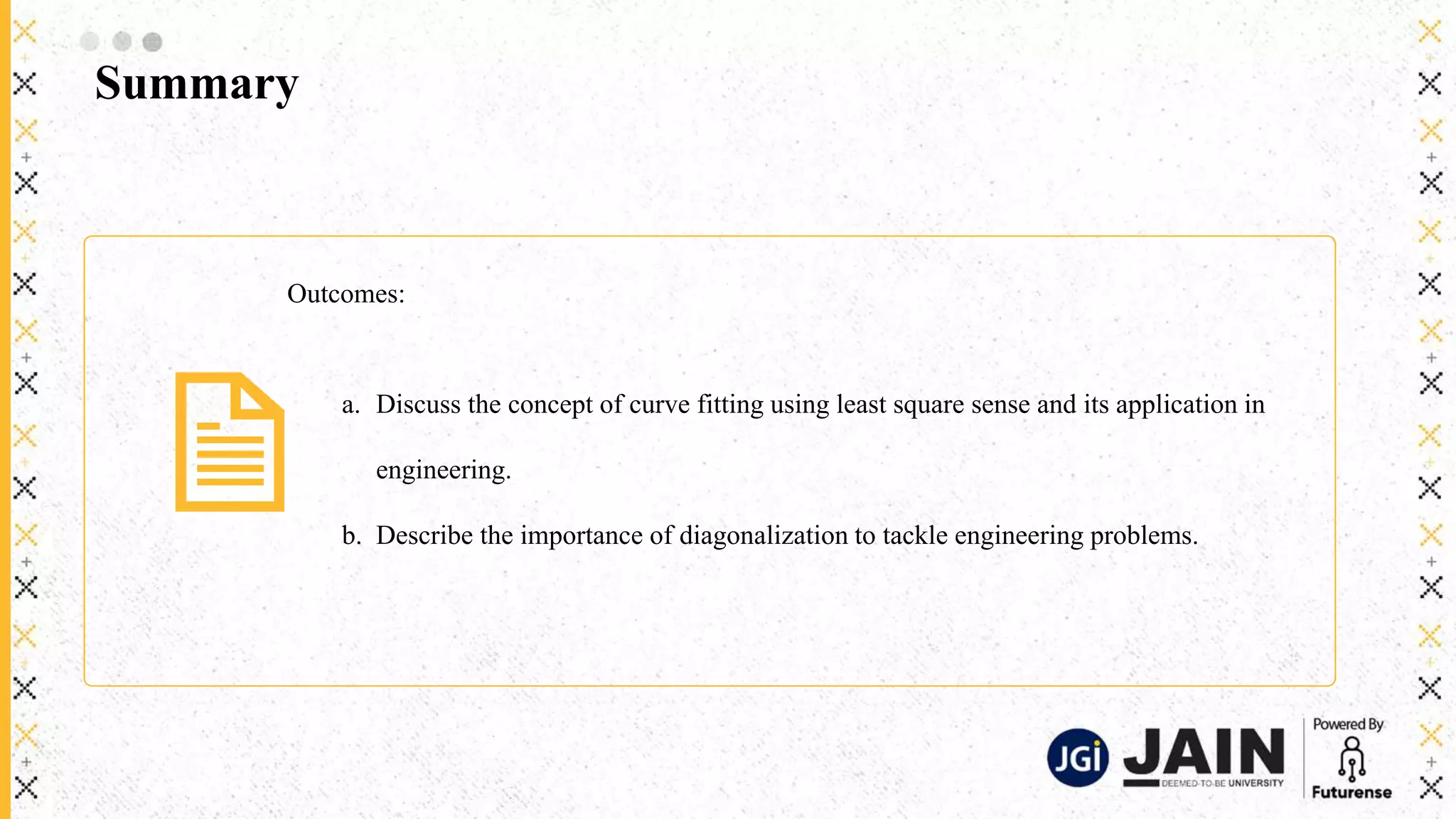

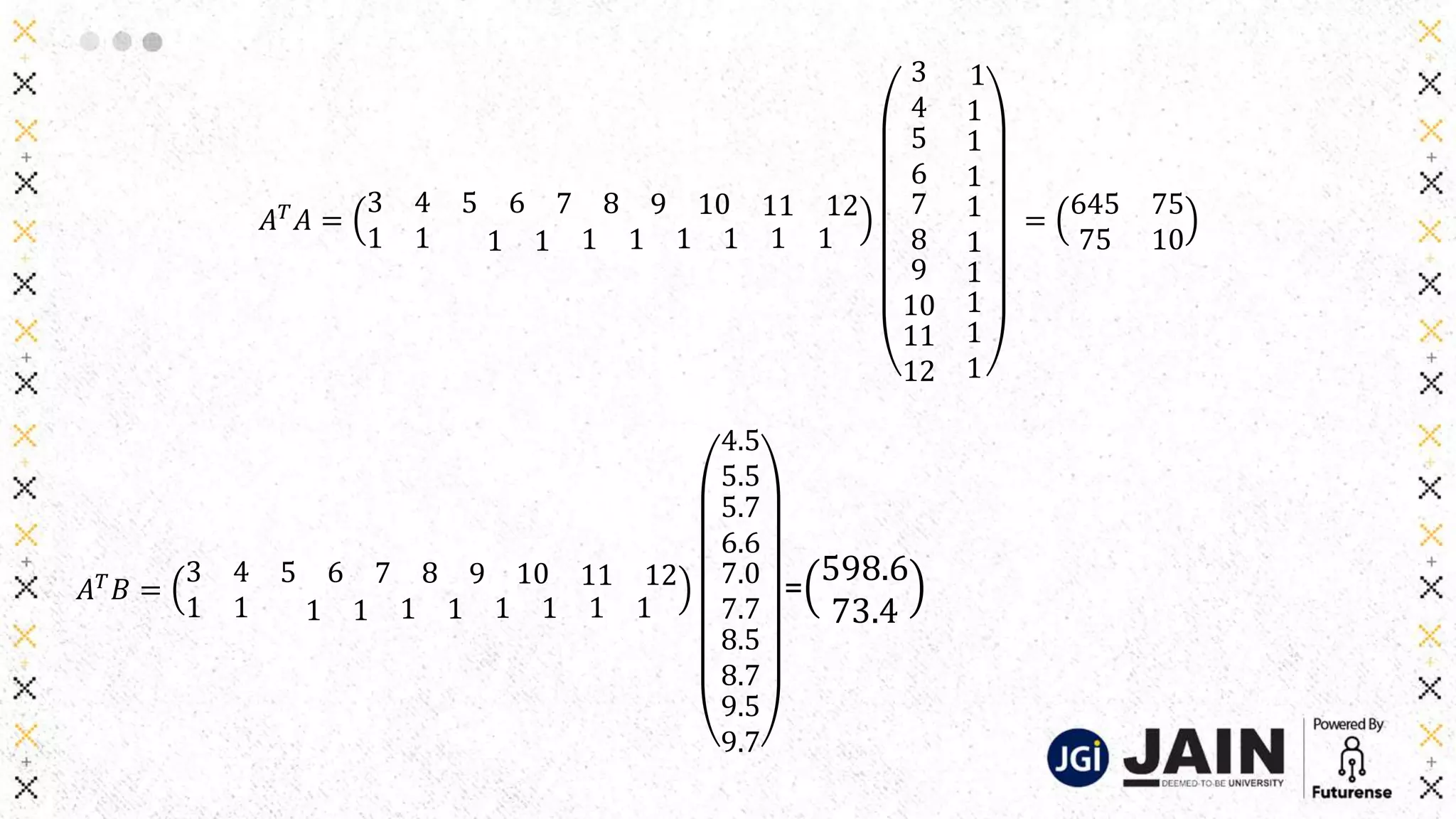

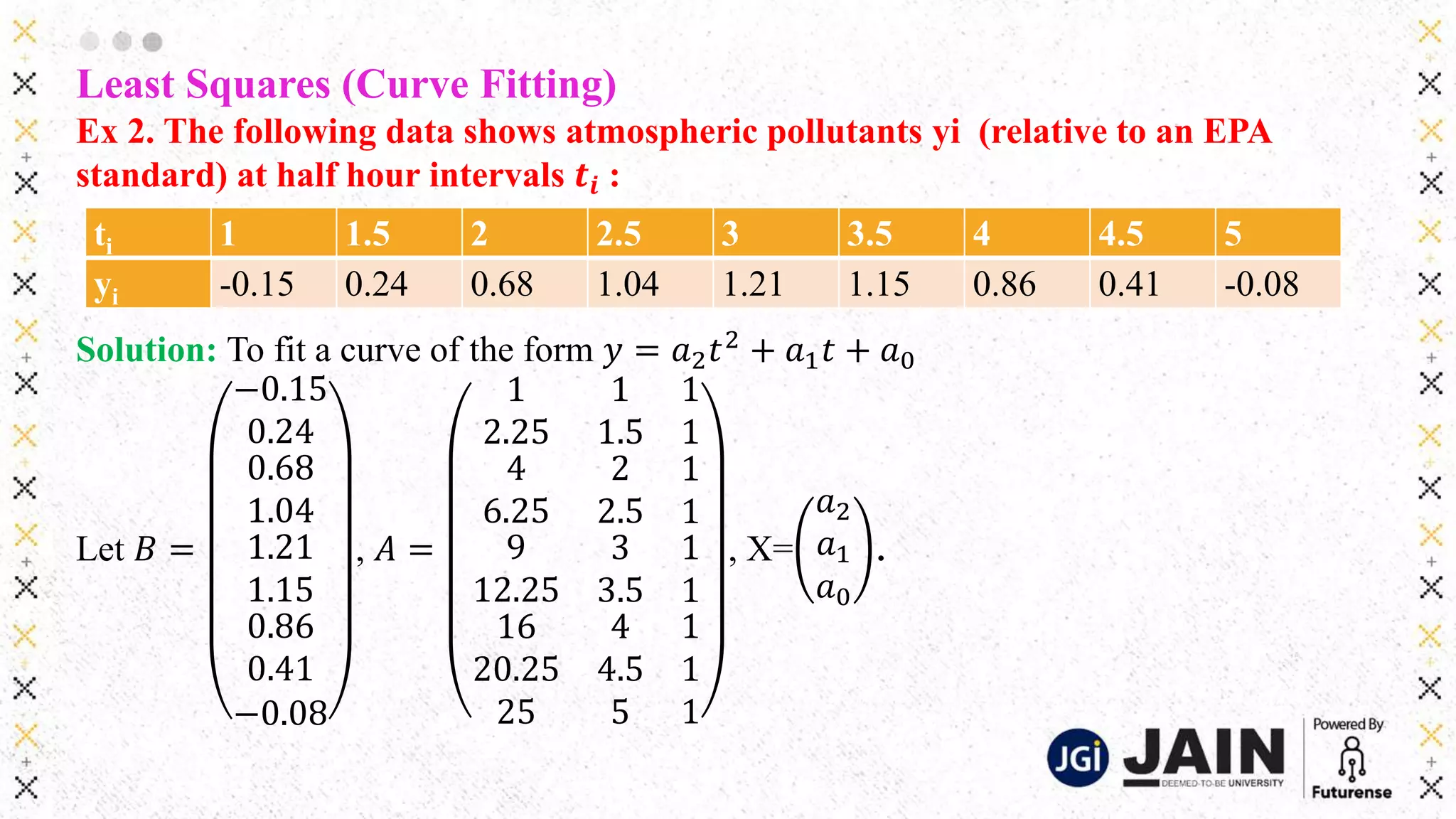

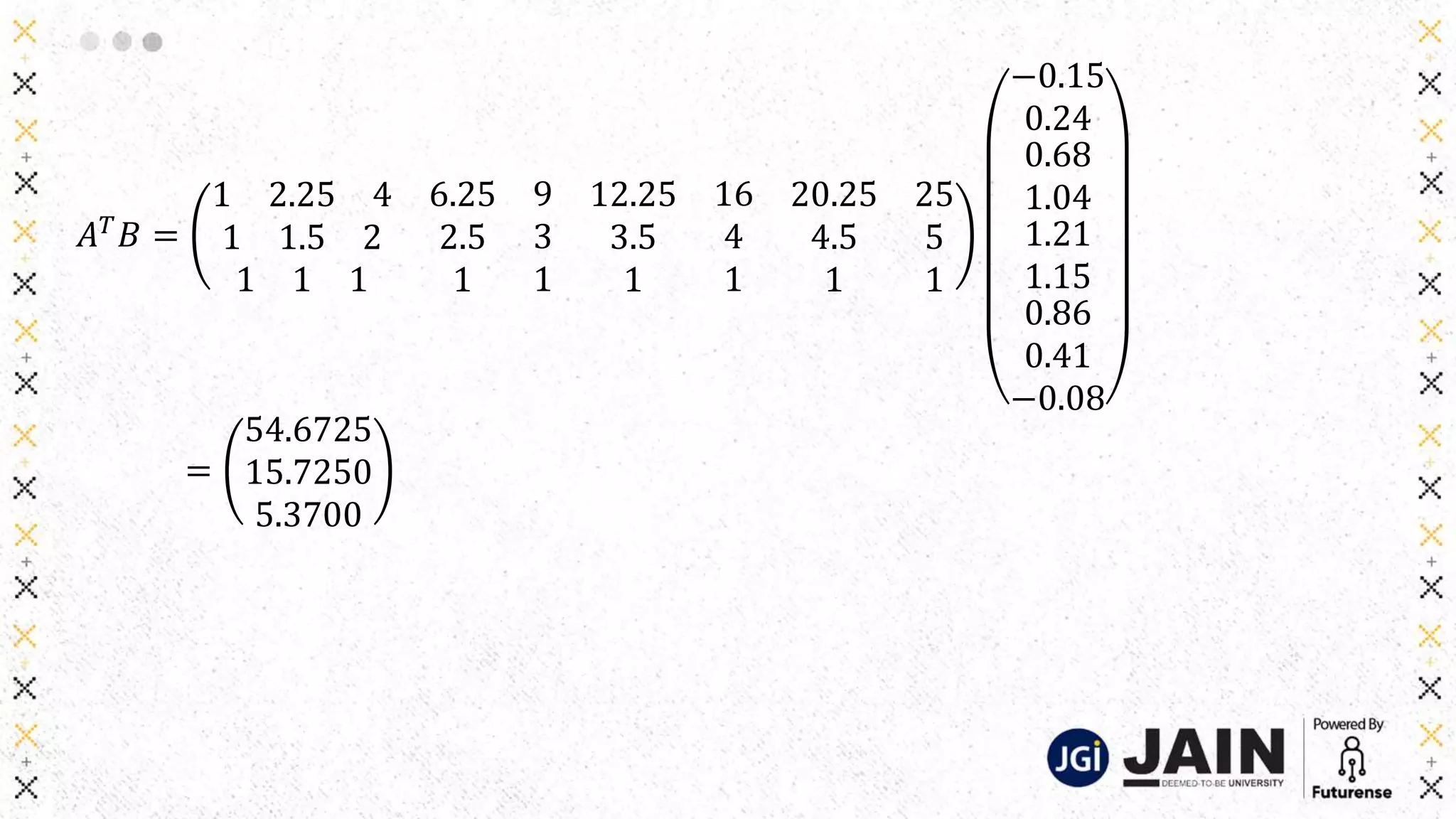

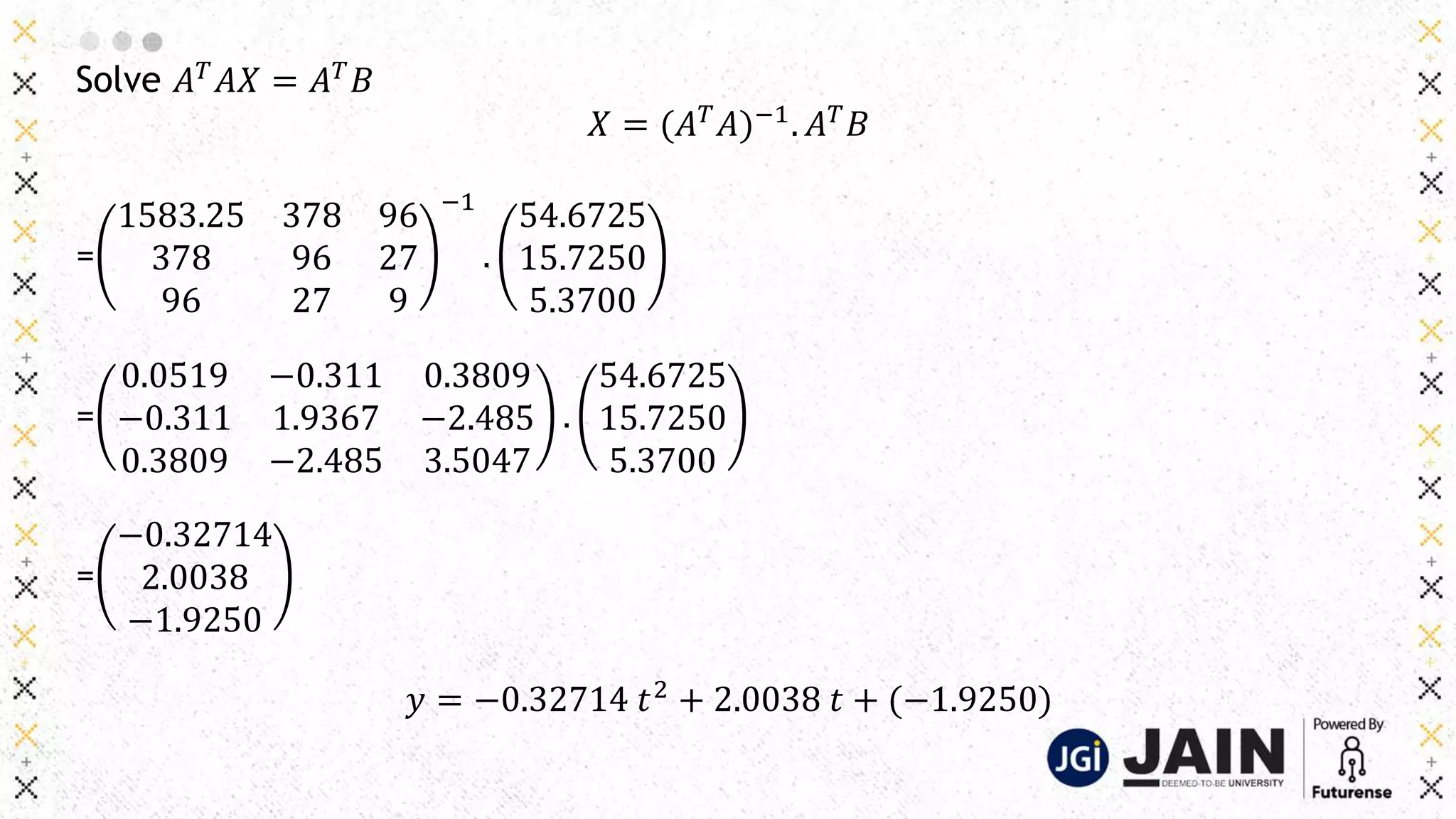

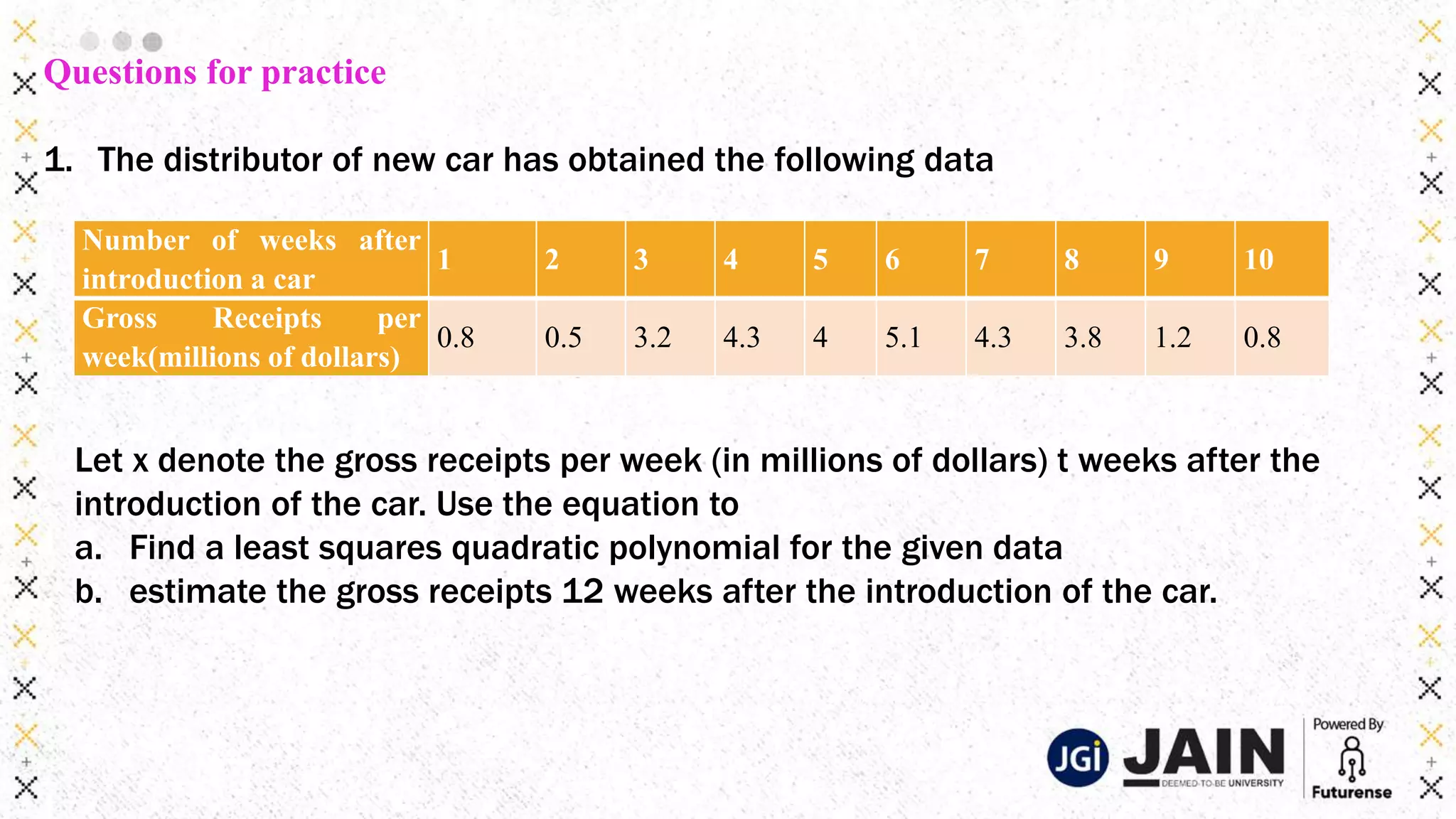

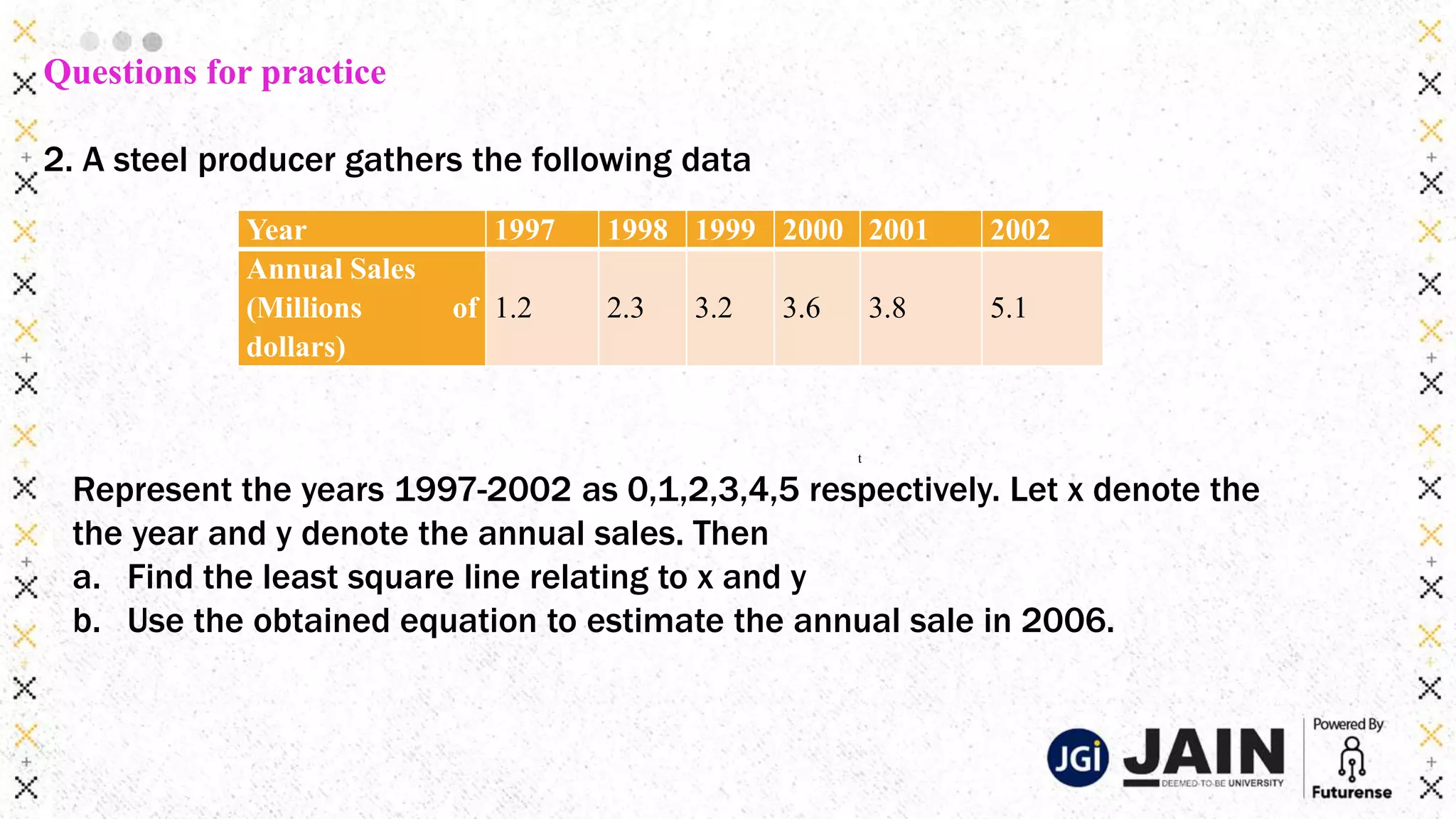

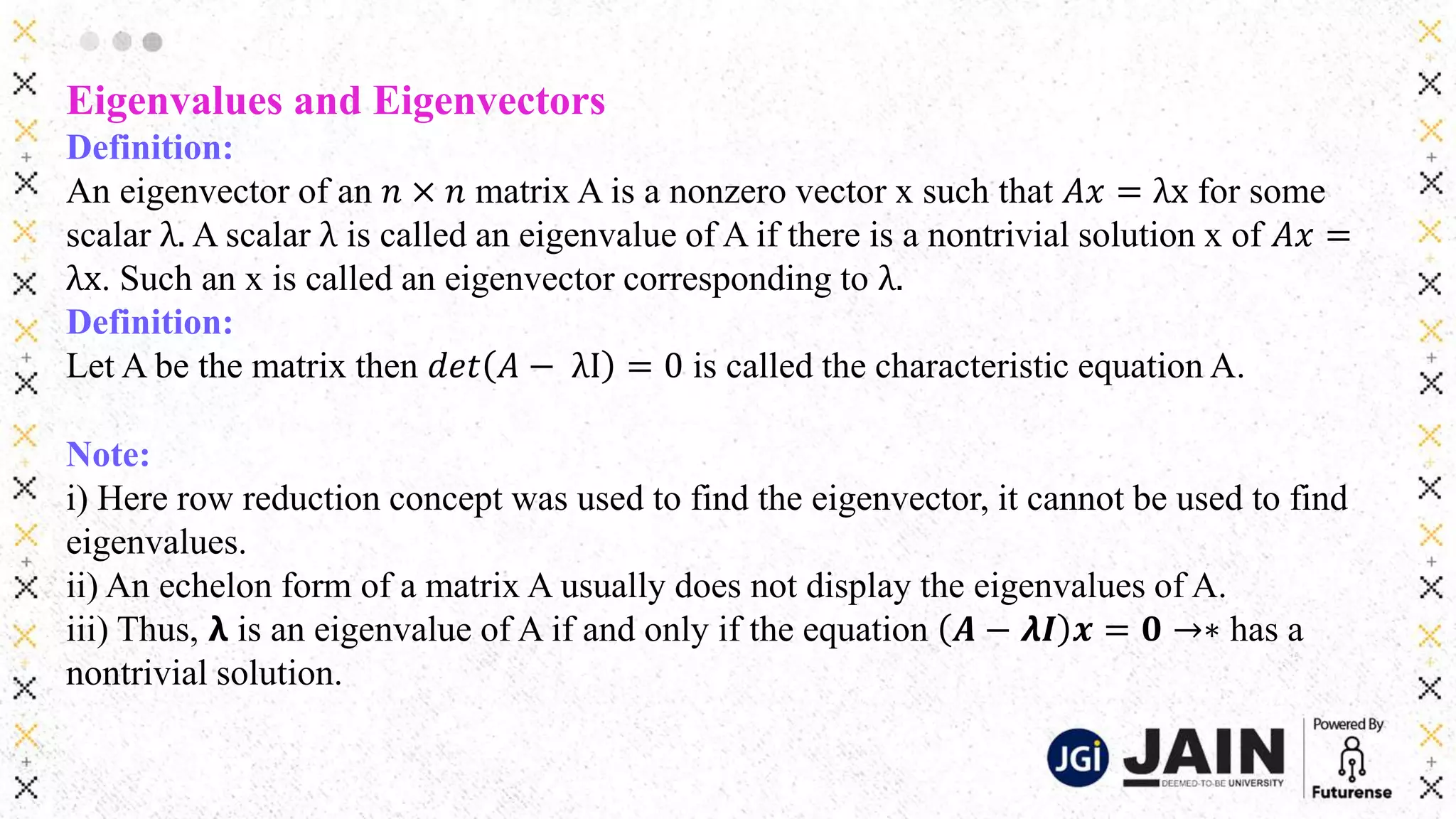

This document covers least squares and two matrix decompositions: eigendecomposition and singular value decomposition (SVD). It opens by motivating linear algebra and matrix decompositions through their applications, then uses least squares to fit curves to data and find regression lines. It defines eigenvalues and eigenvectors, works through examples of eigendecomposition, and shows how diagonalization can be used to raise a matrix to a power. It closes with singular value decomposition and its applications.

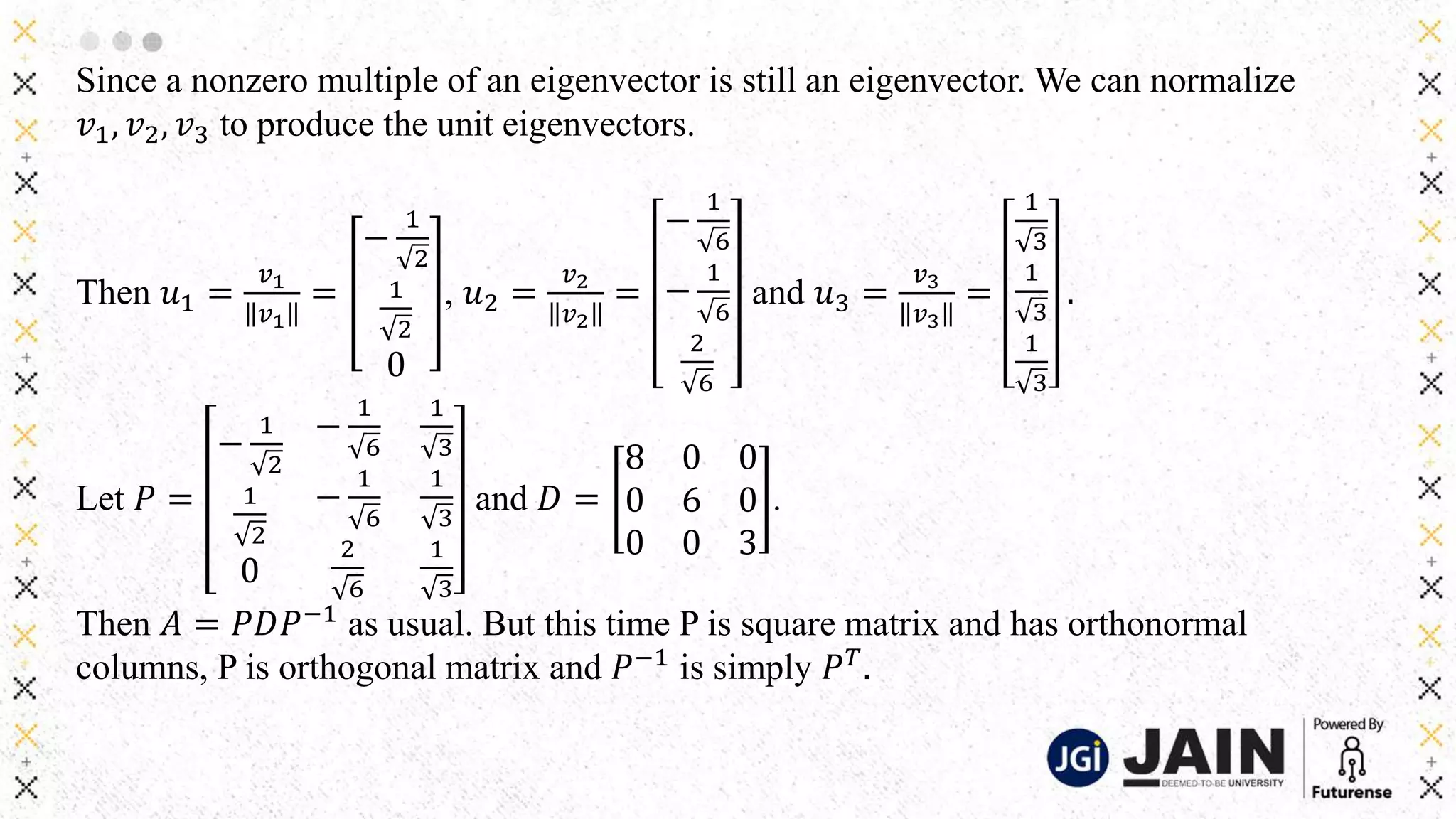

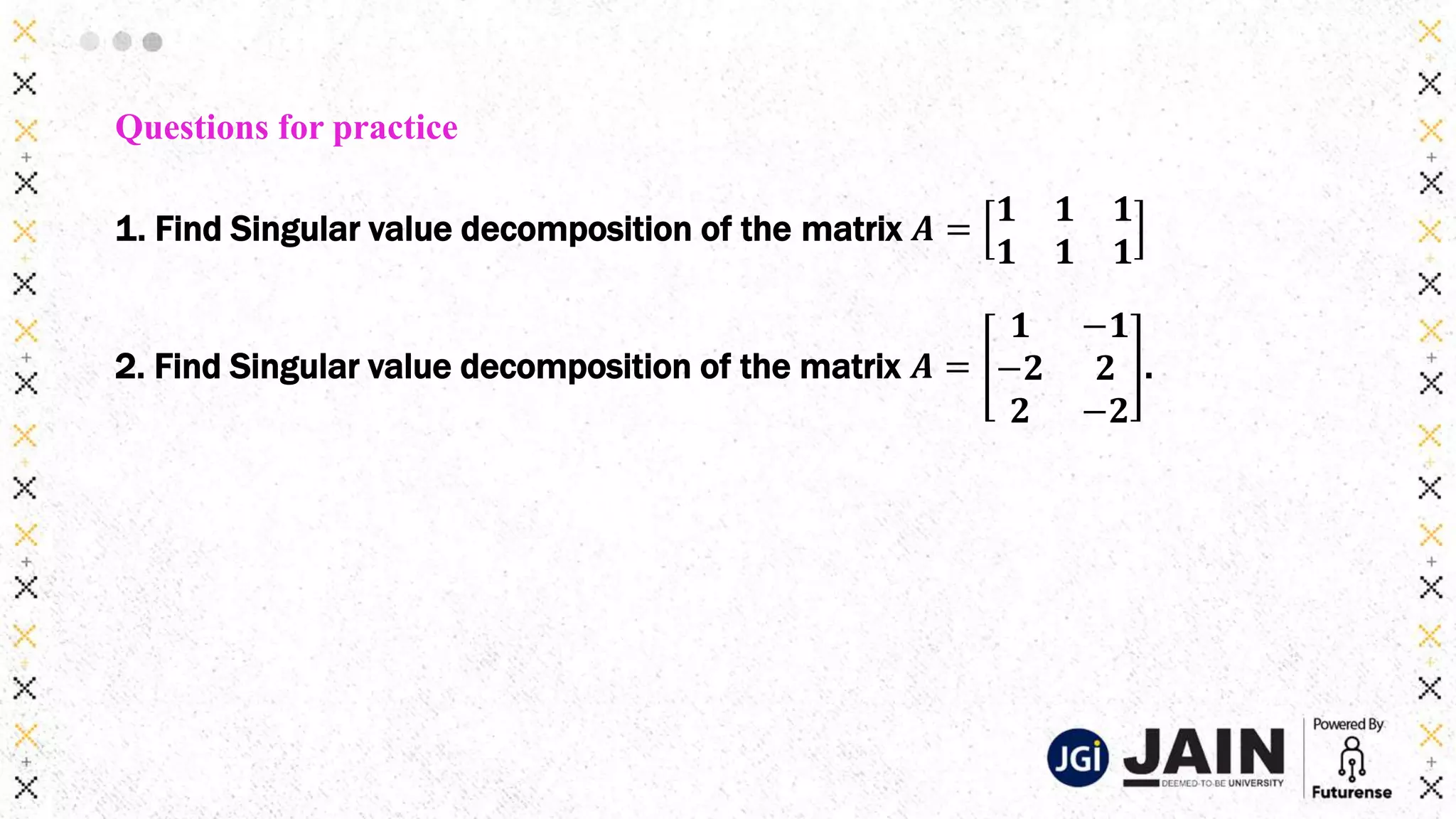

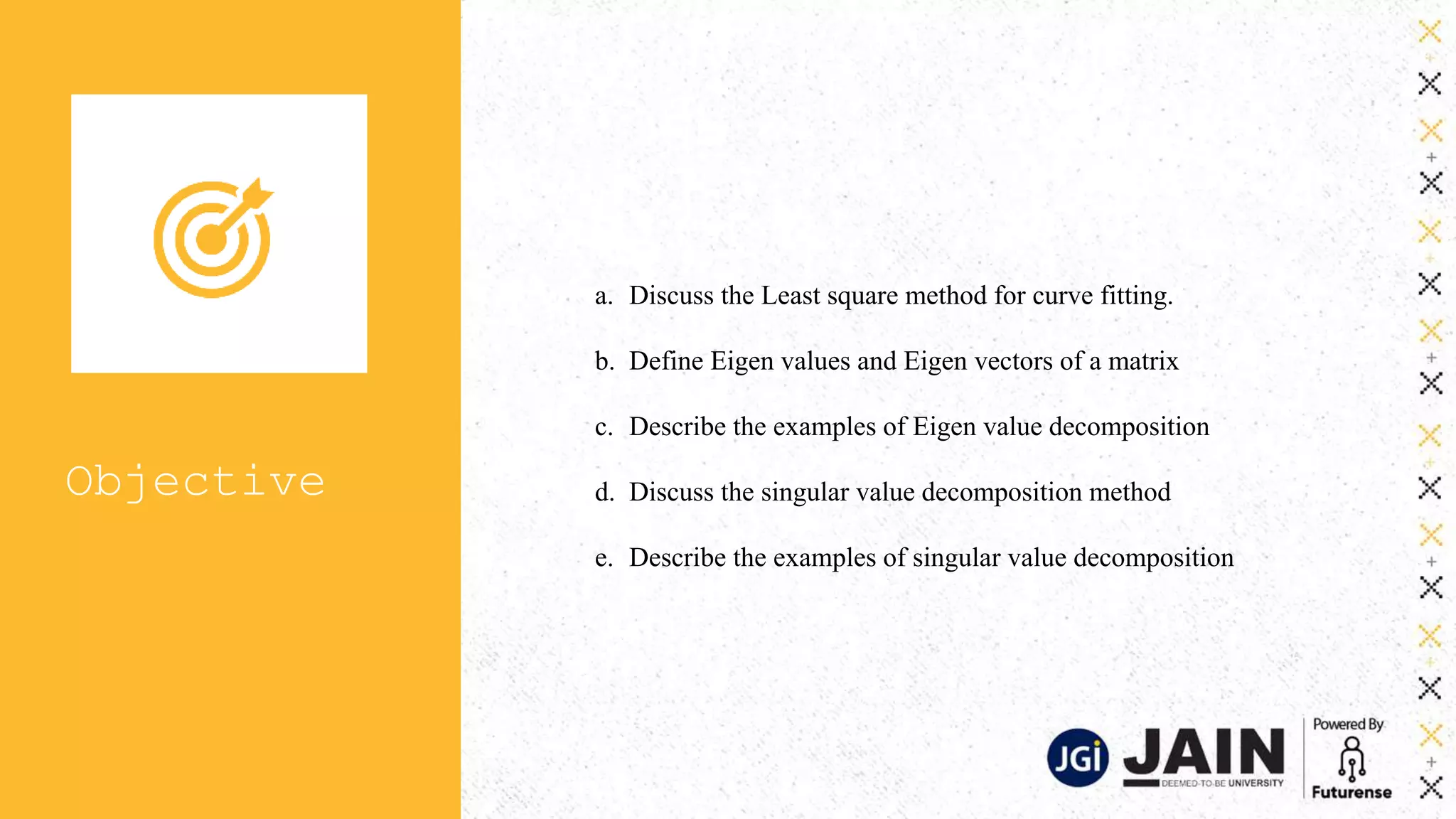

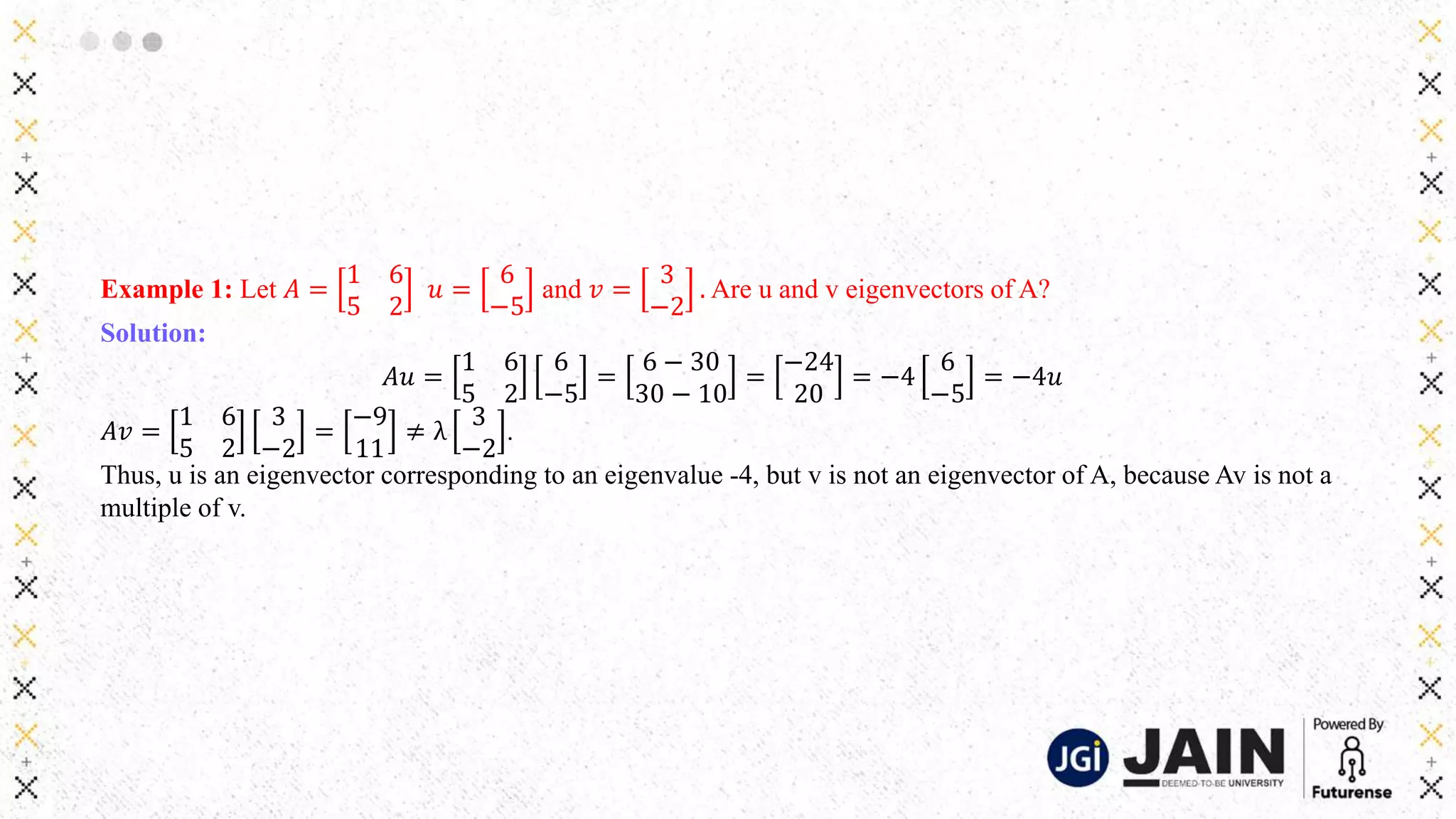

![Example 2: Show that 7 is an eigenvalue of the matrix 𝑨 =

𝟏 𝟔

𝟓 𝟐

and find the

corresponding eigenvectors.

Solution:

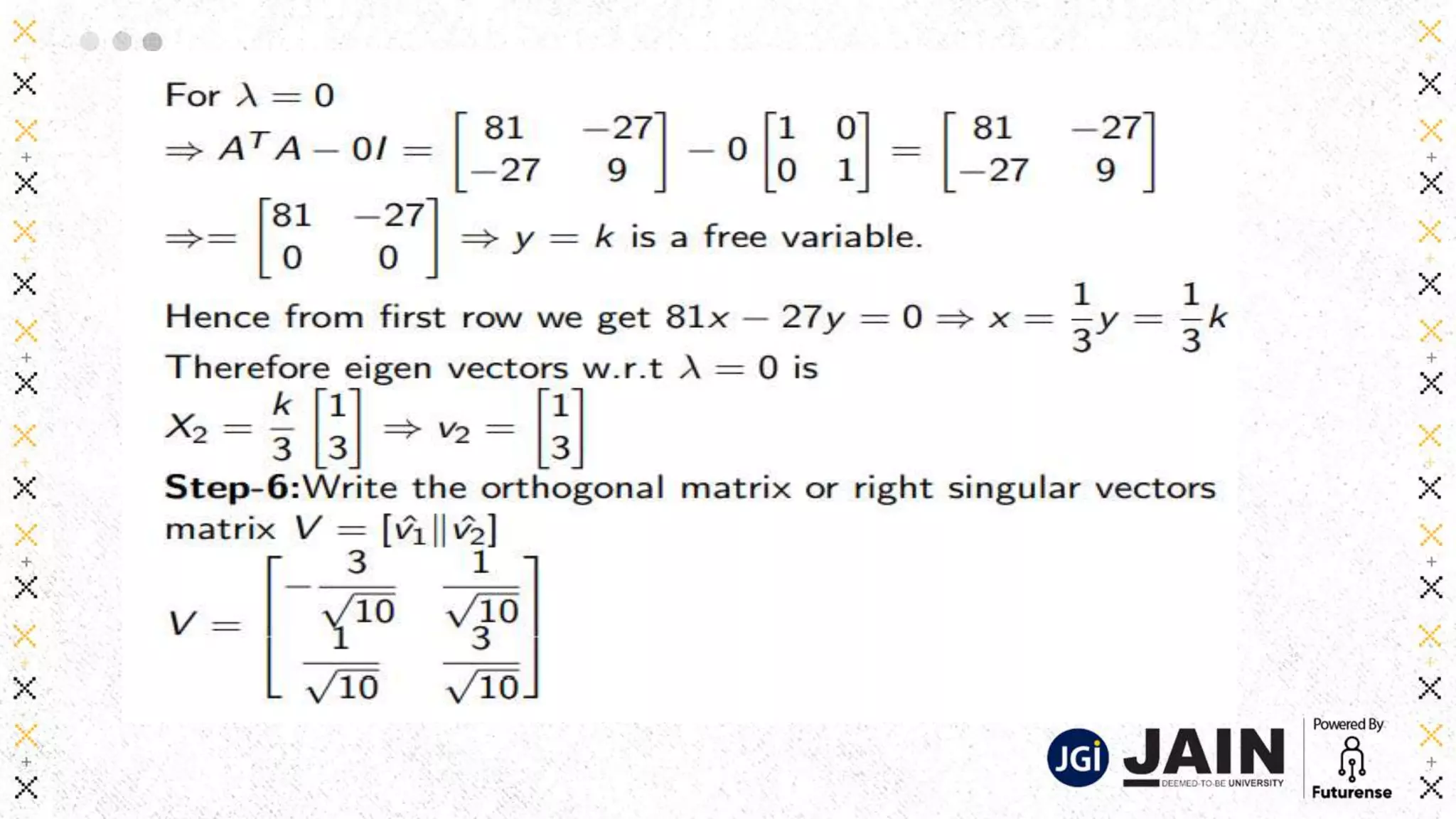

The scalar 7 is an eigenvalue of A iff the equation 𝐴𝑥 = 7𝑥 has a nontrivial solution, i.e.

𝐴𝑥 − 7𝑥 = 0 𝑜𝑟 𝐴 − 7𝐼 𝑥 = 0 → (1)

To solve this homogeneous equation, form the matrix 𝐴 − 7𝐼 =

−6 6

5 −5

.

The columns of 𝐴 − 7𝐼 are obviously linear dependent (multiple of each other). So

equation(1) has nontrivial solution. Thus 7 is an Eigen value of A.

To find the corresponding eigenvectors use row operations

Which implies[A:B]=

−6 6:

5 −5:

0

0

𝑅1 → −

1

6

𝑅1 𝑎𝑛𝑑 𝑅2 →

1

5

𝑅2

Then

1 −1:

0 0:

0

0

which gives 𝑥1 = 𝑥2, the general solution 𝑋 =

𝑥1

𝑥2

=

𝑥2

𝑥2

=

1

1

.

Each vector of this form with 𝑥2 ≠ 0 is an eigenvector corresponding 𝜆 = 7.](https://image.slidesharecdn.com/module05-matrixdecomposition-230413050335-366f9568/75/MODULE_05-Matrix-Decomposition-pptx-20-2048.jpg)
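The result of Example 2 can be checked numerically. The following is a minimal sketch in plain Python, not part of the original slides. The helper names `shifted`, `det2`, and `matvec` are hypothetical and introduced only for illustration. It tests singularity of A − 7I with a determinant rather than the column-dependence argument used in the solution; for a 2×2 matrix the two are equivalent.

```python
# Matrix from Example 2 and the candidate eigenvalue.
A = [[1, 6], [5, 2]]
lam = 7


def shifted(matrix, lam):
    """Return matrix - lam * I for a square matrix given as nested lists."""
    n = len(matrix)
    return [[matrix[i][j] - (lam if i == j else 0) for j in range(n)]
            for i in range(n)]


def det2(m):
    """Determinant of a 2x2 matrix."""
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]


def matvec(matrix, v):
    """Matrix-vector product for nested-list matrices."""
    return [sum(a * x for a, x in zip(row, v)) for row in matrix]


# A - 7I is singular exactly when 7 is an eigenvalue of A.
B = shifted(A, lam)
is_eigenvalue = det2(B) == 0

# Row reduction gave x1 = x2, so (1, 1) is a representative eigenvector.
v = [1, 1]
Av = matvec(A, v)

print("A - 7I =", B)
print("7 is an eigenvalue:", is_eigenvalue)
print("A v =", Av, " 7 v =", [lam * x for x in v])
```

Any nonzero multiple of `v`, such as `[3, 3]`, should pass the same check, which matches the general solution with $x_2 \neq 0$.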