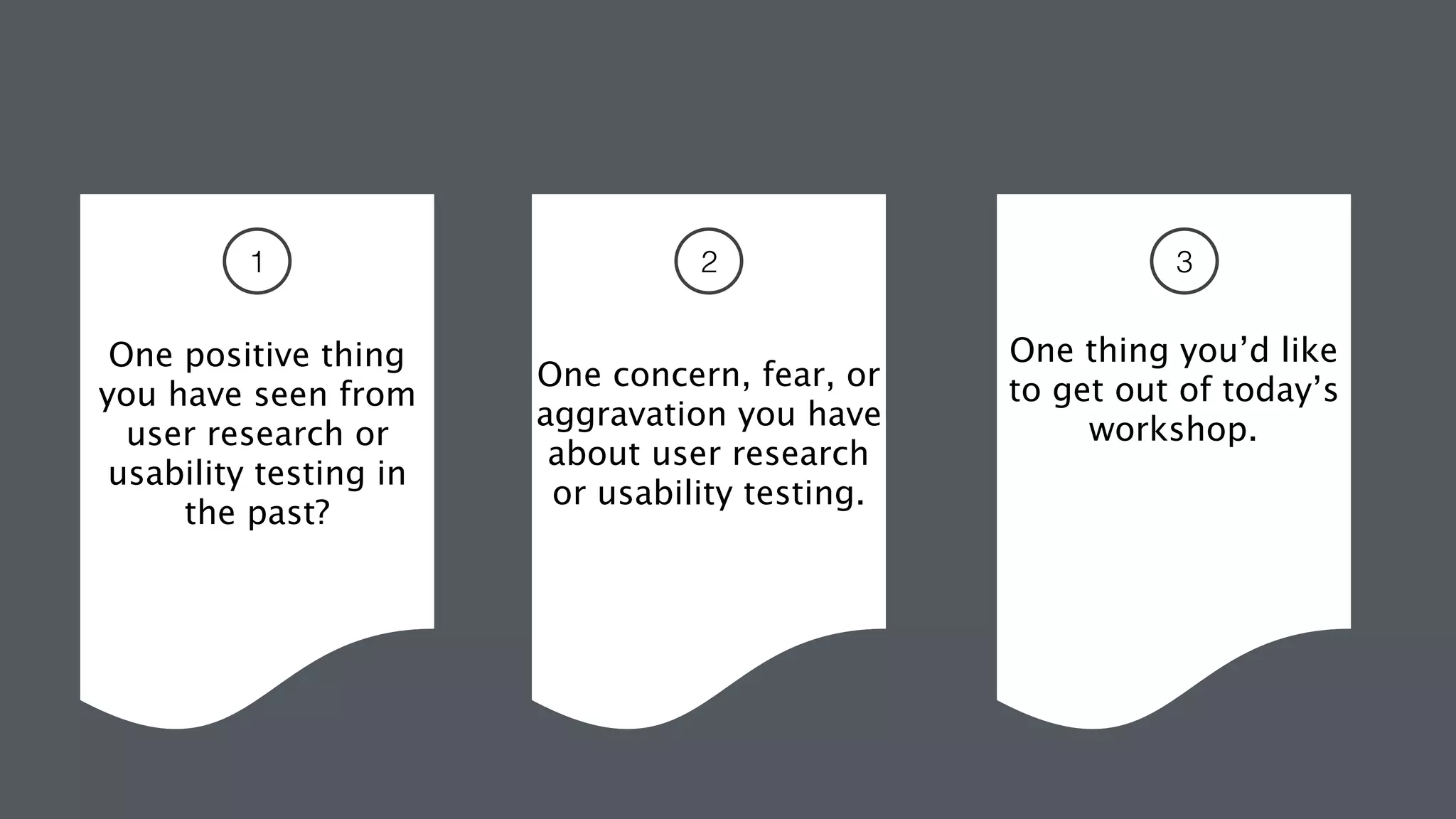

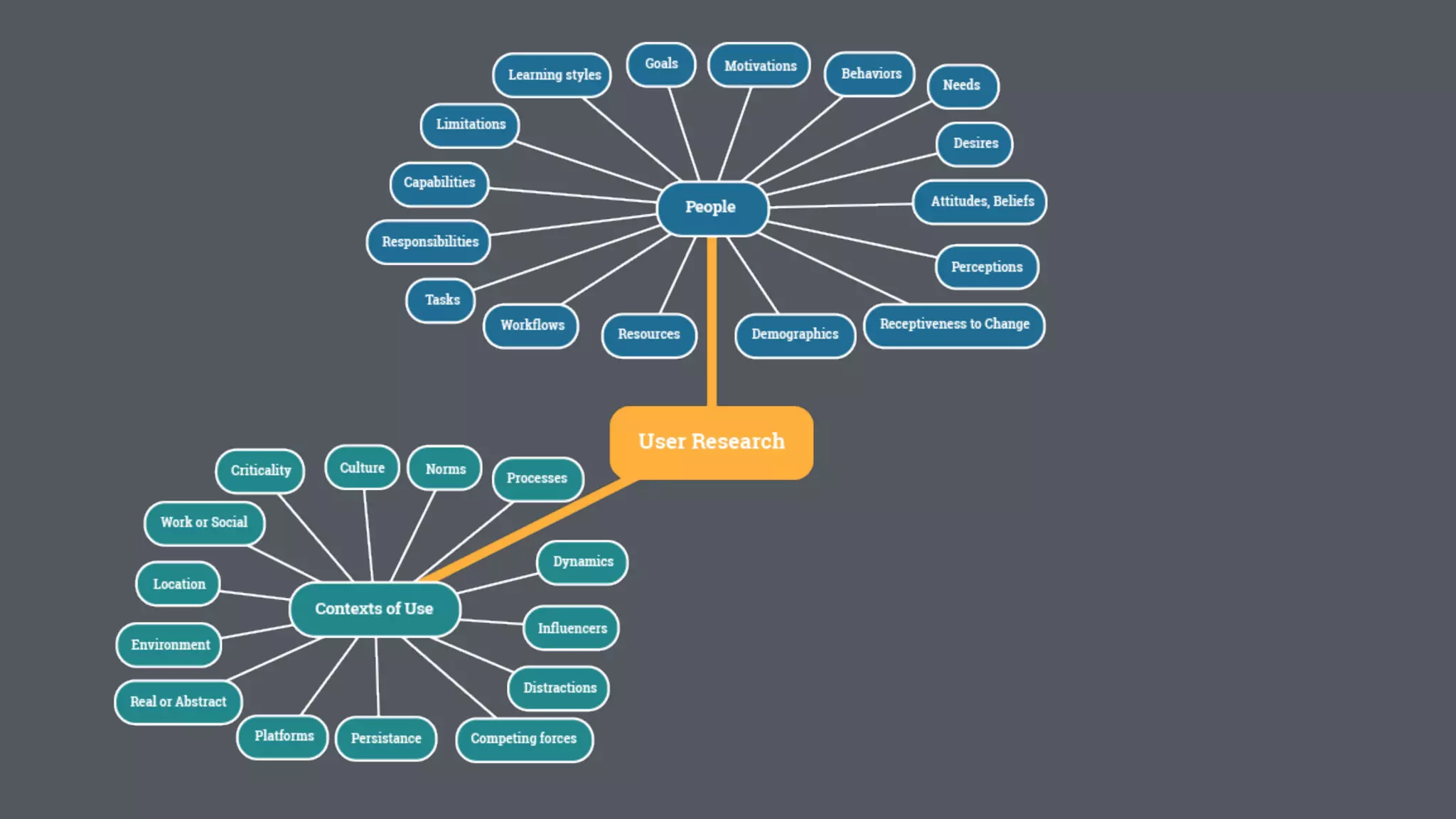

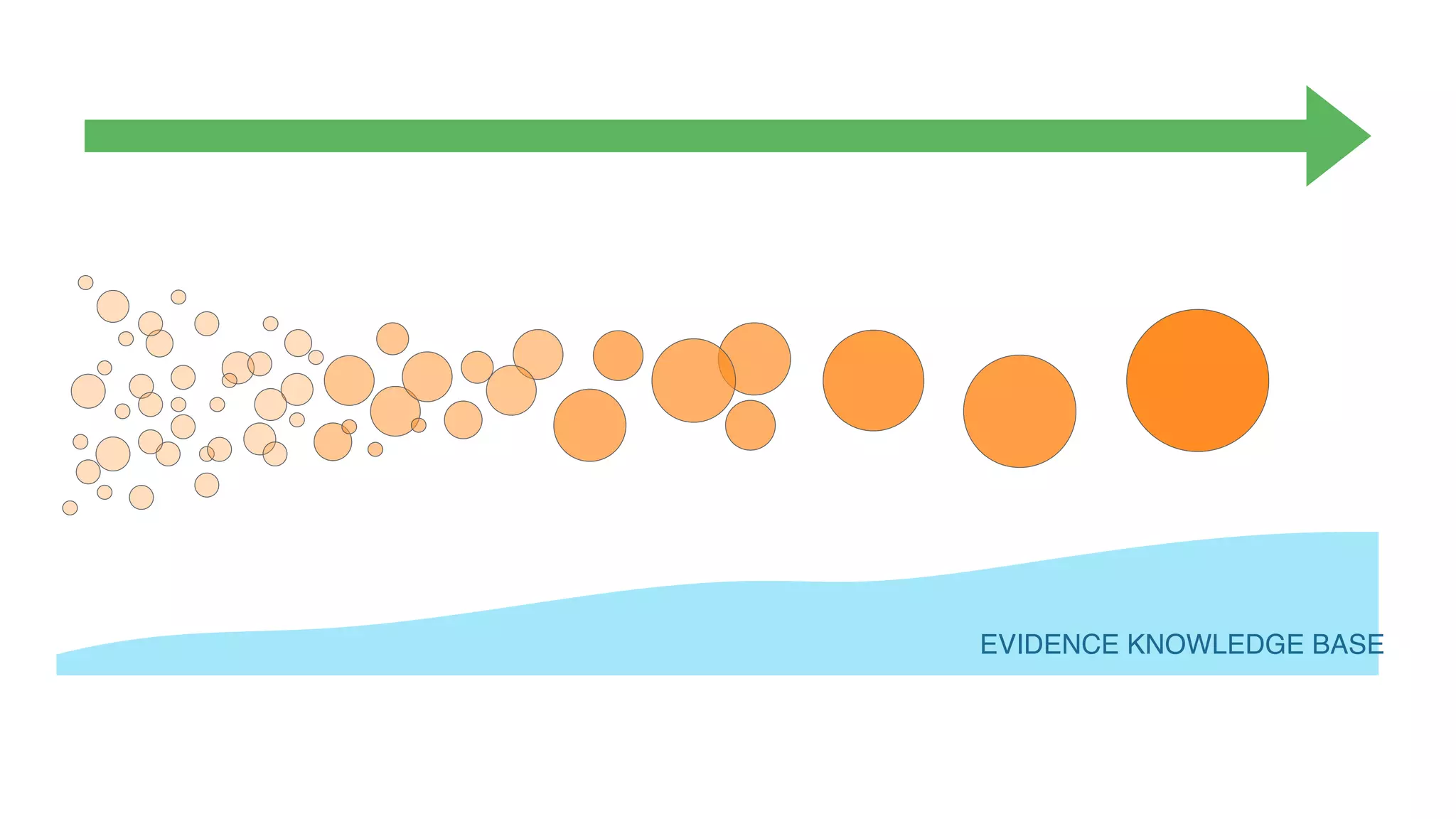

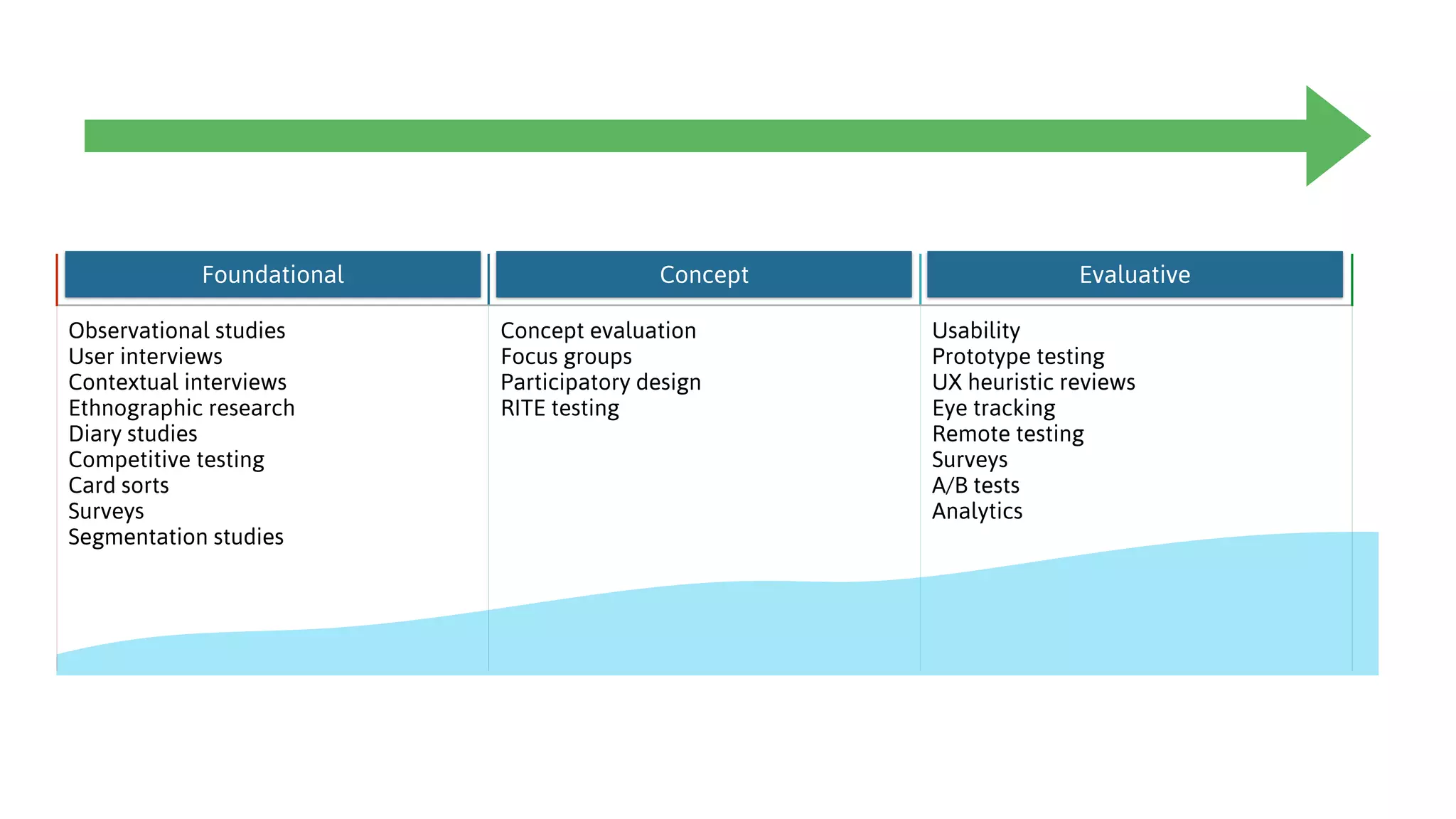

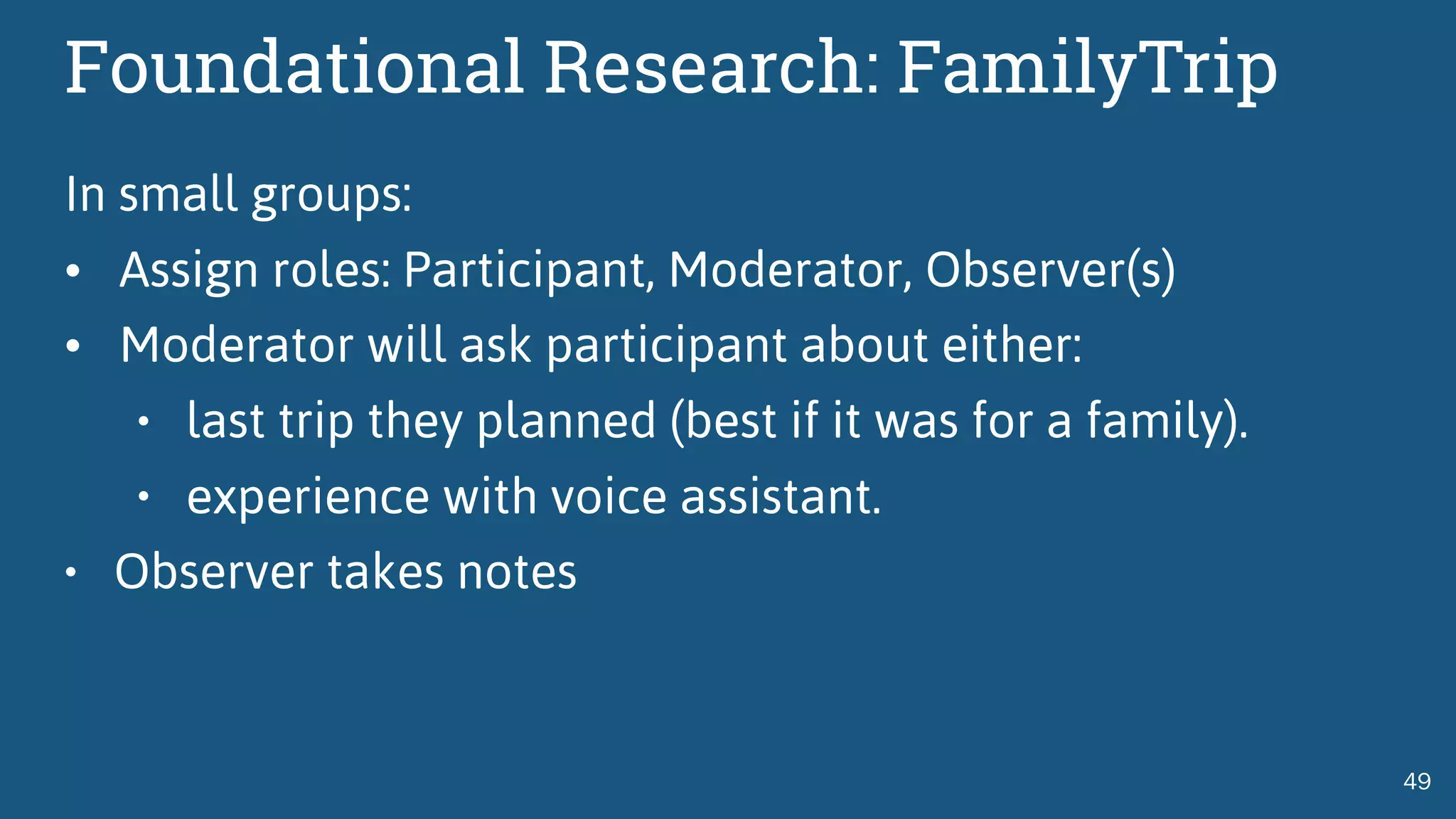

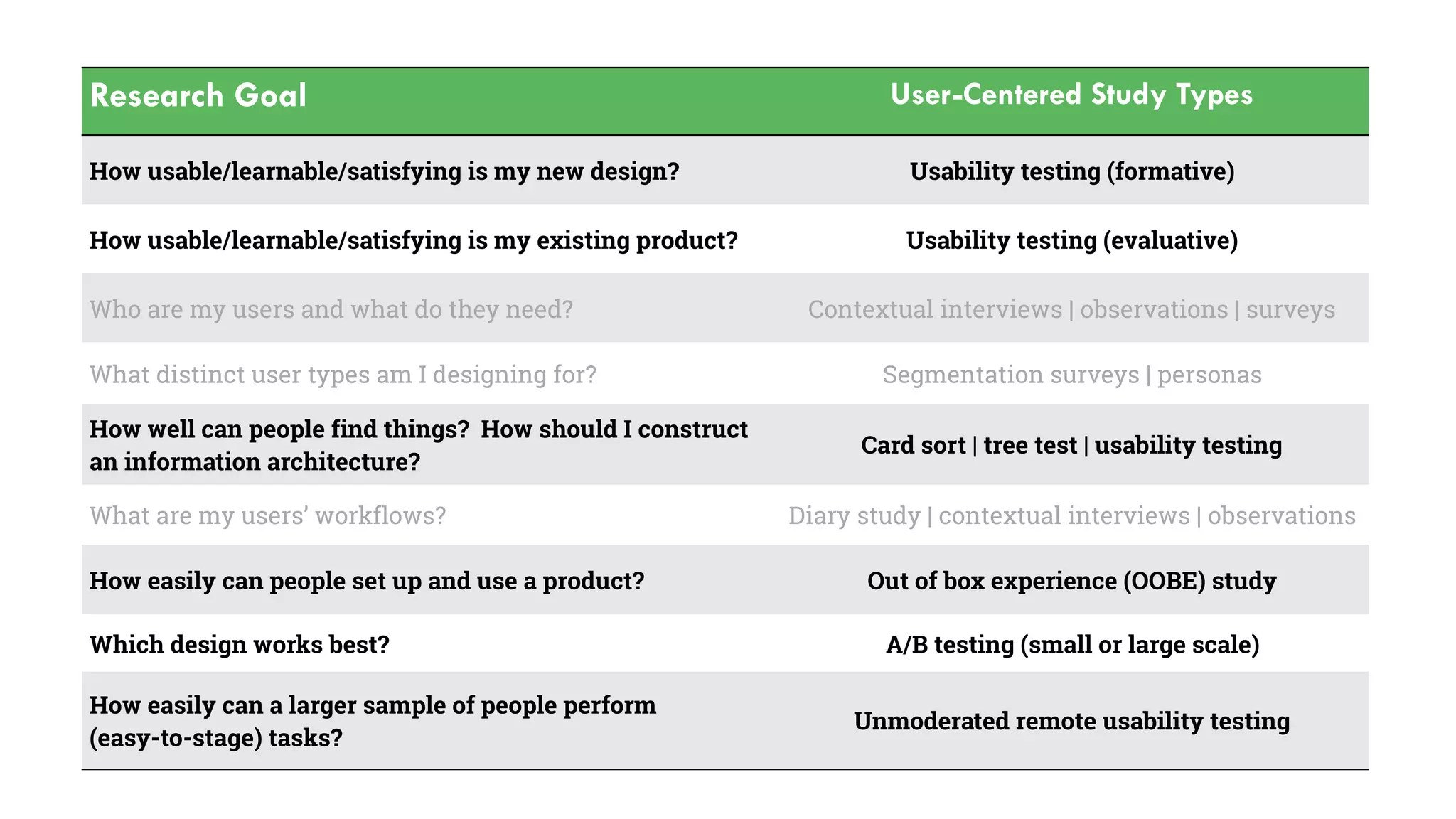

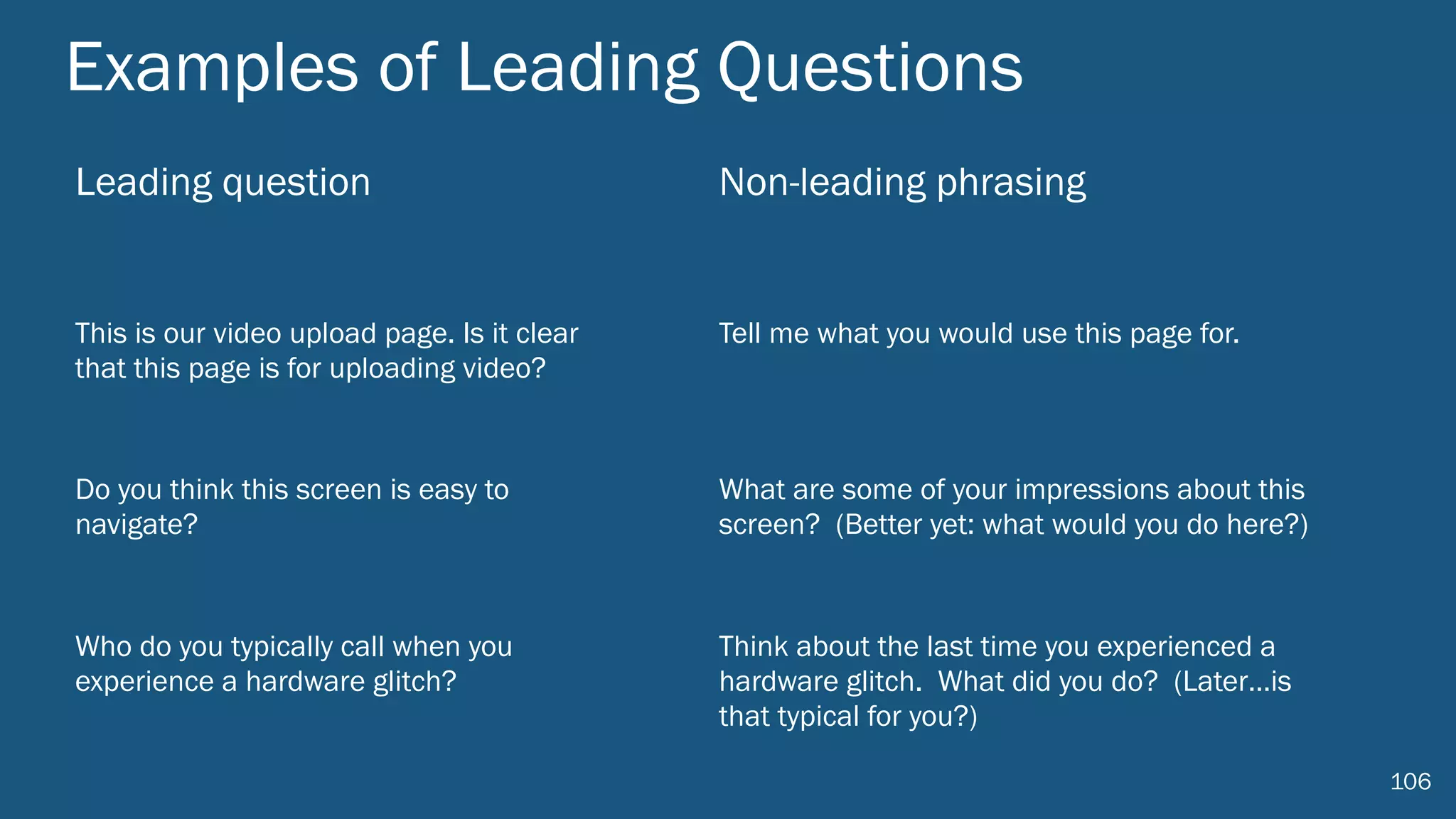

The document outlines a workshop on integrating user research into the product development process. It emphasizes several research methodologies, including foundational, conceptual, and evaluative research, for gathering insights and validating design decisions. Participants are encouraged to apply these techniques to better understand user goals and improve product outcomes, with the aim of maximizing the return on investment from user research.