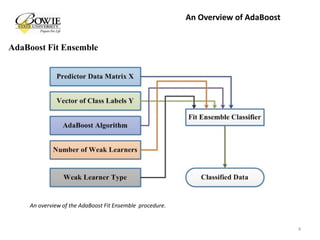

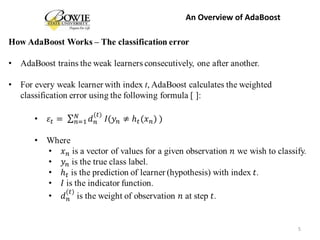

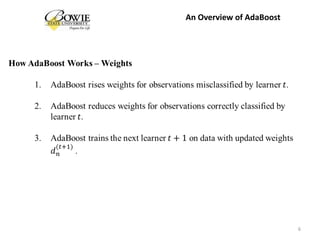

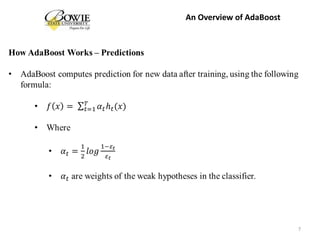

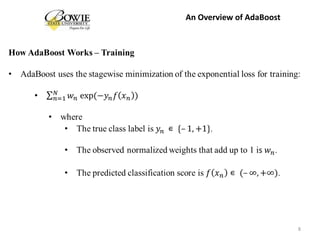

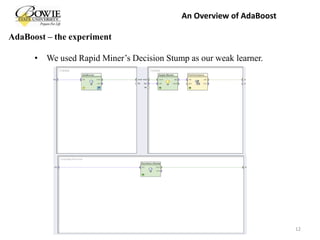

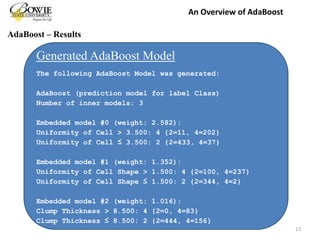

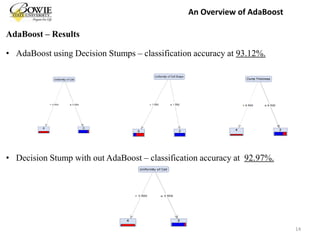

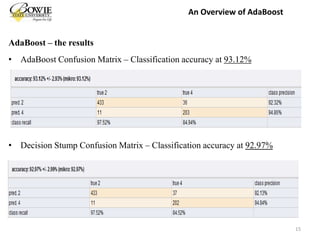

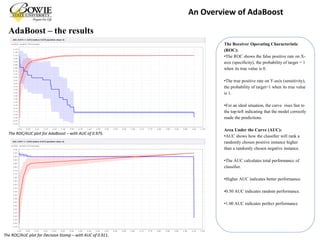

AdaBoost is an ensemble learning algorithm that combines multiple weak learners into a strong learner. It runs for several iterations: after each one, it raises the weights of the examples that were misclassified, so that the weak learner added in the next iteration focuses on those harder examples. The document presents an experiment using AdaBoost with decision stumps on a cancer dataset, reporting a classification accuracy of 93.12% compared with 92.97% for decision stumps alone. In the ROC analysis, AdaBoost reached an AUC of 0.975 against 0.911 for decision stumps, which suggests that AdaBoost can build a more effective classifier than a single weak learner.
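
The reweighting loop described above can be sketched in a few lines of plain Python. This is a minimal illustration, not the code used in the experiment: the ten-point one-dimensional dataset is made up for the example (it is not the cancer dataset), labels are assumed to be +1/-1, and the helper names `stump_predict`, `train_stump`, `adaboost`, and `predict` are hypothetical.

```python
import math

def stump_predict(row, feature, threshold, polarity):
    # A decision stump: one threshold test on one feature.
    return polarity if row[feature] > threshold else -polarity

def train_stump(X, y, w):
    # Exhaustively pick the stump with the lowest weighted error.
    best = None
    for feature in range(len(X[0])):
        values = sorted({row[feature] for row in X})
        # Candidates: one threshold below the minimum, then midpoints.
        thresholds = [values[0] - 1.0]
        thresholds += [(a + b) / 2 for a, b in zip(values, values[1:])]
        for threshold in thresholds:
            for polarity in (1, -1):
                err = sum(
                    wi for row, yi, wi in zip(X, y, w)
                    if stump_predict(row, feature, threshold, polarity) != yi
                )
                if best is None or err < best[0]:
                    best = (err, feature, threshold, polarity)
    return best

def adaboost(X, y, rounds):
    n = len(X)
    w = [1.0 / n] * n  # start with uniform example weights
    ensemble = []
    for _ in range(rounds):
        err, feature, threshold, polarity = train_stump(X, y, w)
        err = min(max(err, 1e-10), 1 - 1e-10)  # avoid log(0) and division by zero
        alpha = 0.5 * math.log((1 - err) / err)  # vote weight of this stump
        ensemble.append((alpha, feature, threshold, polarity))
        # Raise weights of misclassified examples, lower the rest, renormalize.
        w = [
            wi * math.exp(-alpha * yi * stump_predict(row, feature, threshold, polarity))
            for row, yi, wi in zip(X, y, w)
        ]
        total = sum(w)
        w = [wi / total for wi in w]
    return ensemble

def predict(ensemble, row):
    # Sign of the alpha-weighted vote over all stumps.
    score = sum(a * stump_predict(row, f, t, p) for a, f, t, p in ensemble)
    return 1 if score >= 0 else -1

# Toy data (made up for illustration): no single threshold separates it.
X = [[float(i)] for i in range(10)]
y = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]

for rounds in (1, 3):
    model = adaboost(X, y, rounds)
    correct = sum(predict(model, row) == yi for row, yi in zip(X, y))
    print(f"rounds={rounds}: {correct}/{len(X)} training examples correct")
```

On this toy data a single stump misclassifies three of the ten points, while three boosting rounds classify all ten training points correctly. These figures describe only the toy example and say nothing about the accuracy or AUC values reported for the cancer dataset.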