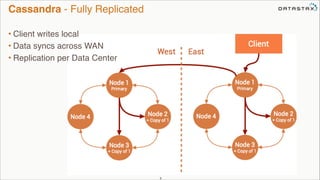

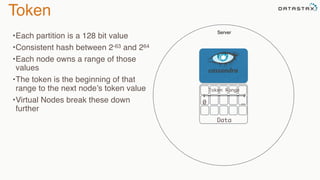

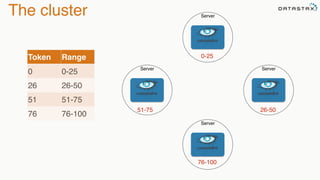

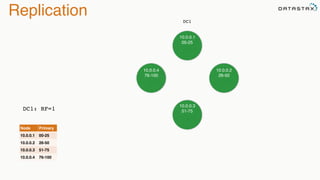

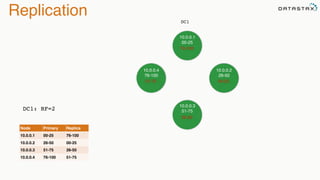

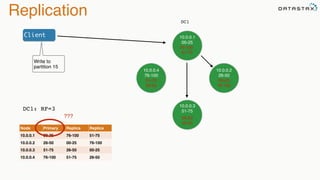

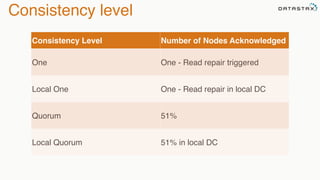

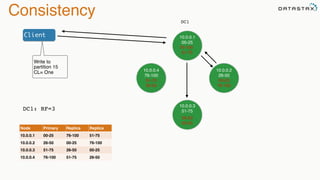

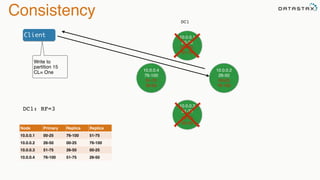

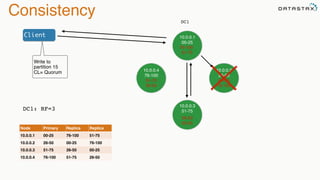

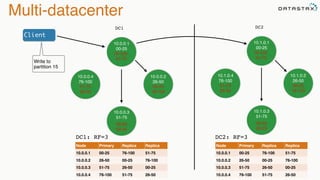

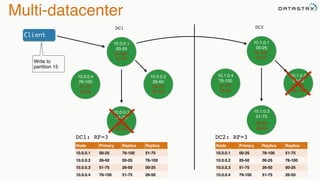

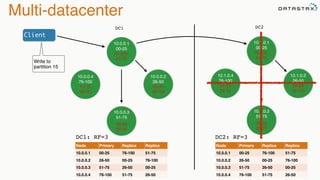

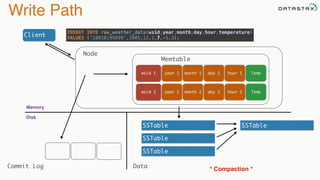

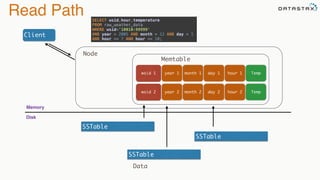

This document gives an overview of Apache Cassandra's architecture and design. Cassandra was created to meet the need for reliable, high-performance, always-available distributed databases. Its design draws on Dynamo and Bigtable, and it uses consistent hashing to partition and replicate data across nodes. Replication is configurable across multiple data centers for high availability. A write is accepted by the node that receives it and replicated to other nodes, with the consistency level determining how many replicas must acknowledge it before the write succeeds. Reads can be served from any replica, and the consistency level likewise determines how many replicas are consulted.
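
The hashing and replication scheme summarized above can be illustrated with a minimal sketch. This is not Cassandra's implementation: the `Ring` class, the `token`, `quorum`, and `overlaps` helpers, the node names, and the use of MD5 as the hash are all assumptions made for illustration, and real clusters add features such as virtual nodes and data-center-aware replica placement. The sketch places each node at one position on a hash ring, assigns a key to the first node at or after the key's position, and takes the following nodes clockwise as additional replicas.

```python
import bisect
import hashlib


def token(value: str) -> int:
    """Map a string to a ring position (MD5 is an illustrative choice)."""
    return int.from_bytes(hashlib.md5(value.encode("utf-8")).digest(), "big")


class Ring:
    """Hypothetical single-token-per-node hash ring with simple replication."""

    def __init__(self, nodes, replication_factor=3):
        if replication_factor > len(nodes):
            raise ValueError("replication factor exceeds number of nodes")
        self.replication_factor = replication_factor
        # Sort nodes by their ring position so lookups can use binary search.
        self._ring = sorted((token(node), node) for node in nodes)
        self._tokens = [t for t, _ in self._ring]

    def replicas(self, key: str):
        """Return the nodes that store `key`, walking clockwise from its token."""
        # First node whose token is >= the key's token; wrap around at the end.
        start = bisect.bisect_left(self._tokens, token(key)) % len(self._ring)
        return [
            self._ring[(start + i) % len(self._ring)][1]
            for i in range(self.replication_factor)
        ]


def quorum(replication_factor: int) -> int:
    """Majority of replicas."""
    return replication_factor // 2 + 1


def overlaps(read_replicas: int, write_replicas: int, replication_factor: int) -> bool:
    """True when every read set must intersect every write set."""
    return read_replicas + write_replicas > replication_factor


if __name__ == "__main__":
    ring = Ring(["node-a", "node-b", "node-c", "node-d"], replication_factor=3)
    print(ring.replicas("user:42"))
    print(quorum(3), overlaps(quorum(3), quorum(3), 3))
```

The `overlaps` helper captures the usual reasoning behind consistency levels: if the number of replicas acknowledging a write plus the number consulted on a read exceeds the replication factor, at least one replica in every read has seen the latest acknowledged write. Lower levels trade that guarantee for lower latency and higher availability.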