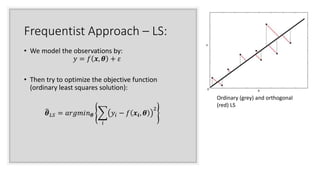

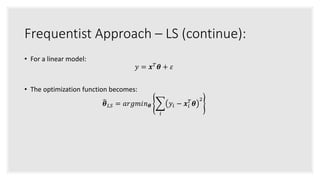

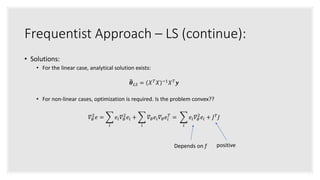

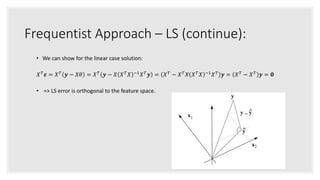

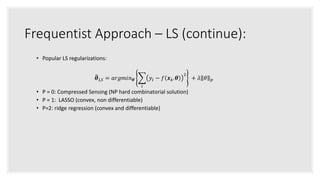

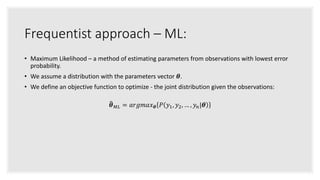

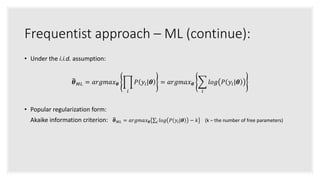

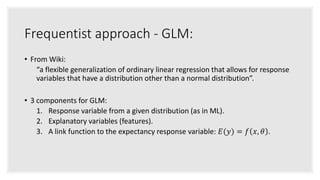

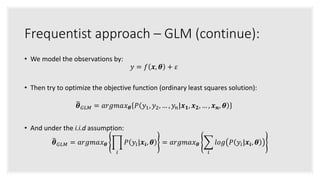

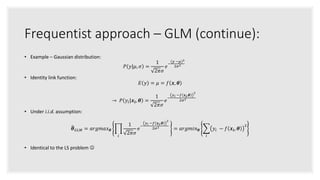

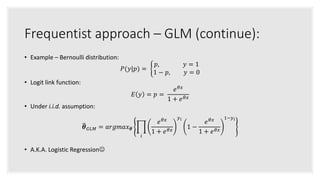

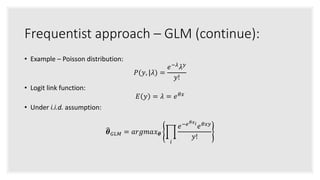

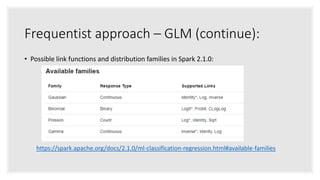

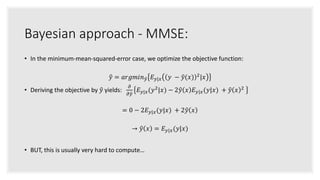

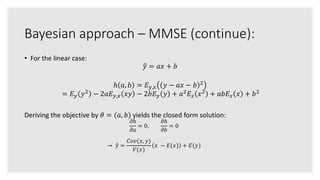

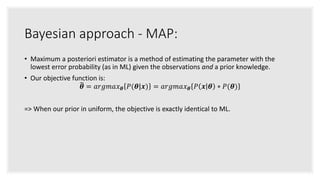

This document discusses Bayesian and frequentist approaches to statistical signal processing. It covers least squares regression, maximum likelihood (ML) estimation, generalized linear models (GLMs), minimum mean squared error (MMSE) estimation, and maximum a posteriori (MAP) estimation. As worked examples, it treats Gaussian, Bernoulli, and Poisson regression as instances of the generalized linear model framework. It also briefly covers Kalman filtering and two online learning algorithms, the perceptron and Winnow.
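The summary groups Gaussian, Bernoulli, and Poisson regression under one GLM framework. The sketch below is a minimal illustration of why they fit together, assuming canonical links and plain batch gradient ascent. Gradient ascent is a swapped-in choice for readability; Newton's method (iteratively reweighted least squares) is the more common fitter. With a canonical link, the gradient of the log-likelihood has the same form, `X^T (y - mu)`, for all three families. Only the mean function `mu = g^{-1}(eta)` changes. With the identity link and a fixed noise variance, the update is plain least squares. The names `fit_glm` and `MEAN_FUNCTIONS`, the step size, and the iteration count are hypothetical.

```python
import math

# Inverse canonical link (mean function) for each GLM family.
MEAN_FUNCTIONS = {
    "gaussian": lambda eta: eta,                            # identity link
    "bernoulli": lambda eta: 1.0 / (1.0 + math.exp(-eta)),  # logit link
    "poisson": lambda eta: math.exp(eta),                   # log link
}


def fit_glm(X, y, family, lr=0.1, iters=5000):
    """Maximize the average GLM log-likelihood by batch gradient ascent.

    With a canonical link the gradient is (1/n) * sum_i (y_i - mu_i) * x_i,
    where mu_i = mean(w . x_i). For the Gaussian family this assumes unit
    noise variance (any other variance only rescales the step size).
    """
    mean = MEAN_FUNCTIONS[family]
    n, d = len(X), len(X[0])
    w = [0.0] * d
    for _ in range(iters):
        grad = [0.0] * d
        for xi, yi in zip(X, y):
            eta = sum(wj * xj for wj, xj in zip(w, xi))  # linear predictor
            residual = yi - mean(eta)                    # y_i - mu_i
            for j in range(d):
                grad[j] += residual * xi[j]
        w = [wj + lr * gj / n for wj, gj in zip(w, grad)]
    return w


# Toy usage: noise-free Gaussian data y = 2 + 3x, with an intercept column.
xs = [i / 10 for i in range(-10, 11)]
X = [[1.0, x] for x in xs]
y = [2.0 + 3.0 * x for x in xs]
print(fit_glm(X, y, "gaussian"))  # close to [2.0, 3.0]
```

For the Bernoulli family `y` would normally hold 0/1 labels, and for the Poisson family nonnegative counts; the loop itself is unchanged, which is the point of the GLM view.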
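The summary names the perceptron and Winnow as online learners but does not give their update rules. The sketch below assumes the textbook forms. The perceptron makes an additive update `w <- w + y*x` on each mistake. Winnow uses the Winnow2 variant: Boolean features, weights initialized to 1, threshold `theta = n`, and active weights multiplied by `alpha` on a false negative or divided by `alpha` on a false positive. The function names, `alpha = 2`, and the epoch limit are hypothetical choices.

```python
def perceptron(examples, epochs=100):
    """Perceptron: additive, mistake-driven update w <- w + y * x.

    examples: list of (x, y), x a feature list (include a constant 1 for
    the bias), y in {-1, +1}.
    """
    w = [0.0] * len(examples[0][0])
    for _ in range(epochs):
        mistakes = 0
        for x, y in examples:
            score = sum(wj * xj for wj, xj in zip(w, x))
            pred = 1 if score > 0 else -1
            if pred != y:
                w = [wj + y * xj for wj, xj in zip(w, x)]
                mistakes += 1
        if mistakes == 0:  # a full pass with no mistakes: weights are final
            break
    return w


def winnow(examples, alpha=2.0, epochs=100):
    """Winnow (Winnow2 variant): multiplicative updates on 0/1 features.

    examples: list of (x, y), x a 0/1 list, y in {0, 1}.
    Predicts 1 when w . x >= theta, with theta = number of features.
    """
    n = len(examples[0][0])
    theta = float(n)
    w = [1.0] * n
    for _ in range(epochs):
        mistakes = 0
        for x, y in examples:
            pred = 1 if sum(wj * xj for wj, xj in zip(w, x)) >= theta else 0
            if pred == 0 and y == 1:    # promotion: boost active weights
                w = [wj * alpha if xj else wj for wj, xj in zip(w, x)]
                mistakes += 1
            elif pred == 1 and y == 0:  # demotion: shrink active weights
                w = [wj / alpha if xj else wj for wj, xj in zip(w, x)]
                mistakes += 1
        if mistakes == 0:
            break
    return w


if __name__ == "__main__":
    from itertools import product

    # Perceptron on AND of two bits (labels in {-1, +1}).
    and_data = [([1, a, b], 1 if a and b else -1)
                for a, b in product([0, 1], repeat=2)]
    print(perceptron(and_data))

    # Winnow on the disjunction x0 OR x2 over 5 Boolean features.
    or_data = [(list(x), 1 if x[0] or x[2] else 0)
               for x in product([0, 1], repeat=5)]
    print(winnow(or_data))
```

The design contrast is additive versus multiplicative updates. Winnow's mistake bound for learning a disjunction grows only logarithmically with the number of irrelevant features. That makes it attractive when most inputs are irrelevant.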