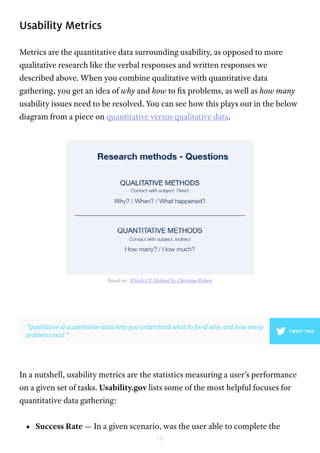

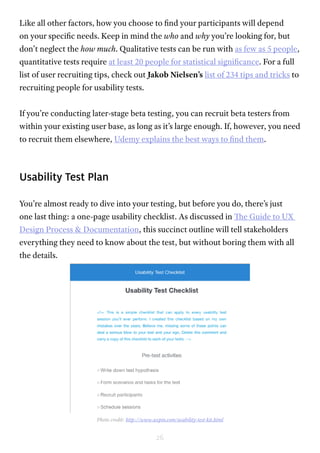

This document discusses how to plan usability testing by defining goals and metrics. It recommends sorting goals into categories of questions: about the product, its users, success metrics, competitors, the research needed, and timing. Each goal should be a simple, measurable statement or question, such as "Can visitors find needed information?" The type of data collected, whether qualitative user feedback or quantitative metrics, should depend on the goal and on who will use the results. Common metrics include success rates, error rates, and task completion times. Planning goals and metrics up front keeps the testing focused and helps ensure it produces useful results.
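
As a rough illustration of the three metrics named above, the following Python sketch computes a success rate, an error rate, and a mean task completion time from a handful of recorded task attempts. Everything in it is an assumption made for illustration: the `TaskAttempt` record, the `summarize` helper, the sample data, and the choice to define error rate as errors per attempt and to average time only over completed attempts. The source names the metrics but does not define them.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class TaskAttempt:
    """One participant's attempt at one task (hypothetical record layout)."""
    participant: str
    completed: bool   # did the participant finish the task?
    errors: int       # number of errors observed during the attempt
    seconds: float    # time spent on the attempt


def summarize(attempts):
    """Compute three common usability metrics for a single task.

    Assumed definitions (not taken from the source):
      - success rate: completed attempts / all attempts
      - error rate: mean number of errors per attempt
      - completion time: mean time over completed attempts only
    """
    if not attempts:
        raise ValueError("no attempts recorded")
    completed = [a for a in attempts if a.completed]
    return {
        "success_rate": len(completed) / len(attempts),
        "errors_per_attempt": mean(a.errors for a in attempts),
        "mean_completion_seconds": (
            mean(a.seconds for a in completed) if completed else None
        ),
    }


# Made-up sample data for the goal "Can visitors find needed information?"
attempts = [
    TaskAttempt("P1", True, 0, 42.0),
    TaskAttempt("P2", True, 2, 75.0),
    TaskAttempt("P3", False, 3, 120.0),
    TaskAttempt("P4", True, 1, 63.0),
]

summary = summarize(attempts)
for name, value in summary.items():
    print(f"{name}: {value}")
```

Tying each computed number back to one stated goal keeps the quantitative results readable for whoever will use them. Qualitative feedback would be recorded alongside these figures rather than reduced to them.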