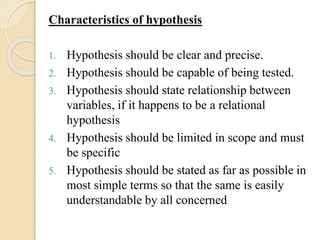

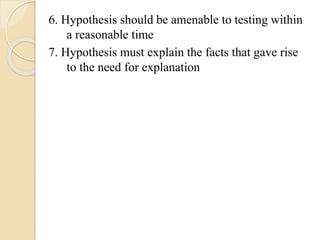

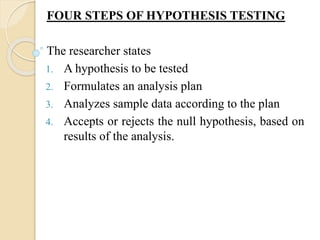

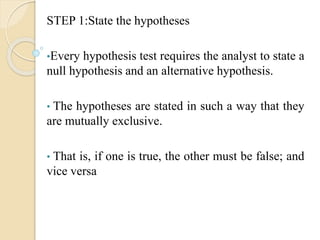

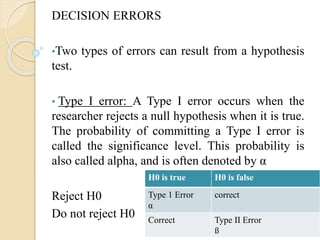

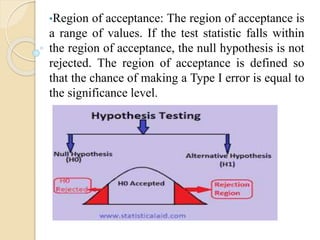

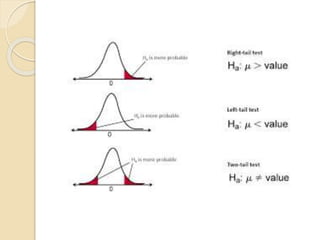

The document outlines the process of hypothesis testing: formulating a null hypothesis and an alternative hypothesis, testing the null hypothesis against sample data, and deciding whether to reject it based on the results of the analysis. It details the four steps of hypothesis testing (stating the hypotheses, formulating an analysis plan, analyzing the sample data, and interpreting the results) and addresses the types of error that can occur during testing. It also distinguishes between one-tailed and two-tailed tests and emphasizes the importance of understanding the implications of statistical significance in research.
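The four steps summarized above can be sketched in a few lines of Python. This is a minimal illustration rather than the document's own procedure: it assumes a one-sample z-test with a known population standard deviation, and the sample values, `mu0`, `sigma`, and the significance level `alpha` (the accepted Type I error rate) are hypothetical numbers chosen only to show how a one-tailed and a two-tailed test can lead to different decisions on the same data.

```python
from math import sqrt
from statistics import NormalDist, mean


def z_test(sample, mu0, sigma, tail="two"):
    """One-sample z-test of H0: population mean == mu0 (sigma assumed known).

    tail: "two" for H1: mean != mu0, "greater" for H1: mean > mu0,
          "less" for H1: mean < mu0.
    Returns the test statistic z and the p-value.
    """
    n = len(sample)
    z = (mean(sample) - mu0) / (sigma / sqrt(n))
    cdf = NormalDist().cdf  # standard normal CDF
    if tail == "two":
        p = 2 * (1 - cdf(abs(z)))
    elif tail == "greater":
        p = 1 - cdf(z)
    elif tail == "less":
        p = cdf(z)
    else:
        raise ValueError("tail must be 'two', 'greater', or 'less'")
    return z, p


def decide(p, alpha=0.05):
    """Interpret the result: reject H0 only if p falls below alpha."""
    return "reject H0" if p < alpha else "fail to reject H0"


# Step 1: state the hypotheses (hypothetical example).
#   H0: mean == 50    H1: mean != 50 (two-tailed) or mean > 50 (one-tailed)
# Step 2: analysis plan: z-test, alpha = 0.05, sigma assumed known.
sample = [52, 55, 49, 58, 54, 51, 56, 53, 55]  # made-up data
mu0, sigma, alpha = 50, 6, 0.05

# Step 3: analyze the sample data.
z, p_two = z_test(sample, mu0, sigma, tail="two")
_, p_one = z_test(sample, mu0, sigma, tail="greater")

# Step 4: interpret the results.
print(f"z = {z:.3f}")
print(f"two-tailed p = {p_two:.4f}: {decide(p_two, alpha)}")
print(f"one-tailed p = {p_one:.4f}: {decide(p_one, alpha)}")
```

With these made-up numbers the two-tailed p-value is twice the one-tailed one, so the two-tailed test fails to reject the null hypothesis at `alpha = 0.05` while the one-tailed test rejects it. That is why the direction of the alternative hypothesis should be fixed in the analysis plan, before the data are examined.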