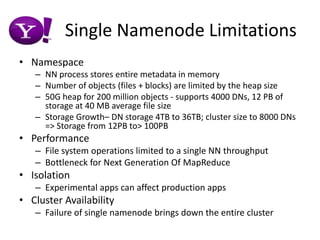

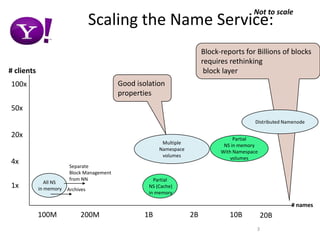

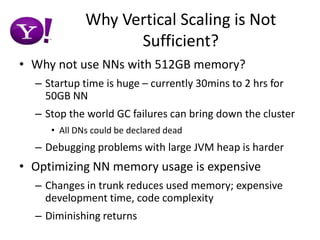

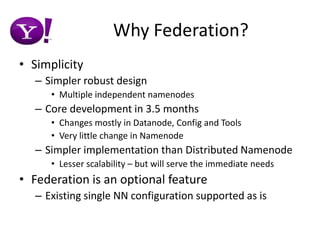

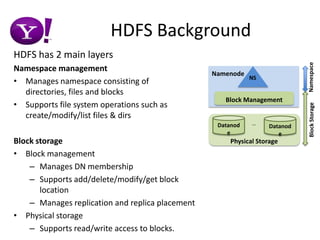

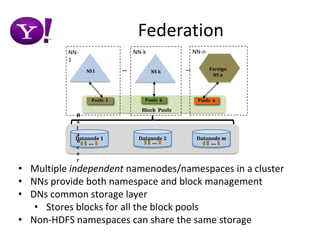

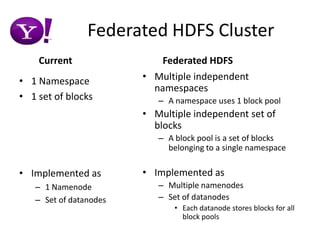

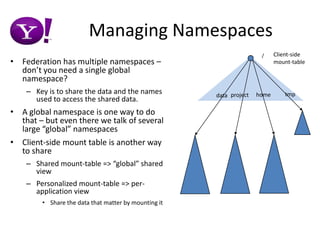

HDFS Federation allows HDFS to scale beyond the limits of a single namenode by federating the namespace and block management across multiple independent namenodes. This keeps the design and implementation simpler than a distributed namenode approach. Existing single-namenode deployments are not affected and can continue running as is. Federation preserves the robustness of each individual namenode while scaling out to more of them. It generalizes the block storage layer so that multiple namenodes can share the same block storage. This improves isolation and availability while providing a simpler way to scale HDFS in the near term.
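The idea of independent namenodes sharing one block storage layer can be illustrated with a toy model. The sketch below is not HDFS code: the `DataNode` and `NameNode` classes, the pool identifiers, and the paths are all hypothetical names chosen for illustration. It assumes each namenode tags its blocks with its own pool identifier, so that blocks from different namespaces can live on the same datanode without colliding.

```python
from dataclasses import dataclass, field


@dataclass
class DataNode:
    """Toy shared block storage: holds blocks for every namenode, keyed by pool."""
    name: str
    # pool id -> {block id -> data}; one sub-dictionary per namenode (assumption)
    pools: dict = field(default_factory=dict)

    def store(self, pool_id, block_id, data):
        self.pools.setdefault(pool_id, {})[block_id] = data

    def read(self, pool_id, block_id):
        return self.pools[pool_id][block_id]


@dataclass
class NameNode:
    """Toy independent namenode: owns one namespace and one pool of blocks."""
    pool_id: str
    namespace: dict = field(default_factory=dict)  # path -> (datanode, block id)
    next_block: int = 0

    def create(self, path, data, datanode):
        # Block ids are allocated locally; no coordination with other namenodes.
        block_id = self.next_block
        self.next_block += 1
        datanode.store(self.pool_id, block_id, data)
        self.namespace[path] = (datanode, block_id)

    def open(self, path):
        datanode, block_id = self.namespace[path]
        return datanode.read(self.pool_id, block_id)


# Two namenodes with separate namespaces share a single datanode.
dn = DataNode("dn1")
nn_a = NameNode("pool-a")
nn_b = NameNode("pool-b")
nn_a.create("/user/alice/f", b"alpha", dn)
nn_b.create("/data/logs/f", b"beta", dn)
```

In this model both namenodes allocate block id 0, yet the datanode keeps the two blocks apart because each is stored under its owner's pool. Neither namenode knows about the other's paths, which is the isolation property the summary describes.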