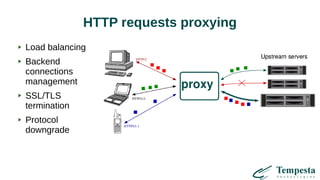

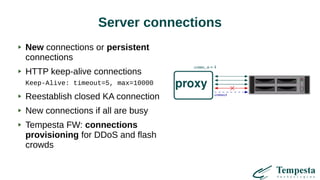

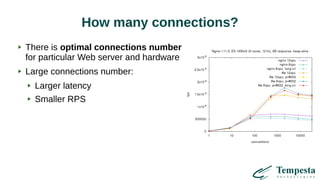

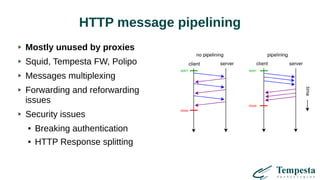

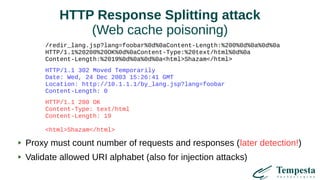

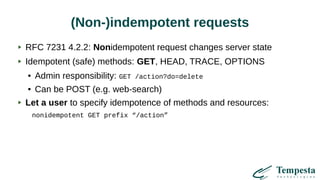

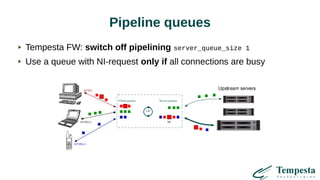

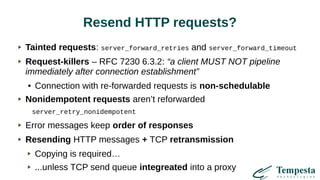

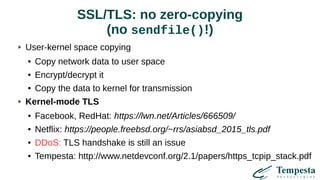

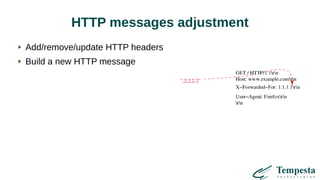

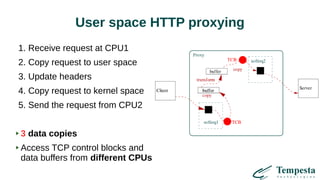

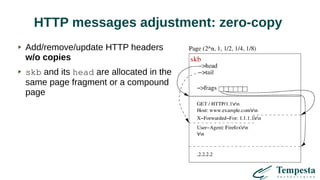

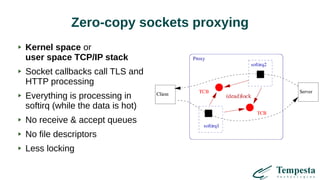

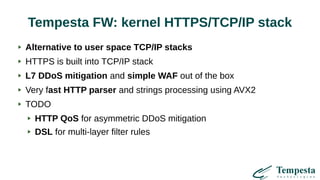

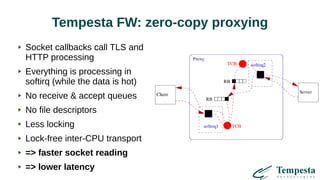

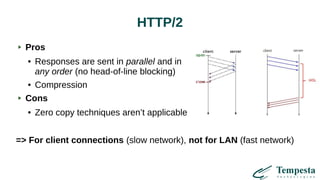

The document discusses HTTP request proxying and related topics, including load balancing, connection management, and security issues. It covers the functionality and design considerations involved in high-performance network traffic processing, specifically in Tempesta Technologies' open-source application delivery controller (ADC). It also outlines techniques for optimizing HTTP message handling, such as zero-copy socket proxying and TCP/IP stack management.