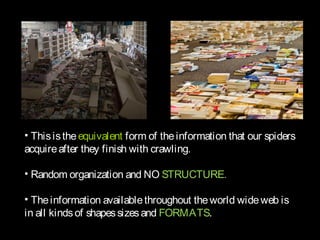

This document discusses the main parts of a search engine: spiders (or web crawlers) that fetch web pages and follow links to index their content, an indexer that structures the crawled data for searching, and search software/algorithms that determine relevance and rankings when users search. It describes how spiders crawl the web to collect information, how the indexer organizes this unstructured data, and how algorithms consider factors like keyword location, individual search engine methods, and off-site links to return relevant results.