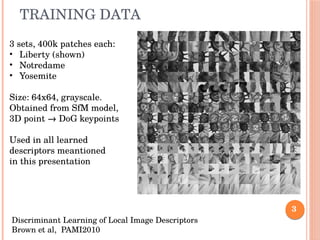

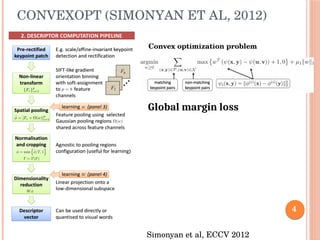

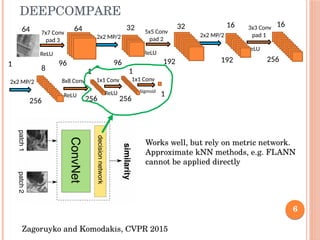

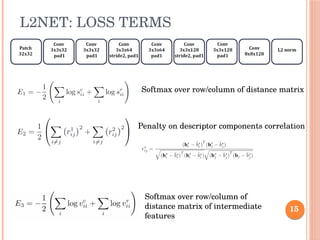

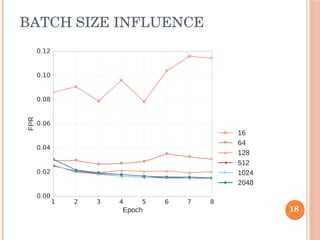

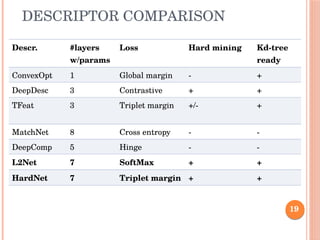

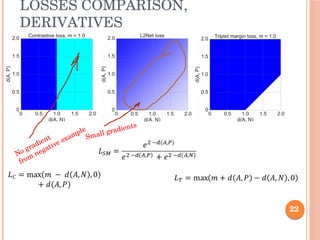

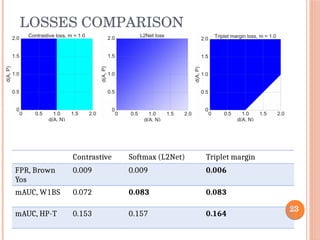

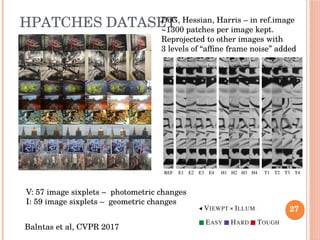

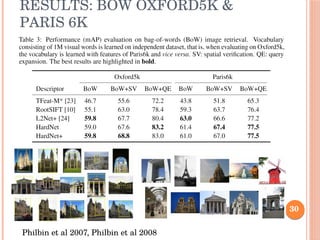

The paper presents HardNet, a convolutional network for local image description, and reviews prior methods for learning local descriptors, including L2-Net. It details the architecture and loss functions used, the training data, and the benchmarks drawn from several datasets, and it highlights the resulting performance comparisons. It also discusses hard-negative mining techniques and reports the results achieved across the different datasets.
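
To make the idea of hard-negative mining concrete, the following is a minimal NumPy sketch of a triplet margin loss that picks, for each matching descriptor pair, the closest non-matching descriptor in the same batch. The summary above does not give the exact formulation, so the function name `hardest_in_batch_triplet_loss`, the margin of 1.0, and the use of L2 distance are assumptions made for illustration and may differ from what the paper uses.

```python
import numpy as np


def hardest_in_batch_triplet_loss(anchors, positives, margin=1.0):
    """Illustrative loss; row i of `anchors` and `positives` describe the same patch.

    Both inputs are (n, d) arrays of descriptors. The margin value and the
    choice of L2 distance are assumptions, not taken from the source.
    """
    # Pairwise L2 distances: dist[i, j] = ||anchors[i] - positives[j]||
    diff = anchors[:, None, :] - positives[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=-1) + 1e-8)

    # Distances of the matching pairs sit on the diagonal.
    pos = np.diag(dist)

    # Push the diagonal far away so a matching pair is never picked as a negative.
    masked = dist + np.eye(len(dist)) * 1e6

    # Hardest negative per pair: the closest non-matching descriptor,
    # searched along both the row (anchor side) and the column (positive side).
    hardest_neg = np.minimum(masked.min(axis=1), masked.min(axis=0))

    # Hinge: penalize pairs whose hardest negative is not at least `margin` farther.
    return np.maximum(0.0, margin + pos - hardest_neg).mean()


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    a = rng.normal(size=(8, 128))
    a /= np.linalg.norm(a, axis=1, keepdims=True)  # unit-length descriptors
    p = a + 0.05 * rng.normal(size=a.shape)        # slightly perturbed matches
    print(hardest_in_batch_triplet_loss(a, p))
```

Mining negatives inside the batch avoids a separate pass over the dataset to find hard examples, at the cost that the difficulty of the negatives depends on the batch size and its composition.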