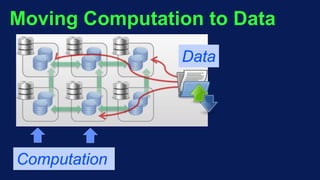

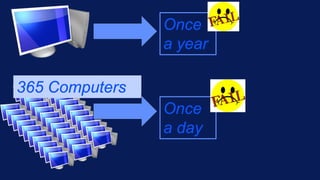

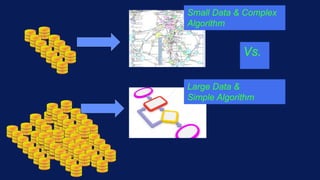

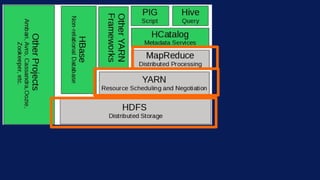

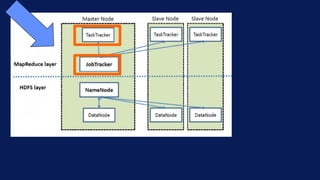

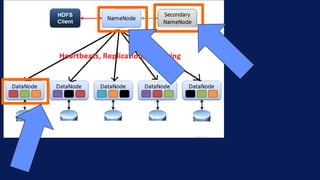

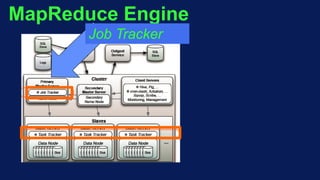

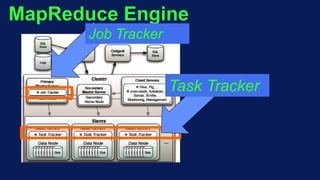

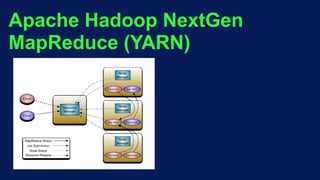

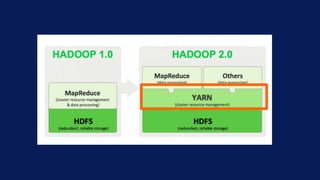

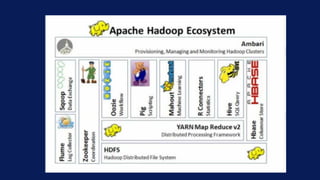

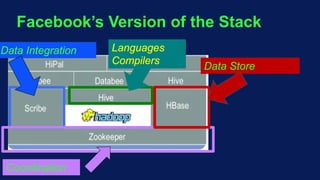

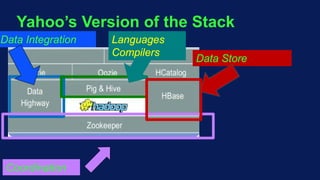

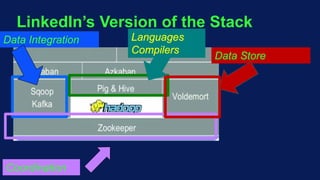

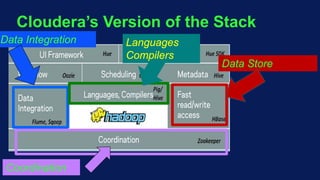

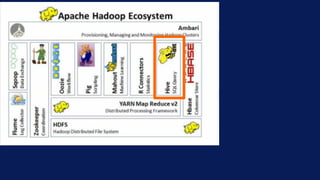

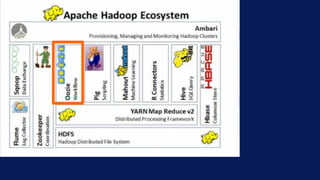

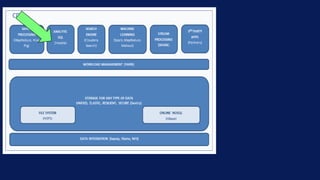

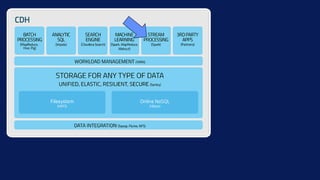

Apache Hadoop is an open-source software framework, created in 2005, for distributed storage and processing of large datasets across clusters of computers. It provides reliable, scalable computation by spreading both the data and the work across many nodes. Its core modules are Hadoop Common (shared utilities), HDFS for distributed file storage, YARN for job scheduling and cluster resource management, and MapReduce for distributed processing of large datasets. The Hadoop ecosystem has since grown well beyond these core modules and includes popular components such as Pig, Hive, HBase, ZooKeeper, Oozie, Flume, Spark, and Impala.
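To make the MapReduce model mentioned above more concrete, here is a minimal single-process sketch of its three stages (map, shuffle, reduce) applied to a word count. It is plain Python and does not use Hadoop's API; the function names `map_phase`, `shuffle`, `reduce_phase`, and `word_count` are hypothetical and chosen only for illustration. On a real cluster each stage would run in parallel across nodes, with input read from HDFS and resources allocated by YARN.

```python
from collections import defaultdict
from typing import Iterable, Iterator


def map_phase(line: str) -> Iterator[tuple[str, int]]:
    """Map: emit a (word, 1) pair for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    """Shuffle: group all emitted values by key, as the framework would
    do between the map and reduce stages."""
    grouped: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return dict(grouped)


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    """Reduce: collapse all values for one key into a single count."""
    return key, sum(values)


def word_count(lines: Iterable[str]) -> dict[str, int]:
    """Run the three stages in sequence, in one process (illustration only)."""
    mapped = (pair for line in lines for pair in map_phase(line))
    grouped = shuffle(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    print(word_count(["big data", "big cluster"]))
```

The map and reduce functions are kept free of shared state on purpose: that independence is what lets a framework like Hadoop run many copies of each on different nodes and rerun a failed task without affecting the others.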