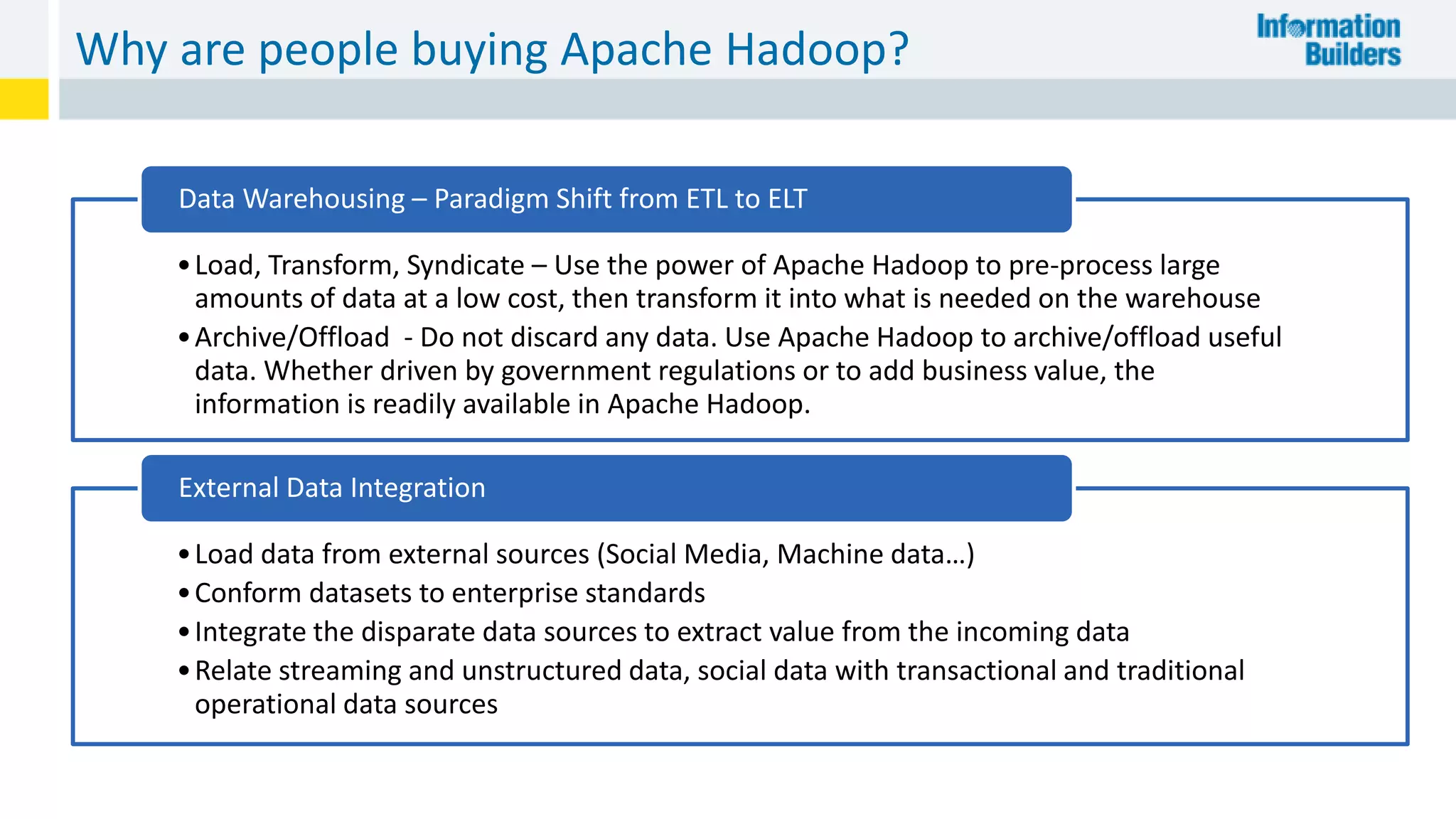

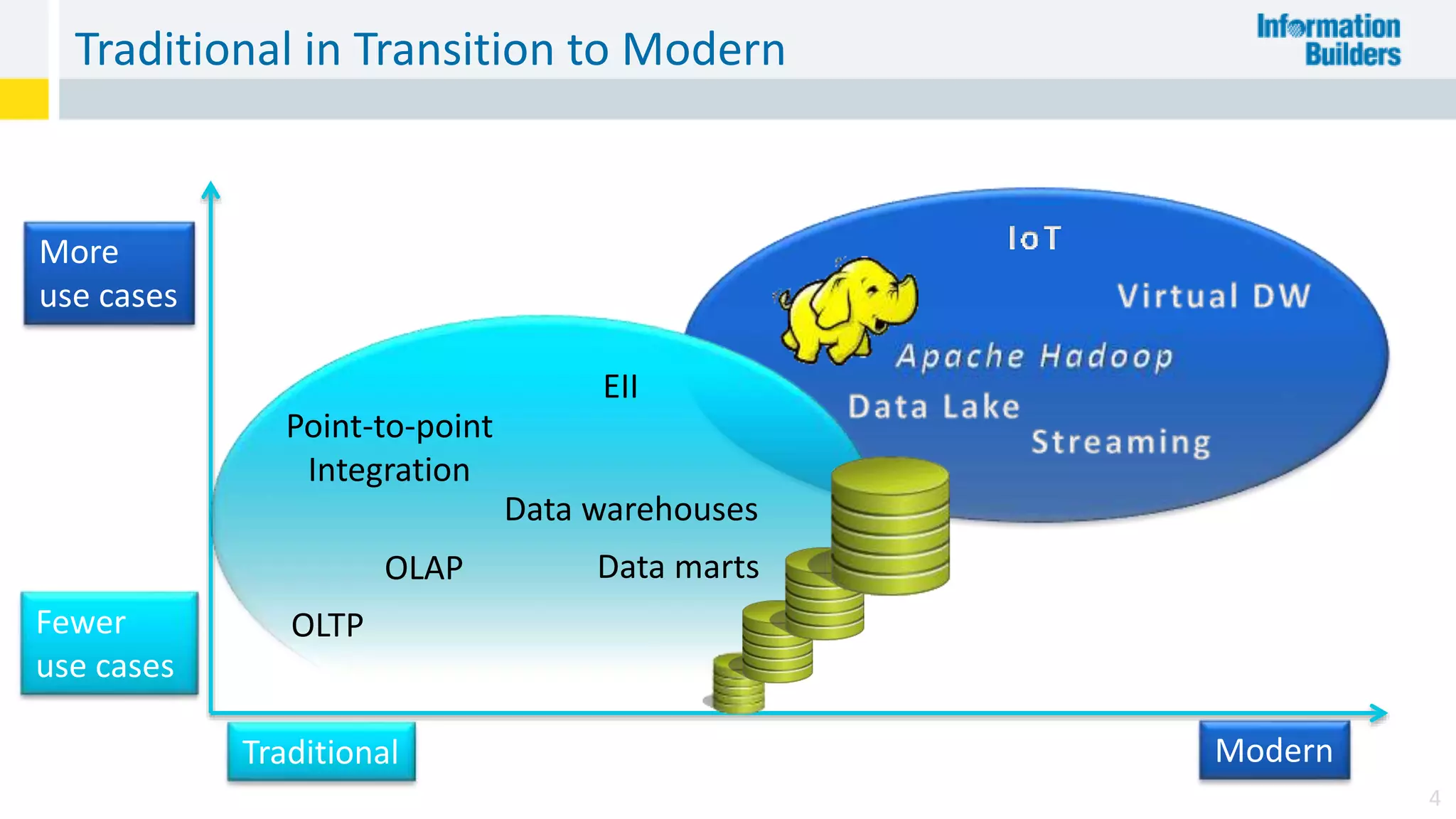

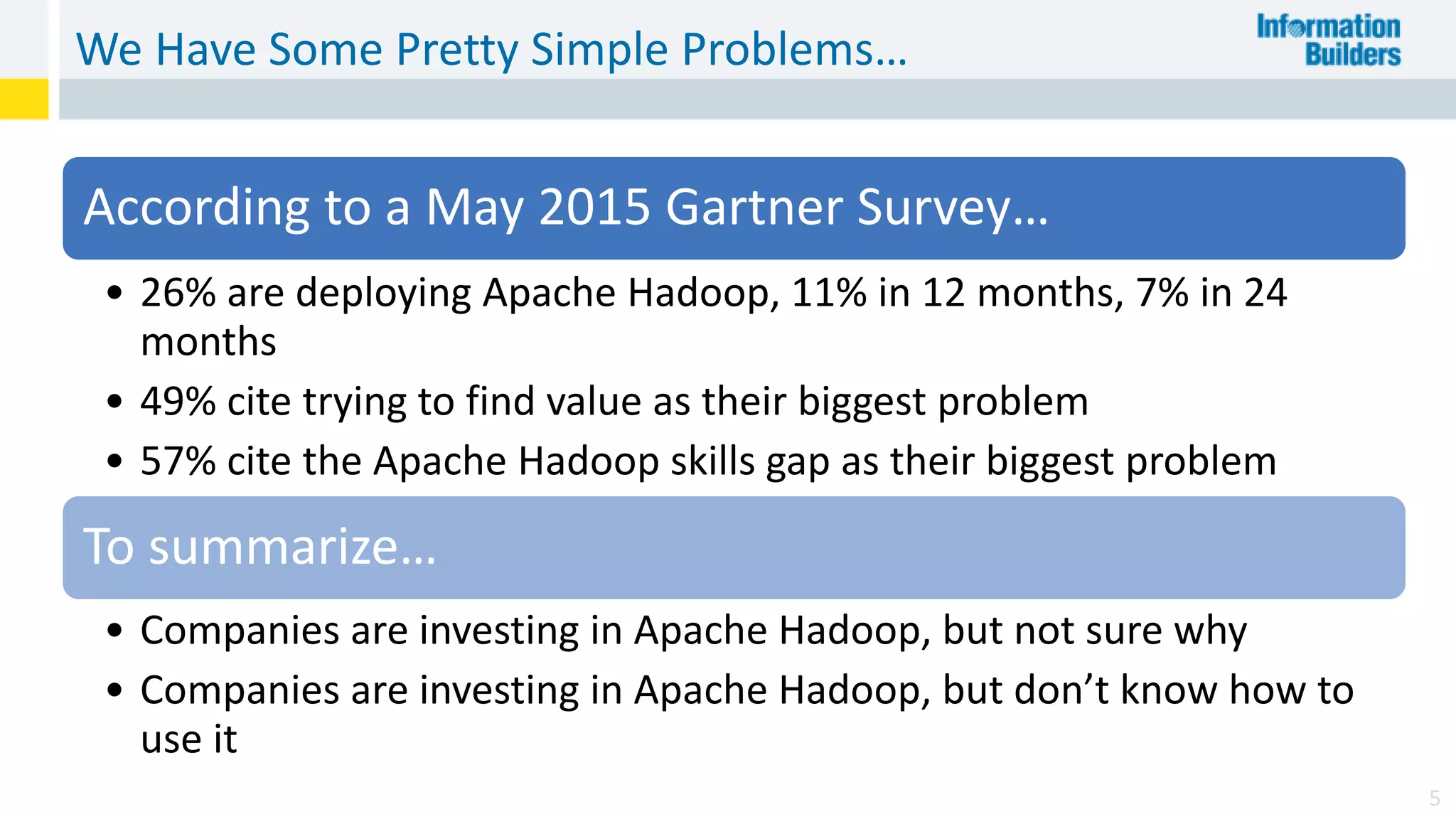

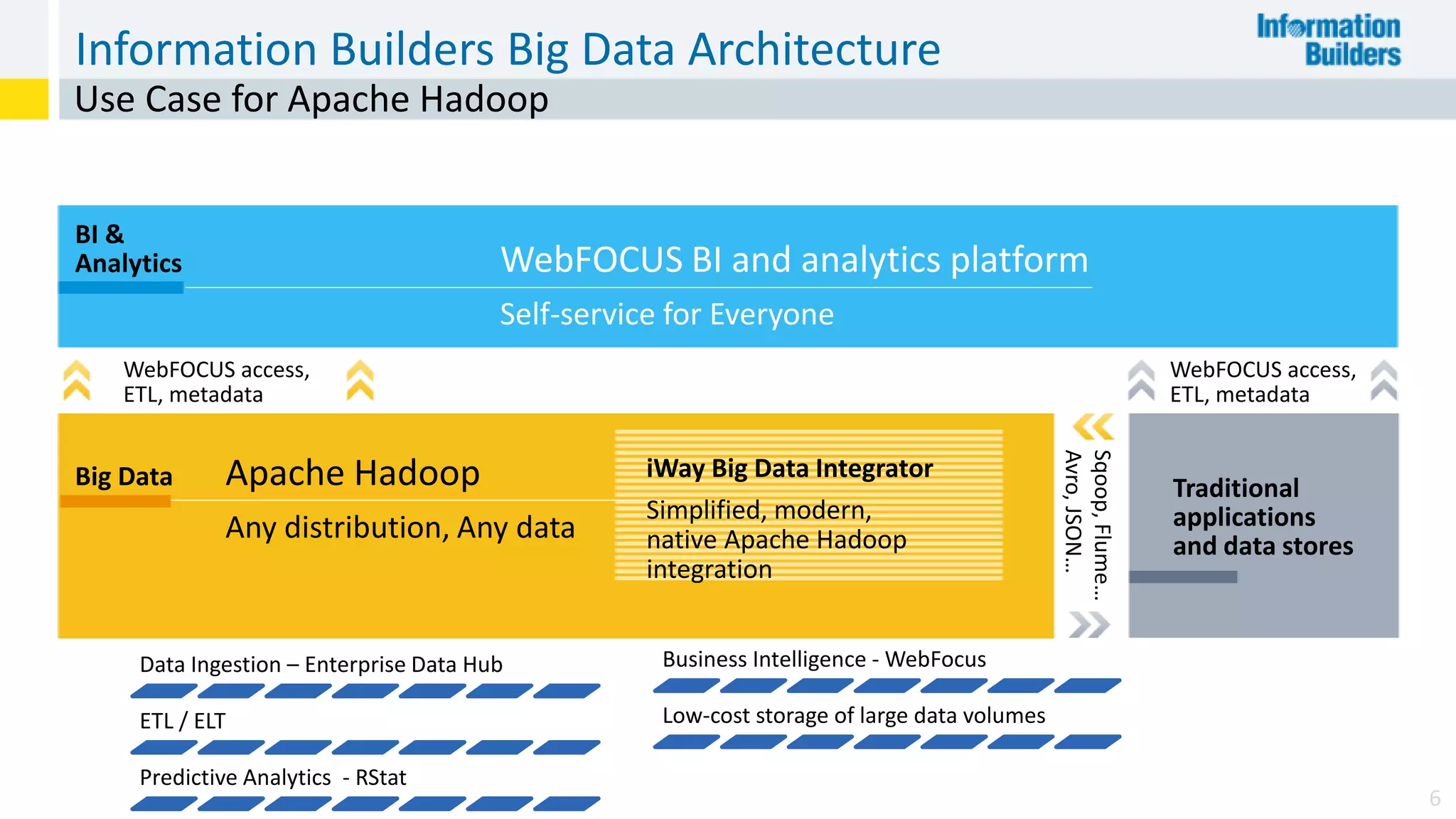

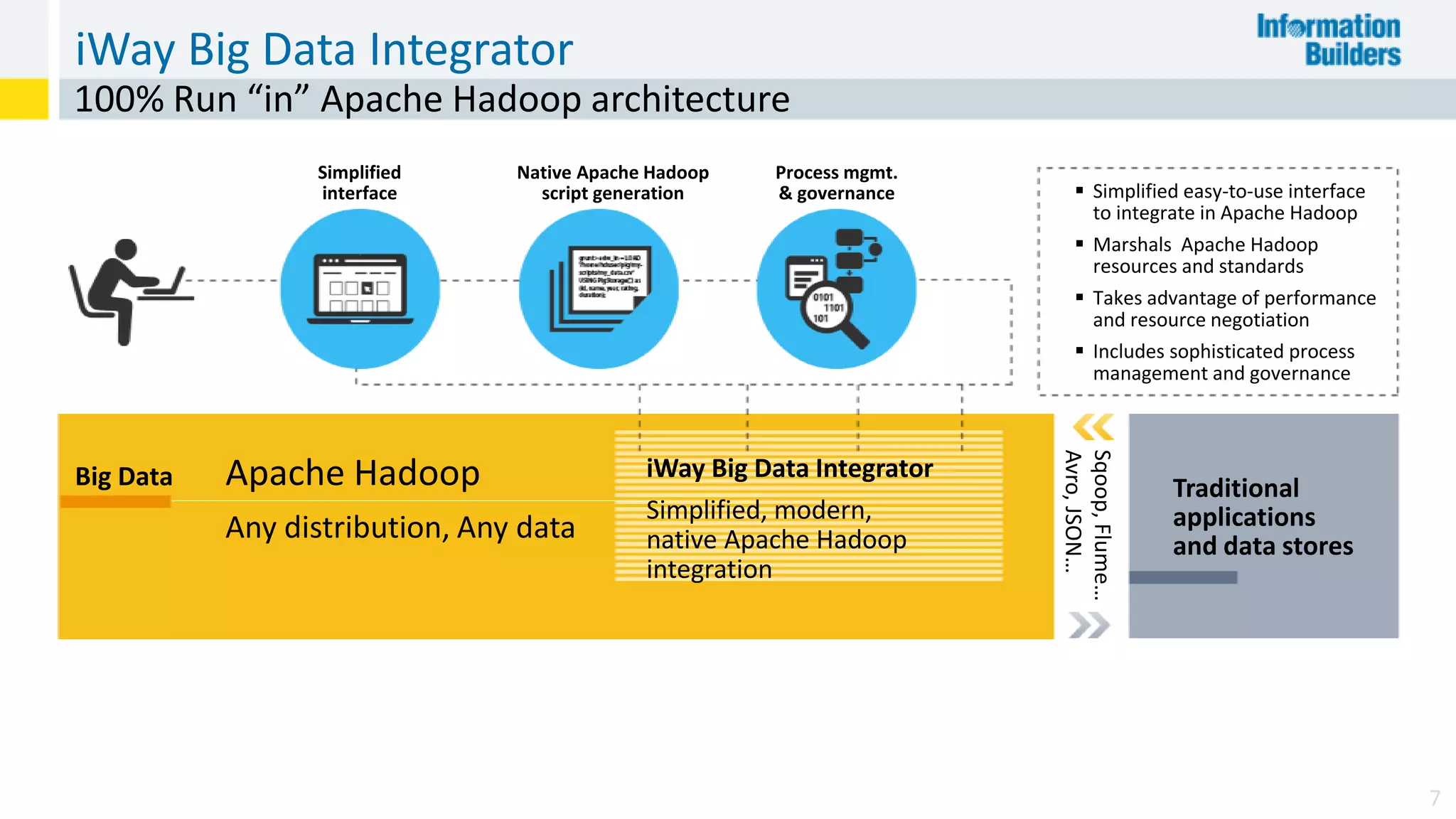

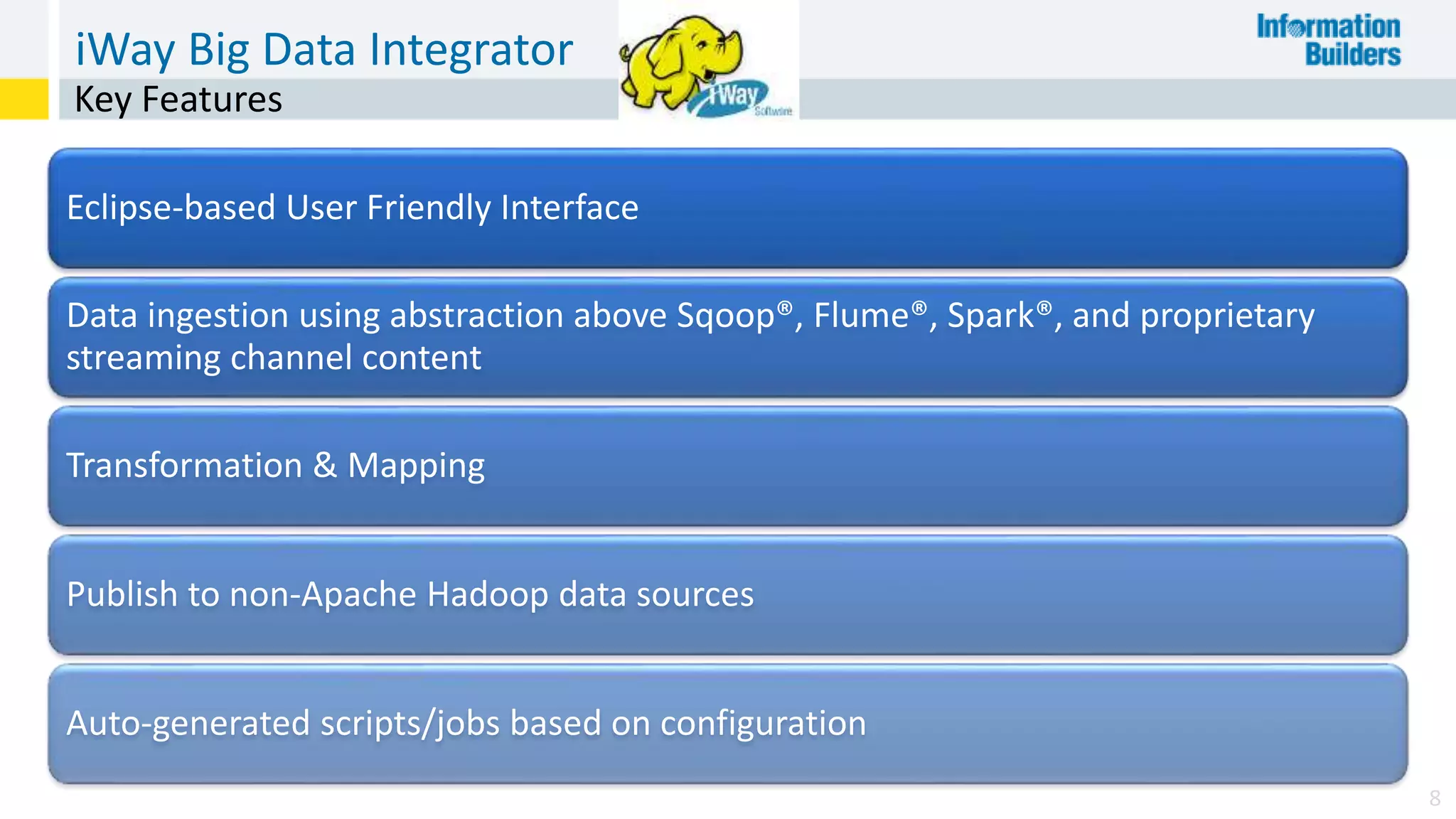

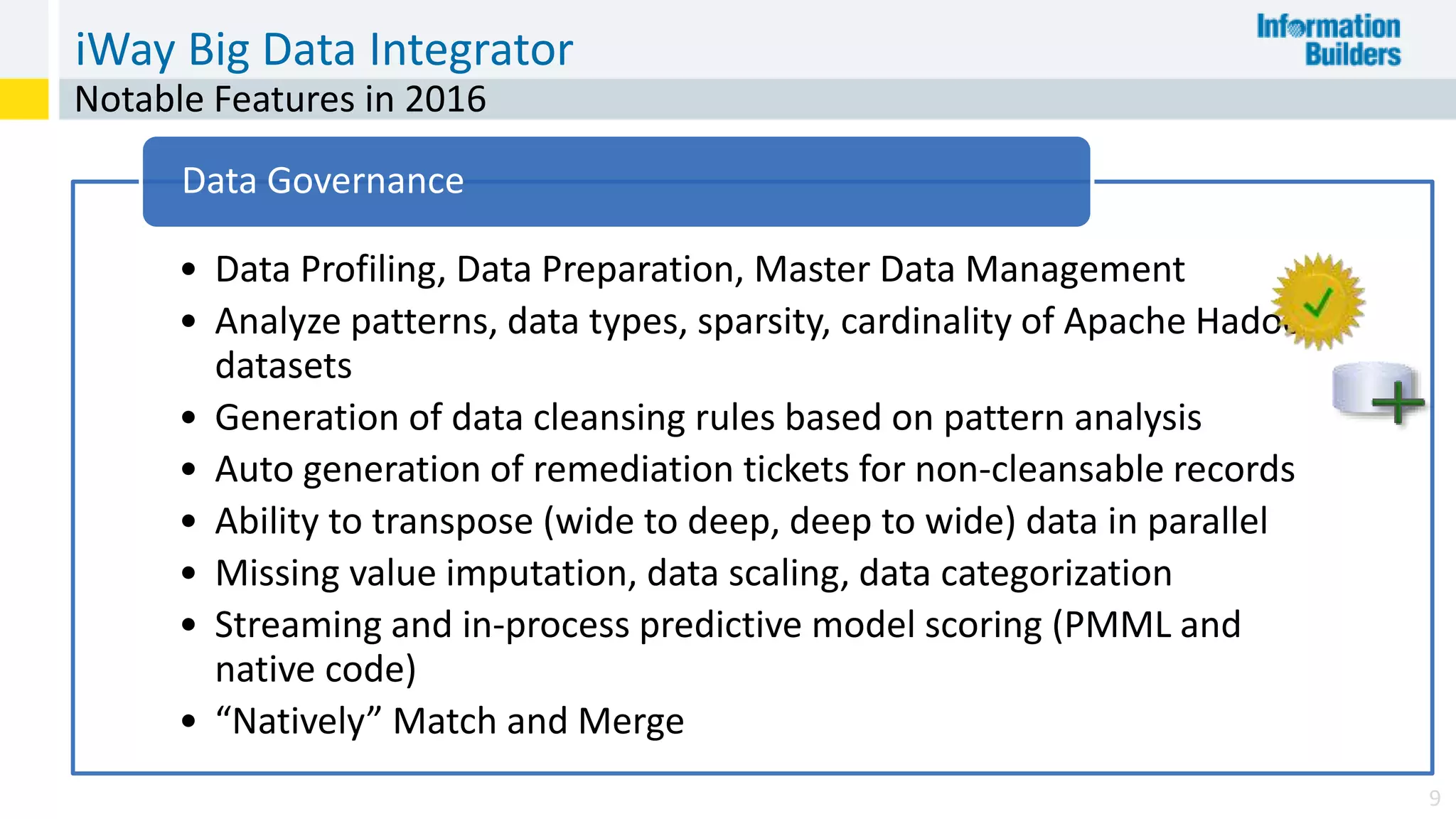

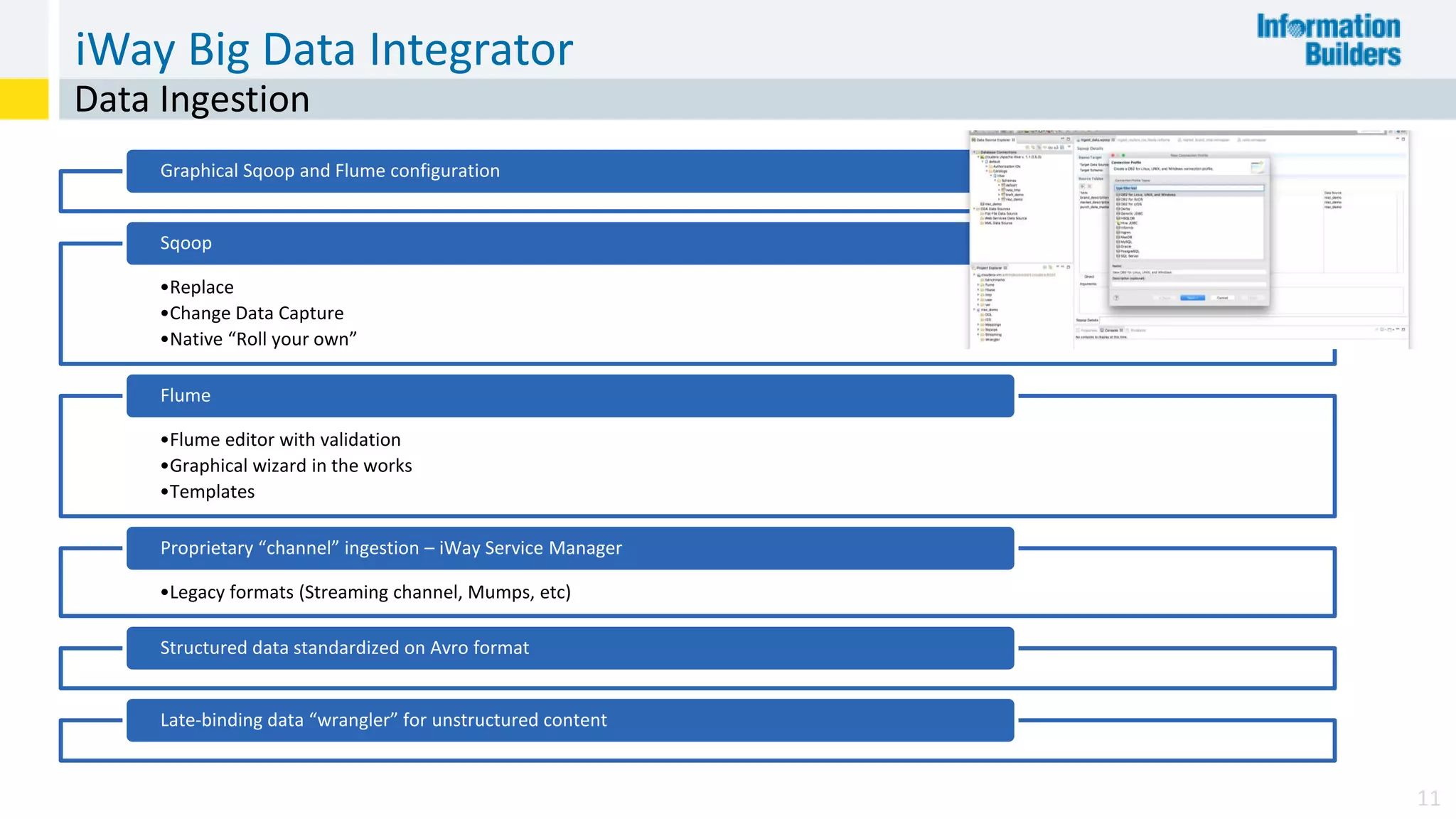

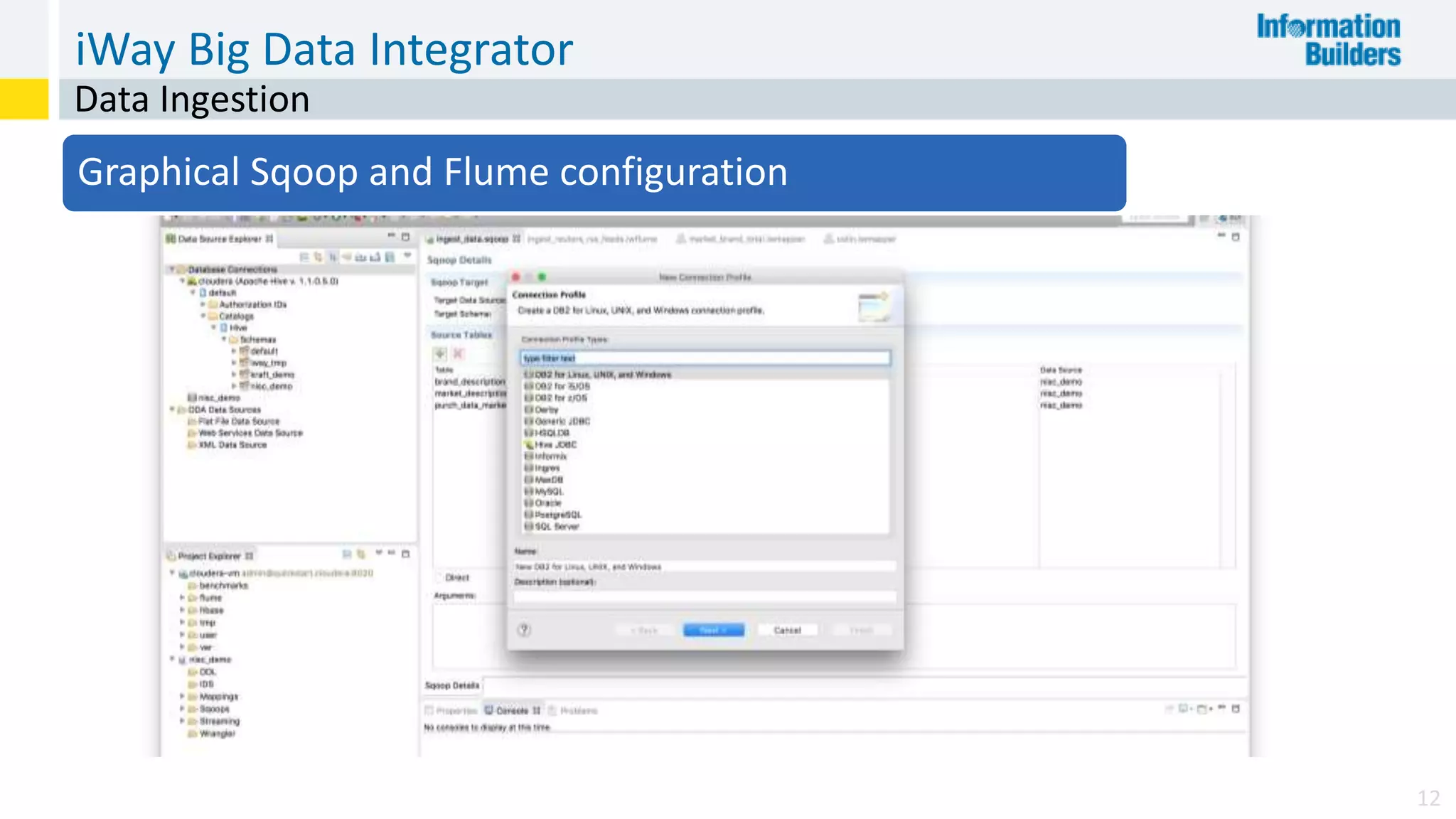

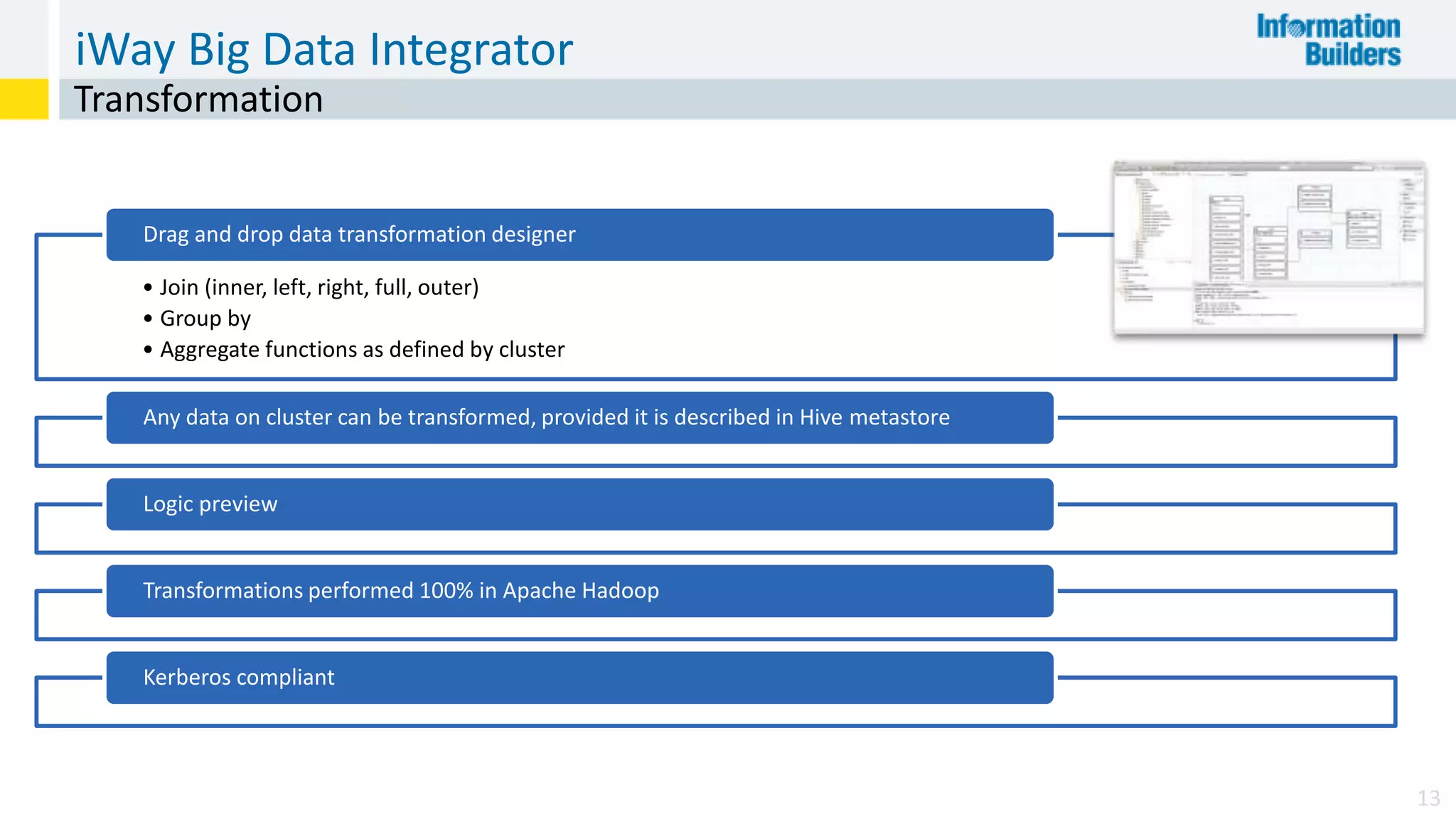

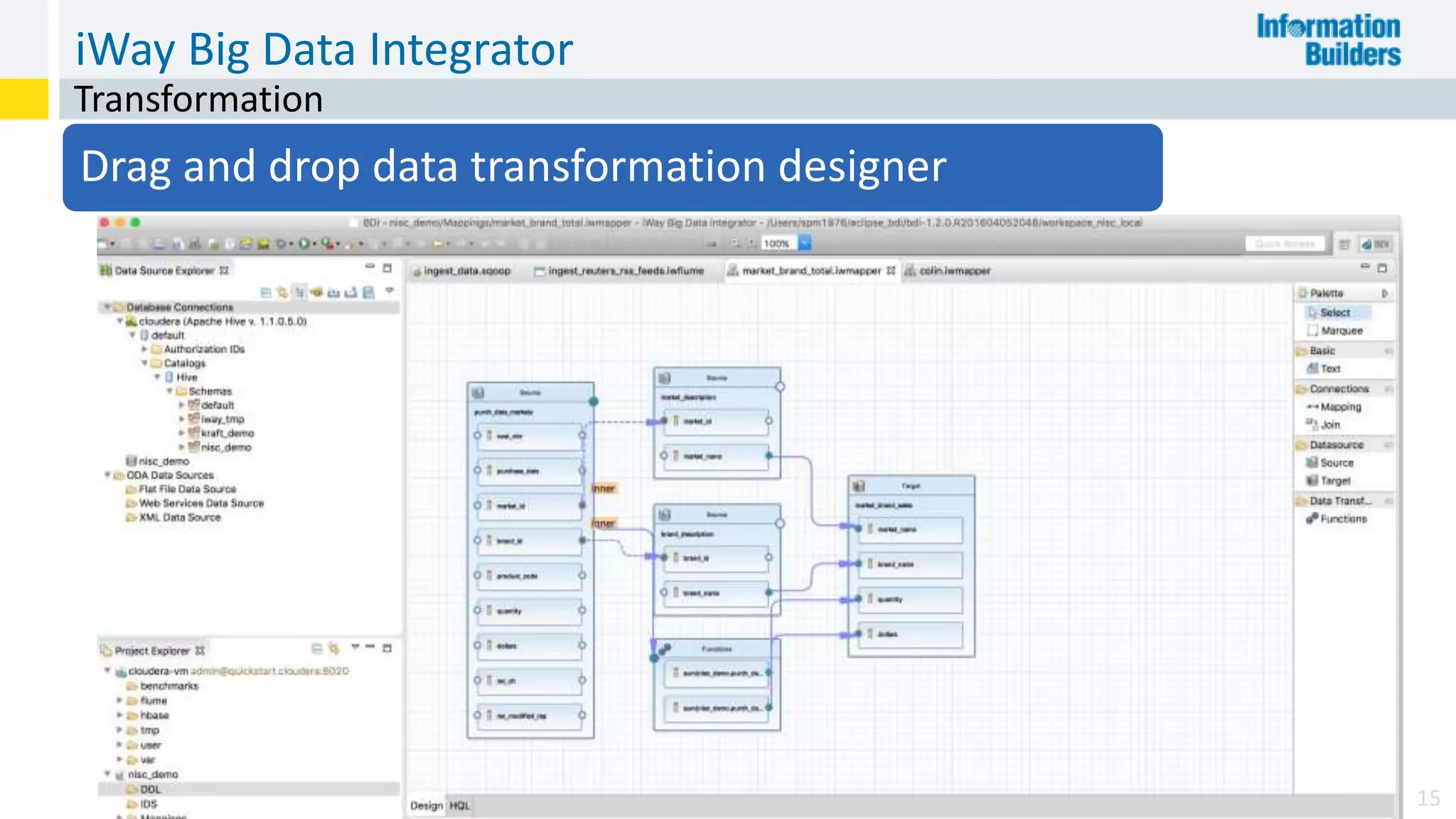

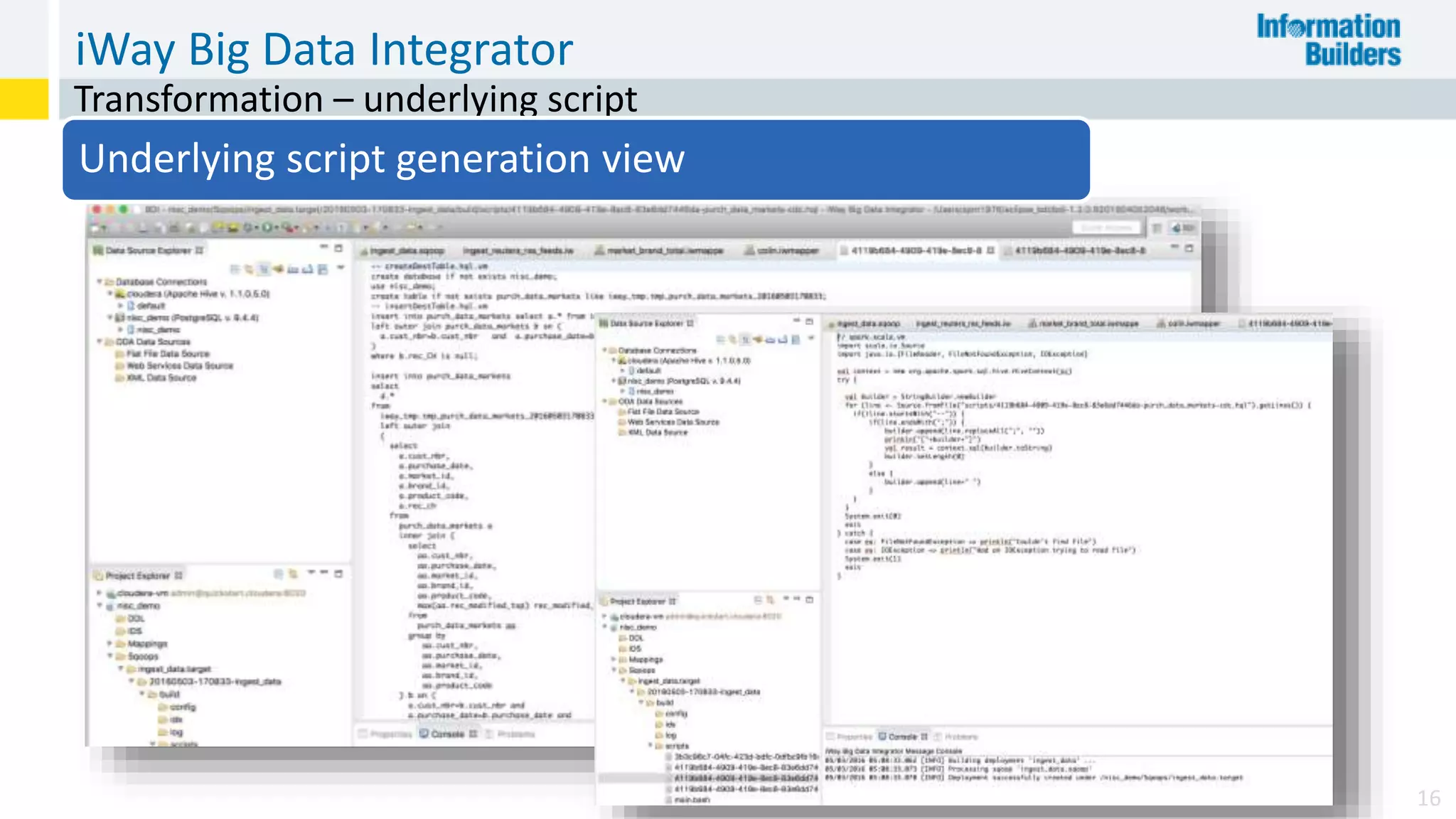

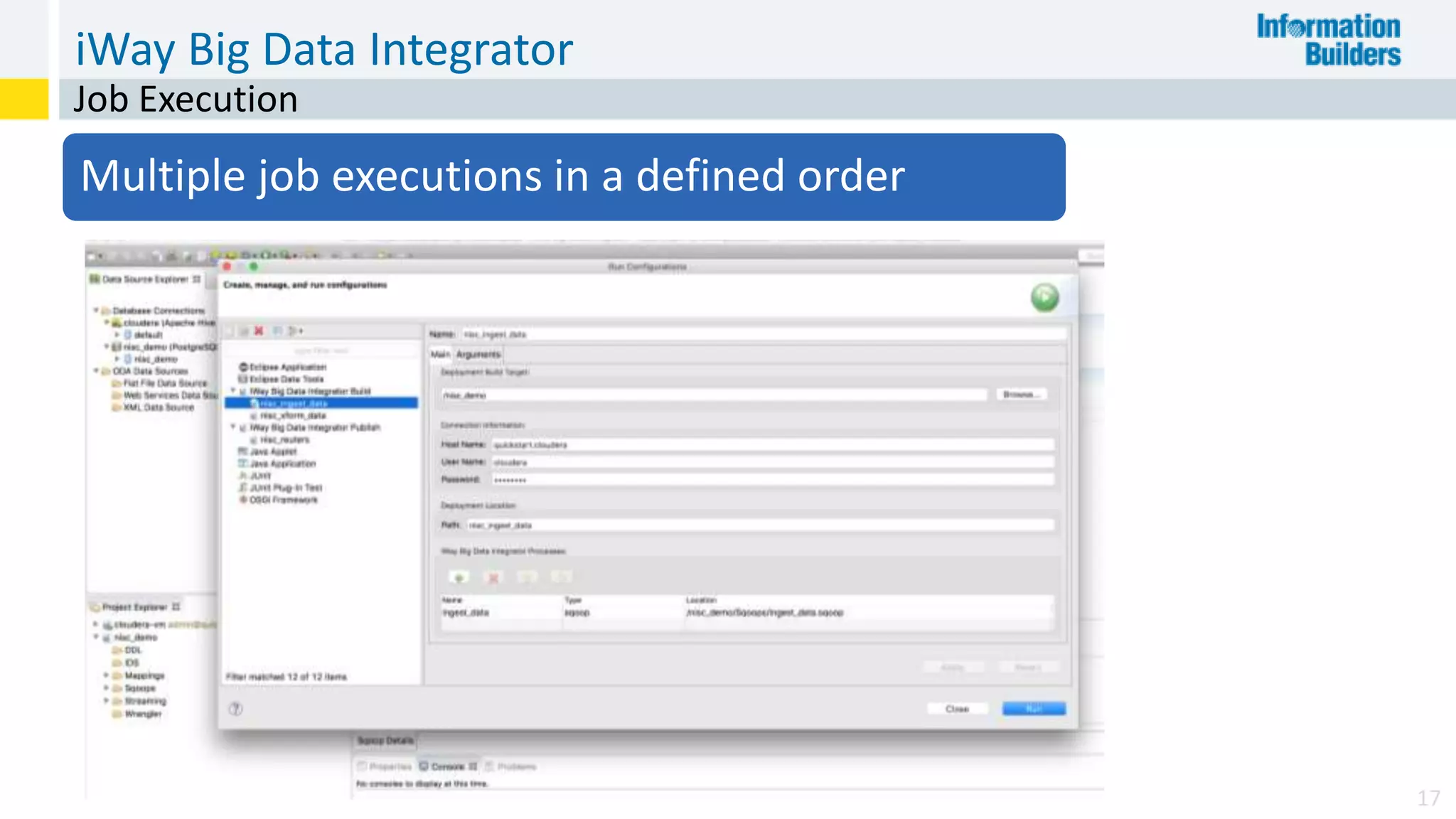

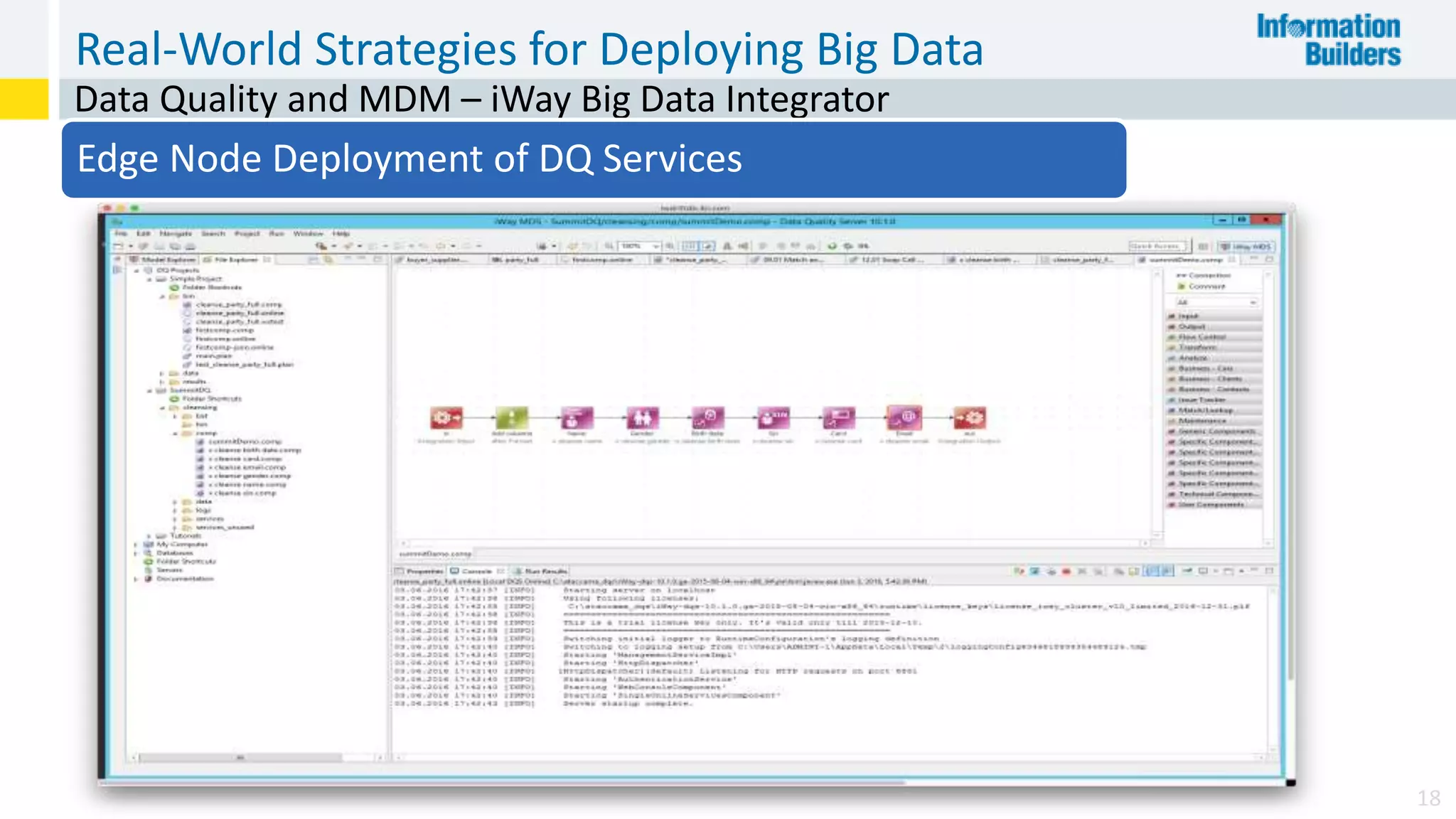

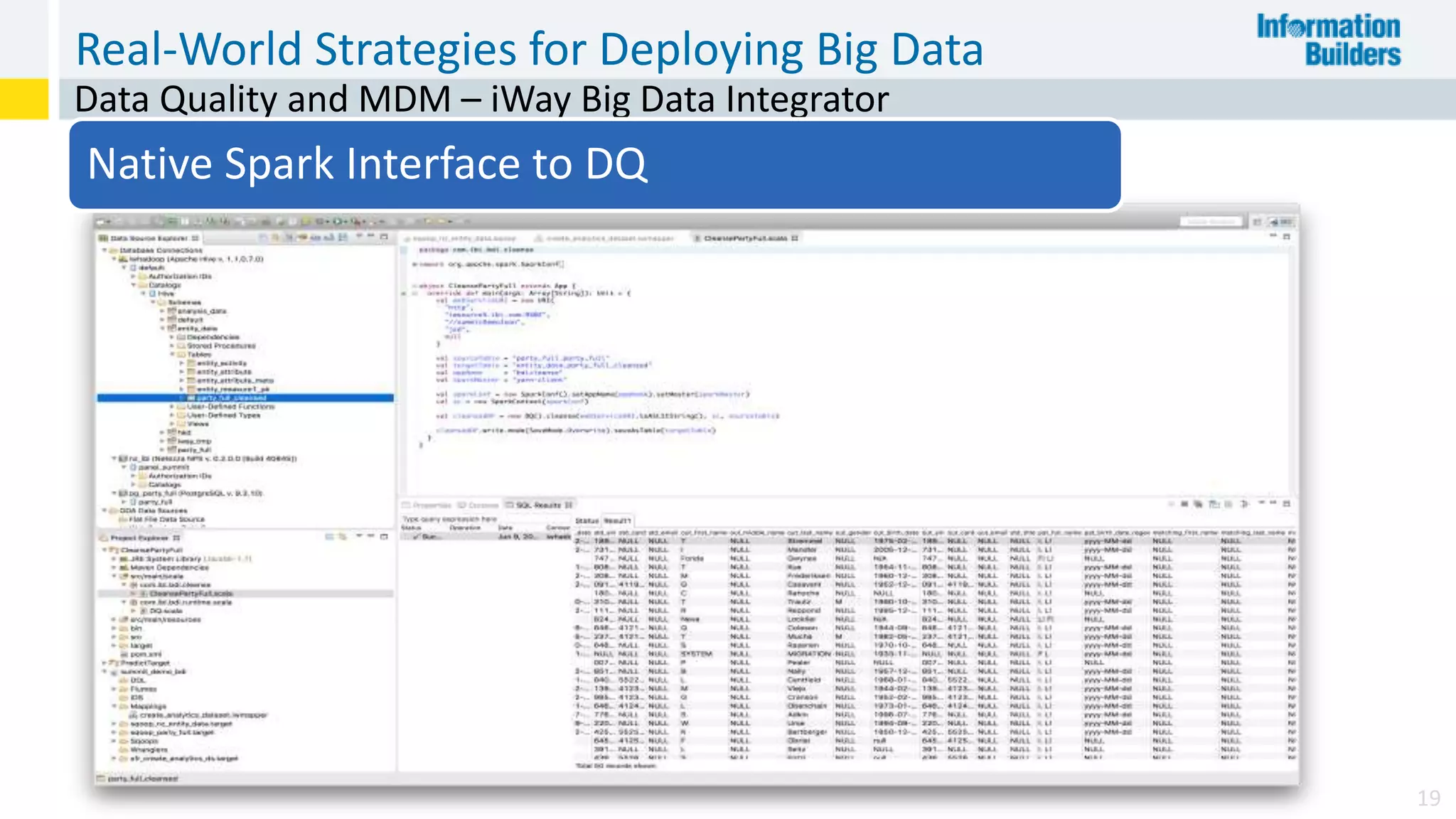

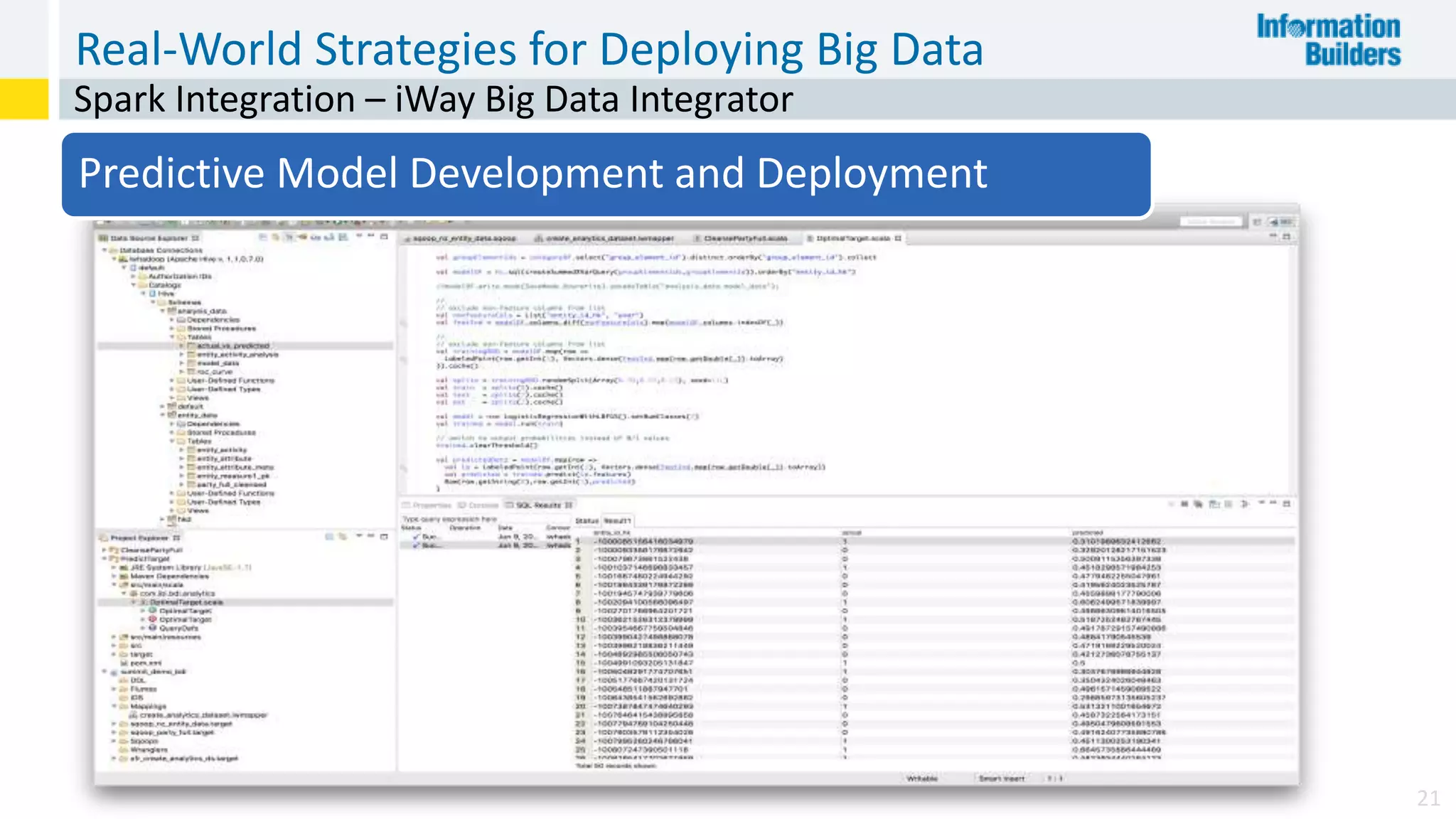

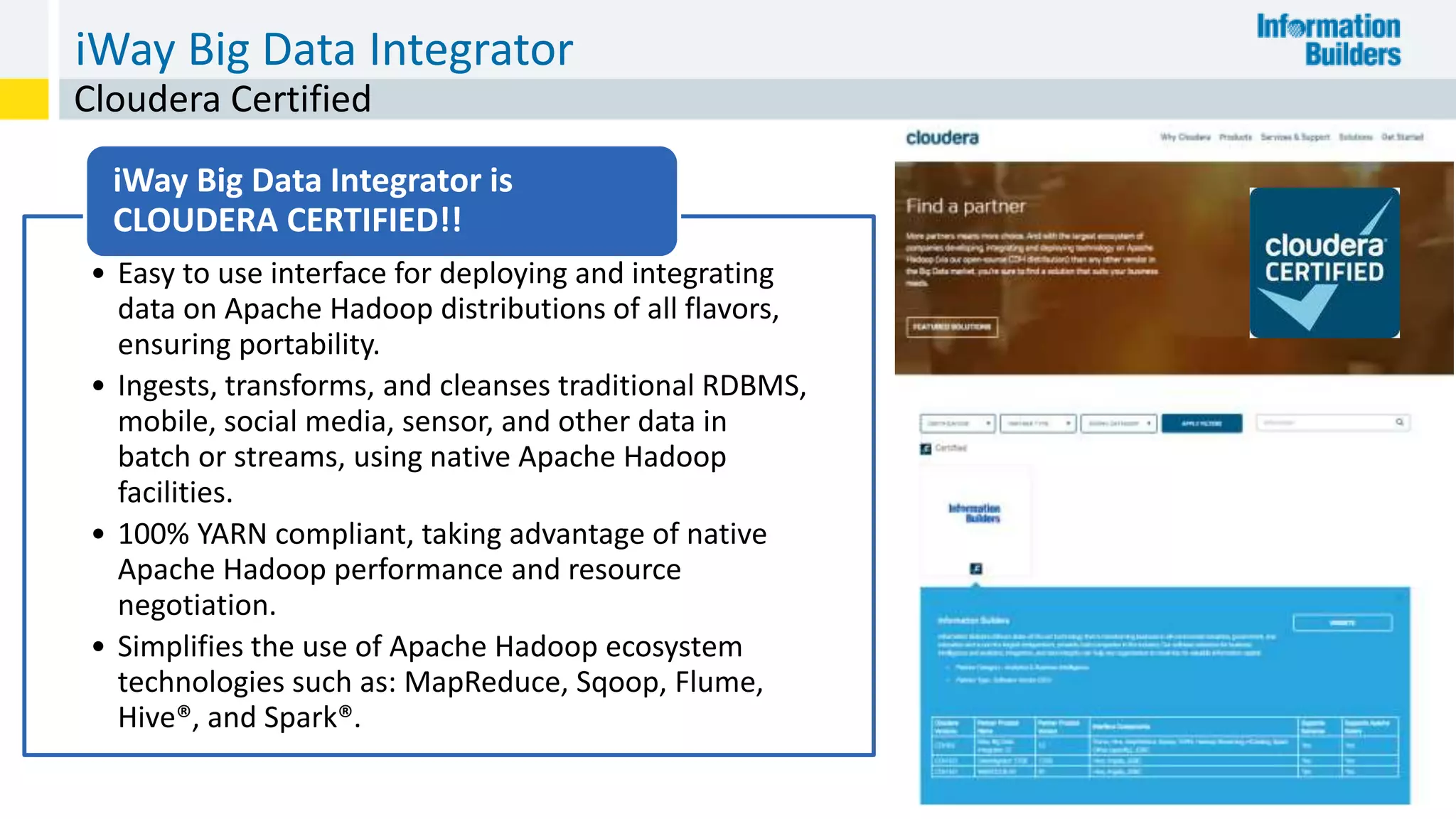

The document discusses the benefits of Apache Hadoop for big data integration, highlighting its low-cost capabilities for data loading, transformation, and archiving. It outlines the industry's shift from traditional ETL (extract, transform, load) to ELT (extract, load, transform) processes and addresses challenges such as the skills gap and the difficulty of identifying value in deployed Hadoop systems. Finally, it showcases the features of the iWay Big Data Integrator, emphasizing its user-friendly interface and its integration with various data sources and formats.