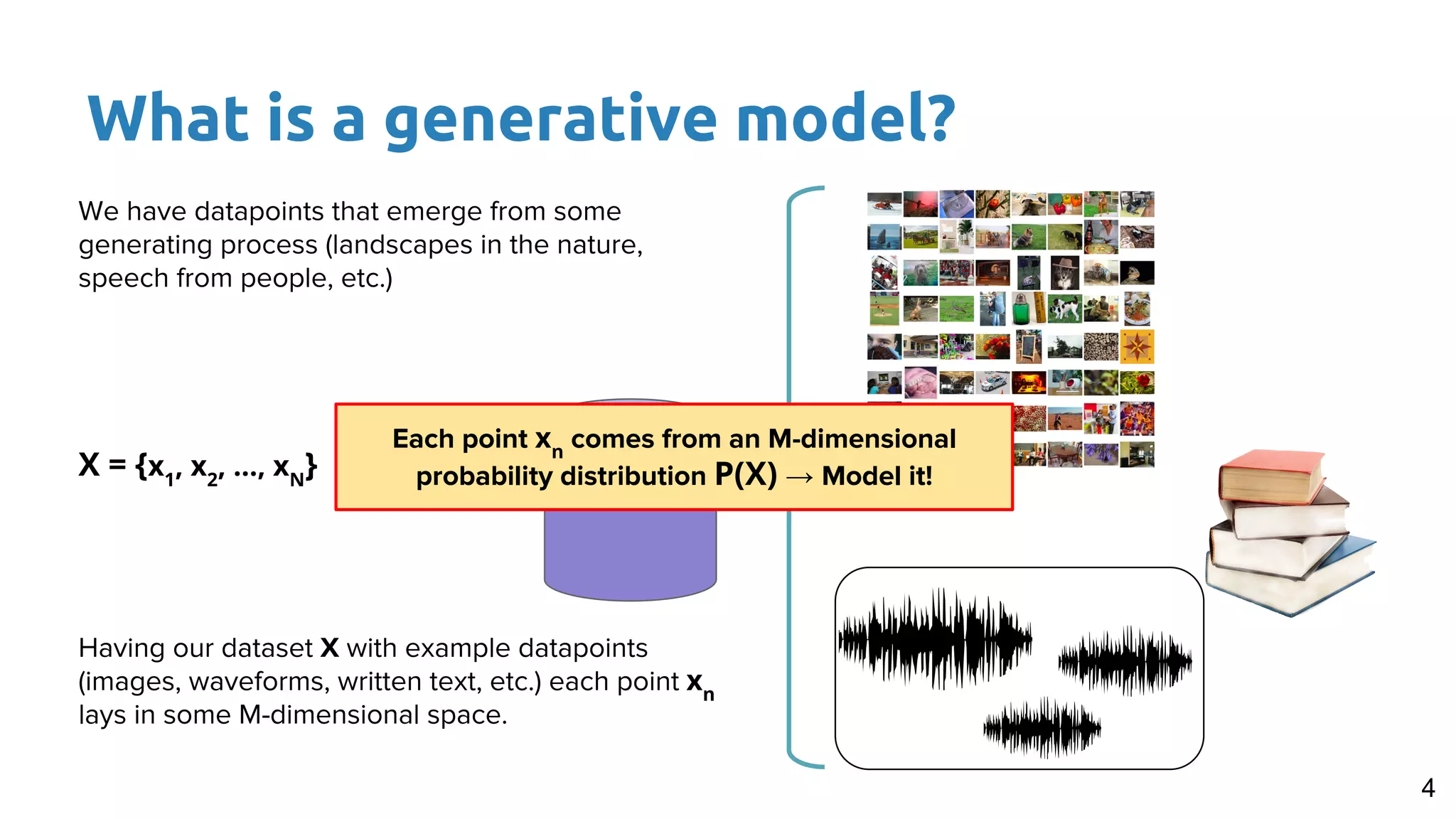

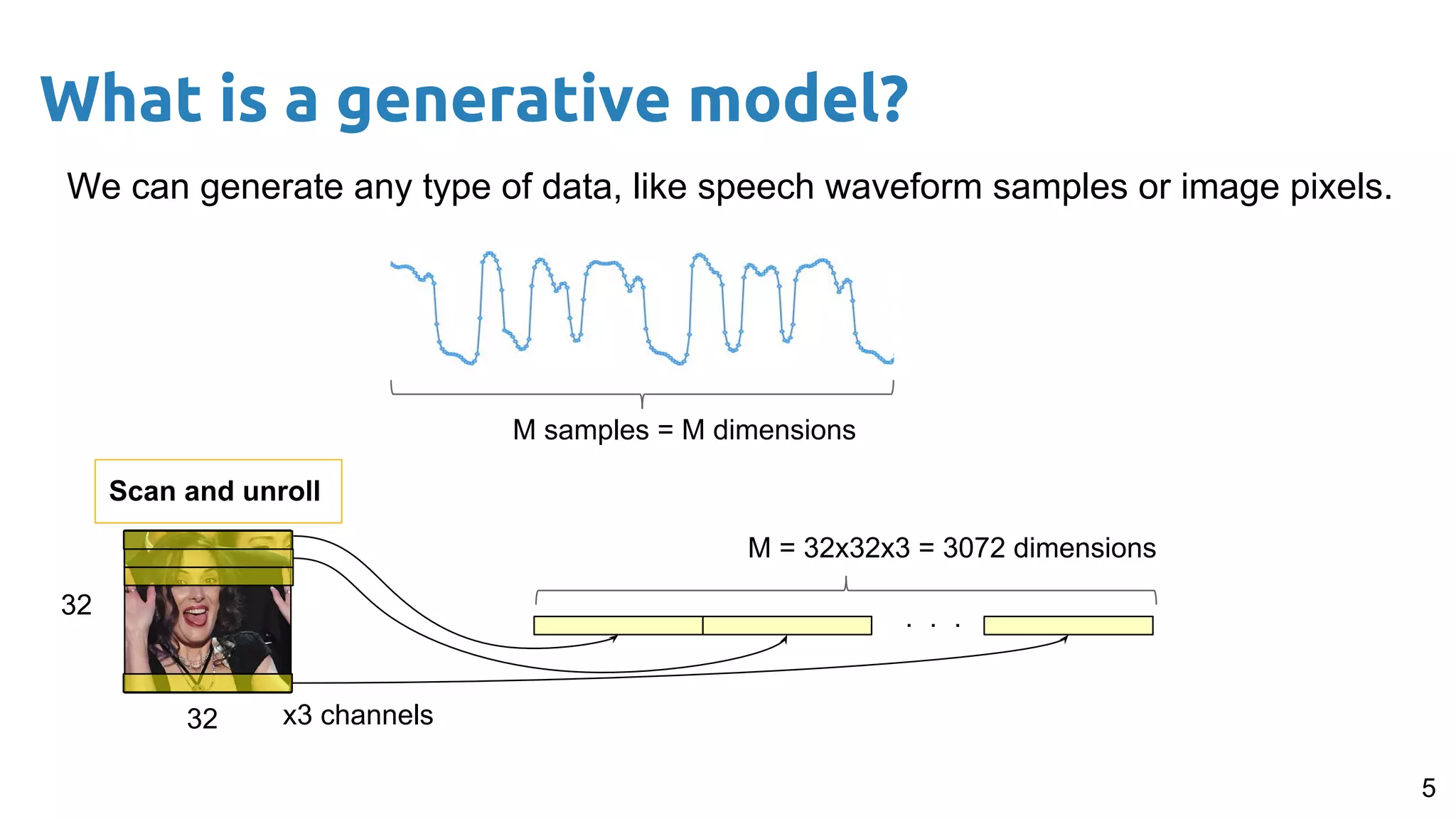

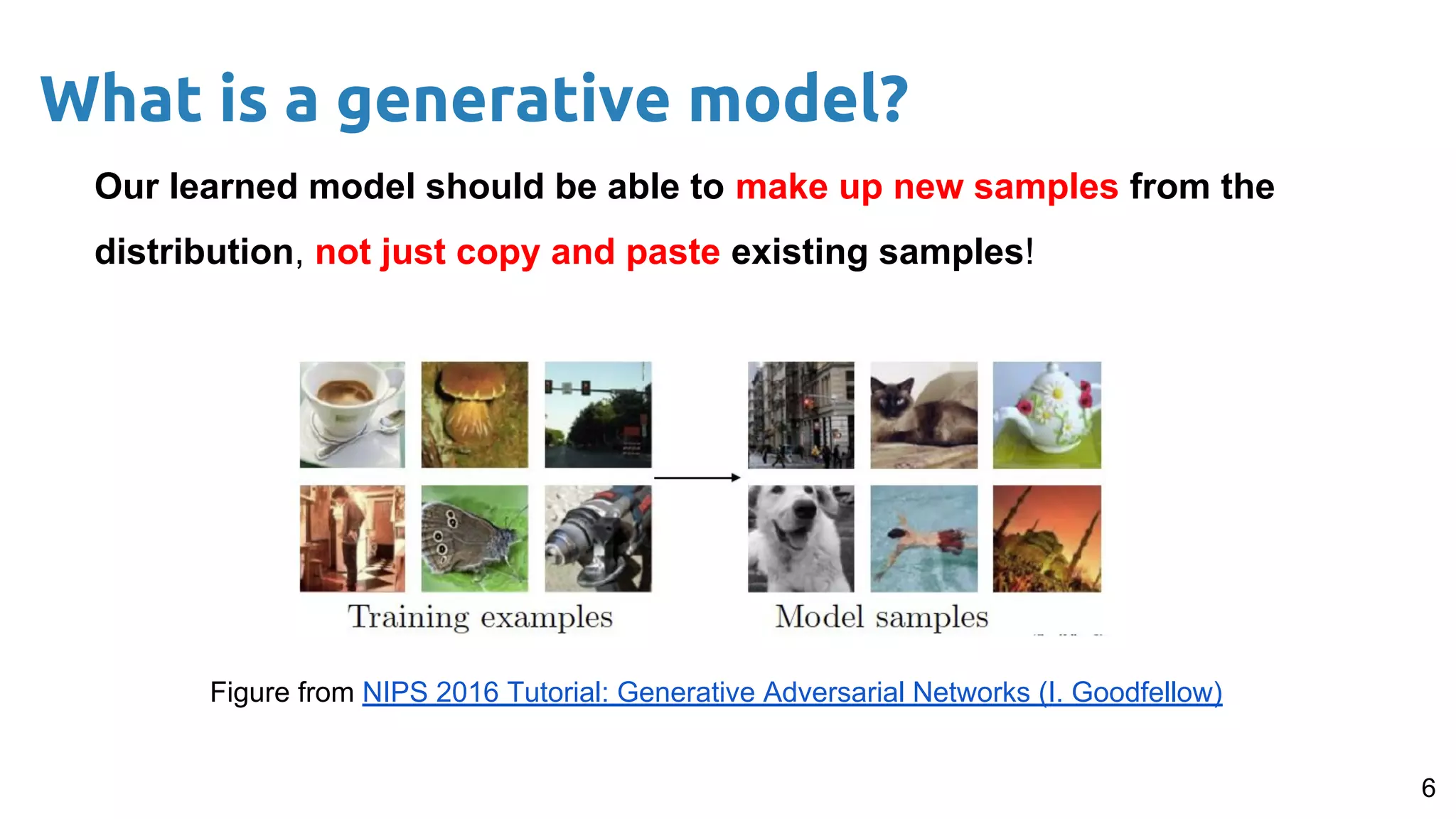

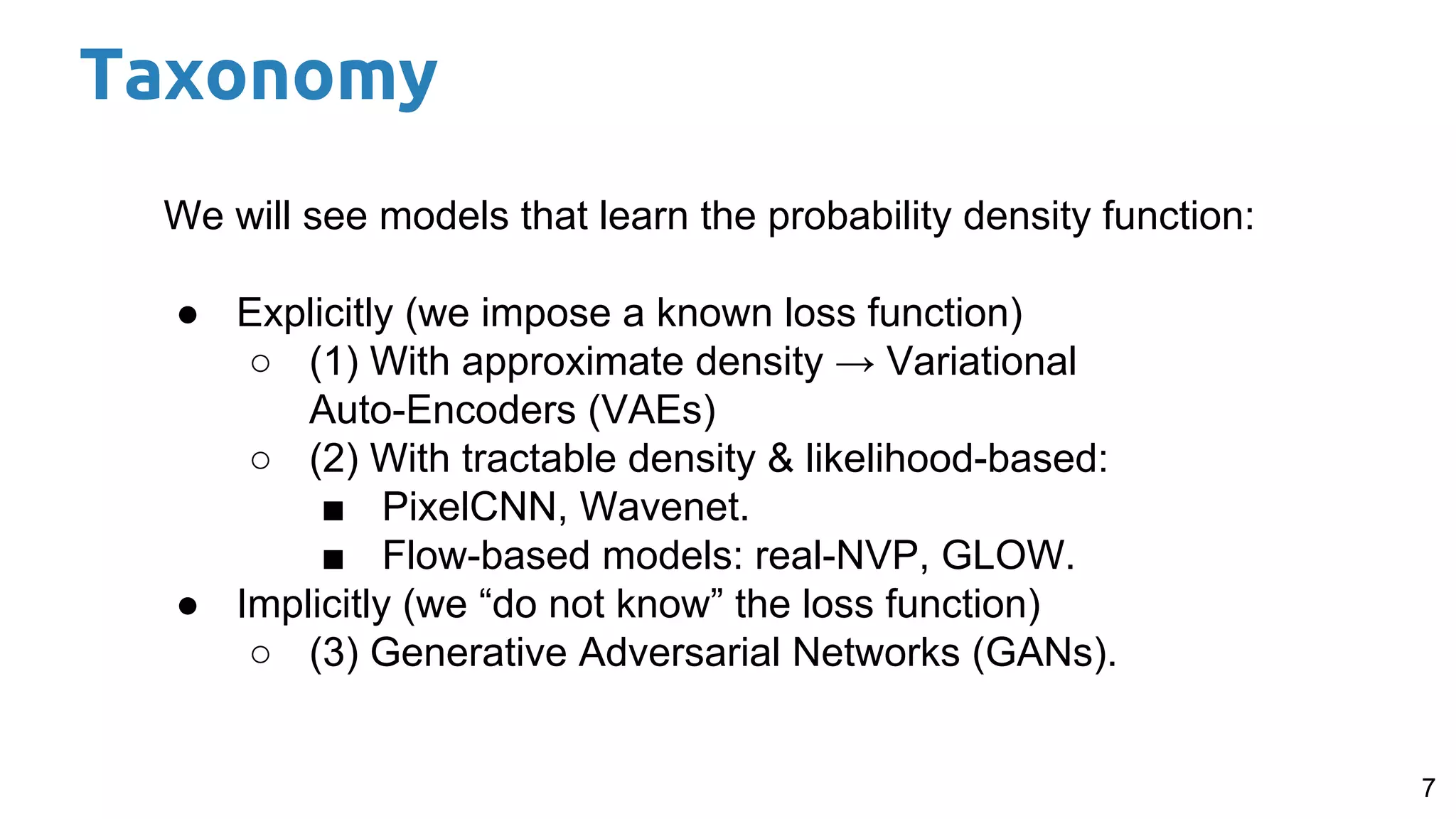

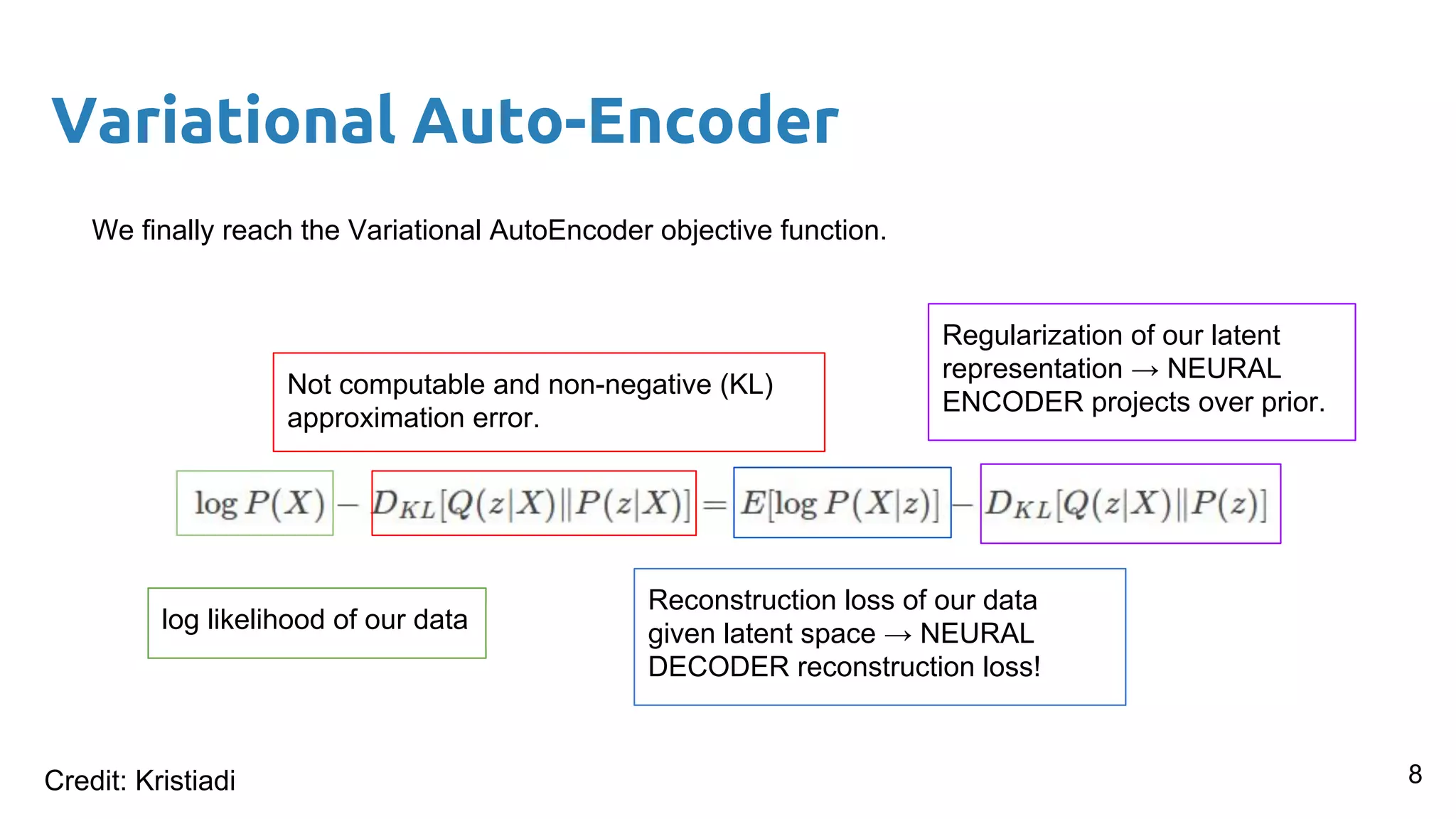

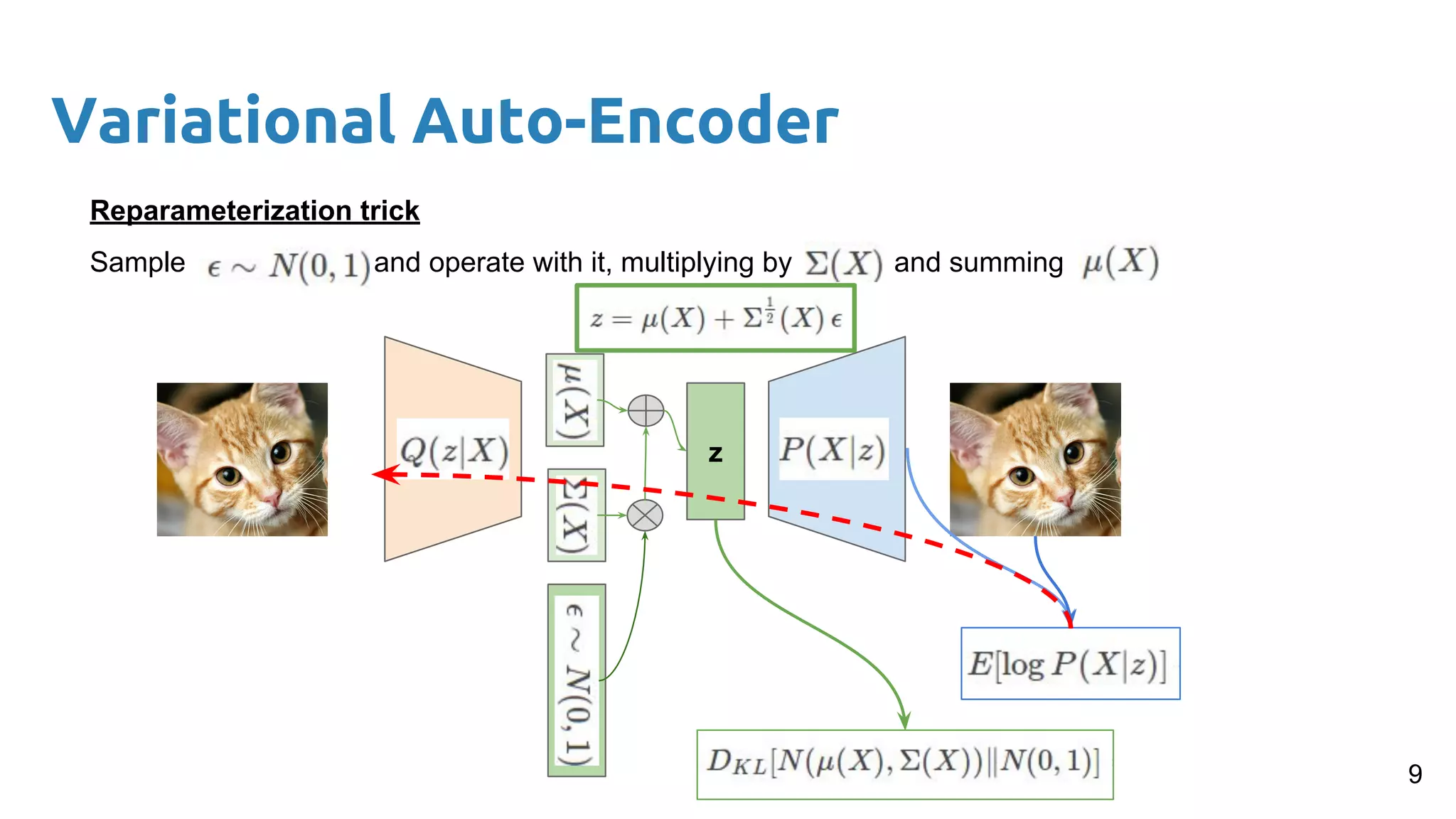

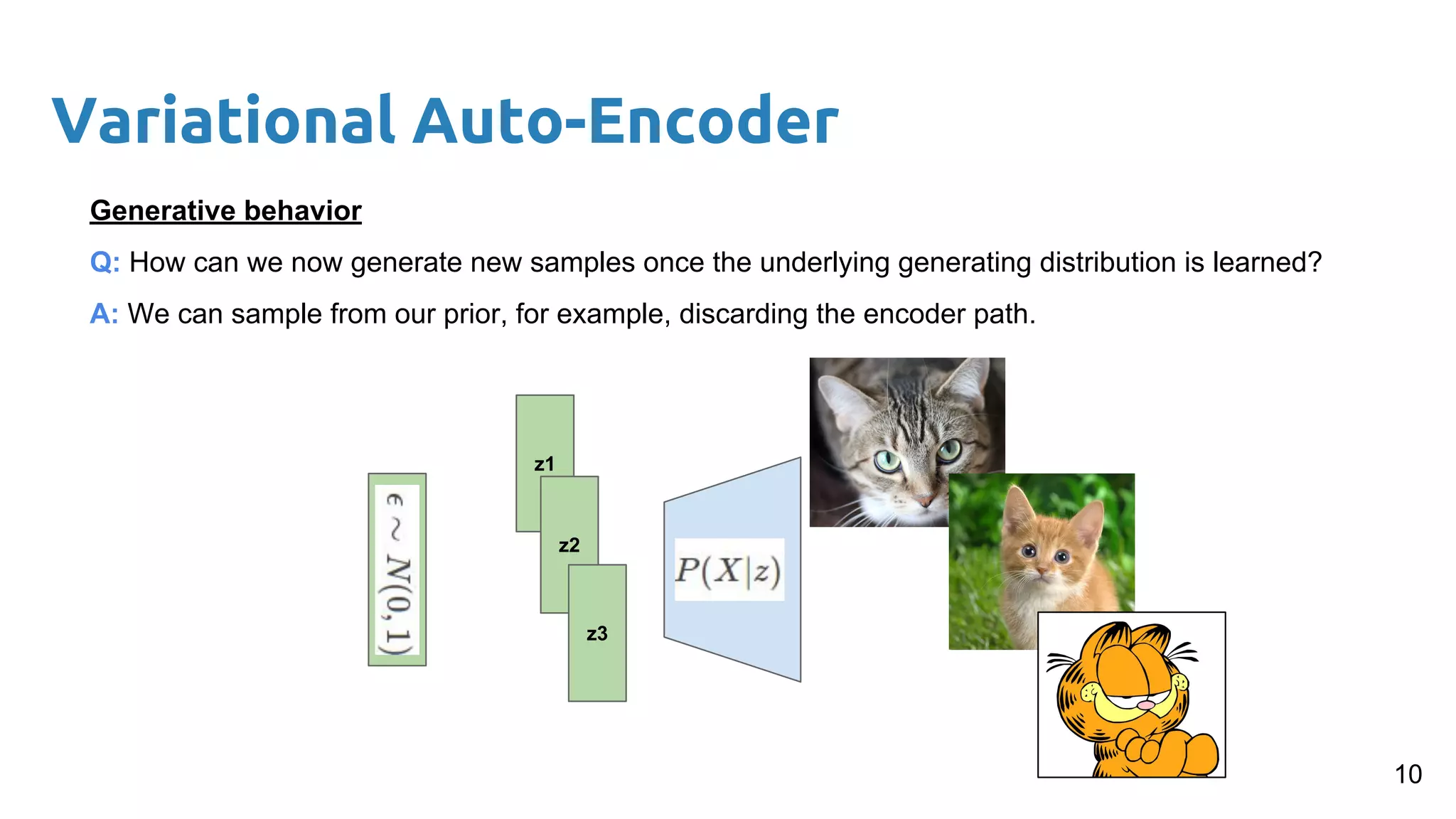

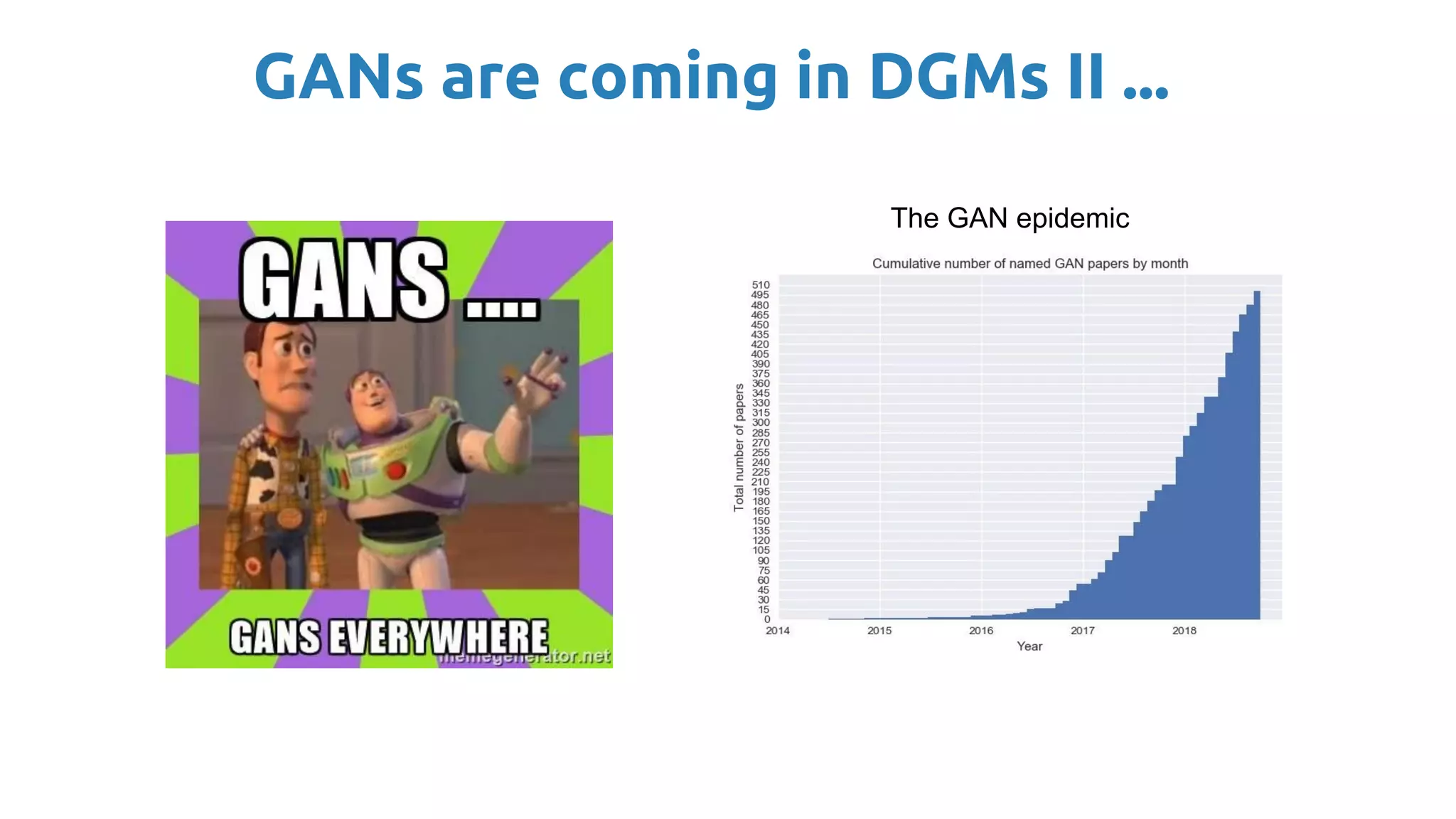

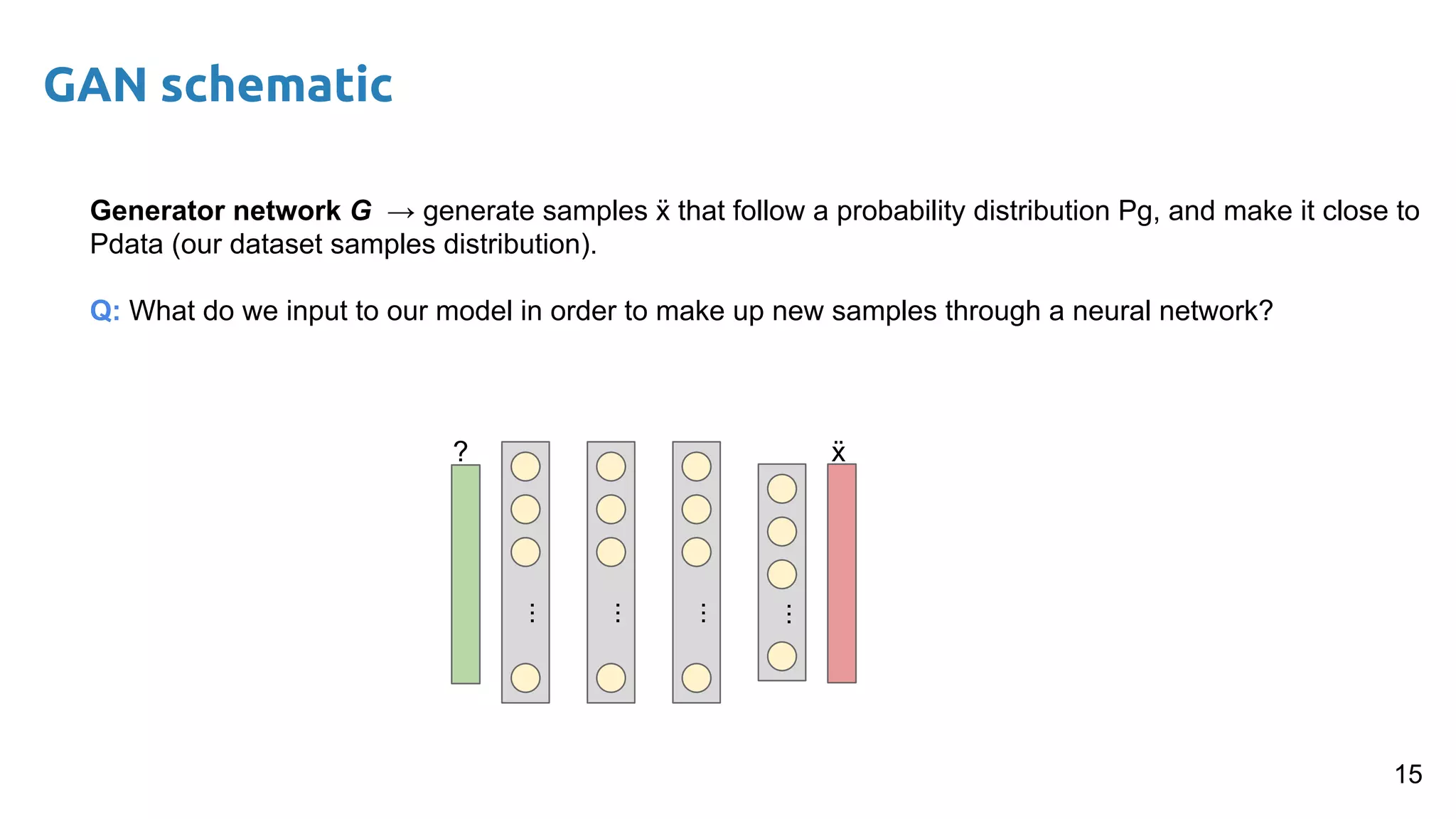

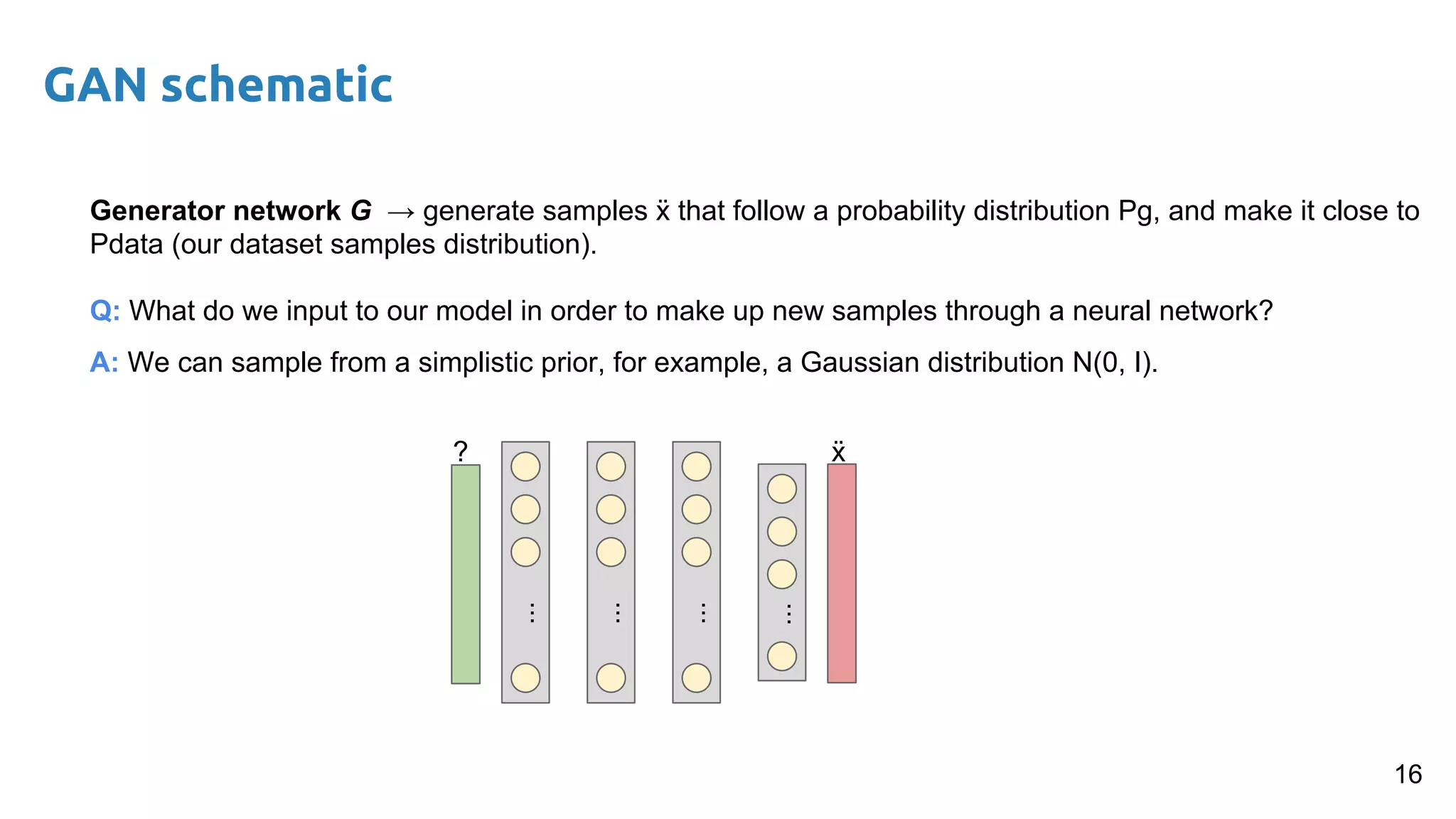

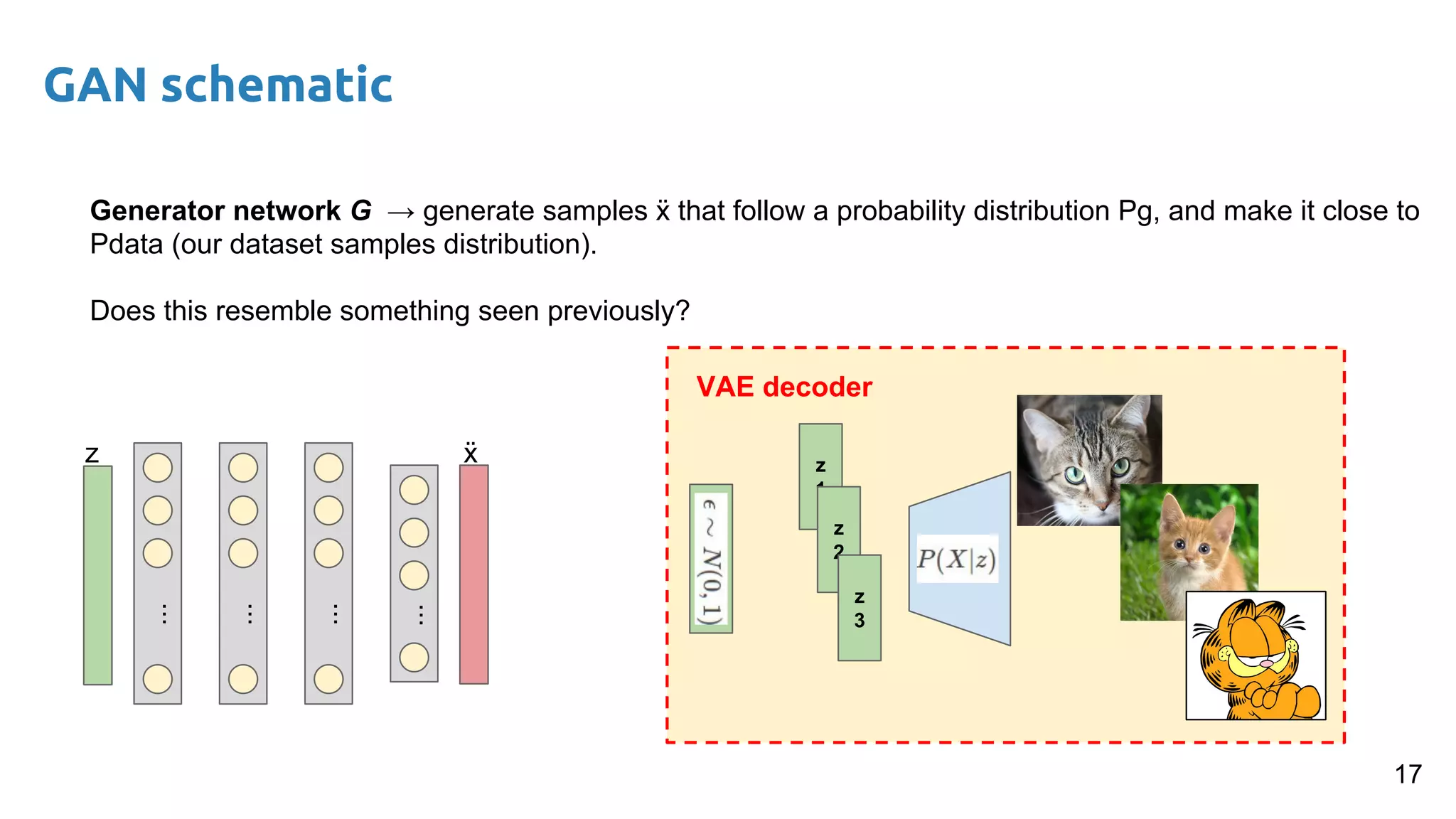

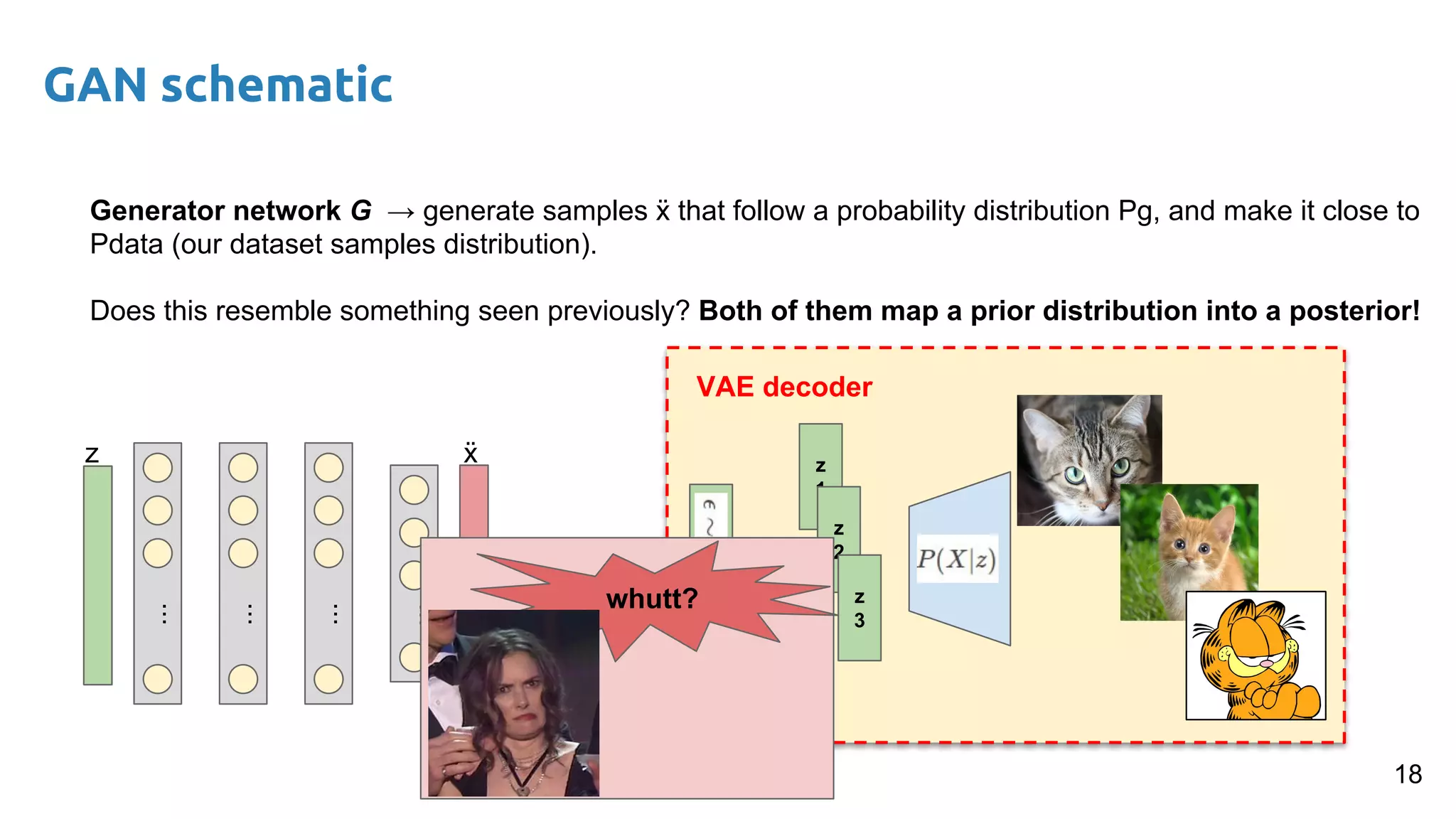

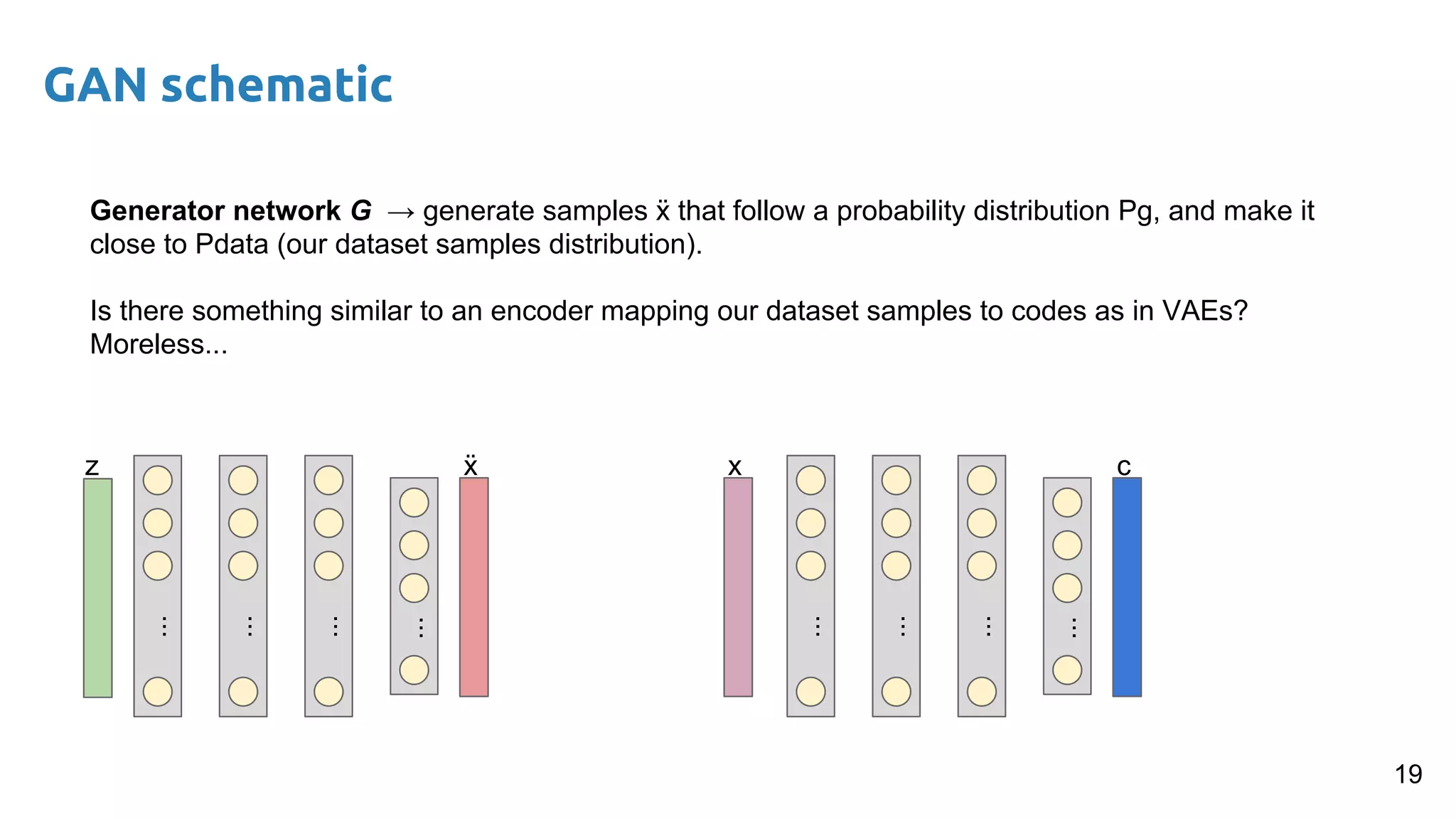

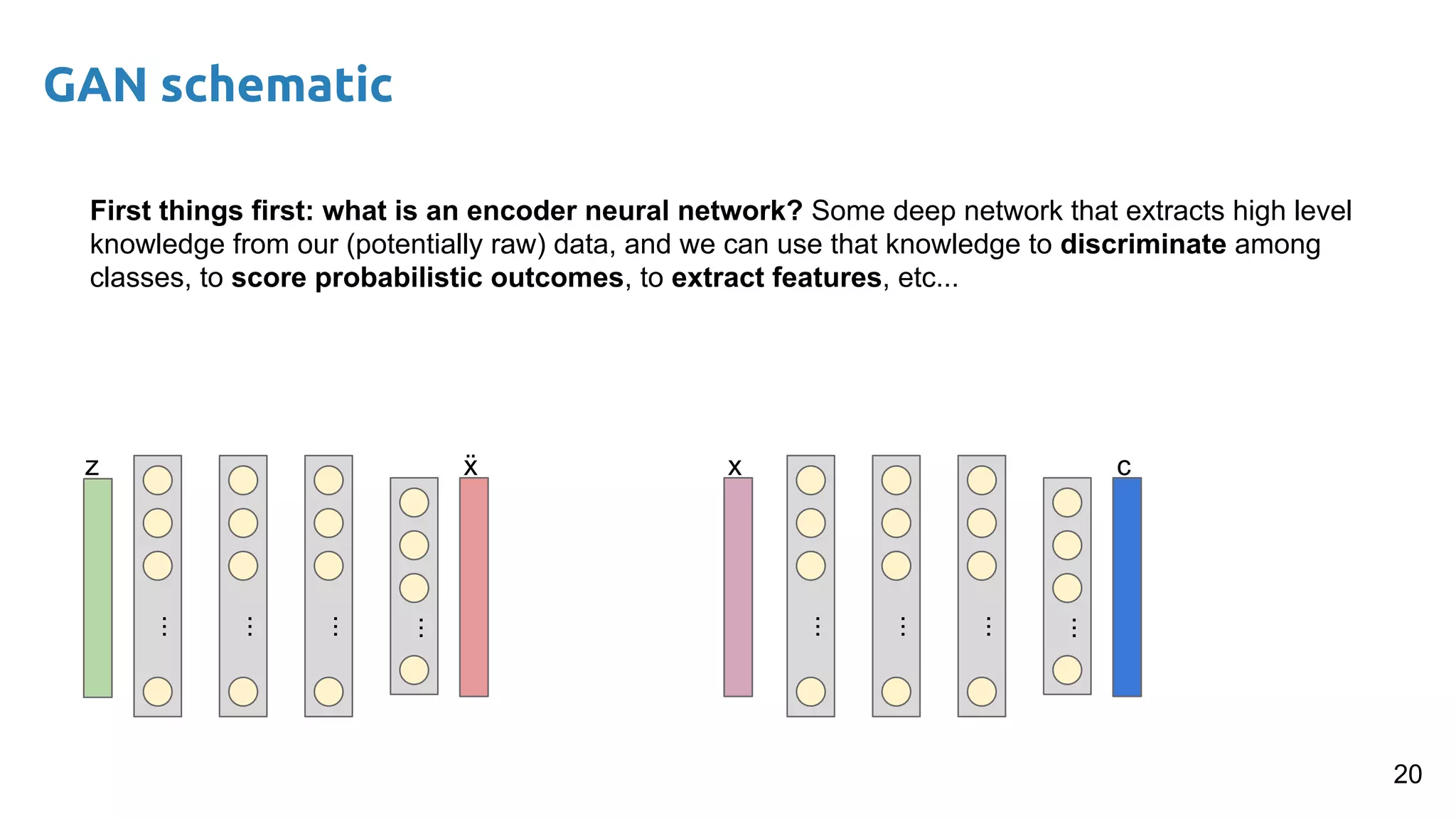

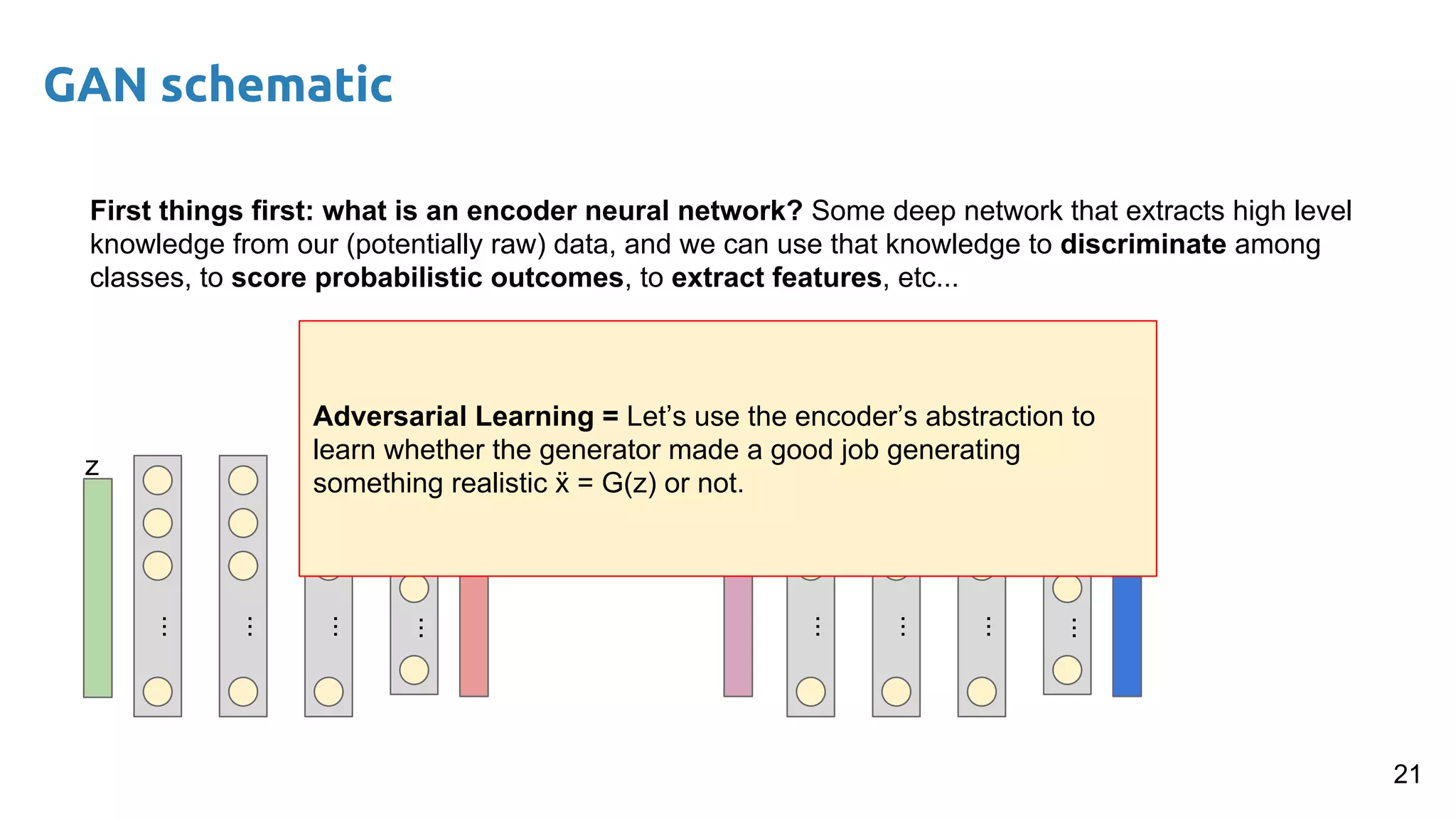

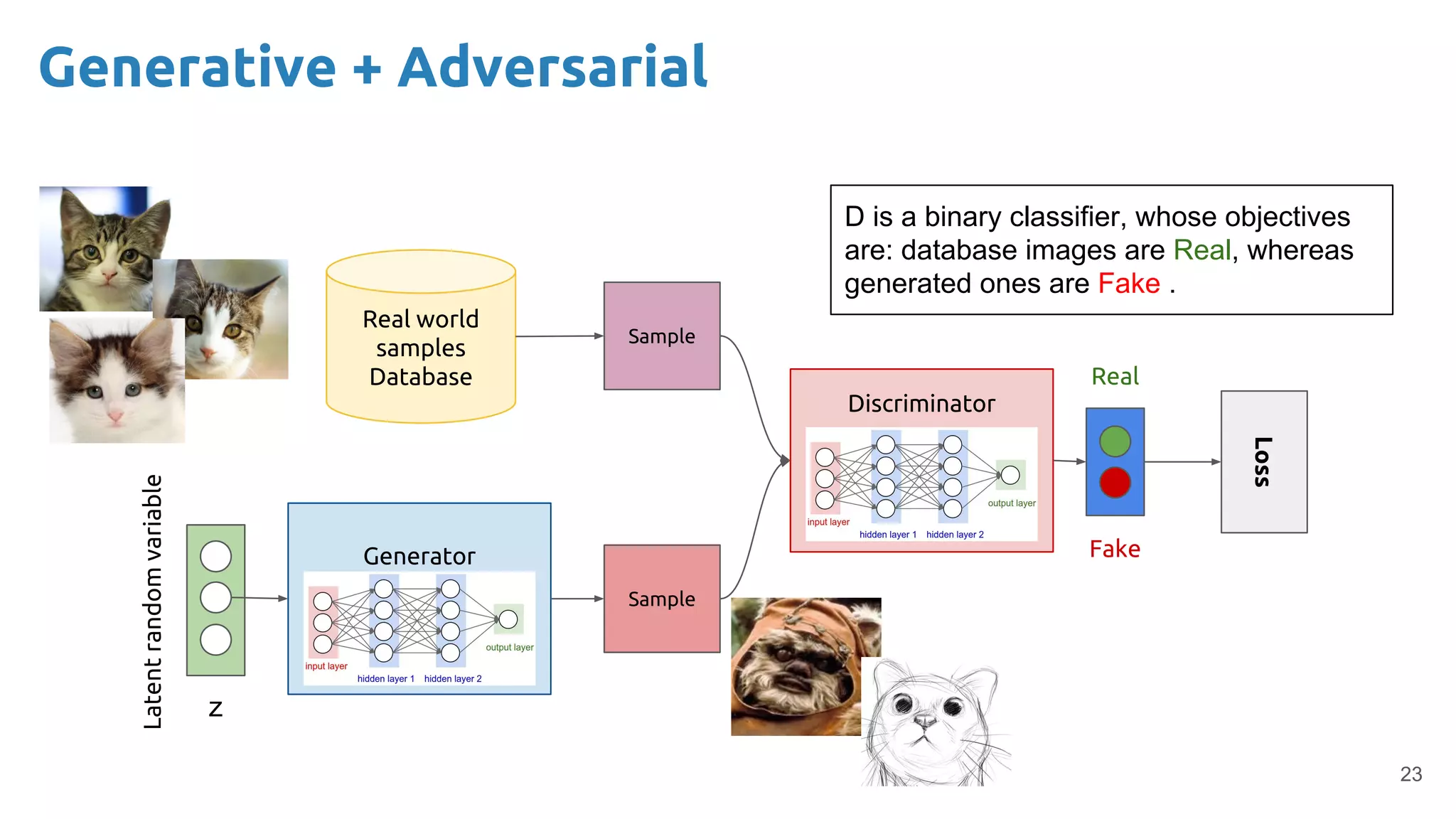

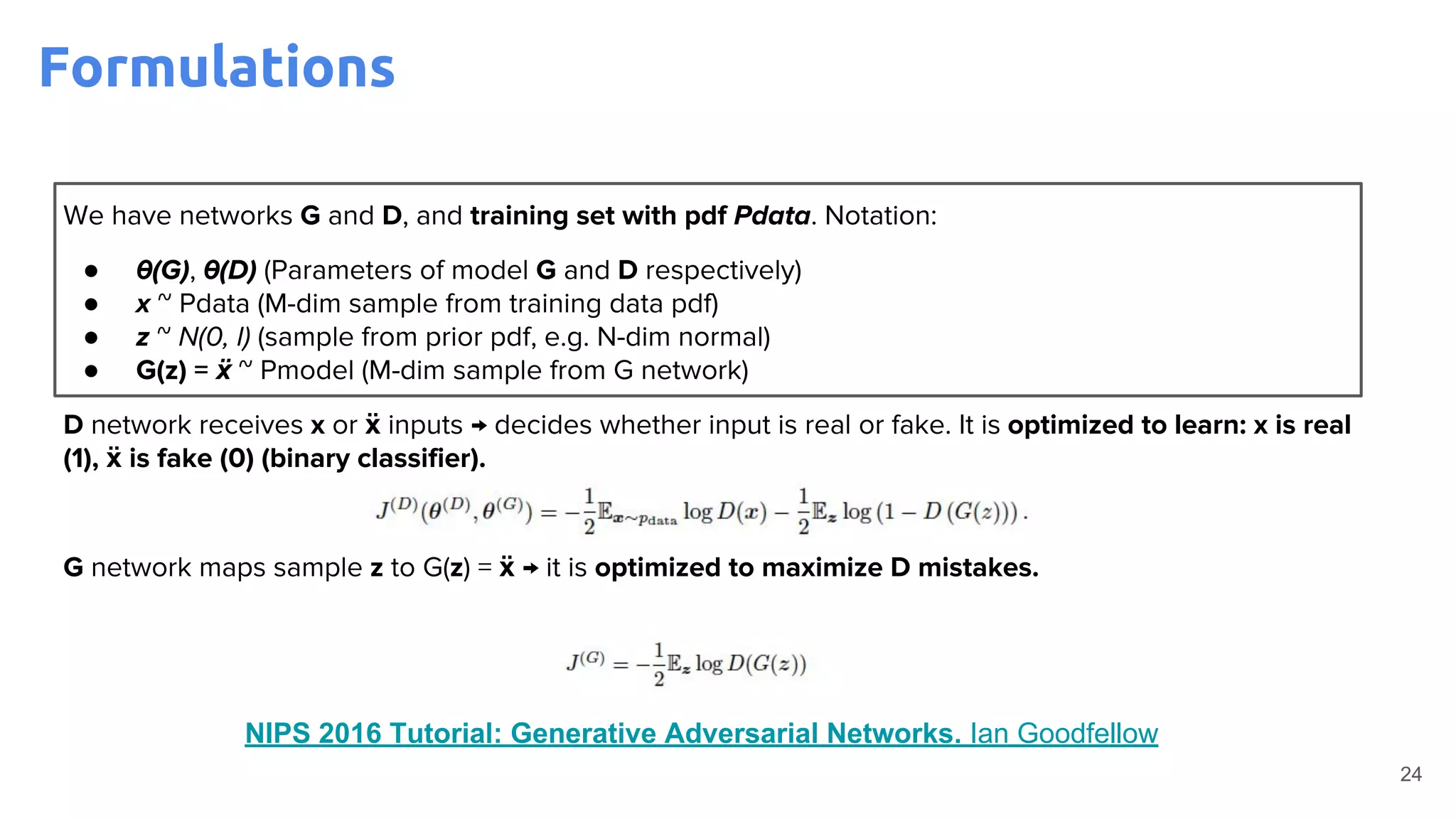

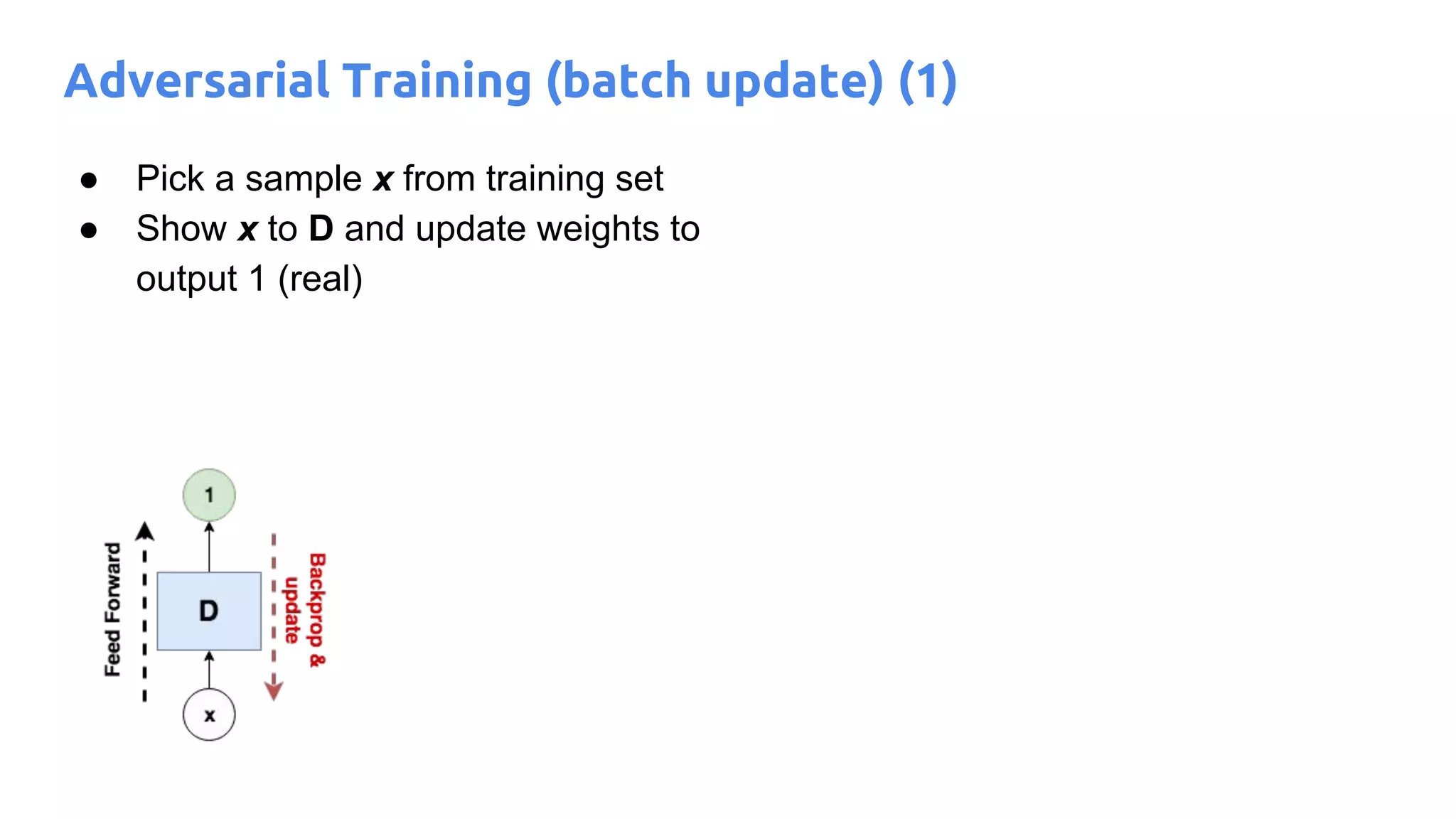

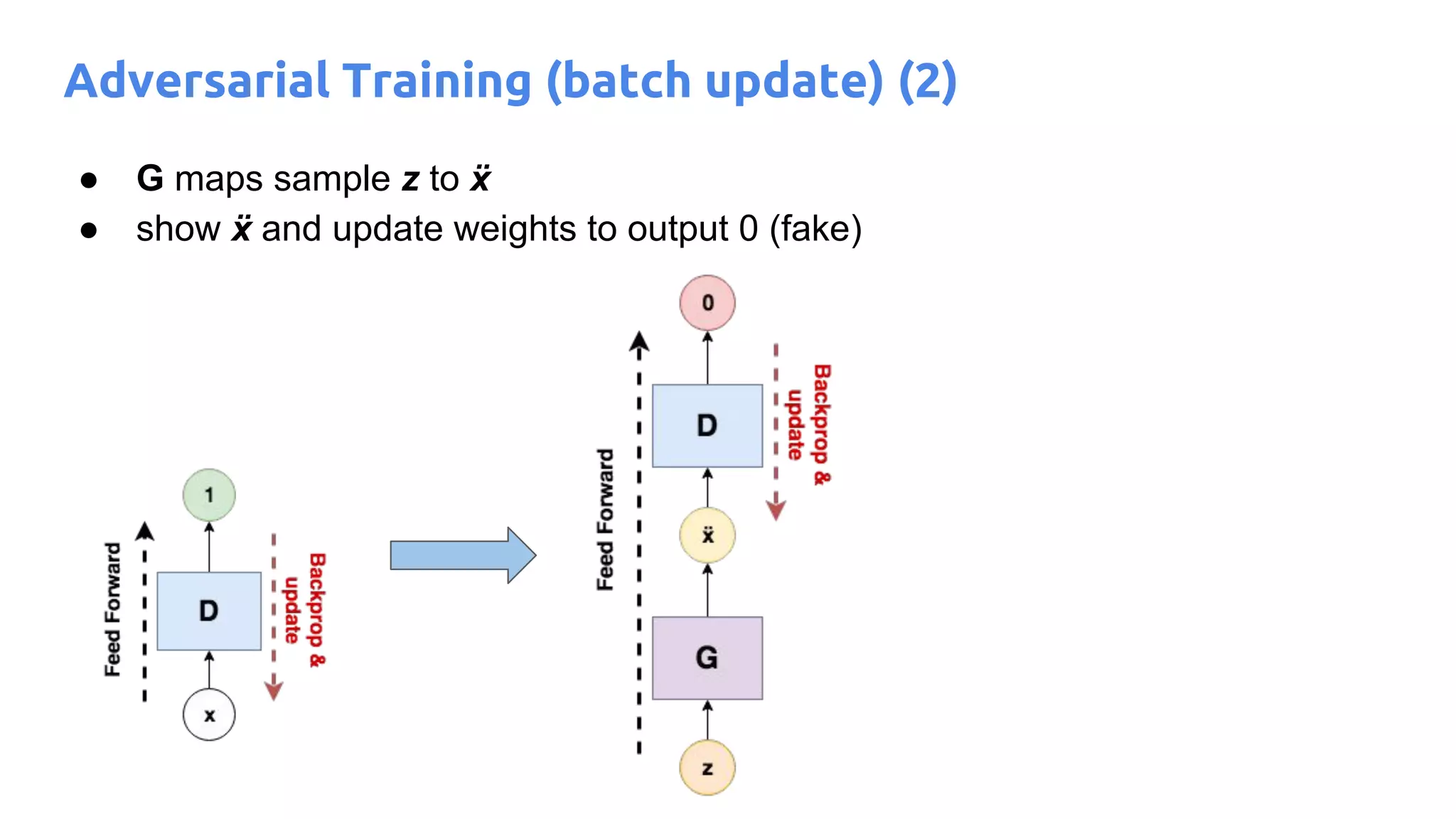

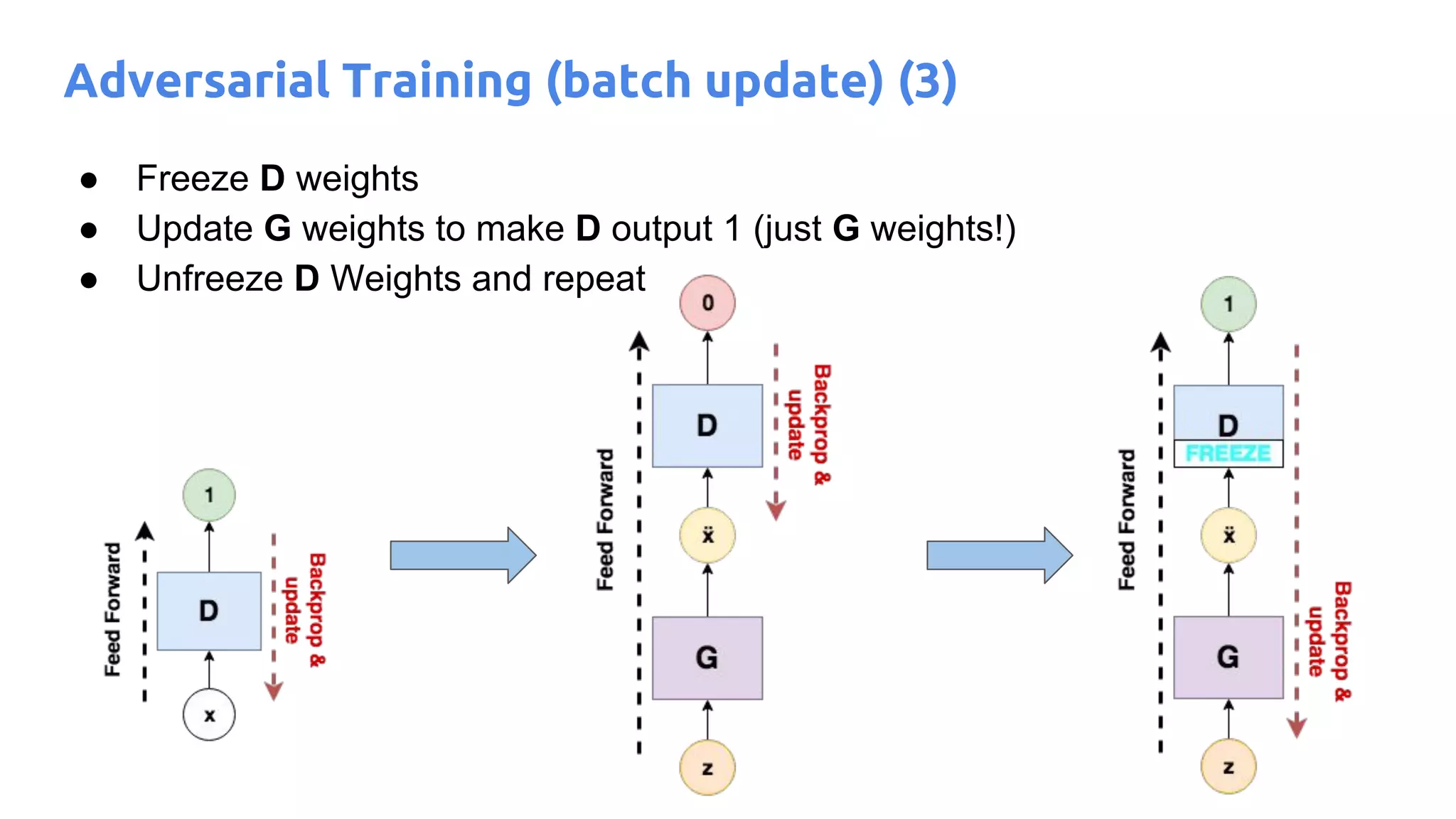

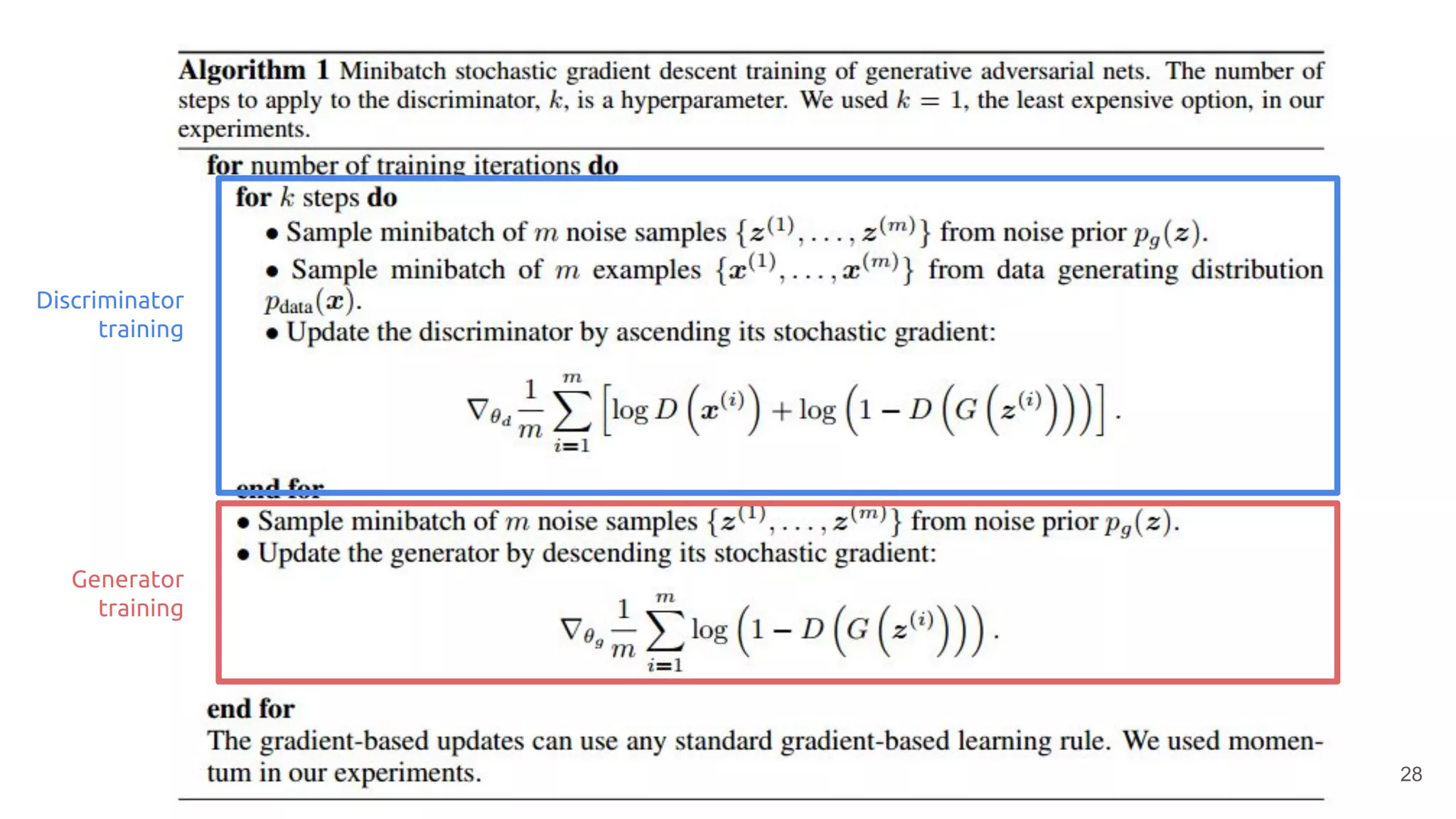

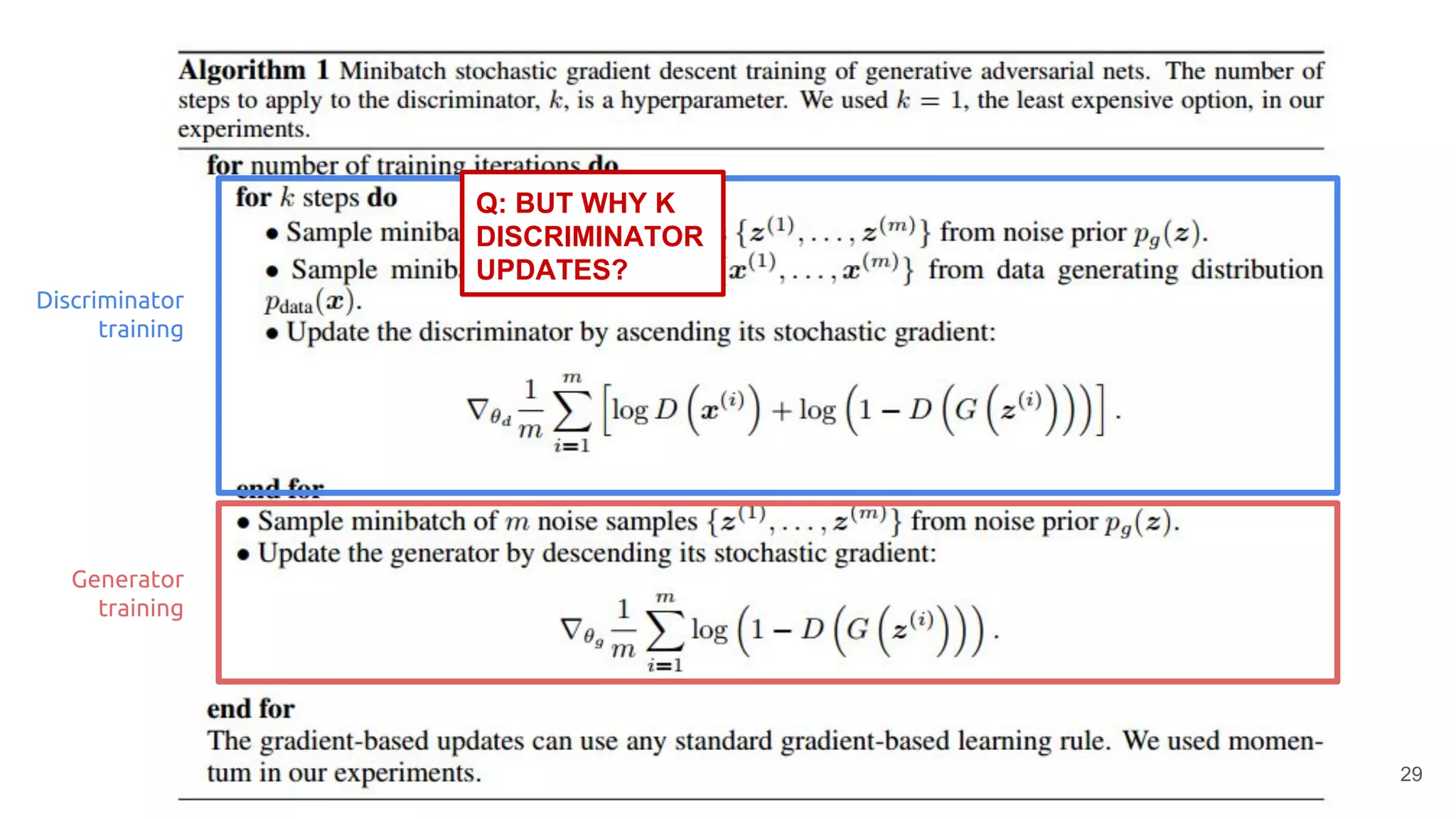

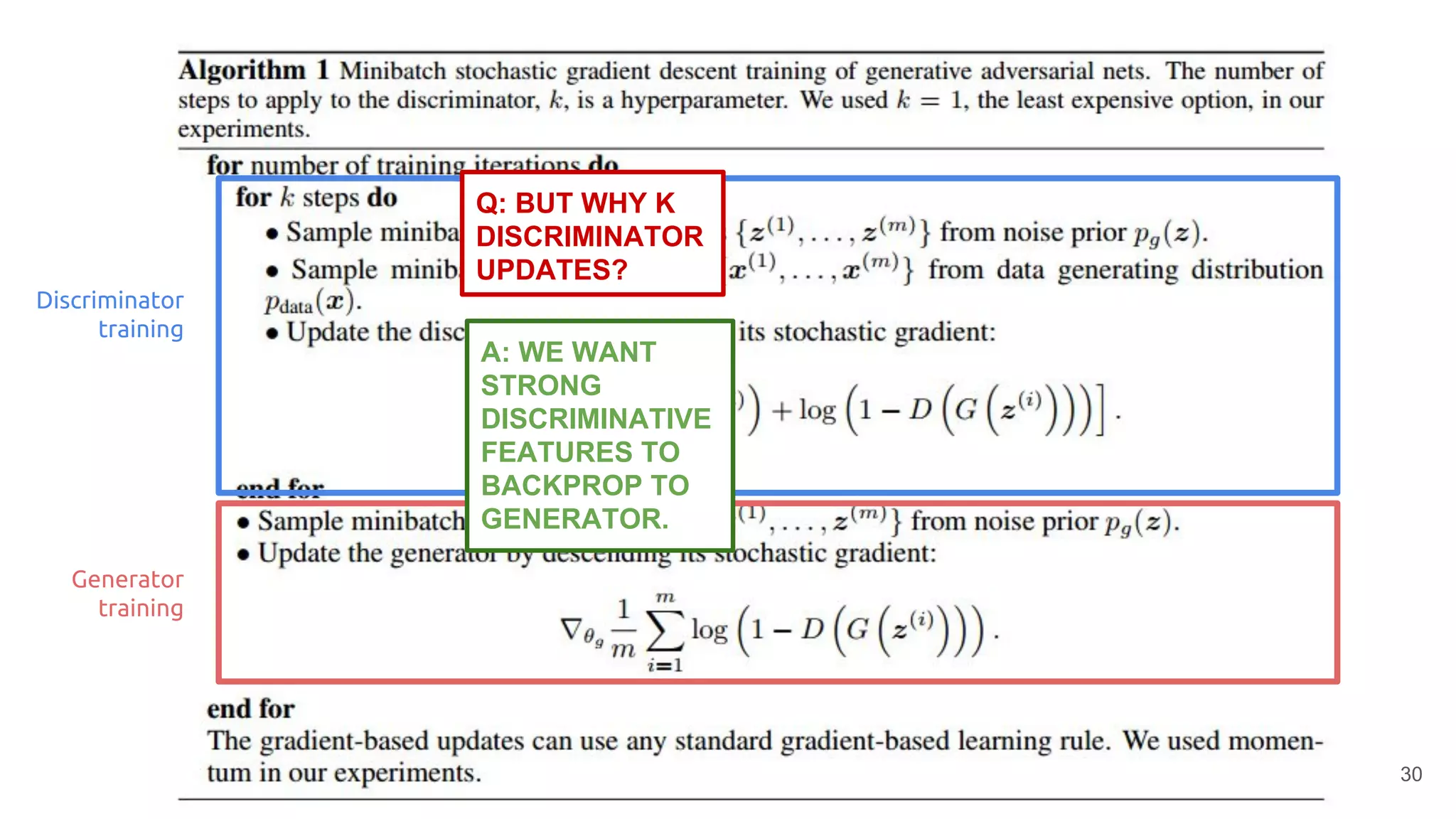

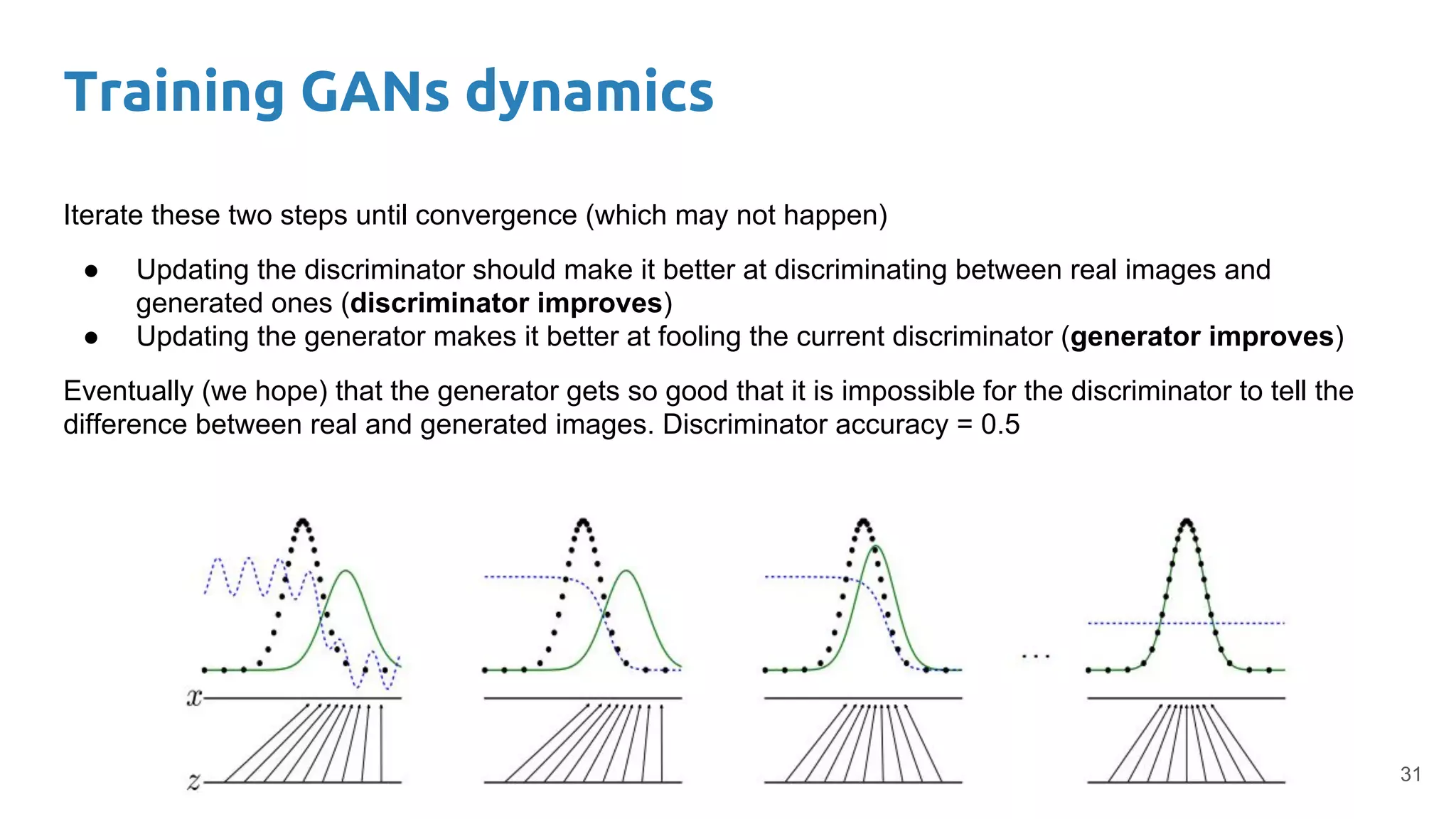

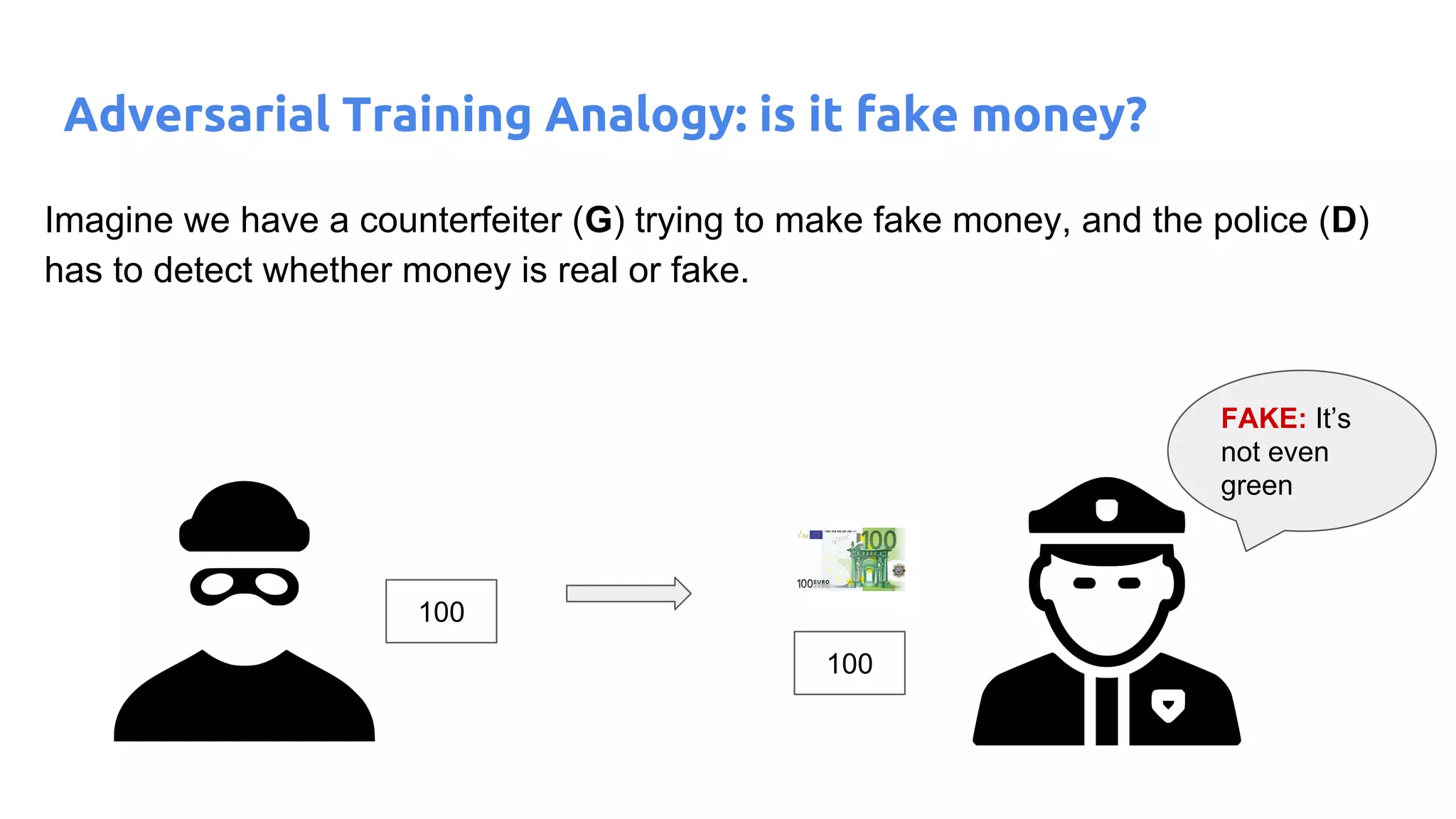

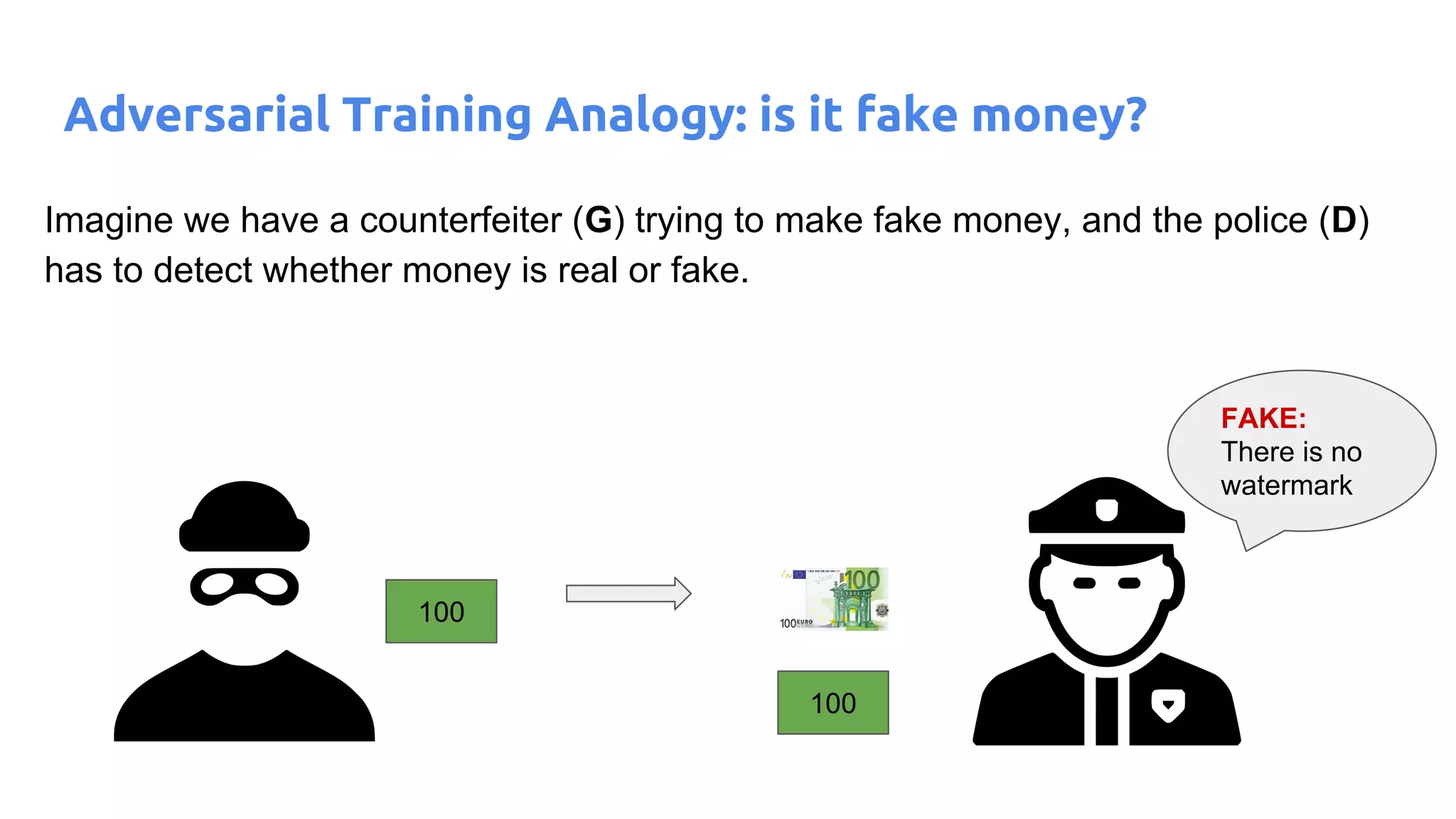

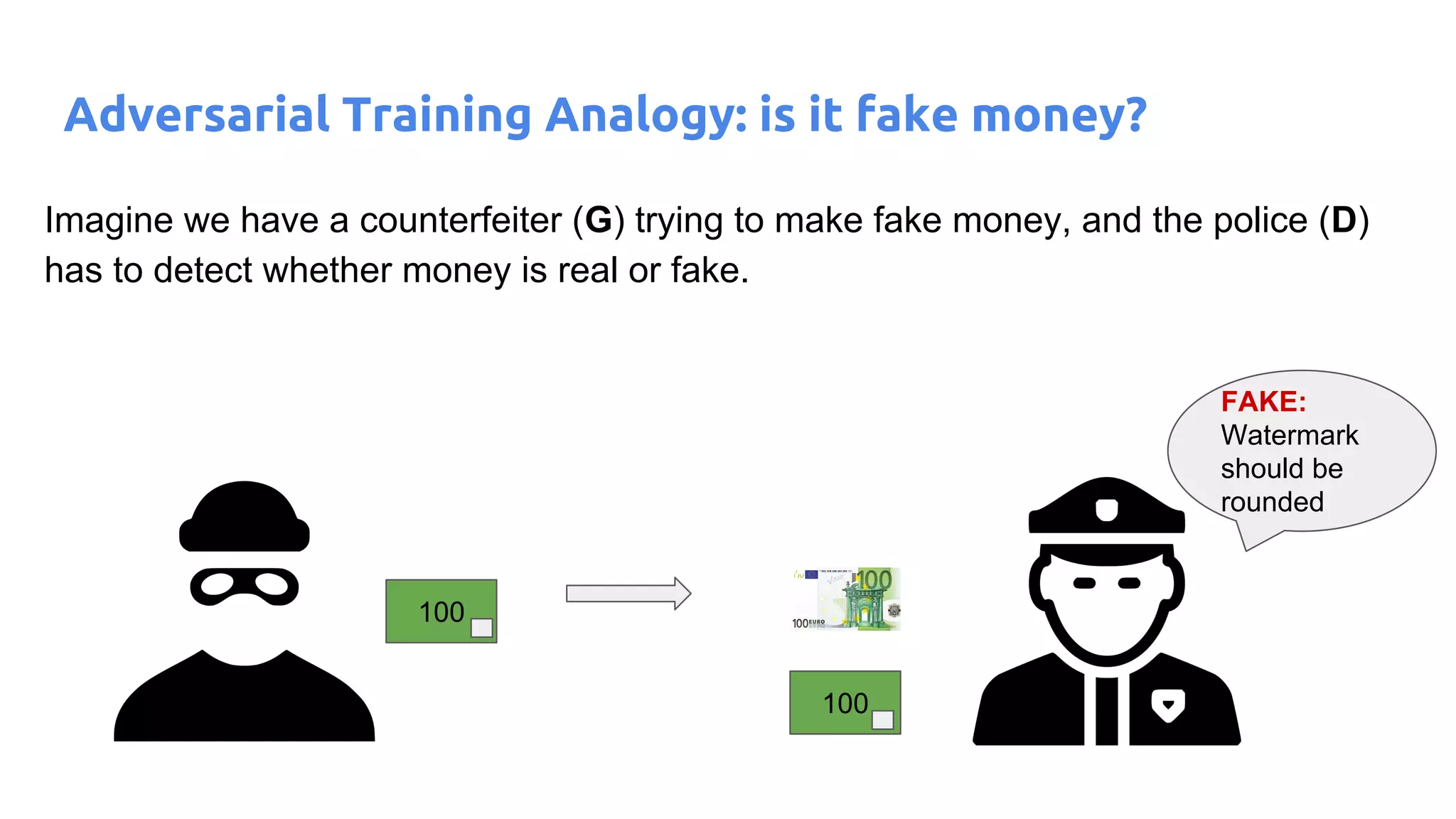

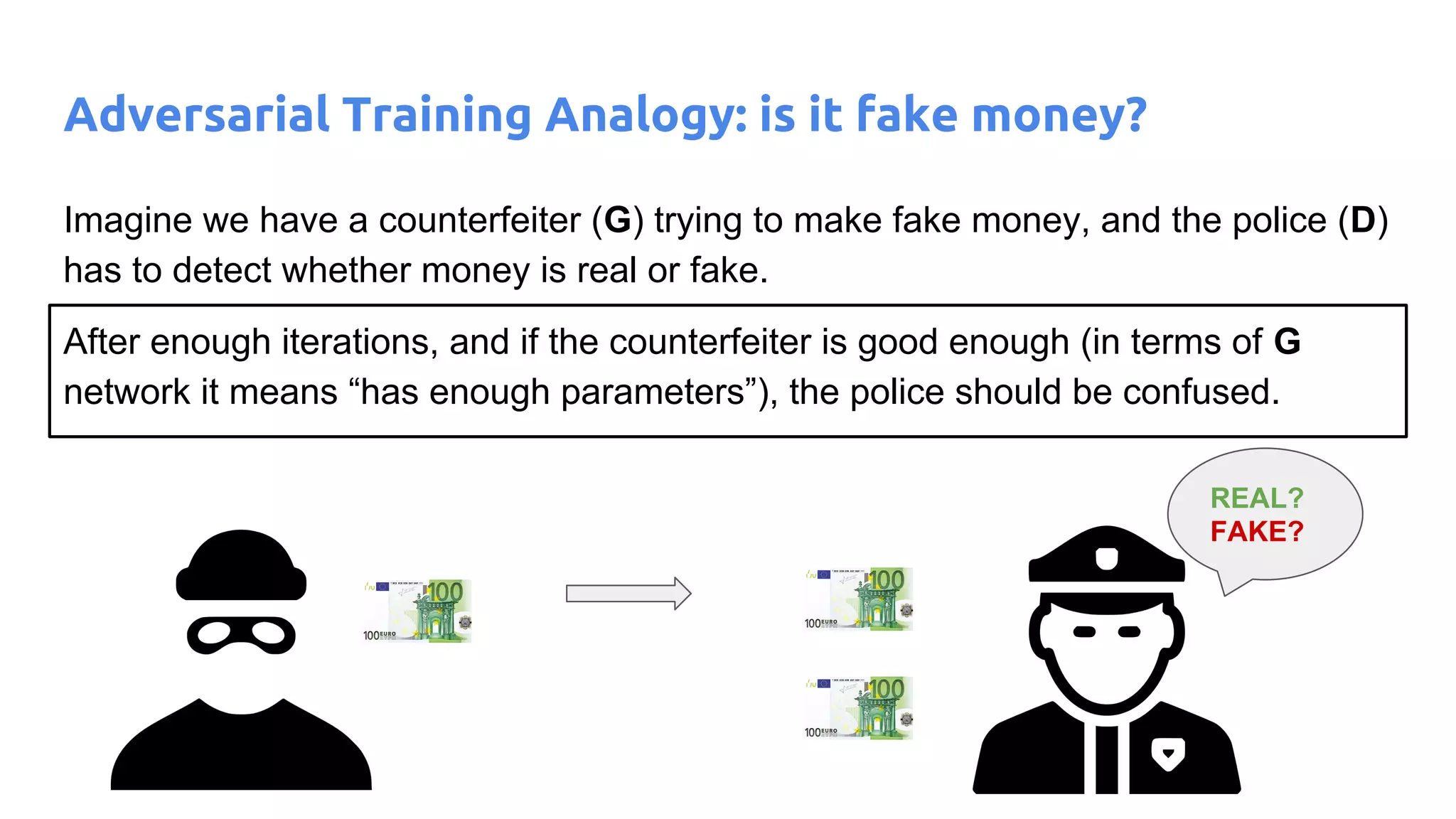

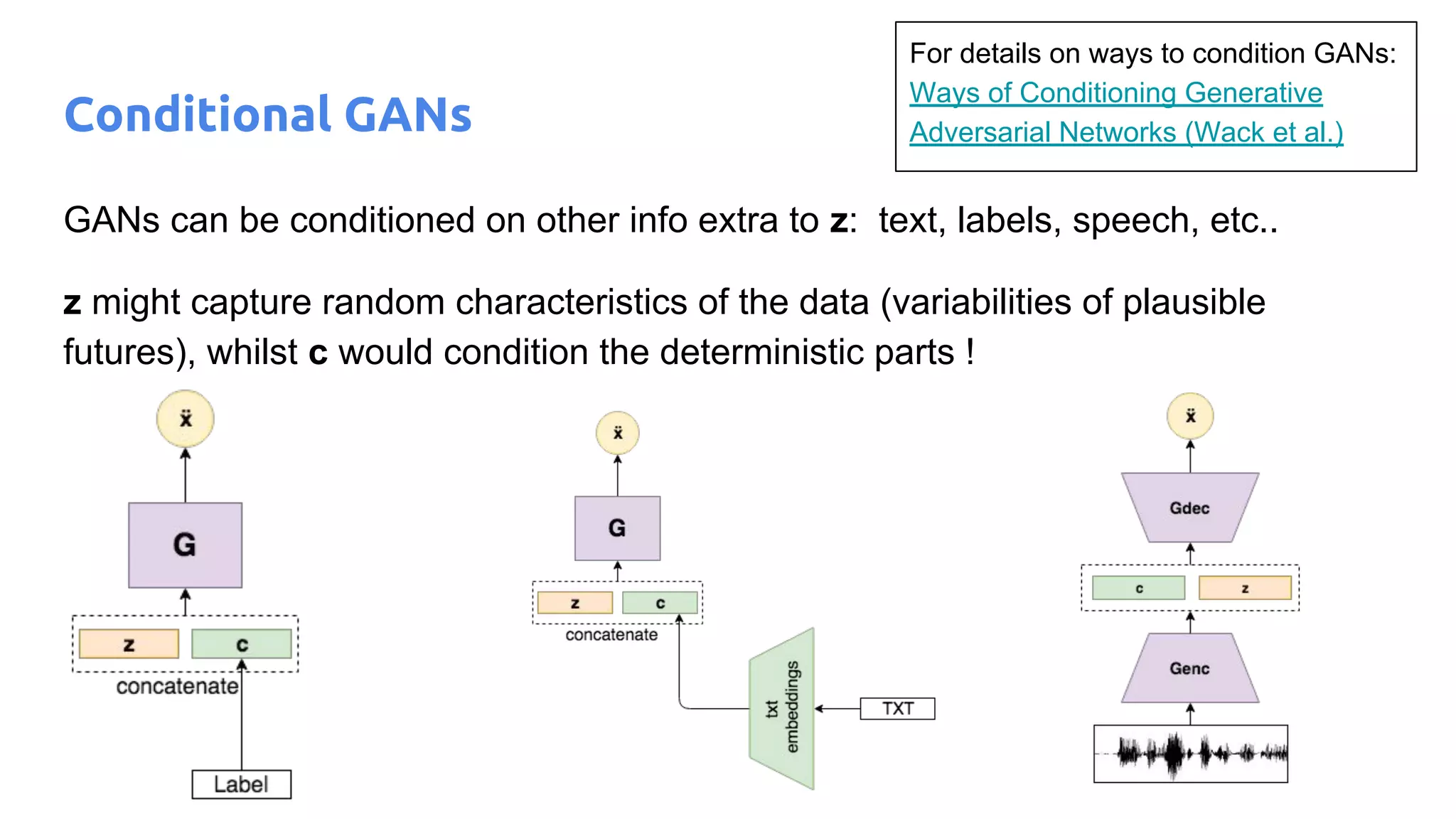

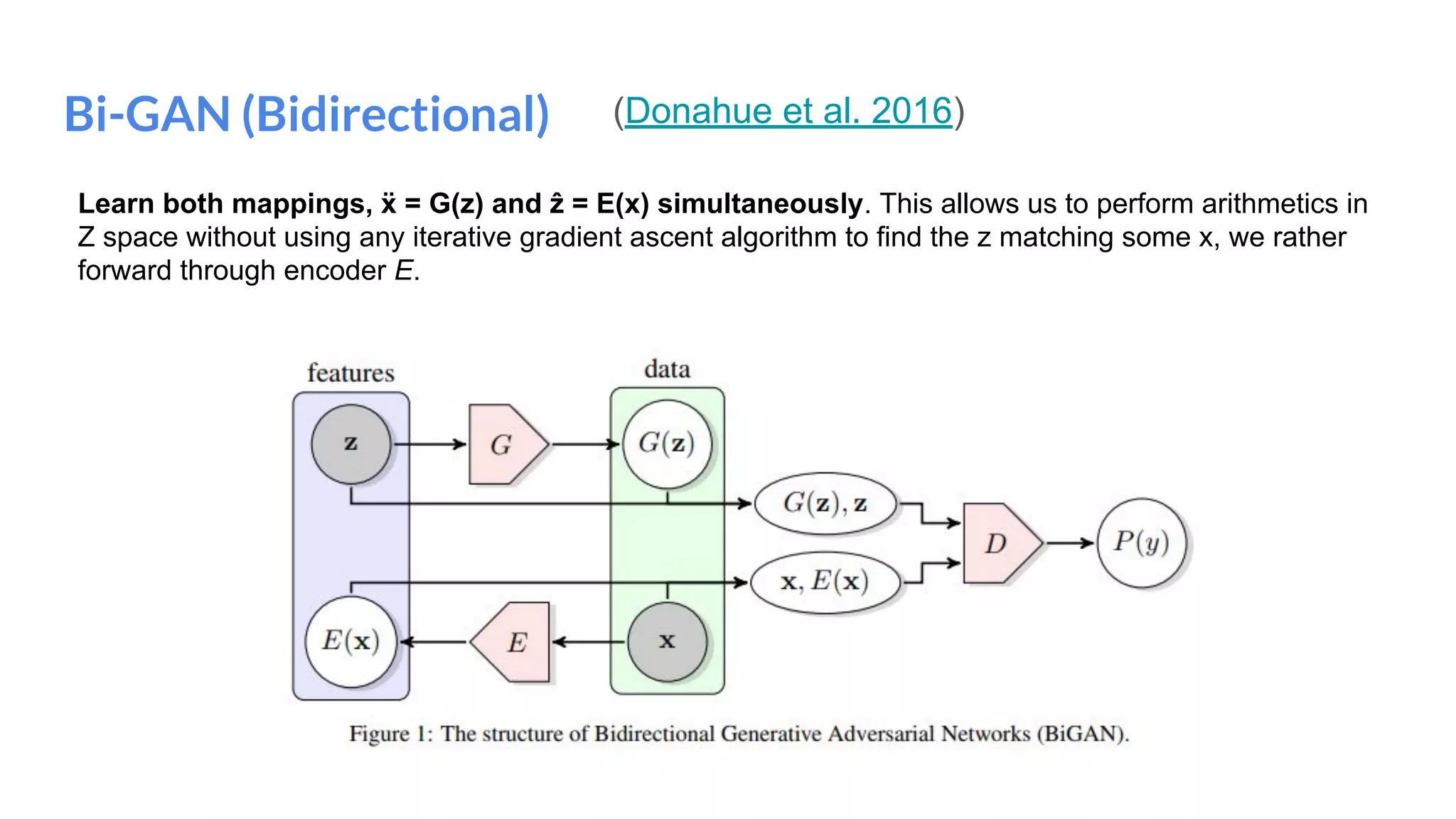

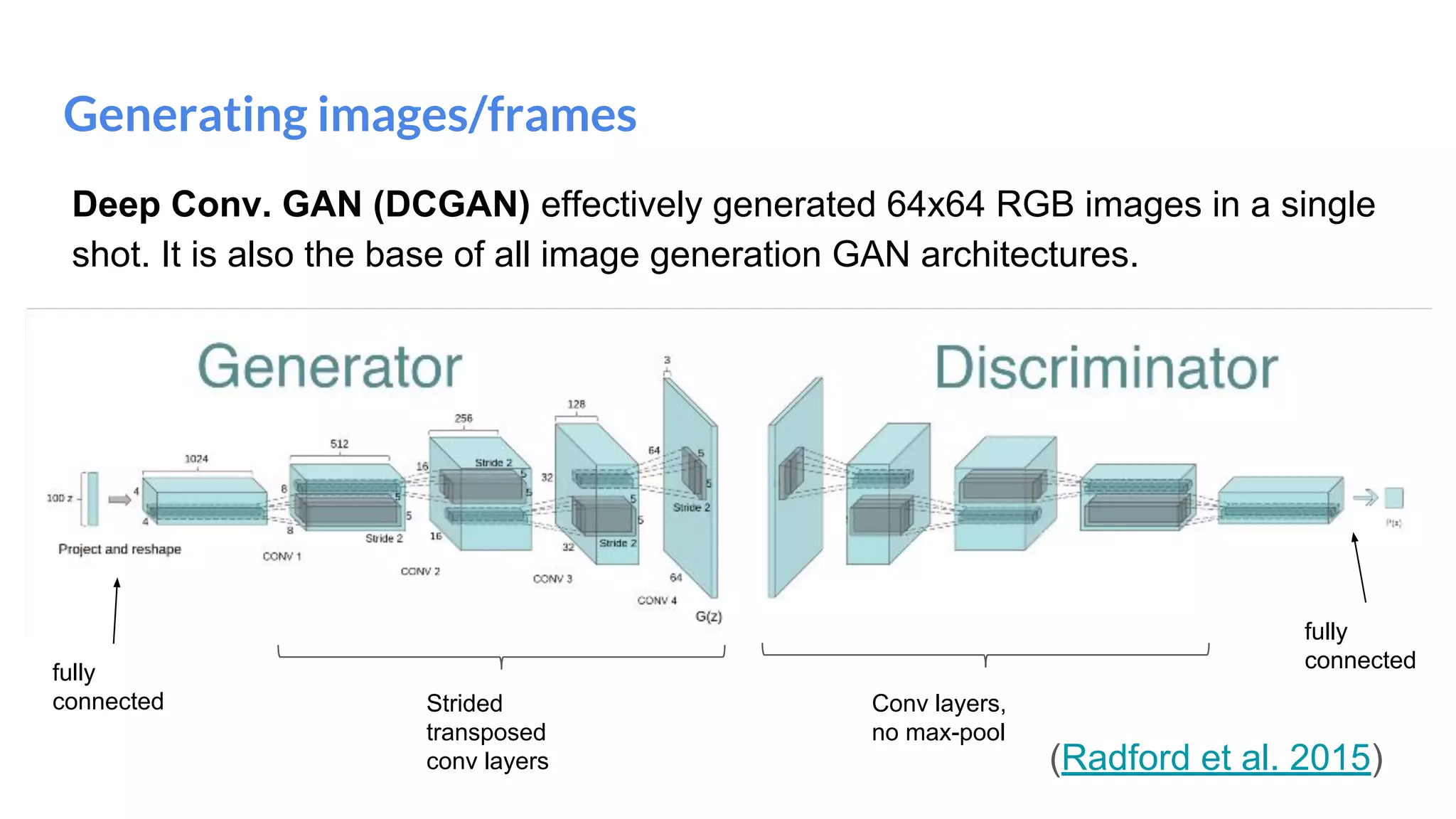

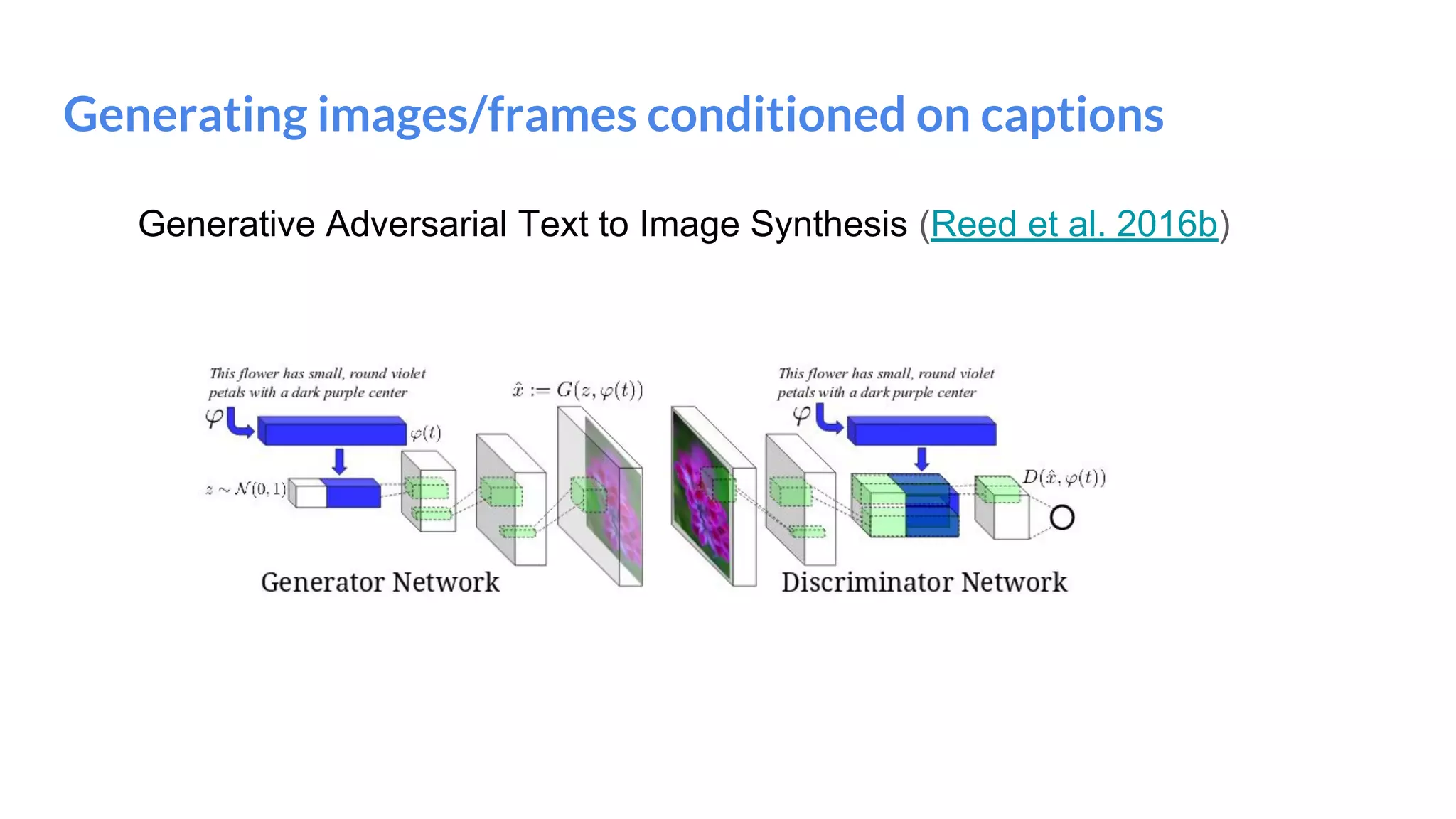

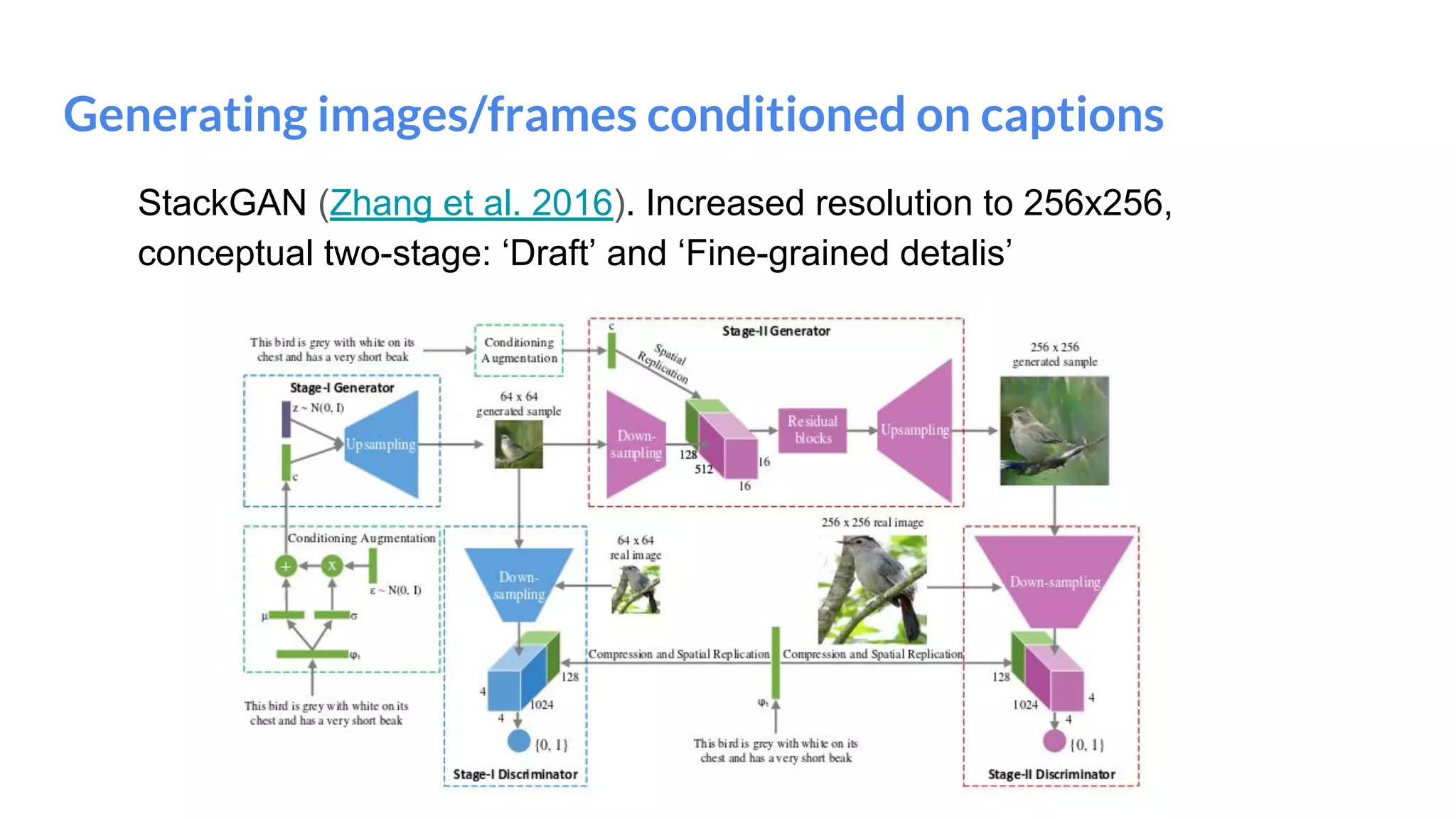

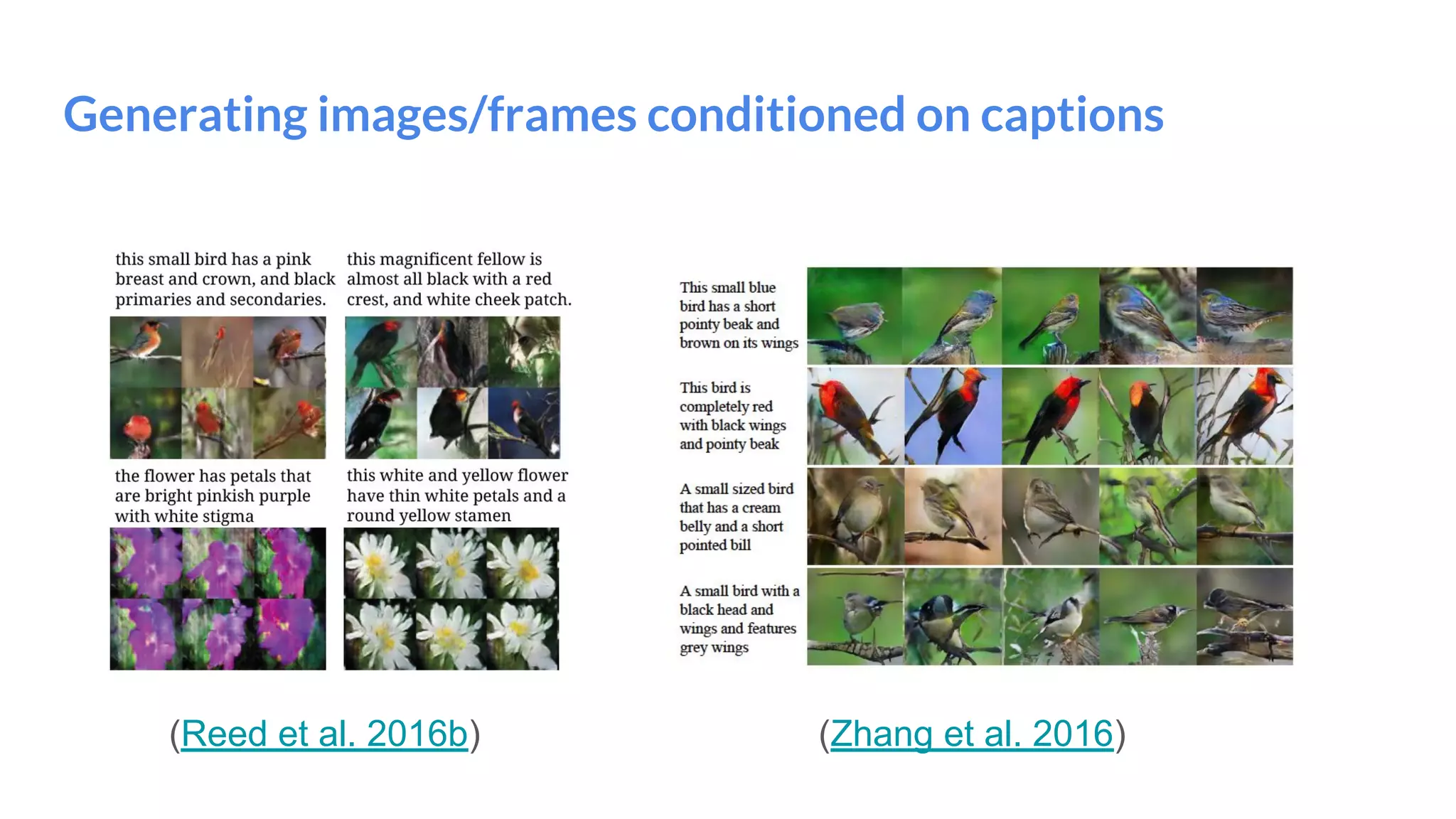

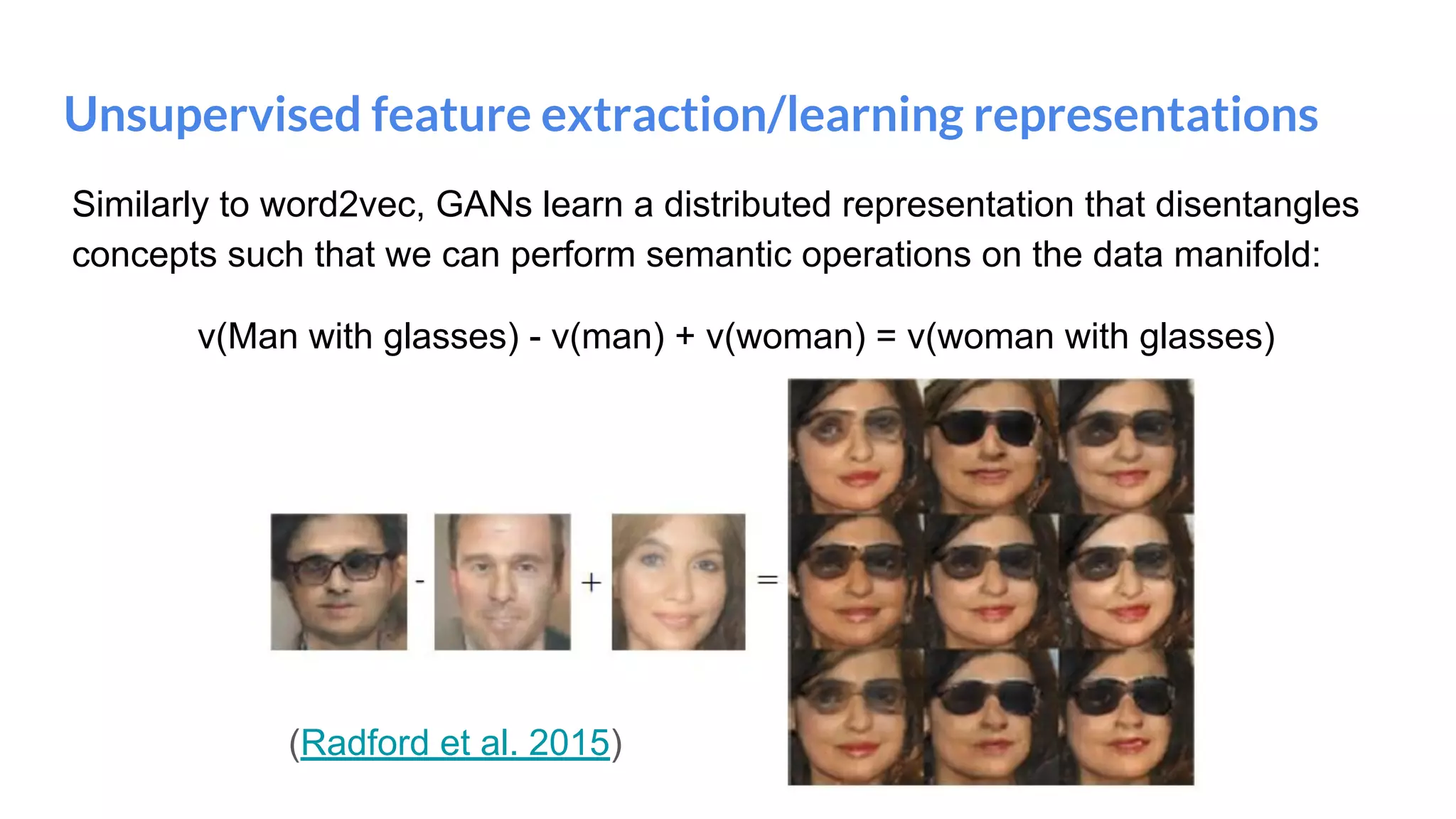

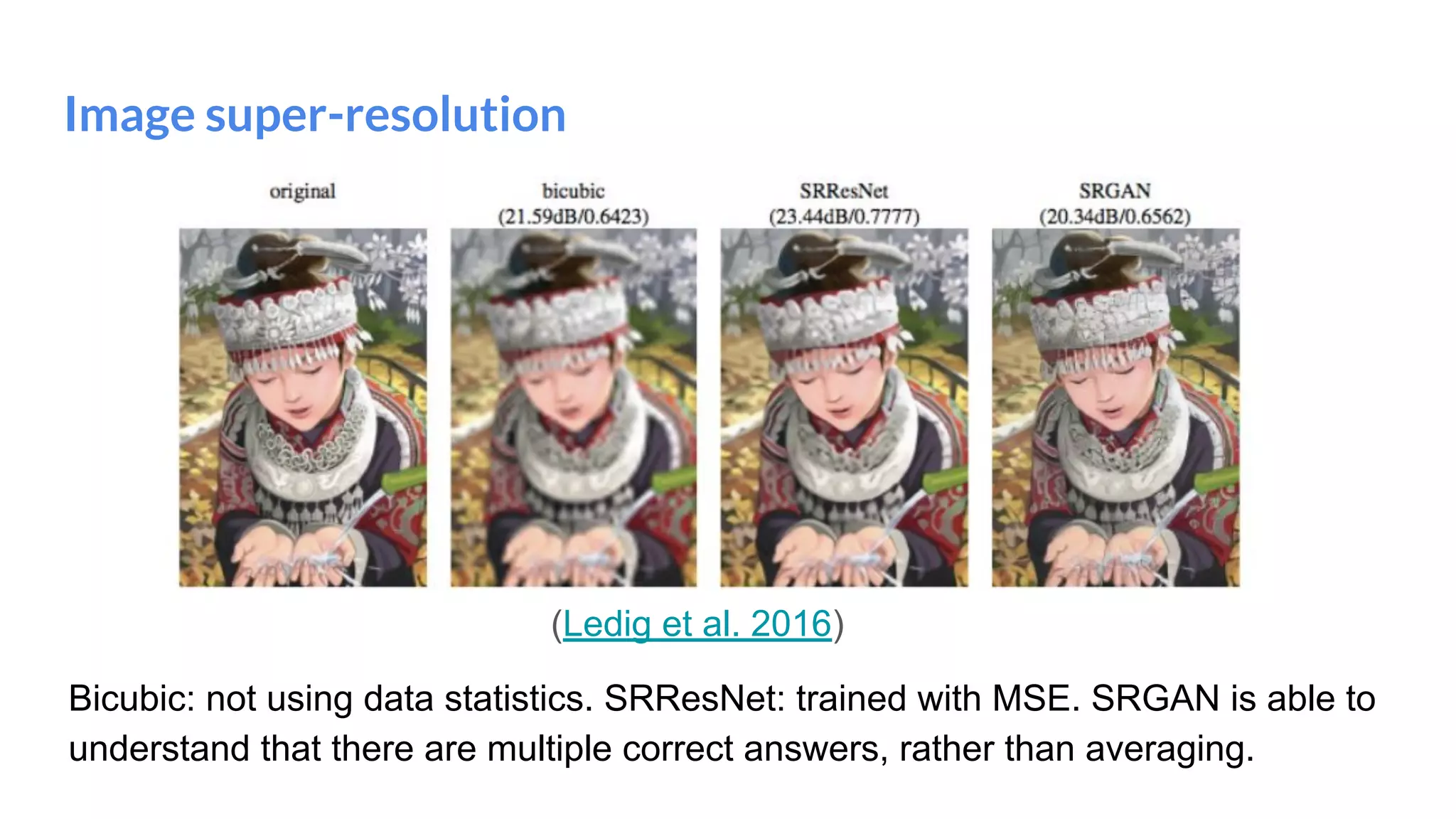

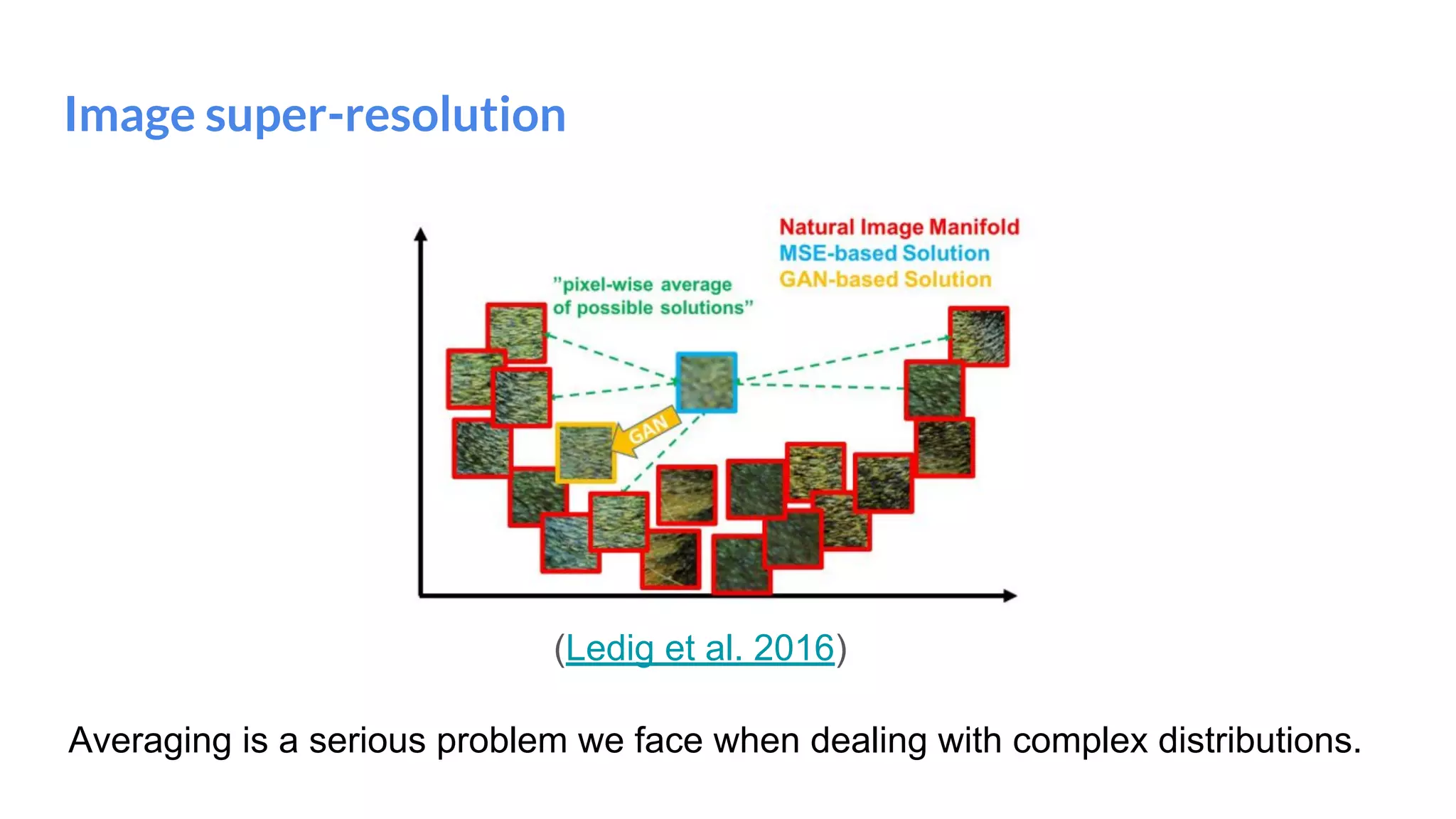

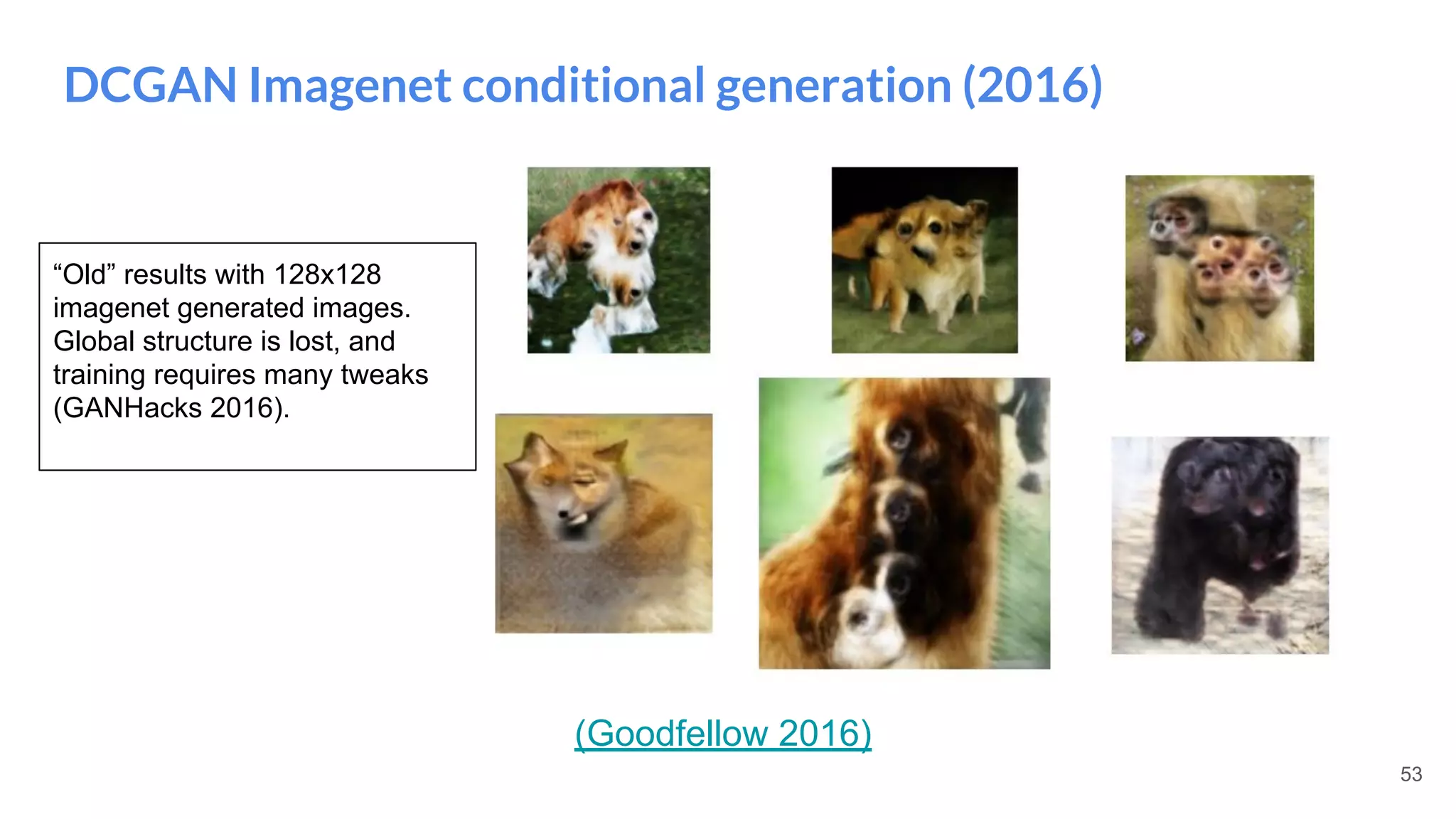

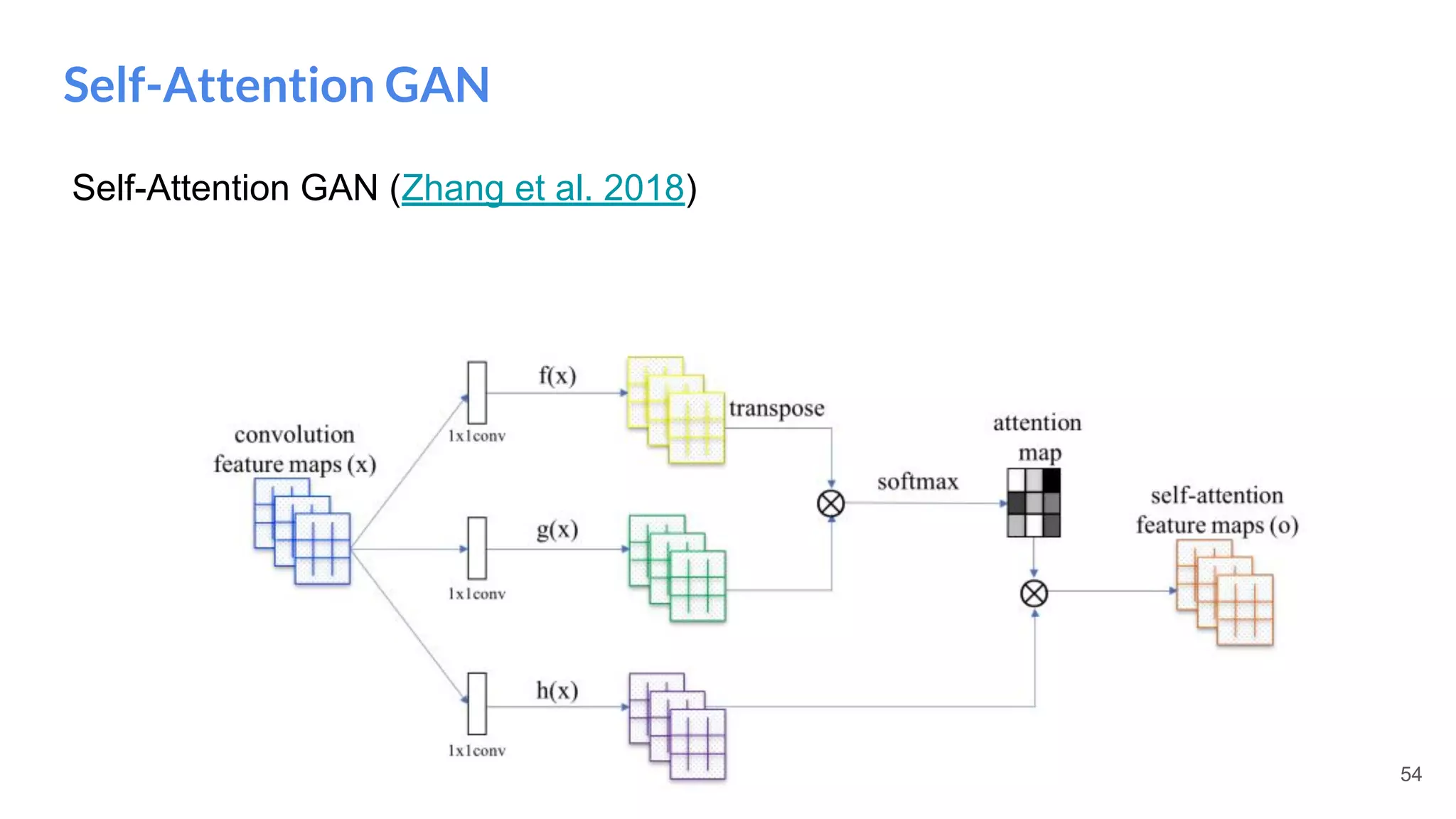

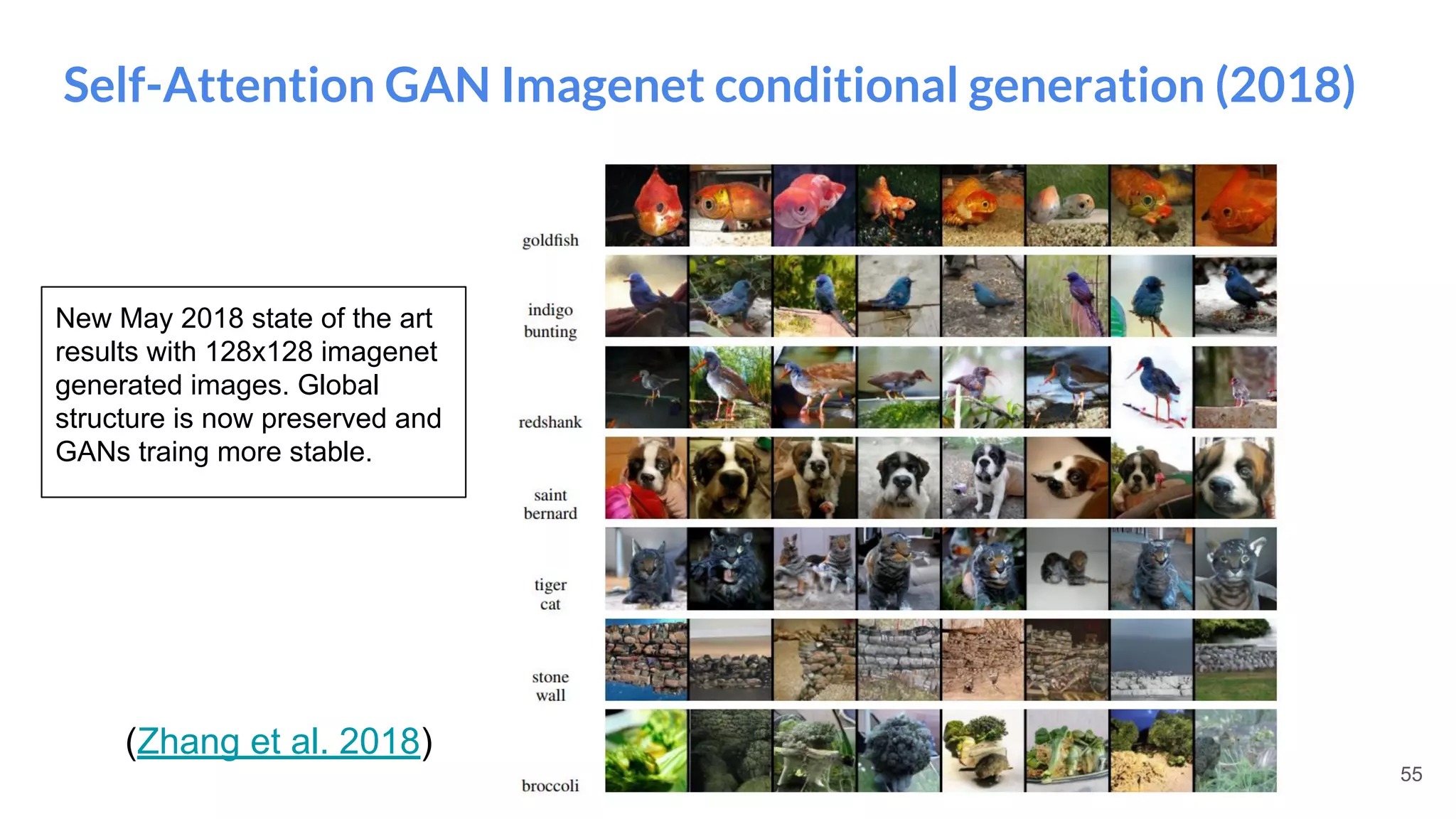

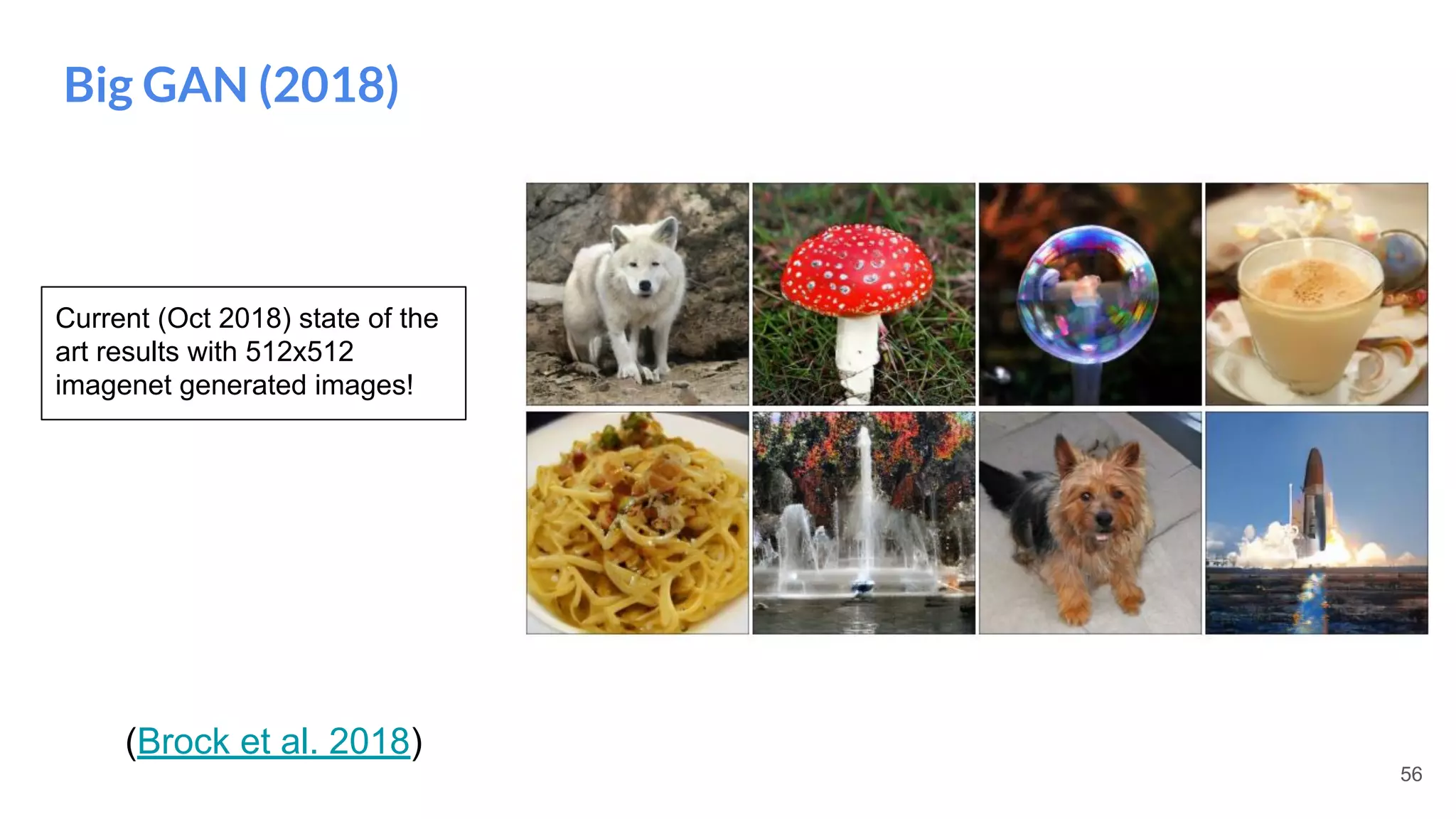

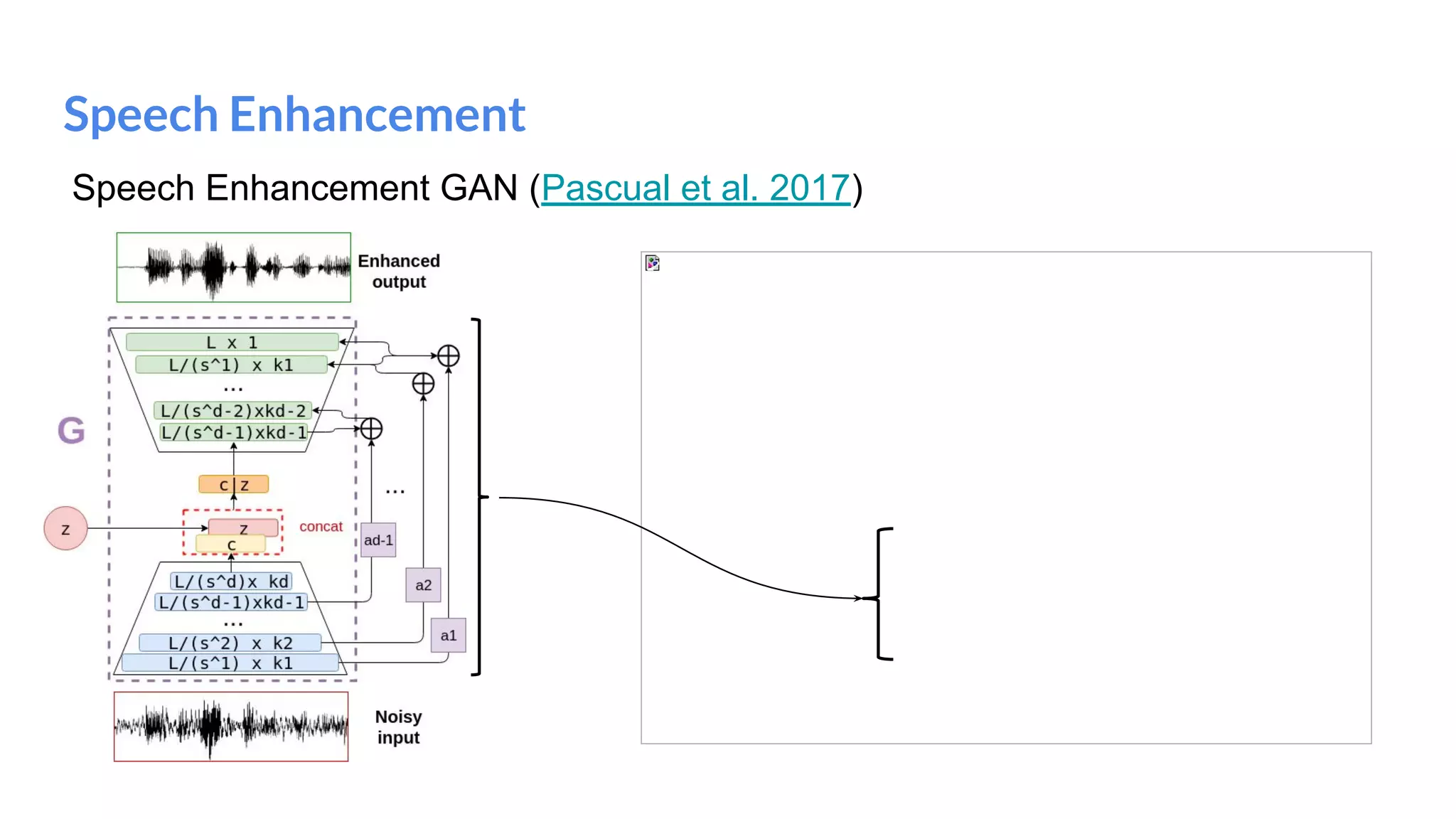

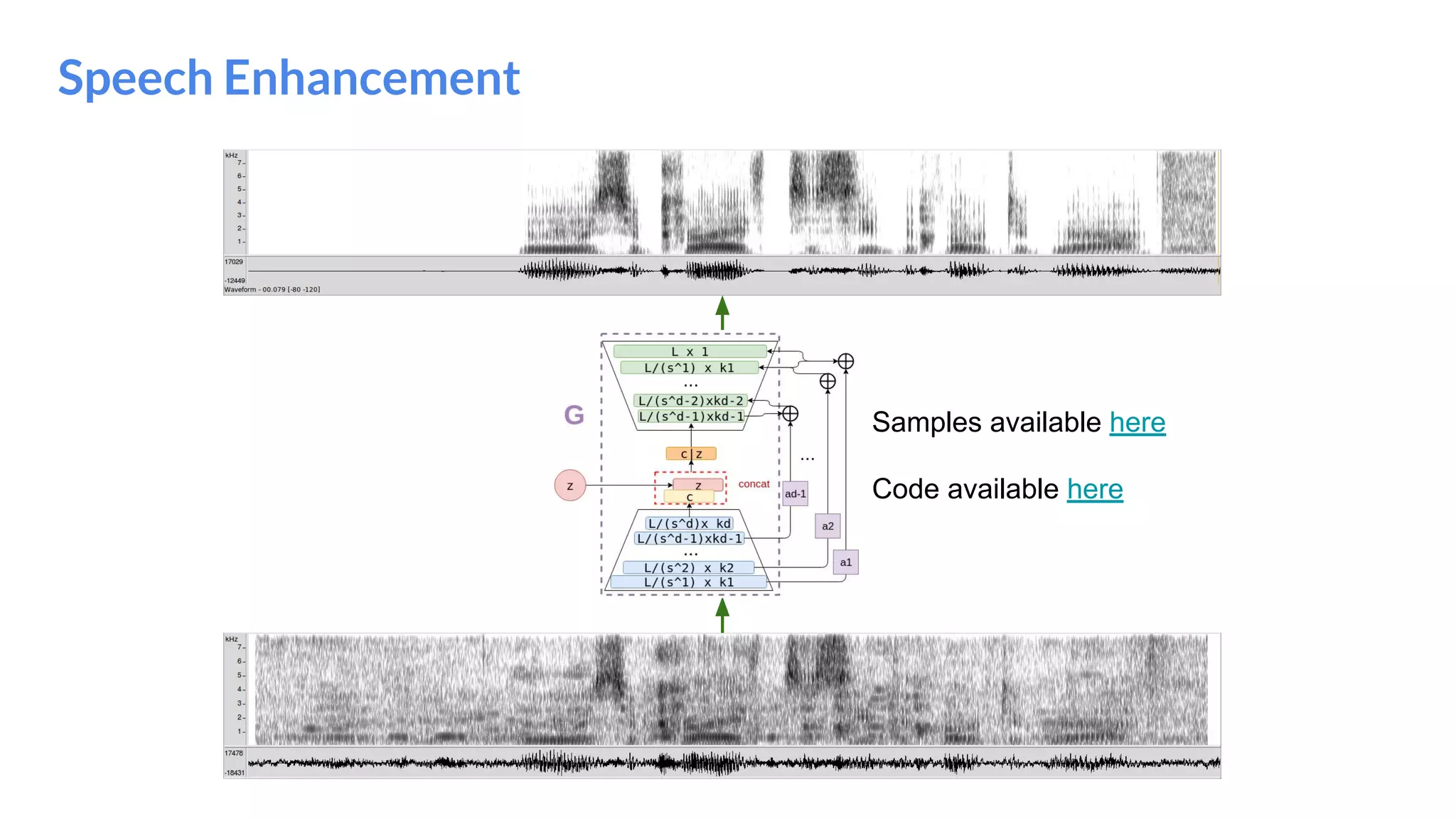

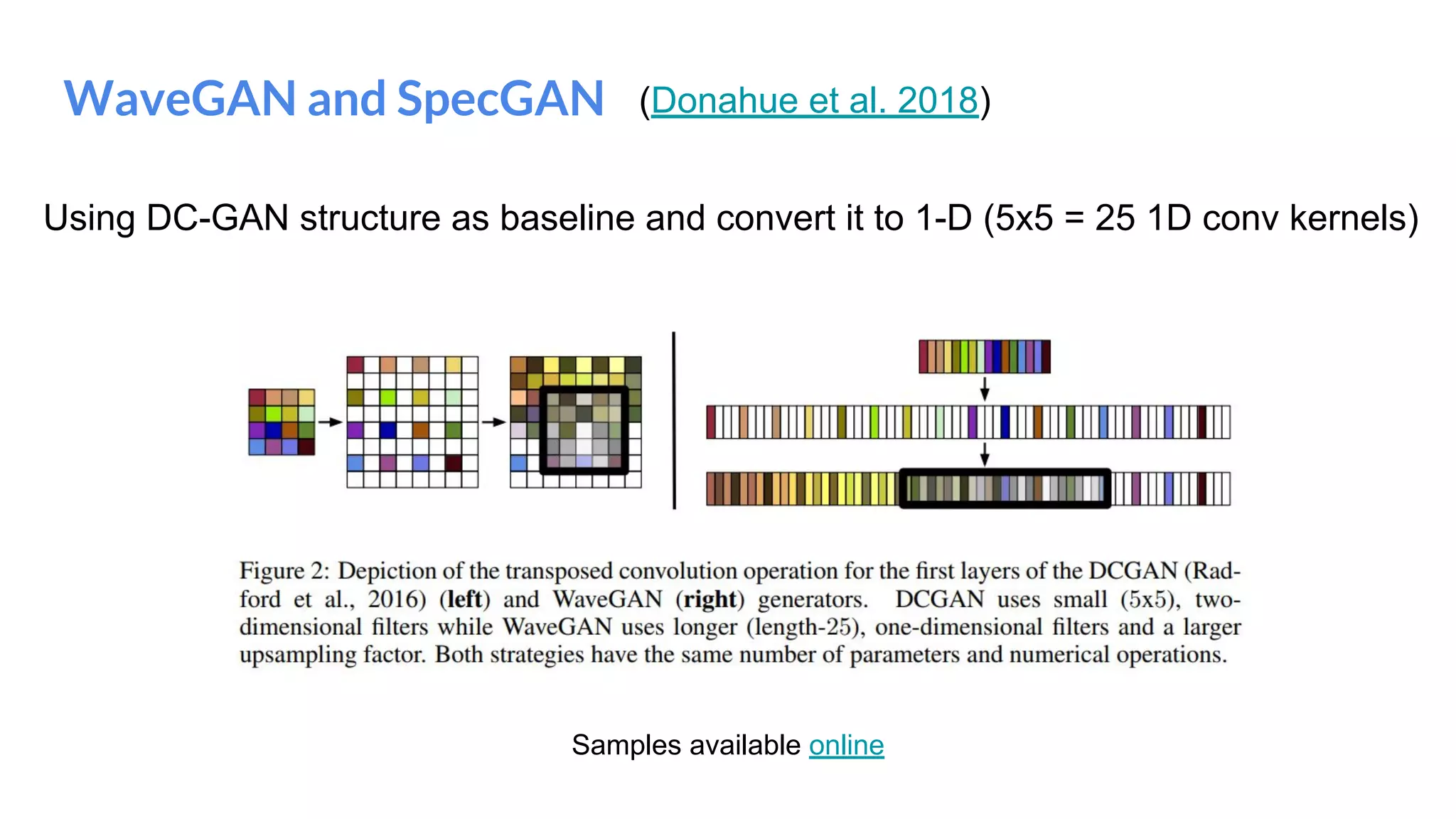

The document presents an overview of deep generative models, focusing on variational auto-encoders (VAEs) and generative adversarial networks (GANs) as key architectures. It provides a taxonomy of generative models, explaining how they learn data distributions and the dynamics of adversarial training between generator and discriminator networks. The document also discusses various implementations, challenges in training GANs, and advancements in generating high-quality images and other data types.
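
As a point of reference for the two architectures named above, their training objectives are usually written as follows. This is a sketch in the standard textbook notation, not a transcription of the slides: the symbols $G$, $D$, $p_{\text{data}}$, $p_z$, $q_\phi$, and $p_\theta$ are assumed here and may differ from the notation the deck uses.

The adversarial game between the generator $G$ and the discriminator $D$ is commonly expressed as a minimax problem:

$$
\min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]
$$

A VAE is instead typically trained by maximizing a lower bound on the log-likelihood of the data (the evidence lower bound):

$$
\log p_\theta(x) \;\ge\; \mathbb{E}_{q_\phi(z \mid x)}\big[\log p_\theta(x \mid z)\big] - D_{\mathrm{KL}}\big(q_\phi(z \mid x) \,\|\, p(z)\big)
$$

In the first expression, $D$ is rewarded for telling real samples from generated ones while $G$ is rewarded for fooling it. In the second, the first term measures reconstruction quality and the second keeps the approximate posterior close to the prior.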