This document discusses generative adversarial networks (GANs) for generating synthetic images that are indistinguishable from real images. It introduces GANs, explaining how they involve two neural networks - a generator that produces synthetic images and a discriminator that evaluates them as real or fake. The networks are trained in an adversarial process, with the generator trying to fool the discriminator and the discriminator trying to distinguish real from fake images. The document outlines the GAN training process and theoretical guarantees, provides experimental results on image datasets, and discusses advantages, disadvantages and potential future applications of GANs.

![5

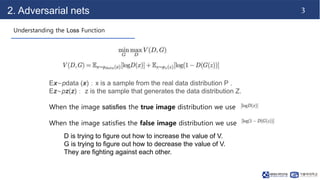

2. Adversarial nets

2) Fixed D, Training G

It is hoping that the value of V is as small as possible so that D cannot distinguish

between true and false data.

Because we have trained D, it is D constant, so it can be just ignored unaffected.

It is expected that the discriminator thinks that the probability that the false image is

the true image is as large as possible. So expect D(G(z)) to be close to 1. So expect

log[1-D(G(z))] to be close to 0.](https://image.slidesharecdn.com/gan-231106095140-c572f8b2/85/GAN-Generative-Adversarial-Networks-pptx-6-320.jpg)