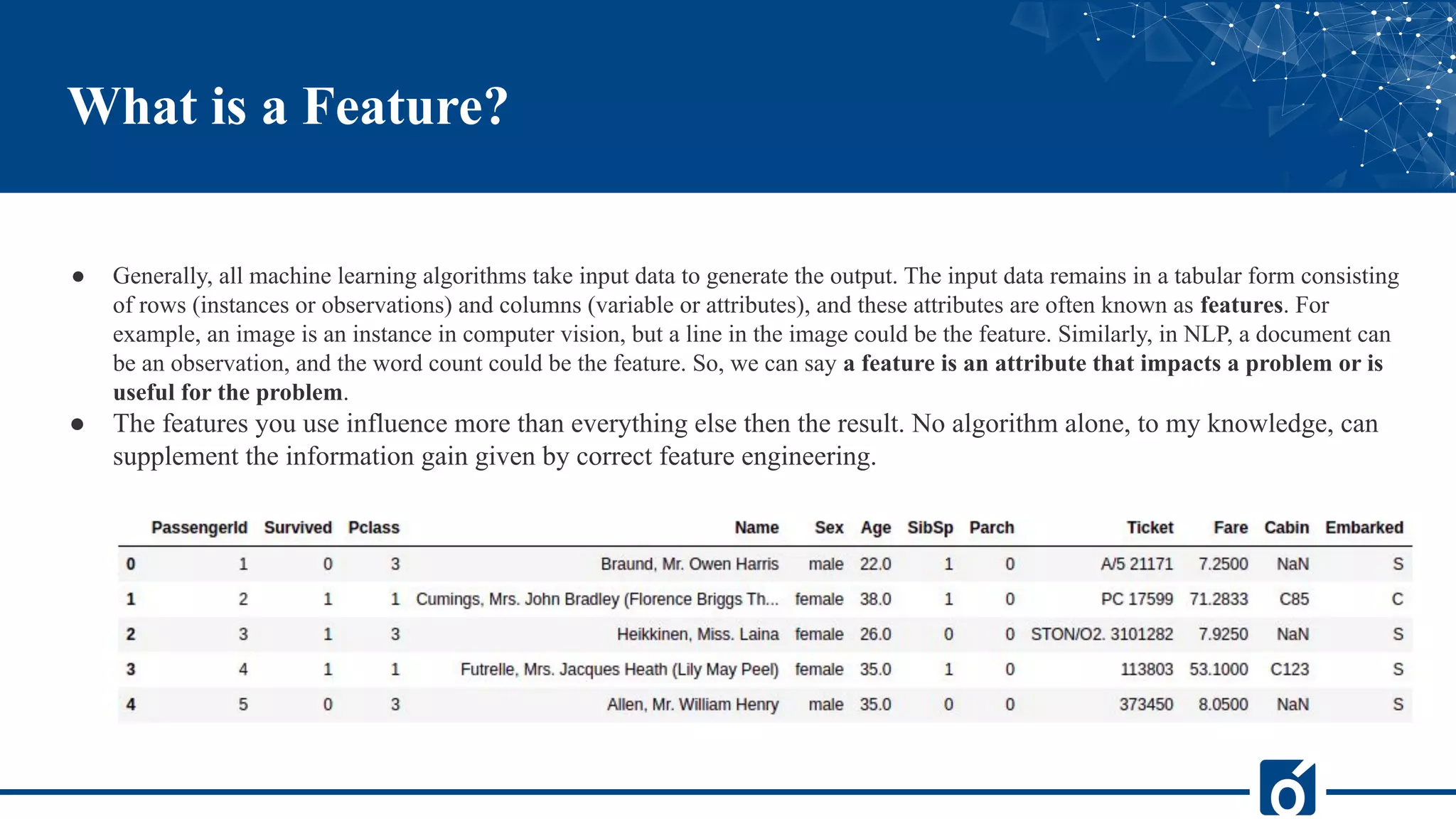

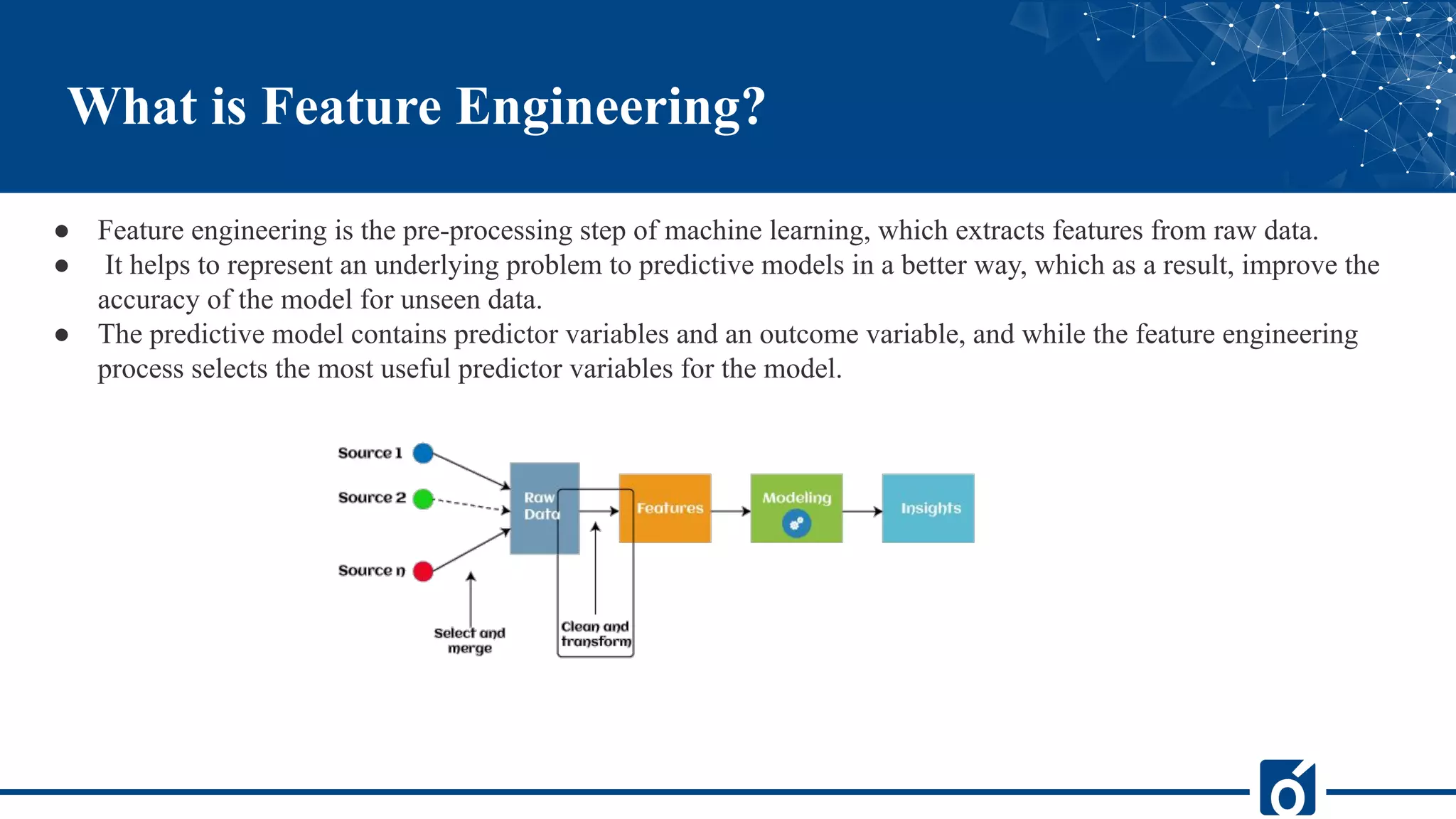

The document covers feature engineering in machine learning: what it is, why it matters, and which techniques it uses. It outlines the main processes involved, such as data preparation and exploratory analysis, and highlights techniques including imputation, outlier handling, and encoding. Effective feature engineering is presented as crucial for improving model performance and accuracy.
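As a rough illustration of three of the techniques named above (imputation, outlier handling, and encoding), here is a minimal sketch using only the Python standard library. The helper names, the toy `ages` and `cities` data, the choice of median imputation, and the 1.5 × IQR clipping rule are all assumptions made for this example; they are one common option for each step, not a method prescribed by the document.

```python
from statistics import median, quantiles


def impute_median(values):
    """Replace None entries with the median of the observed values."""
    observed = [v for v in values if v is not None]
    fill = median(observed)
    return [fill if v is None else v for v in values]


def clip_outliers_iqr(values, k=1.5):
    """Clip values to the range [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [min(max(v, low), high) for v in values]


def one_hot(labels):
    """Encode each label as a 0/1 vector over the sorted set of categories."""
    categories = sorted(set(labels))
    vectors = [[int(label == c) for c in categories] for label in labels]
    return categories, vectors


# Hypothetical toy data: one missing age and one implausibly large age.
ages = [22, 25, None, 31, 28, 120]
cities = ["Paris", "Lima", "Paris", "Oslo", "Lima", "Oslo"]

ages_filled = impute_median(ages)              # missing value filled with the median
ages_clipped = clip_outliers_iqr(ages_filled)  # extreme value pulled back to the upper fence
categories, city_vectors = one_hot(cities)     # one 0/1 column per distinct city

print(ages_filled)
print(ages_clipped)
print(categories, city_vectors[0])
```

In practice these steps are usually done with a data library rather than by hand, and the right choice for each (mean versus median imputation, clipping versus dropping outliers, one-hot versus ordinal encoding) depends on the data and the model.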