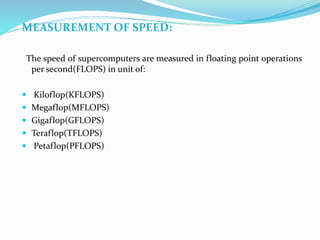

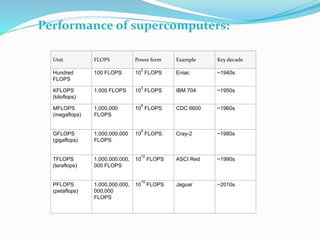

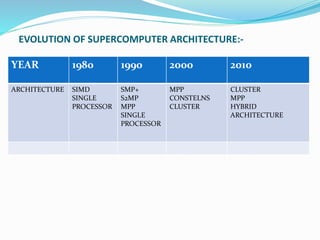

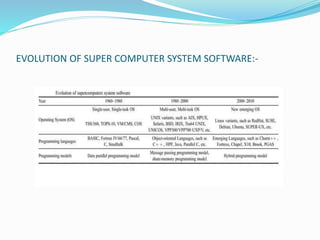

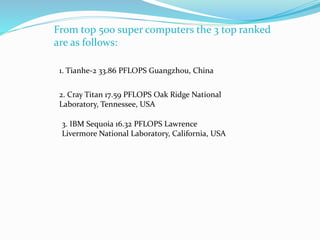

Supercomputers perform calculations far faster than ordinary computers thanks to their high processing speeds and large memory. They are used for computation-heavy tasks such as weather forecasting and scientific research. Over time, they have evolved from single-processor machines into massively parallel systems with thousands of processors. Their performance is now measured in petaflops, that is, quadrillions (10^15) of floating-point operations per second. The top supercomputers are currently based in China and the United States; they run Linux operating systems and are programmed in languages such as Fortran and C++.
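
To make the petaflops unit concrete, the short sketch below estimates how long a fixed workload would take at two sustained rates. The workload size (10^18 operations), the 100-gigaflop "desktop" rate, and the helper name `seconds_to_complete` are illustrative assumptions, not figures from the text. Real machines rarely sustain their peak rate, so treat the result as a rough order-of-magnitude comparison.

```python
PETA = 10**15  # one petaflop = 10^15 floating-point operations per second
GIGA = 10**9   # one gigaflop = 10^9 floating-point operations per second


def seconds_to_complete(total_operations: float, flops: float) -> float:
    """Return the seconds needed to run total_operations at a sustained rate of flops."""
    if flops <= 0:
        raise ValueError("flops must be positive")
    return total_operations / flops


# Hypothetical workload: 10^18 floating-point operations (assumed for illustration).
workload = 10**18

# Assumed sustained rates, chosen only to show the scale difference.
desktop_rate = 100 * GIGA  # an ordinary computer at 100 gigaflops
super_rate = 1 * PETA      # a supercomputer at 1 petaflop

desktop_seconds = seconds_to_complete(workload, desktop_rate)
super_seconds = seconds_to_complete(workload, super_rate)
speedup = desktop_seconds / super_seconds

print(f"Desktop:       {desktop_seconds / 86400:.1f} days")
print(f"Supercomputer: {super_seconds / 60:.1f} minutes")
print(f"Speedup:       {speedup:.0f}x")
```

Under these assumptions, the same job drops from months on the ordinary machine to minutes on the one-petaflop system, which is the gap that makes workloads such as weather forecasting practical.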