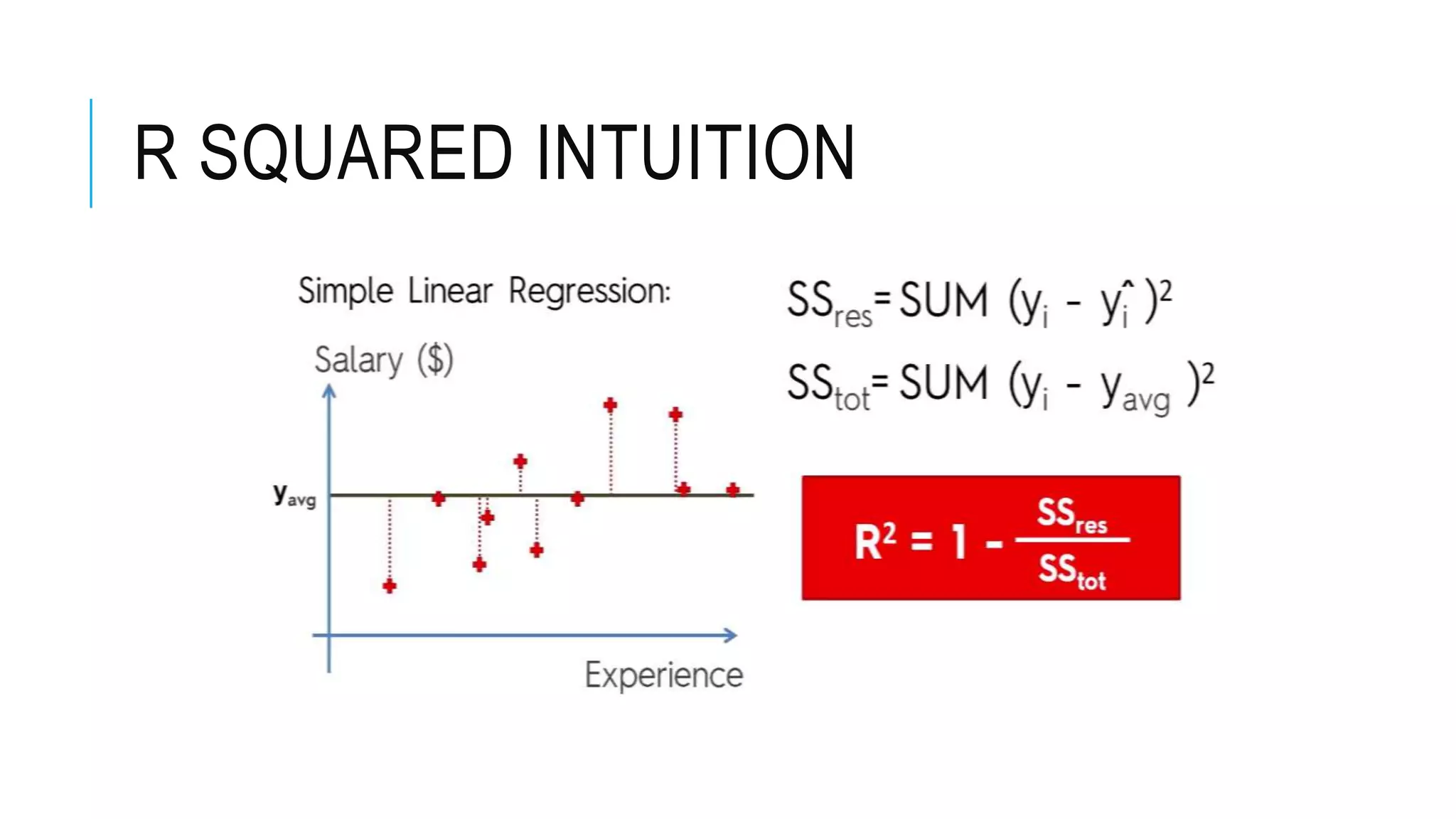

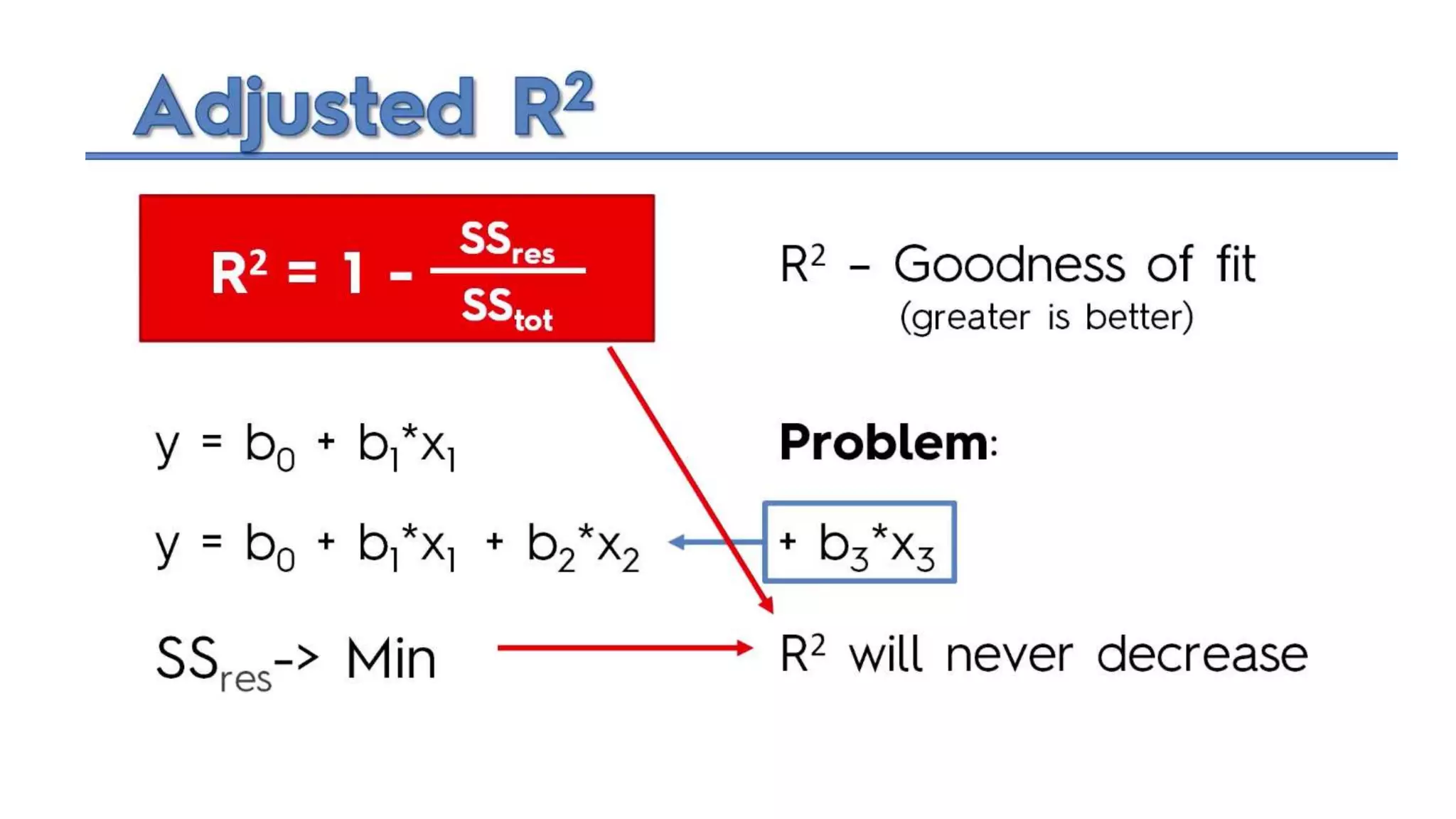

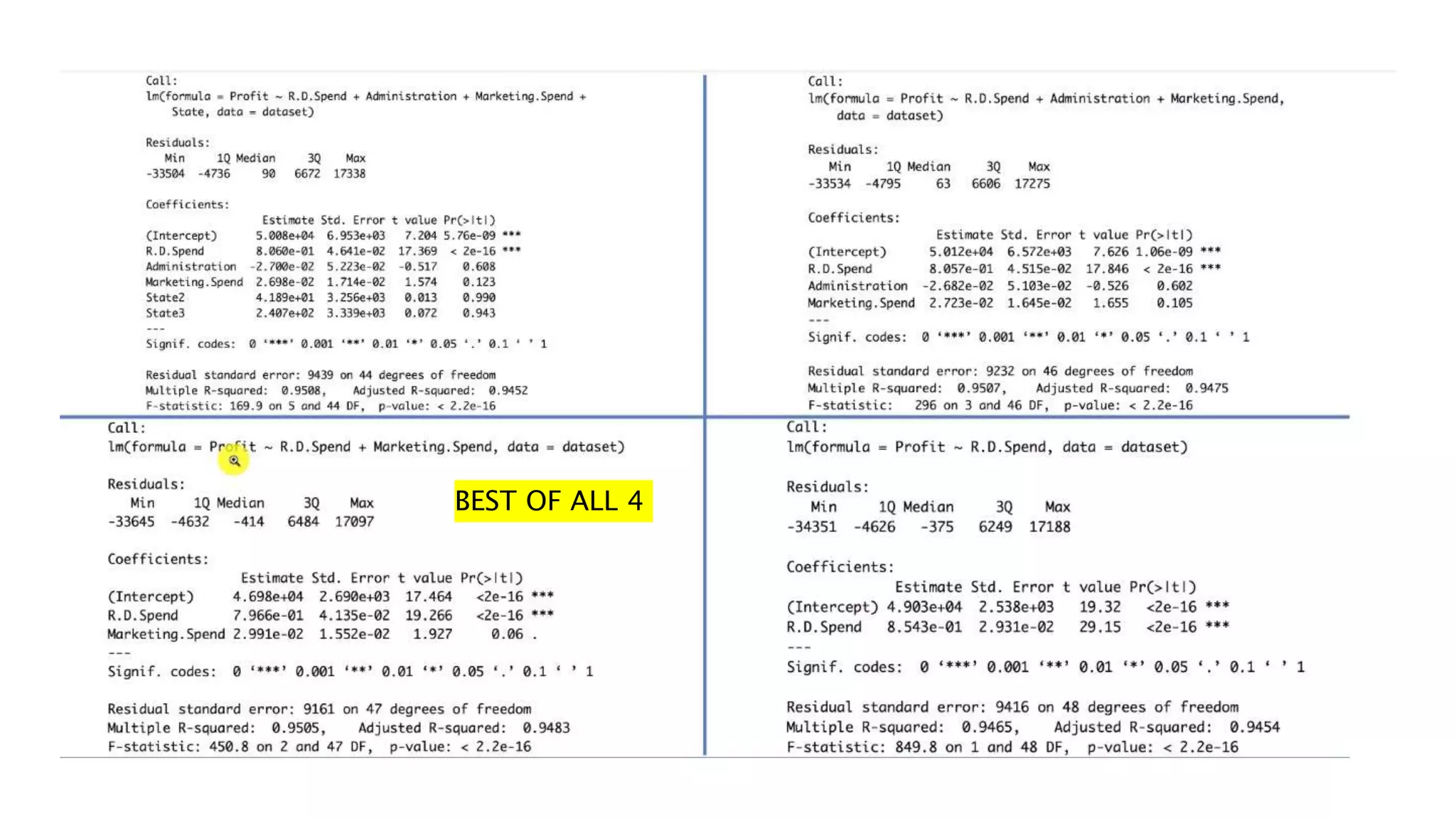

R-squared measures how well a linear regression model fits the data: it is the proportion of the variance in the response that the model explains. For a least-squares fit it never decreases when a predictor is added, even if that predictor has no real relationship with the response. Adjusted R-squared corrects for this by penalizing the number of predictors relative to the sample size. It increases only when a new variable improves the fit by more than would be expected by chance, and it can decrease when the variable adds little. The key difference is that adjusted R-squared allows a fairer comparison between models with different numbers of predictors and discourages overfitting, although it does not prevent it, whereas R-squared is biased toward larger models.
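
The contrast can be illustrated with a small sketch. It uses the standard formula, adjusted R-squared = 1 - (1 - R-squared)(n - 1)/(n - p - 1), where n is the number of observations and p the number of predictors (excluding the intercept). The data are synthetic, and the helper names `r_squared` and `adjusted_r_squared` are hypothetical, chosen only for this example.

```python
import numpy as np

def r_squared(X, y):
    """R-squared of an ordinary least-squares fit of y on X (intercept added here)."""
    A = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    resid = y - A @ coef
    ss_res = float(resid @ resid)                  # residual sum of squares
    ss_tot = float(((y - y.mean()) ** 2).sum())    # total sum of squares
    return 1.0 - ss_res / ss_tot

def adjusted_r_squared(r2, n, p):
    """Adjusted R-squared for n observations and p predictors (intercept excluded)."""
    return 1.0 - (1.0 - r2) * (n - 1) / (n - p - 1)

# Synthetic data (an assumption for illustration): y depends on x1 only.
rng = np.random.default_rng(0)
n = 50
x1 = rng.normal(size=n)
y = 2.0 * x1 + rng.normal(size=n)
noise = rng.normal(size=(n, 5))   # five predictors unrelated to y

X_small = x1.reshape(-1, 1)               # one real predictor
X_big = np.column_stack([x1, noise])      # real predictor plus five noise columns

r2_small = r_squared(X_small, y)
r2_big = r_squared(X_big, y)
adj_small = adjusted_r_squared(r2_small, n, 1)
adj_big = adjusted_r_squared(r2_big, n, 6)

print(f"1 predictor : R2={r2_small:.4f}  adjusted R2={adj_small:.4f}")
print(f"6 predictors: R2={r2_big:.4f}  adjusted R2={adj_big:.4f}")
```

R-squared for the larger model cannot be lower than for the smaller one, because the smaller model is nested inside it. Whether adjusted R-squared rises or falls depends on how much the noise columns happen to reduce the residual error in the particular sample.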