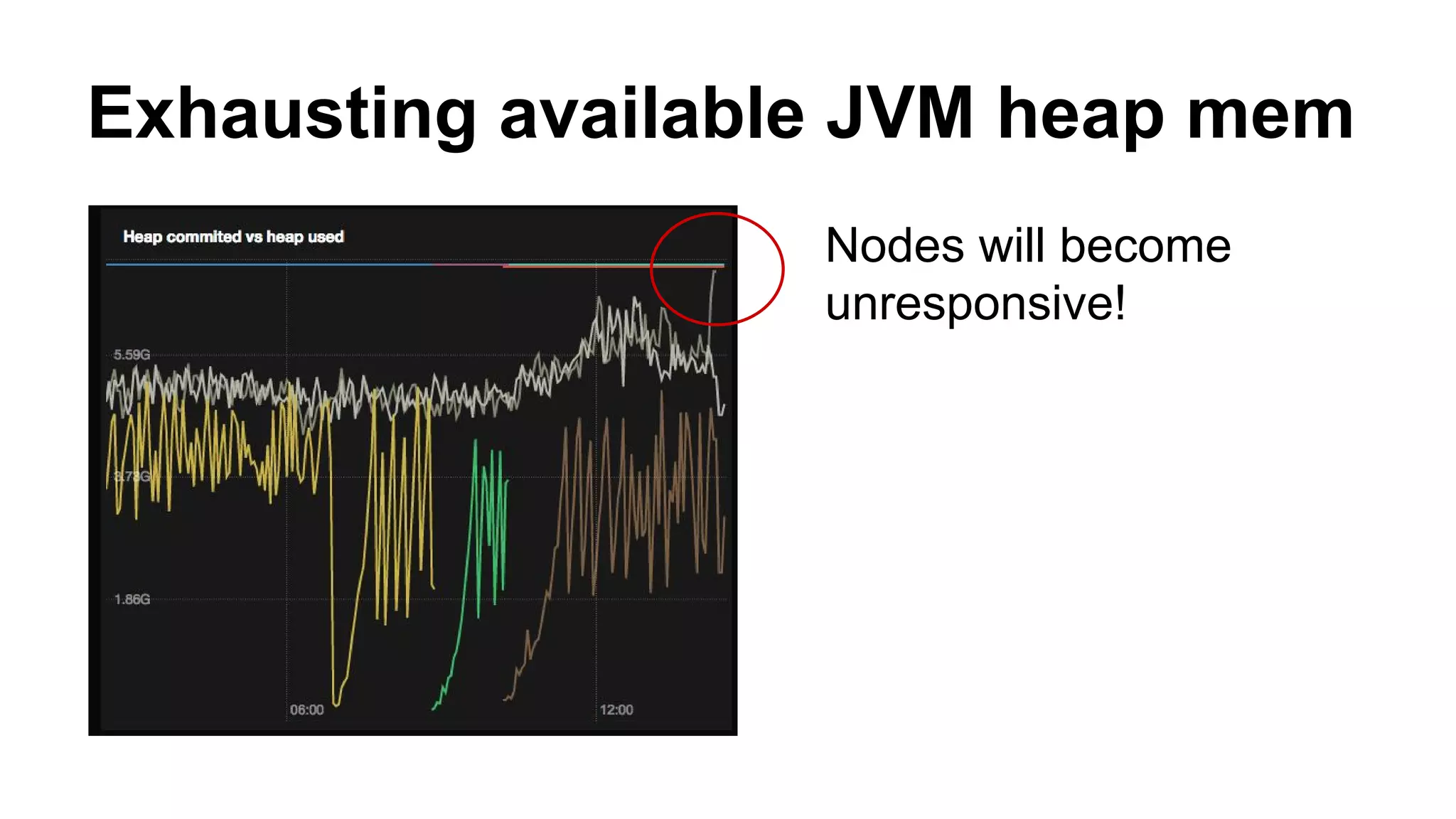

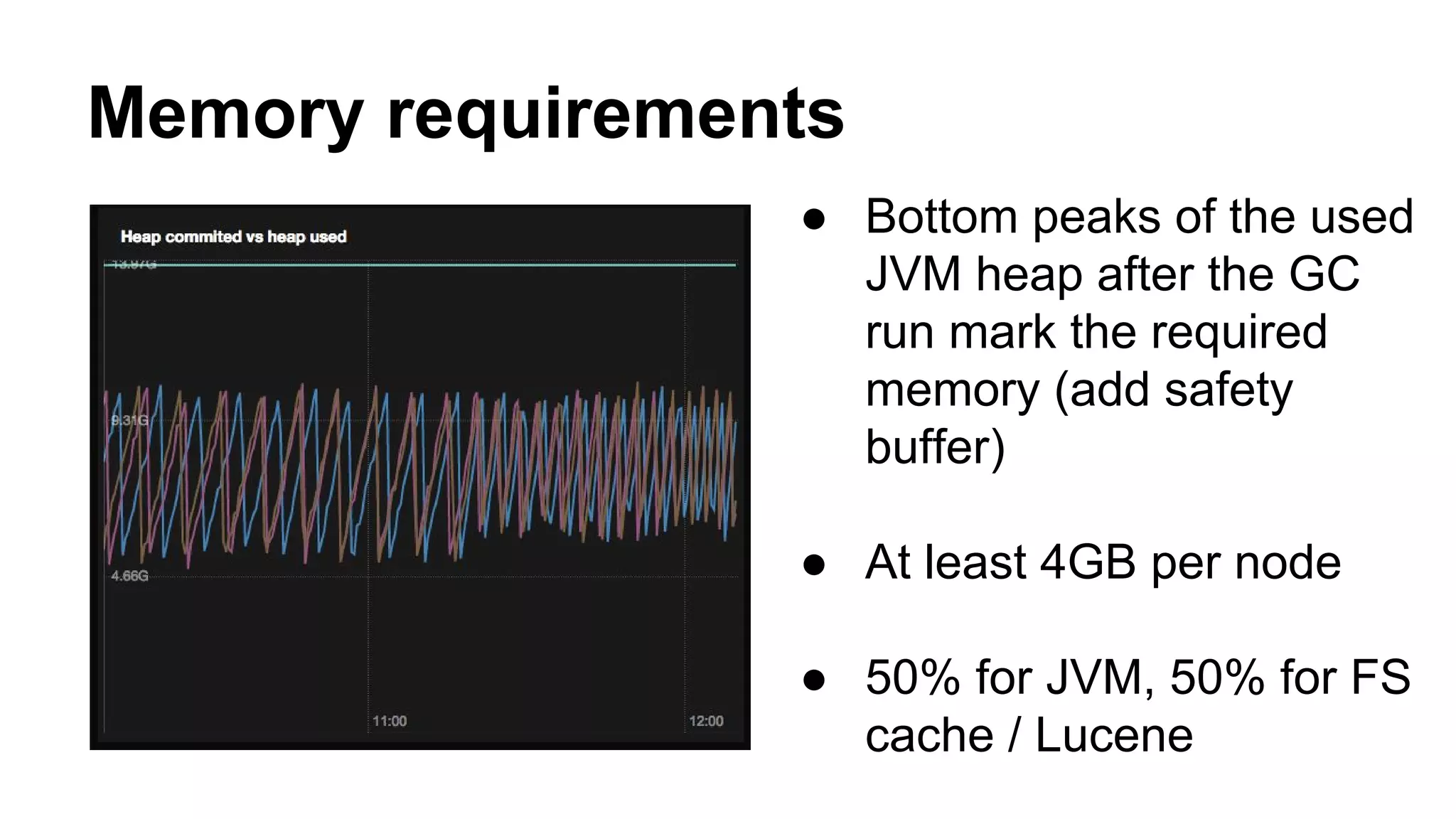

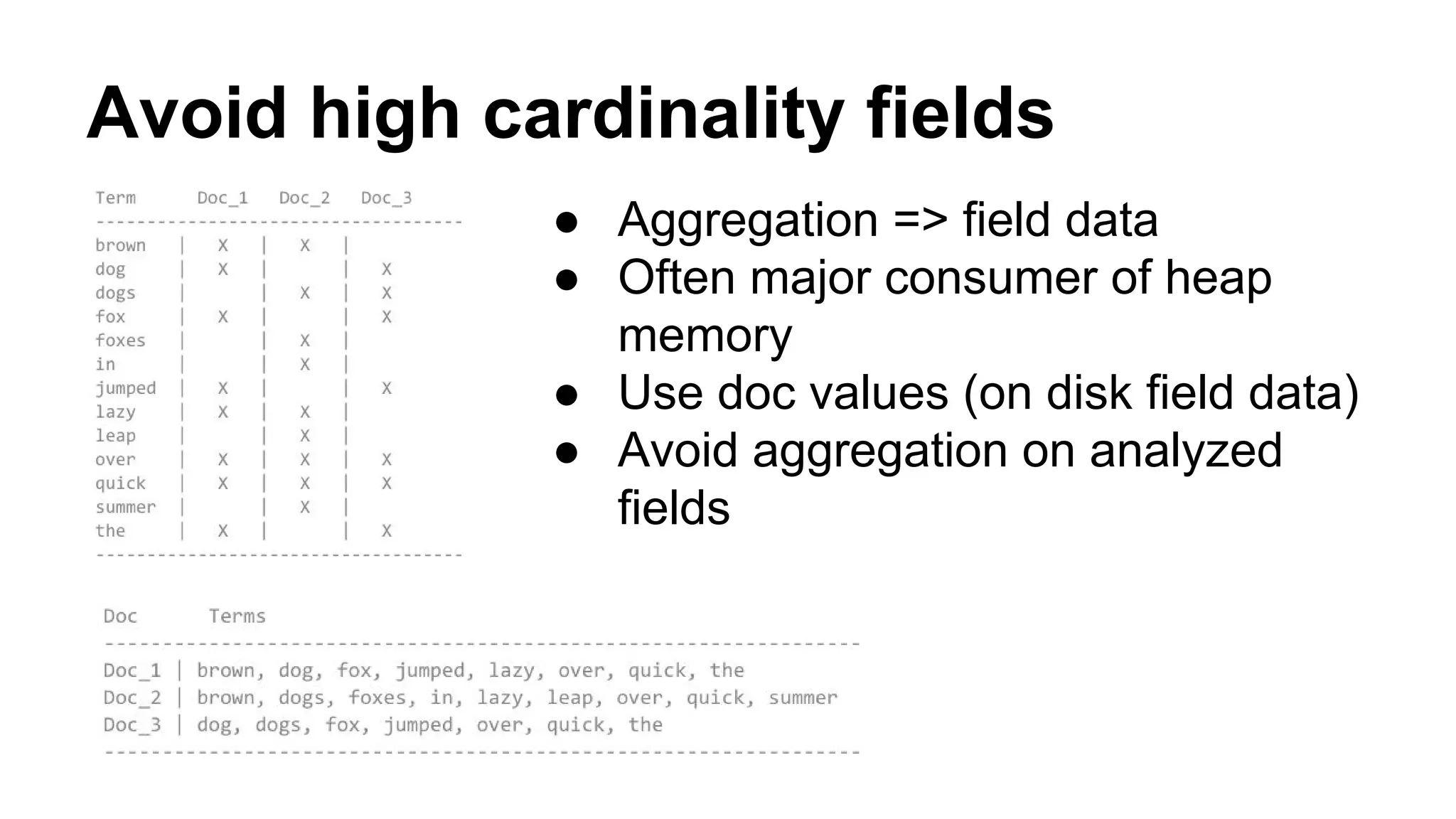

Elasticsearch 101 is an overview of setting up, configuring, and tuning an Elasticsearch cluster. It covers hardware requirements (memory in particular), why to avoid high-cardinality fields, how to index and query data, and the available tooling. It also covers potential issues such as data loss during network partitions and exhausting the available Java heap memory.
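
As a rough illustration of the memory point, the sketch below applies a commonly cited sizing guideline: give the JVM heap about half of the machine's RAM, and keep it below roughly 32 GB so the JVM can keep using compressed object pointers. The function names and the 31 GB cap are assumptions made for this example, not values taken from the slides; the right numbers depend on the JVM and the workload.

```python
def suggested_heap_gb(total_ram_gb, cap_gb=31.0):
    """Suggest a heap size in GB: half of RAM, capped at cap_gb.

    The 50% split and the 31 GB cap are assumed rules of thumb,
    not figures from the presentation.
    """
    if total_ram_gb <= 0:
        raise ValueError("total_ram_gb must be positive")
    return min(total_ram_gb / 2, cap_gb)


def jvm_heap_flags(total_ram_gb):
    """Build matching -Xms/-Xmx JVM flags (in MB) for the suggested heap.

    Setting minimum and maximum heap to the same value avoids heap
    resizing at runtime.
    """
    heap_mb = int(suggested_heap_gb(total_ram_gb) * 1024)
    return [f"-Xms{heap_mb}m", f"-Xmx{heap_mb}m"]


if __name__ == "__main__":
    for ram_gb in (16, 64, 128):
        print(ram_gb, "GB RAM ->", " ".join(jvm_heap_flags(ram_gb)))
```

The remaining RAM is left to the operating system's file cache, which Lucene relies on heavily for fast segment access.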